Yes, you!

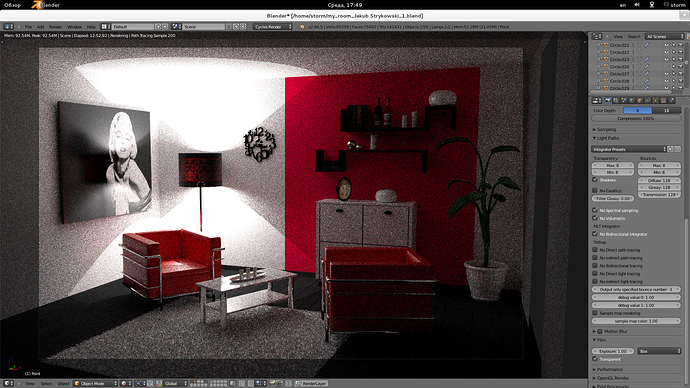

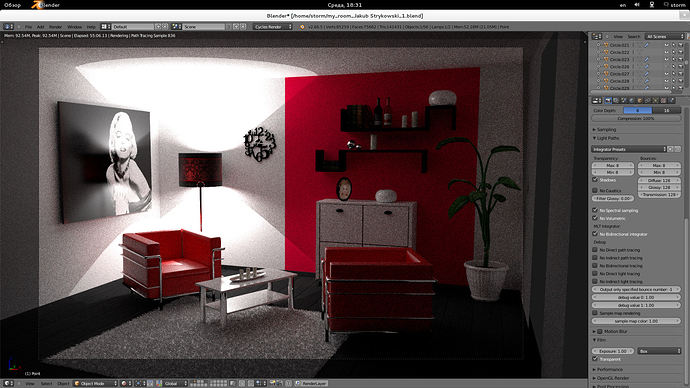

I think that even in its current half-arsed state, it can compete with trunk on selected scenes, because it samples places that are hard to hit from the camera better than the current path tracer does. On average, typical scenes, it is 4x slower than trunk Cycles on my CPU.

So I claim that bidirectional sampling is definitely useful. Add to that the fact that some very special scenes clean up noise faster, and that, since it has a light tracer like any other bidirectional tracer by definition, it can make caustics from point/spot lights.

The problem is that it is mostly a one-man project, the code is bad and full of bugs, and, as someone already noticed, the bidirectional algorithm has a somewhat steep learning curve, so I do not expect many people to start hacking on it immediately.

But I know myself as a guy who loses interest quickly as soon as the main hard problem is solved (and it is solved, as for the last 2 weeks I have had only positive local tests), and that means we need more people who are familiar with the algorithm to make it a robust, supported part of Blender.

What is a bidirectional tracer, and why is it better?

It is almost the same as Cycles, in fact it is Cycles, with the small addition that it traces paths not only from the camera (eye) but also from light sources such as a point light or a mesh with an emission material. It works better when the scene has complex geometry, as some areas are explored faster, sometimes orders of magnitude faster than with a classic path tracer.
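To make the "trace from both ends" idea a bit more concrete, here is a minimal Python sketch (not the branch's kernel code) of the geometry term that shows up when a vertex on an eye path is joined to a vertex on a light path. The function name and the plain-tuple vectors are assumptions for illustration, and the shadow ray (visibility) and the BSDF evaluation are left out.

```python
import math

def geometry_term(p_eye, n_eye, p_light, n_light):
    """Geometry term between an eye-path vertex and a light-path vertex.

    Points and normals are 3-tuples; normals are assumed to be unit length.
    Visibility is NOT tested here, a real tracer would also cast a shadow ray.
    """
    d = [b - a for a, b in zip(p_eye, p_light)]
    dist2 = sum(c * c for c in d)
    if dist2 == 0.0:
        return 0.0  # coincident vertices, no meaningful connection
    dist = math.sqrt(dist2)
    w = [c / dist for c in d]  # unit direction from eye vertex to light vertex
    cos_eye = max(0.0, sum(a * b for a, b in zip(n_eye, w)))
    cos_light = max(0.0, -sum(a * b for a, b in zip(n_light, w)))
    return cos_eye * cos_light / dist2
```

For two surfaces facing each other 2 units apart, `geometry_term((0, 0, 0), (0, 0, 1), (0, 0, 2), (0, 0, -1))` gives 0.25, and it drops to 0 as soon as one of them faces away.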

What is the current state in general?

It can render a few scenes in the preview window. No GPU, only CPU. One step to the right or left and it segfaults/crashes, or wipes your hard disk and does other very bad things. (Ouch!)

What does it need before a first GraphicAll build for wider testing?

The main blocker is the tiled render feature, which I cannot work around; I need help with it. In final render, Cycles splits the image into tiles and allocates them on demand to save GPU memory. But some pixel contributions must be written to other tiles, which may not exist, and we get a segfault / program crash.
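The tile problem can be pictured with a small Python sketch. This is not how Cycles or this branch stores pixels; the `Tile` class, the `splat` function, and the full-frame side buffer are all hypothetical names, used only to show why a light-path contribution can land outside the tile being rendered. Note that a full-frame buffer costs exactly the memory that tiling is meant to save, so treat it as an illustration, not a proposed fix.

```python
class Tile:
    """A rectangular piece of the image that owns its own pixel storage."""

    def __init__(self, x, y, w, h):
        self.x, self.y, self.w, self.h = x, y, w, h
        self.pixels = [[0.0] * w for _ in range(h)]

    def contains(self, px, py):
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


def splat(tile, full_frame, px, py, value):
    """Add a contribution at image pixel (px, py) and report where it went."""
    if tile.contains(px, py):
        tile.pixels[py - tile.y][px - tile.x] += value
        return "tile"
    height, width = len(full_frame), len(full_frame[0])
    if 0 <= px < width and 0 <= py < height:
        # Pixel belongs to some other tile that may not be allocated yet,
        # so it goes to the (hypothetical) full-frame side buffer instead.
        full_frame[py][px] += value
        return "full_frame"
    return "dropped"  # outside the image entirely
```

Writing straight into `tile.pixels` without the `contains` check is the out-of-bounds access that corresponds to the crash described above.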

Are there any similar projects? Maybe it is better not to make another piece of unfinished crap but to unite efforts around a single big project?

Hard question.

LuxRender of course is very mature, proven over a long time and well supported; it has a similar algorithm and already has more features. The only reason I play with Cycles instead is that I never understood the SLG/main LuxRender relation (it was only explained last year), and unfortunately I had very bad luck getting it to compile and work here, whereas Cycles has worked immediately after make install ever since it was first published.

What help do you need besides that obvious bug that makes final render unusable?

- Perspective camera DoF support: connect_camera() must respect the lens aperture circle.

- Better understanding of non-symmetric BSDFs due to interpolated normals. For now I use a hack, and I am not sure it works for anything but the diffuse BSDF.

- Code refactoring. It is still a nightmare; maybe split the unfinished MLT-related code from kernel_mlt.h into another file.

- Many places like kernel_light.h and kernel_random.h have questionable parts. They are complex to explain and relate to how QMC works and how to sample the initial direction from a light. I really need help from other people who understand this.

- Too many fireflies in some scenes. I suspect the ray pdf goes negative or very close to zero when it should not, maybe precision related.

- MIS with the background as a light is broken. I just have had no time to look at it, as more important parts pull my attention.

- MIS is broken when the “shadow” feature is used; it highlights some parts twice and needs more work to fix, so no nice “milk in glass” pictures posted by me.

- Russian roulette is not supported at all; you MUST set min samples = max samples. I am too lazy to fix it, as it is not very hard and not very interesting ^^
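For anyone who wants to pick up the last item, this is roughly what Russian roulette looks like in a plain path tracer, as a minimal Python sketch. It is not code from the branch: the function name, the `min_bounces` parameter, and the choice of the brightest channel as survival probability are all assumptions. The hard part in a bidirectional tracer is that the survival probability also has to enter the path pdfs used for MIS, which this sketch does not cover.

```python
import random

def russian_roulette(throughput, depth, min_bounces, rng):
    """Return the (possibly rescaled) RGB throughput, or None if the path dies.

    Below min_bounces the path always continues. After that it survives with
    probability p and is divided by p, which keeps the estimate unbiased.
    """
    if depth < min_bounces:
        return throughput
    # Assumption: survival probability taken from the brightest channel.
    p_continue = min(1.0, max(throughput))
    if p_continue <= 0.0 or rng.random() >= p_continue:
        return None
    return tuple(c / p_continue for c in throughput)
```

Averaged over many paths, the surviving, rescaled throughput matches the original one, so dim paths get cut early without darkening the image.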

Bidirectional is cool, but what happened to volumetrics, MLT, and spectral sampling?

The volumetric part has worked for a long time, if you can call it “work” that it can produce light beams and a few other cool pictures. After some weeks of rendering per frame, of course. I barely changed it and only reuse it as a tool to debug the bidirectional part. It is a very nice feature when combined with bidirectional: for a single point light source in a foggy atmosphere it produces rays with acceptable speed if low bounce counts (0 or 1) are used.
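For readers new to volumes, the two textbook building blocks behind light beams in homogeneous fog are short enough to sketch in Python. This is generic background and not the branch's volume code; the function names and the constant extinction coefficient `sigma_t` are assumptions.

```python
import math
import random

def transmittance(sigma_t, distance):
    """Beer-Lambert law: fraction of light that survives `distance` in fog."""
    return math.exp(-sigma_t * distance)

def sample_free_flight(sigma_t, u):
    """Distance to the next scattering event for a uniform u in [0, 1)."""
    return -math.log(1.0 - u) / sigma_t

def average_free_flight(sigma_t, n, seed):
    """Monte Carlo estimate of the mean free path, which should be 1/sigma_t."""
    rng = random.Random(seed)
    return sum(sample_free_flight(sigma_t, rng.random()) for _ in range(n)) / n
```

With `sigma_t = 2.0` the average sampled distance comes out close to 0.5, the expected mean free path.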

MLT, or Metropolis Light Transport, or MCMC (Markov Chain Monte Carlo). Another hard question. In short, sometimes it is so nice I cannot believe it. But in 99.99% of cases it requires a terrific number of bounces to produce something that looks like an object and not an abstract point cloud. I still believe strongly in that algorithm; maybe it needs to be used selectively on parts like caustics from lights. It is too big a question to go into detail here.
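The core MCMC step is easier to see on a toy problem than inside a renderer. Below is a minimal Python sketch of a random-walk Metropolis sampler on a 1D function. It is not the MLT code in kernel_mlt.h; the function name, the uniform proposal, and the Gaussian test target are assumptions chosen for illustration. In MLT the "state" is a whole light path and `f` is its image contribution.

```python
import math
import random

def metropolis_samples(f, x0, n, step, rng):
    """Random-walk Metropolis: n samples distributed proportionally to f >= 0."""
    x, fx = x0, f(x0)
    out = []
    for _ in range(n):
        y = x + rng.uniform(-step, step)  # symmetric proposal (mutation)
        fy = f(y)
        # Accept with probability min(1, f(y)/f(x)); always leave a zero state.
        if fx == 0.0 or rng.random() < min(1.0, fy / fx):
            x, fx = y, fy
        out.append(x)  # a rejected proposal repeats the current state
    return out

def bump(x):
    """Toy target: an unnormalized Gaussian centred at x = 2."""
    return math.exp(-0.5 * (x - 2.0) ** 2)
```

Starting far from the peak, e.g. `metropolis_samples(bump, 0.0, 50000, 1.0, random.Random(0))`, the chain drifts toward x = 2 and then stays around it, which is the same "find a bright path and explore near it" behaviour that makes MLT attractive for caustics.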

Spectral sampling is just a joke, not really physically correct. It was a quick hack to sample the wavelength, assuming a black-body-like light source. It can make diamonds on the floor and colored volumetric Gaussian cones behind glass globes in fog. Not very useful.
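As a rough idea of what "sample the wavelength of a black body" can mean, here is a minimal Python sketch based on Planck's law. It is a guess at the general approach and not the hack used in the branch; the function names, the 380 to 780 nm range, and the 1 nm binning are assumptions.

```python
import math
import random

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K

def planck_radiance(wavelength_m, temperature_k):
    """Spectral radiance of a black body (Planck's law), per unit wavelength."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    return 2.0 * H * C * C / (wavelength_m ** 5 * math.expm1(x))

def sample_wavelength(temperature_k, rng, lo_nm=380.0, hi_nm=780.0, bins=400):
    """Pick a visible wavelength in nm with probability ~ Planck radiance."""
    width = (hi_nm - lo_nm) / bins
    centers = [lo_nm + (i + 0.5) * width for i in range(bins)]
    weights = [planck_radiance(c * 1e-9, temperature_k) for c in centers]
    return rng.choices(centers, weights=weights, k=1)[0]
```

A call such as `sample_wavelength(3000.0, random.Random(0))` returns one wavelength per path; a warm 3000 K source favours the red end of the range, a hotter one shifts toward blue.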

Update:

MCMC/MLT is starting to show its awesomeness: a few interior scenes with only flat diffuse meshes converge almost as quickly as Maxwell (at least I have that feeling after many noisy previous attempts :P)