Hello

I recently tried out Blender’s Motion Tracking tools, specifically for facial capture. My results are quite good and usable, but I was wondering if anyone could help me improve them. I’ll start with what I have done:

- Recorded a short clip where I have tracking markers on my face.

- No head mount, but the camera was stationary and there was NO HEAD movement beyond the facial expressions.

- Tracked all the points and did a camera solve. I wanted the motion attached to a character’s face, and “Setup Tracking Scene” did not give the best results, so I used “LINK EMPTY TO TRACK” instead. This gave me facial movement on 2 axes only, but that was perfect for what I wanted to do.

- Created a bone for every relevant TRACKING MARKER, attached each bone to its track marker empty using BONE CONSTRAINTS, then bound the geometry to the bones with automatic weights.

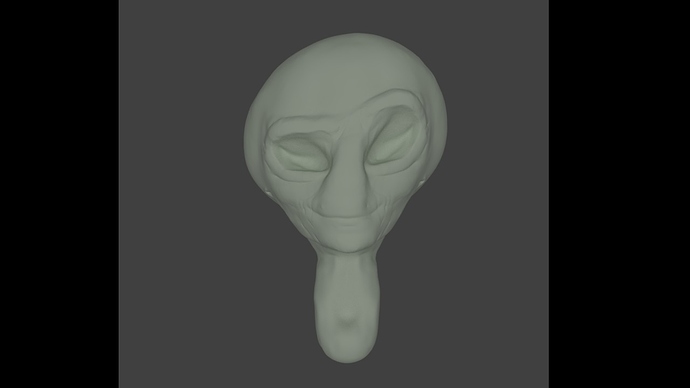

- Played the animation, and it looks great except that the character’s proportions are larger than mine (it’s a cartoon-style character). As a result, some expressions, even when I exaggerate them, look too small and not quite in line with the character.

I have set up SHAPE KEYS for the character before, but I was unable to get them to express properly with the motion tracking. I used the BONES as DRIVERS, and while it works, the results are also not great. The reason is that each TRACKING MARKER moves around a different amount at different points, resulting in inconsistent DRIVING sums. I tried adding some driver variables but just could not get it to work. This causes some horrible headaches, and at that point I might as well do everything by hand.
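One common way to make driver values less dependent on where each marker happens to sit is to drive a shape key from the distance between two markers (for example upper and lower lip) rather than from one marker's absolute position. The sketch below is only the arithmetic, assuming 2D positions since the empties move on 2 axes; `pair_distance` and `relative_opening` are hypothetical names, not Blender API. As far as I know, Blender's driver variables also include a "Distance" type that measures the distance between two objects directly, which may avoid scripting altogether.

```python
import math

# Hypothetical helpers (not Blender API): drive a shape key from the distance
# between two markers instead of one marker's absolute position.

def pair_distance(a, b):
    """Euclidean distance between two 2D points given as (x, y) tuples."""
    return math.hypot(b[0] - a[0], b[1] - a[1])


def relative_opening(a, b, rest_distance):
    """Change in the a-b distance relative to the base pose.

    Returns 0.0 at rest, a positive value when the markers move apart,
    and a negative value when they move closer together.
    """
    if rest_distance <= 0:
        raise ValueError("rest_distance must be positive")
    return pair_distance(a, b) / rest_distance - 1.0


# Made-up coordinates: lips closed in the base pose, then the mouth opens.
rest = pair_distance((0.0, 1.0), (0.0, 0.0))
print(relative_opening((0.0, 1.0), (0.0, -0.5), rest))  # 0.5
```

Because the value is measured between two markers, a small shift that moves both of them together leaves it unchanged.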

Am I missing something? This was my first time using motion tracking, so if there are any other steps I could follow, please let me know so I can improve.

This sounds crazy even as I type it, but what I think I need is something like a SHAPE KEY calculator: for each motion tracker it would find the highest driving point and automatically map it to 1, and map the lowest point to 0. If there is such a thing, please help me find it.
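The "calculator" described above is essentially per-marker min/max normalization. A rough sketch of the math, with hypothetical function names and made-up sample values: the lowest value a marker reaches maps to 0, the highest to 1, and anything in between is scaled linearly.

```python
# Hypothetical "shape key calculator": per-marker min/max normalization.

def normalize_track(values):
    """Map a marker's per-frame values to 0..1.

    The lowest value becomes 0.0 and the highest becomes 1.0.
    """
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # The marker never moved, so there is nothing to drive.
        return [0.0 for _ in values]
    return [(v - lo) / span for v in values]


def remap(value, lo, hi):
    """Normalize one value against a previously measured (lo, hi) range.

    The result is clamped to 0..1 so a frame outside the measured range
    cannot push the shape key past its limits.
    """
    if hi == lo:
        return 0.0
    t = (value - lo) / (hi - lo)
    return min(1.0, max(0.0, t))


# Made-up values for one marker over four frames.
samples = [2.0, 4.0, 6.0, 3.0]
print(normalize_track(samples))  # [0.0, 0.5, 1.0, 0.25]
print(remap(7.0, 2.0, 6.0))      # 1.0 (clamped)
```

Inside Blender, the per-frame values would presumably be collected by stepping through the frames of the solved clip, and a function like `remap` could be made callable from scripted-expression drivers by adding it to `bpy.app.driver_namespace`; that wiring is an assumption and is not shown here.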

OR

Maybe there is a way I haven’t tried to increase how far the BONES move? BONE CONSTRAINT INFLUENCE does not go past 1.
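Since influence stops at 1, one alternative is to scale each marker's offset from its rest (base pose) position before it reaches the bone. The sketch below shows only that arithmetic; `amplify` is a hypothetical helper, and a factor of 2.0 doubles the distance moved. In Blender this might be done with a driver on the bone's location, or, I believe, with the Transformation constraint, which maps a source range onto a different destination range; neither approach is tested here.

```python
# Hypothetical helper: exaggerate a marker's motion by scaling its offset
# from the rest position, instead of pushing constraint influence past 1.

def amplify(rest, current, factor):
    """Return rest + (current - rest) * factor, component by component.

    rest and current are coordinate tuples of the same length;
    factor > 1 exaggerates the motion, factor < 1 dampens it.
    """
    return tuple(r + (c - r) * factor for r, c in zip(rest, current))


rest_pos = (0.0, 0.0, 0.0)
tracked = (0.1, 0.0, -0.2)
print(amplify(rest_pos, tracked, 2.0))  # (0.2, 0.0, -0.4)
```

Scaling the offset rather than the position keeps the base pose unchanged, so only the expressions get bigger, which fits a character with larger proportions.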

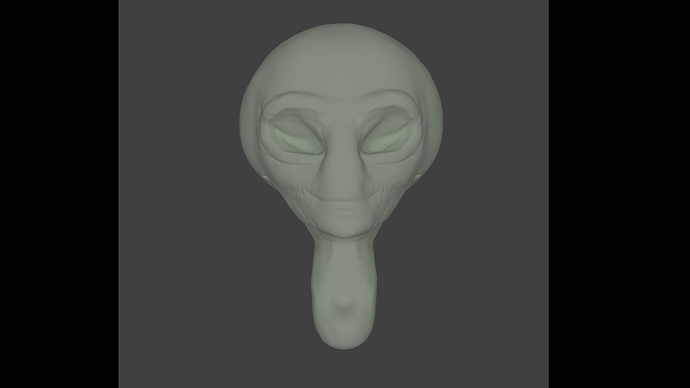

Thank you if you can help. Below are 3 pictures: 1 is the base pose, and the others are some expressions.