What are render passes?

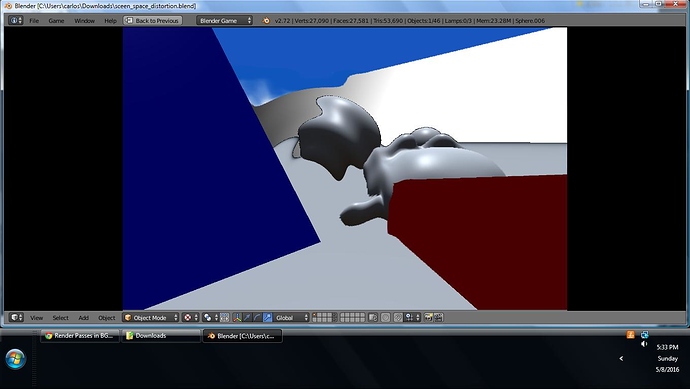

Render passes are a great trick in game engines. They allow you to outline objects:

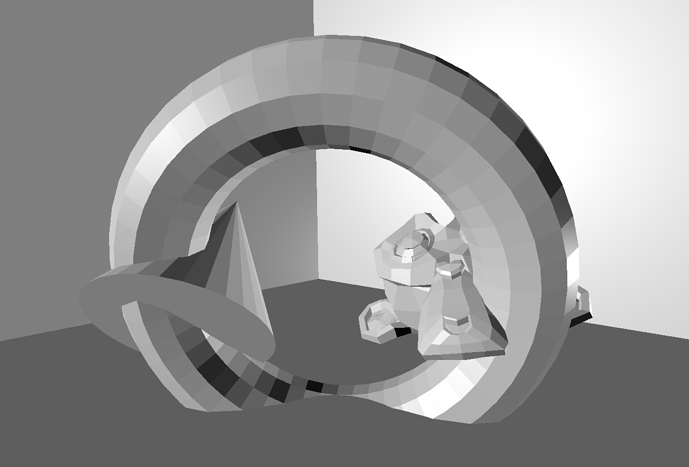

And do many weirder and more wonderful things.

Basically, a render pass is a render of only a subset of the scene. It may be only specific objects (as in the example above, where the outline pass is a render showing just the chair), or it may be a specific attribute (e.g. only the specular component of every object).

What this tutorial is not:

This tutorial is not a collection of sample code. If I get around to it, I’ll provide some, but for now it is just a knowledge dump.

So how do we do it:

This all stems from the fact that the RenderToTexture update happens only when you call source.refresh(True), so you can twiddle object parameters however you like before then. Because the passes are just textures, you can then combine them in the node editor and display the result on a plane placed in front of the camera.

One comment before we get into some examples: if you’re going to display render passes directly on the scene (as in the first image), you’ll need two cameras in the same location, because bge.texture.ImageRender does not accept the active camera as a source but does accept a second camera placed at exactly the same position.
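Keeping the second camera in the same location can be done with a small per-frame sync. A minimal sketch, assuming the extra camera is called `PassCamera` (a hypothetical name) and that the pass should also match the main camera’s lens and clipping distances:

```python
def sync_pass_camera(active_cam, pass_cam):
    """Put `pass_cam` exactly where `active_cam` is, with the same lens and clipping.

    Call it every frame before rendering the pass, e.g. with
    sync_pass_camera(scene.active_camera, scene.objects['PassCamera']).
    """
    # Same position and rotation in world space.
    pass_cam.worldPosition = active_cam.worldPosition
    pass_cam.worldOrientation = active_cam.worldOrientation
    # Same projection, so the pass lines up pixel for pixel with the main view.
    pass_cam.lens = active_cam.lens
    pass_cam.near = active_cam.near
    pass_cam.far = active_cam.far
```

Parenting `PassCamera` to the main camera in the editor with no offset keeps the positions in sync without code; copying the lens and clipping values still matters if they change at run time.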

The most basic pass simply renders only some objects. For the outline effect above:

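```python
import bge

# Assumed: pickups and RenderToTexture are modules from the author's own project.
import pickups
import RenderToTexture

cont = bge.logic.getCurrentController()
scene = cont.owner.scene
overlay_obj = scene.objects['Overlay']
target = pickups.get_looking_at()

# Hide every visible object except the one being looked at.
made_invisible = []
for obj in [o for o in scene.objects if o.visible]:
    if obj is not target:
        obj.visible = False
        made_invisible.append(obj)

# Render the pass while only the target is visible.
RenderToTexture.update(overlay_obj)

# Put back everything that was hidden.
for obj in made_invisible:
    obj.visible = True
```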

Now we’ve set every object but one to be invisible for the render pass, and then set them all back the way they were.
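The same hide, render, restore pattern can be wrapped in a context manager so the restore step also runs if the render raises. A sketch (the helper name `only_visible` is hypothetical; it relies only on the `visible` attribute used above):

```python
from contextlib import contextmanager


@contextmanager
def only_visible(objects, keep):
    """Temporarily hide every visible object not in `keep`; restore on exit."""
    keep_ids = {id(o) for o in keep}  # compare by identity, like `is not` above
    hidden = []
    try:
        for obj in list(objects):
            if obj.visible and id(obj) not in keep_ids:
                obj.visible = False
                hidden.append(obj)
        yield hidden
    finally:
        # Runs even if the render pass raised, so the scene is never left hidden.
        for obj in hidden:
            obj.visible = True
```

In the outline example, the two loops would become `with only_visible(scene.objects, keep=[target]): RenderToTexture.update(overlay_obj)`.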

How about, say, a specular pass?

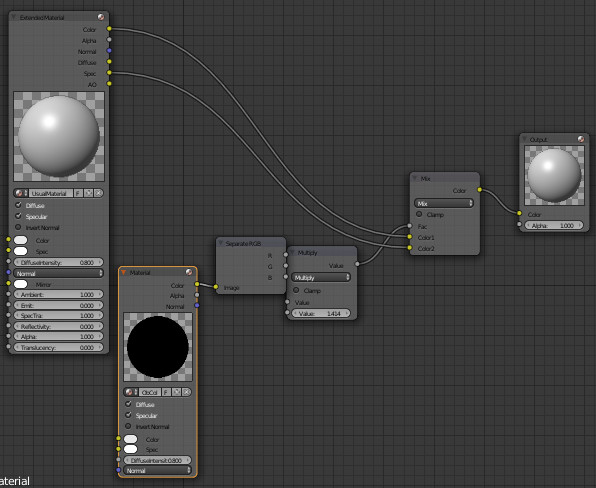

What happens if we have this node setup:

(Note that the material that’s currently black has Object Colour enabled and is shadeless.)

So now, by twiddling the object colour, we can get the material to render specular only. Ain’t that grand? With a bit more twiddling, you should be able to get a normal pass as well.
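The colour twiddling can follow the same save, change, restore shape. This is a sketch under assumptions: which object colour switches your node setup to specular only depends on the nodes, so `SPECULAR_ONLY` below is a hypothetical value, and the objects passed in are assumed to use materials with Object Colour enabled.

```python
from contextlib import contextmanager

# Hypothetical: the object colour your node setup treats as "specular only".
SPECULAR_ONLY = (0.0, 0.0, 0.0, 1.0)


@contextmanager
def temporary_color(objects, pass_color):
    """Set obj.color on every object for the duration of a pass, then restore it."""
    objects = list(objects)
    saved = [(obj, tuple(obj.color)) for obj in objects]
    try:
        for obj in objects:
            obj.color = pass_color
        yield
    finally:
        for obj, color in saved:
            obj.color = color
```

Pass only the mesh objects whose materials use Object Colour, not all of `scene.objects`: lamps also have a `color` attribute, and blacking those out would remove the very highlights the pass is meant to capture.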

How about passing arbitrary data into a render pass? What if, instead of a specular pass, you want a pass of, say, object density, mass, or some other non-material parameter? One render pass I worked on needed lots of data (potentially tens of parameters) about thousands of objects. This was achieved by:

- Assign every object a unique colour (by setting obj.color on a shadeless material)

- Compile the GLSL shader that will display the render pass with a great big long array of structs containing the relevant data

- Do a render pass of those colours

- Inside the fragment shader, look up the object in the array of structs and extract the required data.
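The four steps above can be sketched in Python. This is one possible encoding, not the author’s code: it packs each object’s index into 24 bits of RGB (the author’s pass in the image below uses shades of red only), assumes the colour survives the render unchanged (shadeless material, no lighting or colour management), and bakes hypothetical `density` and `colour` fields into a GLSL 1.20 constant array. Step 3 is just an ordinary render pass. The generated text is a prelude: your fragment shader’s own `main()` would sample the pass and call `lookup()`. Very large constant arrays may exceed what some drivers will compile.

```python
MAX_IDS = 1 << 24  # 8 bits per RGB channel


def id_to_rgb(index):
    """Pack an integer object ID into an RGB colour with components in 0.0-1.0."""
    if not 0 <= index < MAX_IDS:
        raise ValueError("object ID %d does not fit in 24 bits" % index)
    return (((index >> 16) & 0xFF) / 255.0,
            ((index >> 8) & 0xFF) / 255.0,
            (index & 0xFF) / 255.0)


def assign_id_colours(objects):
    """Step 1: give each object a unique colour through obj.color."""
    for index, obj in enumerate(objects):
        obj.color = id_to_rgb(index) + (1.0,)  # RGBA, fully opaque


def build_lookup_glsl(records):
    """Step 2: bake per-object data into GLSL as a constant array of structs.

    records[i] holds the data for object ID i; 'density' and 'colour'
    are hypothetical stand-ins for whatever the pass needs.
    """
    if not records:
        raise ValueError("need at least one record")
    entries = ",\n    ".join(
        "ObjData(%.6f, vec3(%.6f, %.6f, %.6f))"
        % (rec["density"], rec["colour"][0], rec["colour"][1], rec["colour"][2])
        for rec in records)
    return """#version 120
struct ObjData {
    float density;
    vec3 colour;
};
const int NUM_OBJECTS = %d;
const ObjData DATA[NUM_OBJECTS] = ObjData[NUM_OBJECTS](
    %s
);

// Step 4: decode the ID colour sampled from the pass and fetch that object's data.
ObjData lookup(vec3 idColour) {
    int id = int(floor(idColour.r * 255.0 + 0.5)) * 65536
           + int(floor(idColour.g * 255.0 + 0.5)) * 256
           + int(floor(idColour.b * 255.0 + 0.5));
    if (id >= NUM_OBJECTS) {
        id = 0;  // colour with no matching object: fall back to entry 0
    }
    return DATA[id];
}
""" % (len(records), entries)
```

The records themselves could come from a JSON file via `json.load`, which is how the author’s project supplied its colours and parameters.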

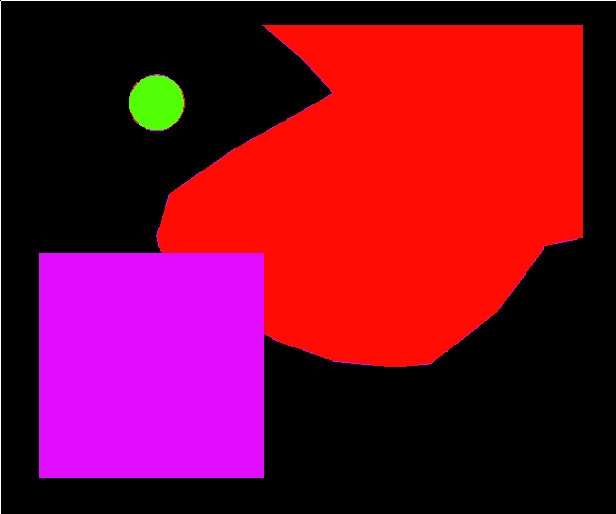

Implementing this system took several hours. It works when you don’t need lighting information (i.e. each object is a single flat colour), but mipmapping can cause interesting issues.

In that image, the render pass is simply shades of red, and the colours are added by the fragment shader that displays the render pass; those colours are loaded from a JSON file along with several other parameters. Mipmapping is responsible for the purple blur around the red object.
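Why mipmapping smears IDs can be shown with a few lines of arithmetic. The sketch assumes, as in the image, that the ID is stored in the red channel (8 bits, so 0 to 255) with 0 for the background; the particular values are made up. Averaging an object texel with a background texel, which is roughly what building a mipmap level or linear filtering does, decodes to an ID belonging to neither, so the shader paints those edge pixels with some other object’s colour.

```python
def red_to_id(red):
    """Decode an object ID stored in the red channel only (0.0-1.0 -> 0-255)."""
    return int(round(red * 255.0))


def average(a, b):
    """Roughly what a mipmap level (or linear filtering) does to two neighbouring texels."""
    return (a + b) / 2.0


object_red = 200 / 255.0   # texel inside the object: ID 200
background_red = 0.0       # texel outside every object: ID 0

edge_id = red_to_id(average(object_red, background_red))
# edge_id is 100: the lookup returns object 100's data for the edge pixels.
```

Sampling the pass with nearest-neighbour filtering and no mipmaps keeps every sample equal to an ID that was actually rendered, which is one way to avoid the halo.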

Conclusions:

You can do a lot by rendering non-material data with the render-to-texture functionality, but beware: every render pass costs quite a lot of processor time.

Things you can do with render passes:

- Object effects
    - Outlines and highlights
    - Bloom on specific objects
    - Screen-space distortion around specific objects (if you’re brave)
- Scene effects
    - Bloom only on specular highlights or emissive objects
    - Ambient occlusion in the node editor (if you’re brave: you have to use the normal data)
    - Soft particles (if you’re braver: fading out objects based on the normals of nearby objects)
- Weird and wonderful
    - Writing a renderer inside a fragment shader (if you’re a legend, like martinsh, who did)
