Hi!

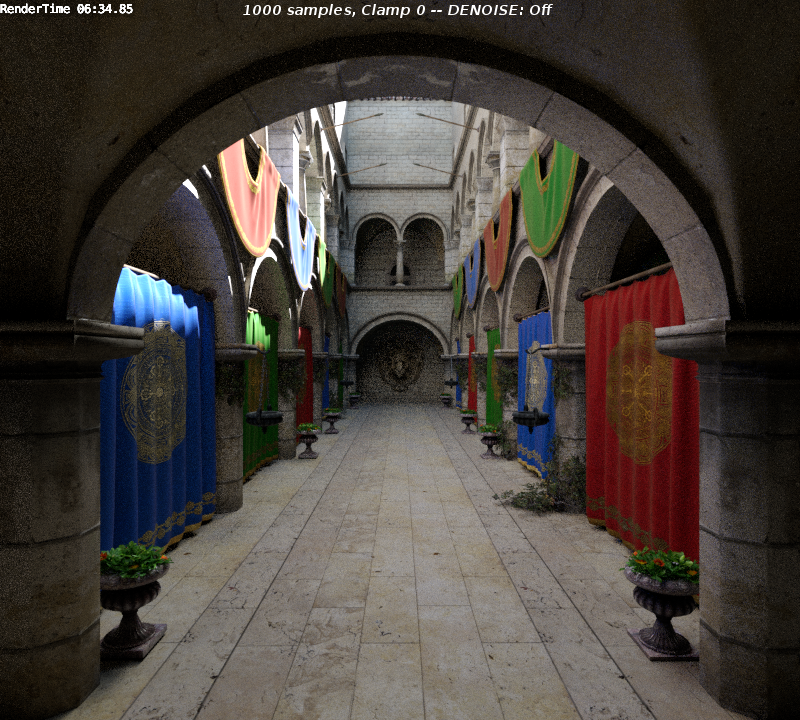

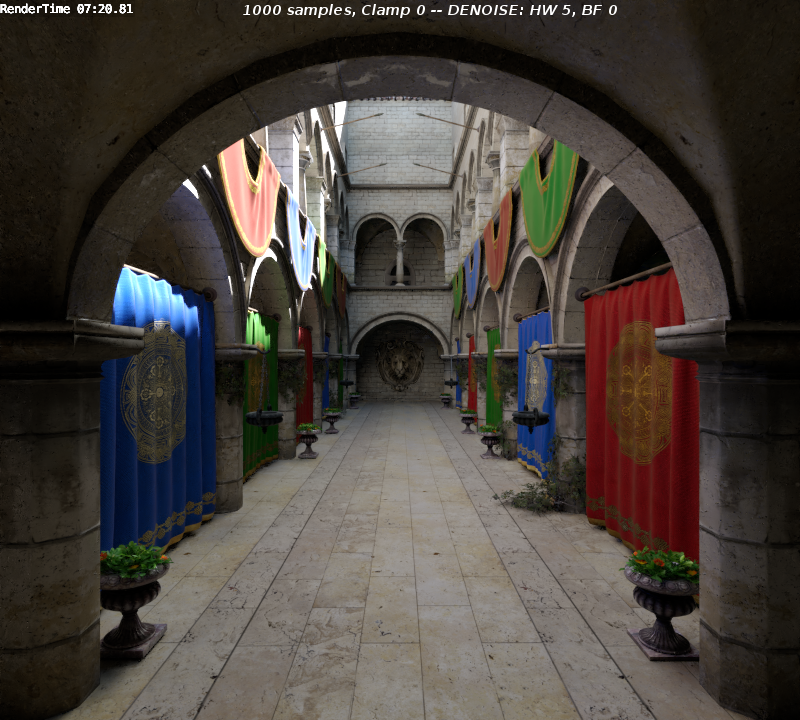

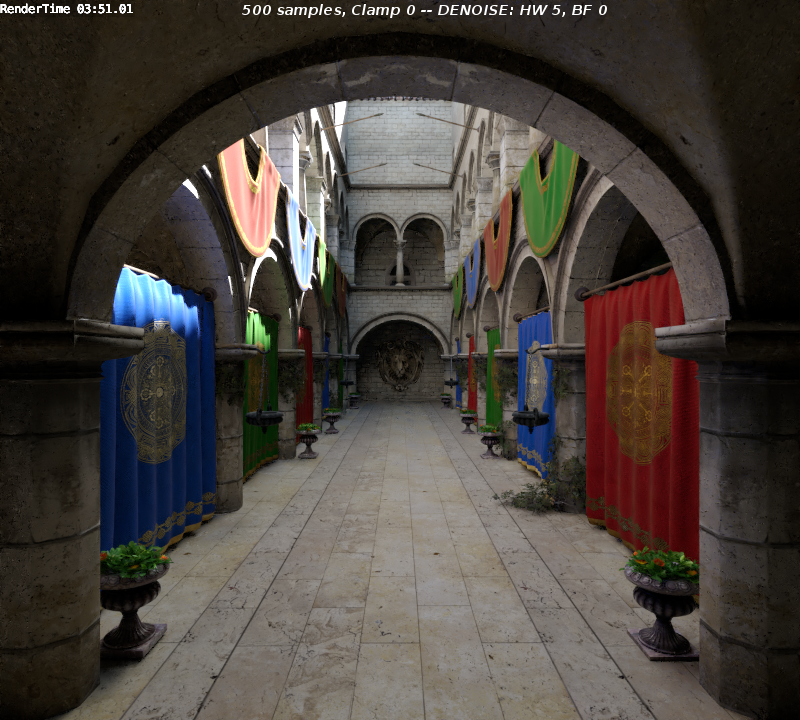

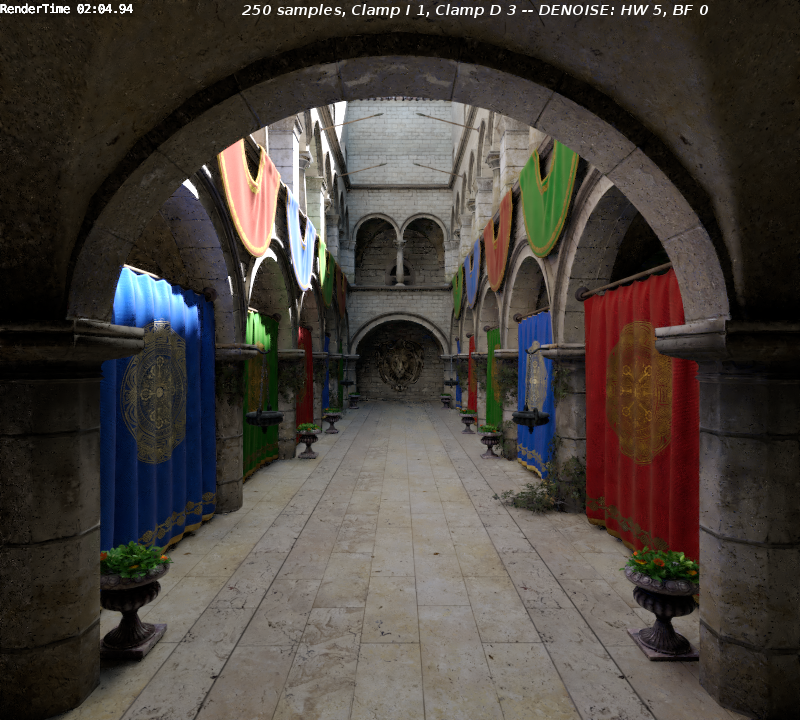

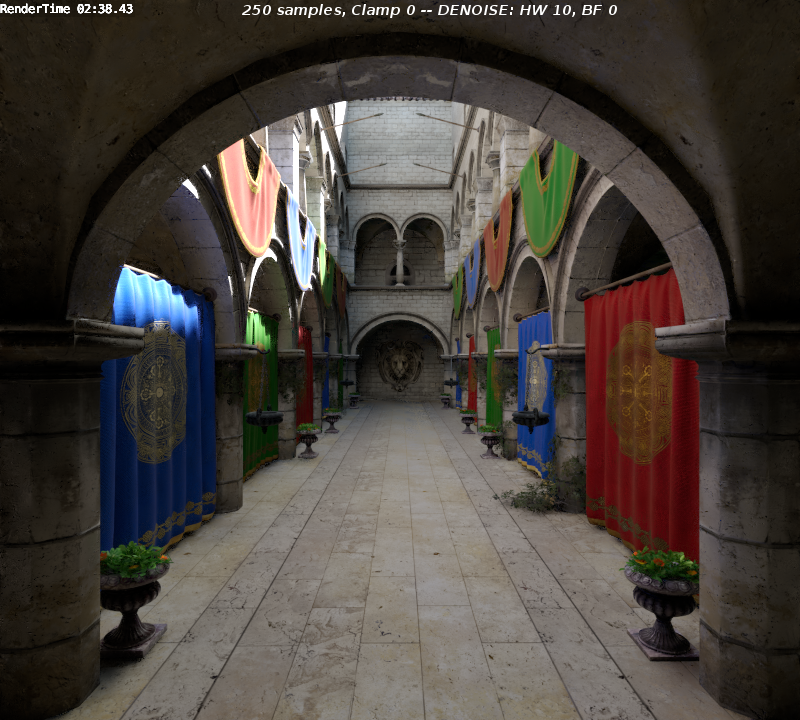

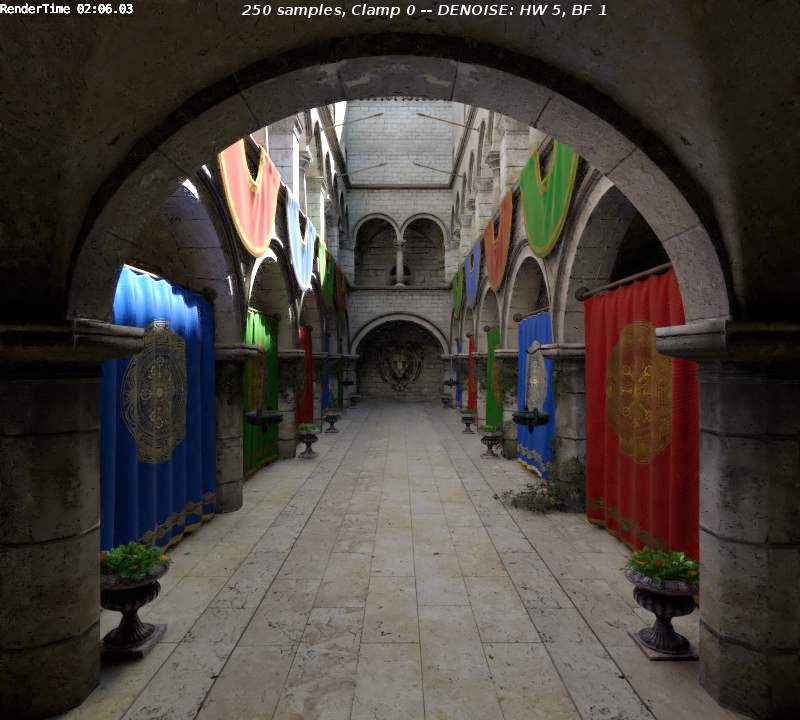

I’ve been playing around with LWR denoising for Cycles (for details, see here) for a while, and I now have a working and usable demo version of it - just in time for the GSoC proposal.

So far, the denoiser is pretty simple to use - in the Sampling panel, just activate “Use LWR filtering”.

The options are:

- Half Window size: Pixels up to this distance will be used in the denoising step - increasing this value will make the denoiser slower, but helps to get rid of a “splotchy” look on flat surfaces (but might also blur things slightly more).

- Bandwidth factor: This value makes the denoising more or less aggressive - the default of 0 should usually be fine, except for hair/fur/grass.

- Per-pass filtering: Enabling a pass here makes the denoiser filter it separately and then combine it with the other filtered results - this should improve results in theory, but isn’t really useful in practice yet. Also, you need to enable the corresponding render pass as well as its color pass (e.g. DiffDir and DiffCol if you want to denoise Diffuse Direct separately).

- Additionally, in the performance settings, an option called “Prepass samples” was added: Setting this to some value (like 16) will make Cycles render (and denoise, if you have it activated) the image with 16 samples before rendering the remaining samples, to provide a quick preview.
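To give a rough feeling for what a window size and a bandwidth do in a locally weighted regression filter, here is a small toy sketch in Python. It is not the code from the build: it works on a single 1D row of values instead of an image, it weights neighbours only by their distance in pixels (no render features), and the names `lwr_denoise_1d`, `half_window` and `bandwidth` are made up for the illustration. In particular, `bandwidth` here is a plain Gaussian width in pixels and does not map directly to the “Bandwidth factor” setting.

```python
import math


def lwr_denoise_1d(values, half_window, bandwidth):
    """Toy locally weighted linear regression over a 1D row of values.

    For each position, a straight line is fitted to the neighbours within
    `half_window` pixels, weighted by a Gaussian of width `bandwidth`,
    and the fitted value at the center replaces the noisy one.
    Both parameter names are hypothetical, chosen for this sketch only.
    """
    n = len(values)
    result = []
    for i in range(n):
        lo = max(0, i - half_window)
        hi = min(n - 1, i + half_window)
        # Accumulate the weighted sums needed for the normal equations.
        sw = swx = swy = swxx = swxy = 0.0
        for j in range(lo, hi + 1):
            dx = j - i
            w = math.exp(-0.5 * (dx / bandwidth) ** 2)
            sw += w
            swx += w * dx
            swy += w * values[j]
            swxx += w * dx * dx
            swxy += w * dx * values[j]
        det = sw * swxx - swx * swx
        if abs(det) < 1e-12:
            # Not enough neighbours for a line fit: use the weighted mean.
            result.append(swy / sw)
        else:
            # Intercept of the weighted least-squares line at dx = 0.
            result.append((swxx * swy - swx * swxy) / det)
    return result


# A flat value of 1.0 with alternating +/-0.5 noise.
noisy = [1.0 + (0.5 if i % 2 else -0.5) for i in range(11)]
smooth = lwr_denoise_1d(noisy, half_window=3, bandwidth=2.0)
```

A larger `half_window` means more neighbours per pixel (slower, but smoother on flat areas), while a larger `bandwidth` gives distant neighbours more weight, which makes the filter more aggressive. Because a line is fitted instead of a plain average, a linear gradient passes through unchanged.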

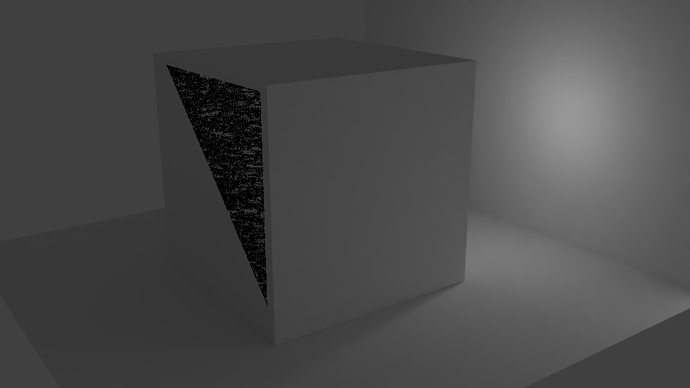

Currently, the denoising still has some problems with fine geometry - bump- or normal-maps as well as grass/hair/fur tend to be overblurred. There are ways to improve this a lot, but I need some remaining to-do items for my GSoC proposal ;).

Also, hard shadow edges may currently be overblurred, but just like above, there are ways to fix this in the future.

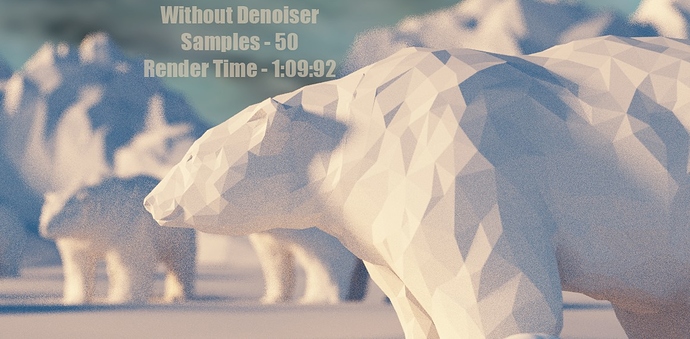

Generally, though, you should keep in mind that denoising is not magic: It can improve an image, but it can’t magically make detail appear where there is none in the noisy input. As a rule of thumb, if you couldn’t recognize something in the noisy image, the denoiser won’t be able to do so either.

This doesn’t mean that you can’t use it for quick previews - you can, but don’t expect production-quality results from 16 samples ;).

The denoising works on both CPU and GPU, although GPUs might run into problems because the current code uses a lot of extra memory when enabling LWR, at least for large images. Again, this can and will be improved in the future.

Animations might be tricky because of flickering - this could in theory be resolved with inter-frame filtering, but there’s no way to implement this in Blender, since every frame is rendered individually :(.

Also, as a final note, I’m pretty sure that at least Viewport rendering is broken in this build, and I didn’t test that much in general, so I don’t recommend using this build for serious work (yet).

So, it’d be awesome if you could test the denoiser a bit and give me some feedback regarding usefulness/shortcomings/etc., especially since I’m currently finishing my GSoC proposal for a more stable implementation.

And, of course, here’s the link: https://dl.orangedox.com/Kr1KBVHyQI6ARVNm0w
