Hi, Cycles renders are generally much better now than when it first came out, but I have found there is still scope for a bit of de-speckling. For example, these renders are a) 512 samples, b) 64 samples and c) seven frames at 64 samples averaged, with the pixel furthest from the mean excluded at each position. The code is relatively simple (though NumPy is never very transparent) and is included at the bottom.
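The averaging in c) can also be written as a small function on an in-memory stack of frames. This is a minimal sketch, assuming the frames are already loaded into a float array of shape `(n, h, w, c)` with `n >= 2`; the name `drop_furthest_mean` is hypothetical. It uses `np.take_along_axis` instead of explicit index grids, so there is no row/column ordering to get wrong.

```python
import numpy as np

# the luminance weights used in the scripts below (R, G, B)
LUMA_RGB = np.array([0.2989, 0.5870, 0.1140])


def drop_furthest_mean(stack):
    """Per-pixel mean of n frames, leaving out at each pixel the frame whose
    luminance is furthest from that pixel's mean.

    stack: array of shape (n, h, w, c) with c >= 3 (RGB or RGBA) and n >= 2.
    Returns a float array of shape (h, w, c).
    """
    stack = np.asarray(stack, dtype=np.float64)
    n = stack.shape[0]
    if n < 2:
        raise ValueError('need at least two frames')
    lum = stack[..., :3] @ LUMA_RGB            # (n, h, w) luminance per frame
    dist = np.abs(lum - lum.mean(axis=0))      # distance from the per-pixel mean
    worst = np.argmax(dist, axis=0)            # (h, w): index of the outlier frame
    # gather the outlier pixel at every position; index array shaped (1, h, w, 1)
    outlier = np.take_along_axis(stack, worst[None, :, :, None], axis=0)[0]
    return (stack.sum(axis=0) - outlier) / (n - 1)
```

For 8-bit PNG frames the result still needs rounding and clipping to 0..255 before `astype(np.uint8)`.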

I have tried running this from inside Blender and find that the range of pixel values is much reduced; maybe I'm not getting hold of the render results correctly. It's also not ideal having Blender freeze for a long time with no indication of whether the process has crashed or not! Any help gratefully received.
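On the freezing: `bpy.ops.render.render()` blocks Blender's interface until the render finishes, so the UI cannot show progress from inside the loop. One workaround (a suggestion, not tested on this scene) is to run the script without the UI, using Blender's `--background` and `--python` command-line options, so anything the script prints shows up in a terminal as it runs. A minimal launcher sketch; the helper names, `scene.blend` and `despeckle.py` are placeholders, and `blender` is assumed to be on the PATH.

```python
import subprocess
import sys


def blender_background_cmd(blend_file, script, blender='blender'):
    """Build a Blender command line: --background runs without the UI and
    --python runs the script once the .blend file given before it is loaded."""
    return [blender, '--background', blend_file, '--python', script]


def run_and_stream(cmd):
    """Run cmd, echoing its combined stdout/stderr line by line as it arrives.
    Returns the process exit code."""
    with subprocess.Popen(cmd, stdout=subprocess.PIPE,
                          stderr=subprocess.STDOUT, text=True) as proc:
        for line in proc.stdout:
            sys.stdout.write(line)
            sys.stdout.flush()
        return proc.wait()
```

For example `run_and_stream(blender_background_cmd('scene.blend', 'despeckle.py'))`, with a `print(..., flush=True)` after each `bpy.ops.render.render()` call in the script to report which render has finished.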

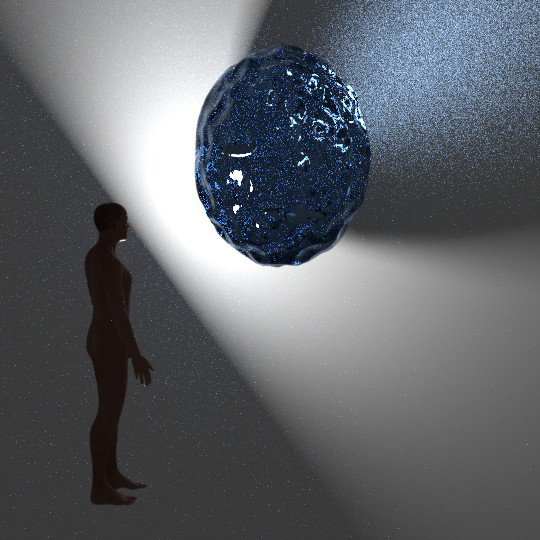

a)

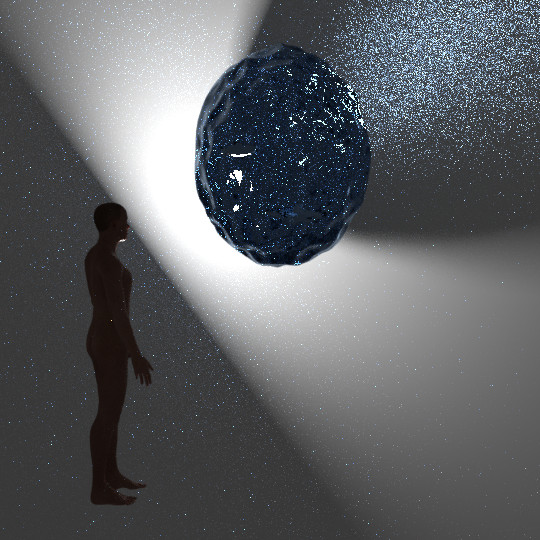

b)

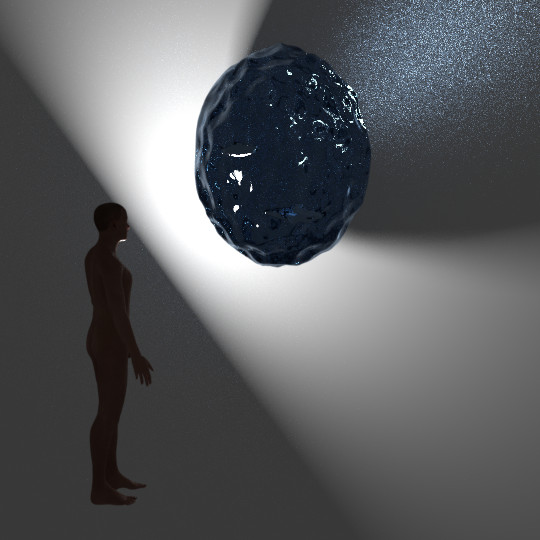

c)

Python script:

```python
#!/usr/bin/env python

import os

import numpy as np
from PIL import Image

''' In Blender you need to use the animation render to produce a series
of images of the same scene, say 5 or 7. NB you must click the little
clock icon next to Sampling:Settings:Seed to ensure that all images have
different fireflies.

Then run this file in the same directory after changing the variable FILE
below to match the stem of the file name you specified when rendering in
Blender.
'''

FILE = 'pic'  # stem of file used in Blender render, will be saved as pic0001.png etc.

L = [0.2989, 0.5870, 0.1140, 0.0]  # standard luminance weights (alpha ignored)

rndr = None

# assume fewer than 100 files, practically will always be fewer than 10
f_list = sorted(f for f in os.listdir() if f.startswith(FILE + '00'))

n = len(f_list)

for i, f in enumerate(f_list):
    im = Image.open(f).convert('RGBA')  # guarantee 4 channels to match L
    if rndr is None:  # first one - create render array to hold all images
        w, h = im.width, im.height
        rndr = np.zeros((n, h, w, 4), dtype=np.float64)  # np.float was removed in NumPy 1.24
    rndr[i] = np.asarray(im)

lum = (rndr * L).sum(axis=3)  # luminance of every pixel in every frame, shape (n, h, w)
lum = np.abs(lum - lum.mean(axis=0))  # absolute distance from the per-pixel mean

hh, ww = np.indices((h, w))  # row and column index arrays, both shape (h, w)

sel = np.argsort(lum, axis=0)  # sort along the first (frame) axis

# subtract the pixel furthest from the mean and average the remaining n - 1
final = (rndr.sum(axis=0) - rndr[sel[n - 1], hh, ww]) / (n - 1)
final = np.clip(np.rint(final), 0, 255).astype(np.uint8)

Image.fromarray(final).save('{}_final.png'.format(FILE))  # save image
```
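A note on the index arrays used for the fancy indexing: `sel[k]` has shape `(h, w)`, so the row and column index arrays used alongside it must also be `(h, w)`. `np.meshgrid(x, y)` with its default `'xy'` indexing returns arrays of shape `(len(y), len(x))`, so the column range has to go first; with the ranges the other way round the shapes only line up for square images. The small check below uses an arbitrary non-square random stack (the sizes and seed are illustrative only) to show that the meshgrid, `np.indices` and `np.take_along_axis` forms agree.

```python
import numpy as np

n, h, w = 3, 2, 5                             # deliberately non-square
rng = np.random.default_rng(0)
stack = rng.random((n, h, w, 4))              # n frames of h x w RGBA
sel = rng.integers(0, n, size=(h, w))         # a chosen frame for every pixel

# meshgrid(x, y) returns arrays of shape (len(y), len(x)): columns go first
ww, hh = np.meshgrid(np.arange(w), np.arange(h))  # both (h, w)
a = stack[sel, hh, ww]                        # (h, w, 4)

# np.indices returns the row grid first, then the column grid
rows, cols = np.indices((h, w))
b = stack[sel, rows, cols]

# take_along_axis needs no explicit grids at all
c = np.take_along_axis(stack, sel[None, :, :, None], axis=0)[0]

# with the ranges swapped the grids are (w, h) and cannot broadcast
# against sel, which is (h, w)
try:
    ww_bad, hh_bad = np.meshgrid(np.arange(h), np.arange(w))
    stack[sel, hh_bad, ww_bad]
except IndexError as err:
    print('swapped order fails:', err)
```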

And this is the Blender attempt (without the full functionality of the above; I gave up after a while):

```python
import sys  # resorted to using PIL to check at what stage pixel quality deteriorates

import bpy
import numpy as np

sys.path.insert(1, '/usr/lib/python3/dist-packages')  # system site-packages, for PIL
from PIL import Image

N = 3

scene = bpy.context.scene  # shortcut to increase brevity
scene.use_nodes = True
tree = scene.node_tree
links = tree.links

for node in list(tree.nodes):  # iterate over a copy while removing
    tree.nodes.remove(node)

rl = tree.nodes.new('CompositorNodeRLayers')
rl.location = (185, 285)

v = tree.nodes.new('CompositorNodeViewer')
v.location = (750, 210)
v.use_alpha = False
links.new(rl.outputs[0], v.inputs[0])

c = tree.nodes.new('CompositorNodeComposite')
links.new(rl.outputs[0], c.inputs[0])

bpy.data.images['Viewer Node'].colorspace_settings.name = 'sRGB'

scene.render.resolution_percentage = 100  # to avoid rounding error with w, h
w = scene.render.resolution_x
h = scene.render.resolution_y

rndr = np.zeros((N, h, w, 4), dtype=np.float64)  # np.float was removed in NumPy 1.24

# do N renders into rndr
for i in range(N):
    scene.cycles.seed = i  # different 'noise' each time
    bpy.ops.render.render()
    # Viewer Node pixels: flat RGBA floats, bottom row first
    rndr[i] = np.array(bpy.data.images['Viewer Node'].pixels).reshape(h, w, 4)

# use luminance
L = [0.2989, 0.5870, 0.1140]  # luminance RGB factors
lum = (rndr[:, :, :, :3] * L).sum(axis=3)

hh, ww = np.indices((h, w))  # row and column index arrays, both shape (h, w)

sel = np.argsort(lum, axis=0)  # sort the N renders along the first axis

mid = N // 2  # if N is even this isn't the official median

final = rndr[sel[mid], hh, ww]  # use the middle one

# even if I just save rndr[0], i.e. the first unaltered render, it has lost image quality
# flip rows (Blender stores the bottom row first) and clip so values above 1.0 don't wrap
out = (np.clip(final[::-1, :, :], 0.0, 1.0) * 255).astype(np.uint8)
Image.fromarray(out).save('/home/patrick/Downloads/Untitled Folder/scr_caps/addon_test/final5.png')  # save image
```
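On the reduced range: one likely cause (an assumption, not checked against this scene) is that the Viewer Node image holds scene-linear float values, whereas the PNGs written by the animation render have the view transform applied. Multiplying linear values by 255 gives a darker, flatter picture, and values above 1.0 are not clipped by `astype(np.uint8)` (the result of out-of-range float-to-integer casts is platform-dependent). The sketch below applies the standard sRGB transfer curve (IEC 61966-2-1) to RGB and clips before converting; the helper name `linear_to_srgb8` is hypothetical, and if the scene uses a view transform such as Filmic the result will still not match Blender's own output exactly.

```python
import numpy as np


def linear_to_srgb8(rgba):
    """Convert scene-linear RGBA floats (..., 4) to 8-bit sRGB.

    RGB gets the piecewise sRGB curve; alpha is left linear. Values are
    clipped to [0, 1] first, so highlights above 1.0 saturate at 255
    instead of wrapping when cast to uint8.
    """
    rgba = np.clip(np.asarray(rgba, dtype=np.float64), 0.0, 1.0)
    rgb = rgba[..., :3]
    srgb = np.where(rgb <= 0.0031308,
                    12.92 * rgb,                               # linear toe
                    1.055 * np.power(rgb, 1 / 2.4) - 0.055)    # gamma segment
    out = np.concatenate([srgb, rgba[..., 3:]], axis=-1)
    return np.rint(out * 255).astype(np.uint8)
```

In the Blender script this would take the place of the `* 255` conversion before saving, e.g. `Image.fromarray(linear_to_srgb8(final[::-1]))`.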

```python
# below: non-PIL original version
'''
# put pixels back in new image and save over file in render specs
im = bpy.data.images.new('Sprite', alpha=True, width=w, height=h)
im.colorspace_settings.name = 'sRGB'
im.use_alpha = True
im.alpha_mode = 'STRAIGHT'
im.filepath_raw = scene.render.filepath
im.file_format = 'PNG'
im.pixels = final.flatten()  # put our median pixels into new image
im.save()
'''
```
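For the non-PIL route, Blender's `Image.pixels` is a flat sequence of RGBA floats (nominally 0..1) with the bottom row first, which is why the PIL version flips the rows with `[::-1]`. A minimal sketch of converting between that layout and the top-row-first `(h, w, 4)` uint8 arrays that PIL uses; both helper names are hypothetical, and they need no `bpy` to try out.

```python
import numpy as np


def pil_to_blender_pixels(arr):
    """(h, w, 4) uint8, top row first -> flat float array, bottom row first."""
    arr = np.asarray(arr, dtype=np.float64) / 255.0
    return arr[::-1].ravel()


def blender_pixels_to_pil(flat, w, h):
    """Flat RGBA floats, bottom row first -> (h, w, 4) uint8, top row first."""
    arr = np.asarray(flat, dtype=np.float64).reshape(h, w, 4)[::-1]
    return np.rint(np.clip(arr, 0.0, 1.0) * 255).astype(np.uint8)
```

The output of `pil_to_blender_pixels` is in the same layout as `final.flatten()` above, so it could be assigned to `im.pixels` in the same way.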