Hi girls and guys who love Blender as I do,

(Beware - this is still theory!)

It has been a while since I stitched my own stereo VR spheres from multiple renders in Blender (see http://3futurez.com/stereoscopic-vr-sphere-toon-rendered-public-domain/ and https://blenderartists.org/forum/showthread.php?361867-PERFECT-VR-TESTCARD-done-with-blender-and-a-short-tip-how-to-do-it).

Nowadays we can do this with Blender's wonderful Multi-View option and upload the result to, for example, VRAIS (www.VRAIS.IO). Something I always wanted. Thanks to Dalai Felinto for this feature!

Yet equirectangular VR renderings have limitations, such as a fixed head position and broken immersion when you tilt your head sideways (the baked-in stereo offset only matches level eyes).

As I progress in writing the book “the cinematic VR formula”, I wonder whether the following approach (or call it a hack) might add parallax to rendered VR scenes, even when I tilt my head. I hope some of you can answer my questions below, because if the idea works, it would improve our VR viewing experience significantly.

My thoughts are based on the fact that light field data gives sufficient results even if you don’t capture a complete camera array of your scene, but only the cameras on the outer frame plus an X across the inside of the frame, and then interpolate the missing positions.

Now to my approach:

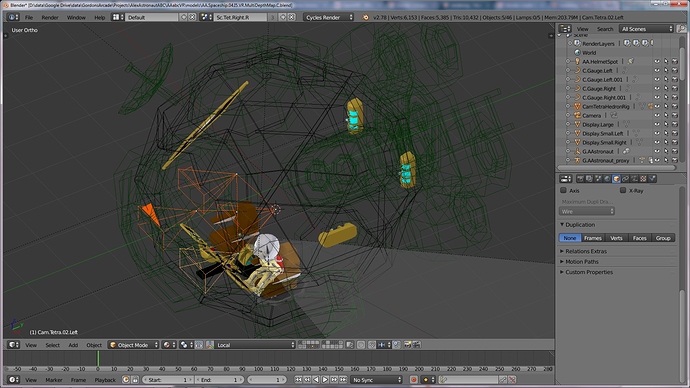

Imagine we had not only the colour information of our equirectangular (ER) scene, in mono or stereo, but also depth information from four camera positions placed at the vertices of a tetrahedron: right, left, top and rear, each rendered as an ER image.
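To make the camera layout concrete, here is a minimal sketch that computes positions for four cameras on the vertices of a regular tetrahedron centred on the nominal head position. The orientation (Z up, viewer facing -Y, so "rear" lies on +Y) and the function name `tetrahedron_cameras` are my own assumptions, not part of any Blender API.

```python
import math

def tetrahedron_cameras(radius):
    """Positions of four cameras on a regular tetrahedron centred on the origin.

    Assumptions (hypothetical layout): Z is up, the viewer faces -Y, so the
    'rear' camera lies on +Y. 'radius' is the distance from the centre
    (the nominal head position) to each camera.
    """
    ring = radius * 2.0 * math.sqrt(2.0) / 3.0  # horizontal distance of the lower three cameras
    low = -radius / 3.0                          # height of the lower three cameras
    return {
        "top": (0.0, 0.0, radius),
        "rear": (0.0, ring, low),
        "right": (ring * math.cos(math.radians(-30)), ring * math.sin(math.radians(-30)), low),
        "left": (ring * math.cos(math.radians(210)), ring * math.sin(math.radians(210)), low),
    }

if __name__ == "__main__":
    for name, pos in tetrahedron_cameras(0.1).items():
        print(name, tuple(round(c, 4) for c in pos))
```

Inside Blender you could assign each tuple to a camera object's `location`, e.g. `bpy.data.objects["CamTop"].location = cams["top"]`, where the object name `CamTop` is hypothetical.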

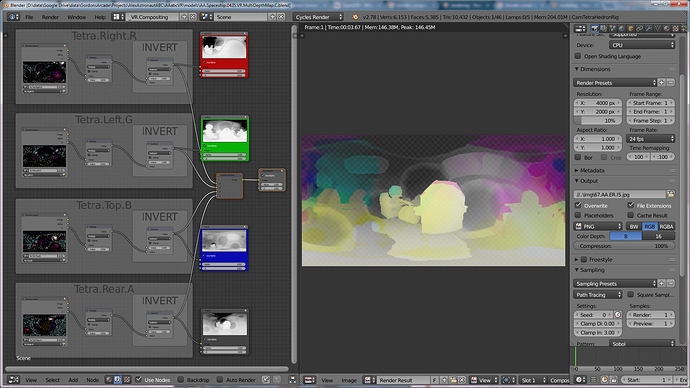

An ordinary stereo ER VR scene contains two images, e.g. arranged over-under. We could (ab)use one of them to store the depth information of the four cameras, one camera per channel: red, green, blue and alpha. Voilà: four ER depth images, plus the other colour image as the “texture” for the depth maps.
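Here is a minimal sketch (Python with NumPy) of the channel packing described above, assuming depth in metres and a chosen near/far range. Storing normalised inverse depth instead of linear depth is my own assumption, meant to give nearby geometry, where parallax matters most, more of the 256 levels an 8-bit channel offers. All function names are hypothetical.

```python
import numpy as np

def pack_depth_rgba(depths, near, far):
    """Pack four H x W equirectangular depth maps (metres) into one RGBA uint8 image.

    Channel order follows the input order, e.g. right, left, top, rear.
    Stores normalised inverse depth (1 at 'near', 0 at 'far'); values
    outside [near, far] are clamped.
    """
    stack = np.stack([np.clip(d, near, far) for d in depths], axis=-1)  # H x W x 4
    inv = (1.0 / stack - 1.0 / far) / (1.0 / near - 1.0 / far)
    return np.round(inv * 255.0).astype(np.uint8)

def unpack_depth_rgba(rgba, near, far):
    """Inverse of pack_depth_rgba: H x W x 4 float depths in metres (quantised)."""
    inv = rgba.astype(np.float64) / 255.0
    return 1.0 / (inv * (1.0 / near - 1.0 / far) + 1.0 / far)

def over_under(color_rgb, depth_rgba):
    """Colour panorama on top (opaque alpha), packed depth below; both H x W."""
    alpha = np.full(color_rgb.shape[:2] + (1,), 255, dtype=np.uint8)
    top = np.concatenate([color_rgb.astype(np.uint8), alpha], axis=-1)
    return np.concatenate([top, depth_rgba], axis=0)
```

Keep in mind that 8 bits per channel gives only 256 depth steps, and JPEG has no alpha channel and its lossy compression would corrupt the depth values, so a lossless container such as PNG would be the safer choice for the packed image.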

Now to my questions, as I am no math expert:

-

Would it work (or under what circumstances would it work) to “interpolate” depth for an arbitrary viewer position inside the tetrahedron from the four depth maps, with mostly accurate results? I assume (as in my example) that it becomes problematic if objects are inside the tetrahedron itself or too close to it. I also expect problems with geometry that is not visible from any of the outer camera positions but would be visible from positions in between.
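The geometric core of this question can be sketched as follows: turn an ER pixel plus its depth into a 3D point, then look at that point from the viewer's position. Barycentric weights tell you where the viewer sits inside the tetrahedron (all four weights non-negative means inside) and could, as one possible heuristic, be used to prefer samples from the nearest cameras. This is a minimal Python/NumPy sketch under stated assumptions: depth is the radial distance from each camera, all four ER cameras share one orientation, Z is up and u = 0.5 looks along +Y. It does not solve occlusion or the hidden-geometry problem mentioned above, and all function names are hypothetical.

```python
import numpy as np

def er_to_direction(u, v):
    """Equirectangular (u, v) in [0, 1] -> unit direction (assumed: Z up, u = 0.5 looks along +Y)."""
    lon = (u - 0.5) * 2.0 * np.pi
    lat = (0.5 - v) * np.pi
    return np.array([np.cos(lat) * np.sin(lon), np.cos(lat) * np.cos(lon), np.sin(lat)])

def direction_to_er(d):
    """Unit direction -> equirectangular (u, v); inverse of er_to_direction."""
    lon = np.arctan2(d[0], d[1])
    lat = np.arcsin(np.clip(d[2], -1.0, 1.0))
    return lon / (2.0 * np.pi) + 0.5, 0.5 - lat / np.pi

def reproject(u, v, depth, cam_pos, viewer_pos):
    """Move one depth sample from a tetrahedron camera to the viewer's eye.

    Assumes 'depth' is the radial distance from the camera and that all
    cameras share the same orientation. Returns (u, v, depth) seen from viewer_pos.
    """
    point = np.asarray(cam_pos, float) + depth * er_to_direction(u, v)
    offset = point - np.asarray(viewer_pos, float)
    dist = np.linalg.norm(offset)
    u2, v2 = direction_to_er(offset / dist)
    return u2, v2, dist

def barycentric_weights(p, verts):
    """Weights of point p relative to the four tetrahedron vertices (they sum to 1)."""
    v = np.asarray(verts, float)
    m = (v[1:] - v[0]).T  # columns are the three edges leaving vertex 0
    l123 = np.linalg.solve(m, np.asarray(p, float) - v[0])
    return np.concatenate([[1.0 - l123.sum()], l123])
```

One possible way to use this in a viewer would be a forward warp: run `reproject` for every pixel of all four depth maps, keep the nearest depth where several samples land on the same output pixel, and leave holes where no camera saw the surface. Those holes are exactly the disocclusion problem raised in the question.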

-

Of course, and related to the first point, it is also a problem that parts of the ER texture will be missing (or at the wrong coordinates) depending on your viewpoint, since there is only one colour image. Any suggestions on how to store texture information from more angles without using more image space?

-

Since the OpenEXR format can also store Z (depth) information, are there other ways or formats to calculate depth and apply textures to it on the fly, in real time (using a game engine)? Could the OpenEXR MultiLayer format do this without the file size exploding? Is there any other format that could handle it, such as Alembic (thinking of Google Spotlight Stories)?

-

I have met Tristan from Nozon.com twice. With PresenZ (http://nozon.com/presenz) they offer parallax VR experiences: they take your 3D data and render it into a magic format that also provides parallax in VR stills and videos. (You should definitely check out their demo, e.g. from http://store.steampowered.com/app/404020/.) Nozon was acquired by Starbreeze last year (https://www.starbreeze.com/2016/10/starbreeze-acquires-visual-effects-studio-and-presenz-technology-creator-nozon-for-7-1-meur/). Do you know whether their approach is similar to my idea or whether it works completely differently?

Here are some image references:

a) The VR sphere (equirectangular stereo; shown as a photosphere view in a browser): http://vrais.io/?Kvvz6n (I hope you can see it, as it hasn’t been approved yet)

b) 2017-01-21a.VR_DepthmapCamTetrahedron.jpg

c) 2017-01-21b.VR_DepthmapRendering.jpg

d) 2017-01-21d.VR_DepthmapTextureOverUnder.jpg (how it would be stored for real-time processing by the VR viewer; this is a mock-up image with no real transparency).

Thank you for thinking this through with me!

Kind regards

Chuck Ian Gordon