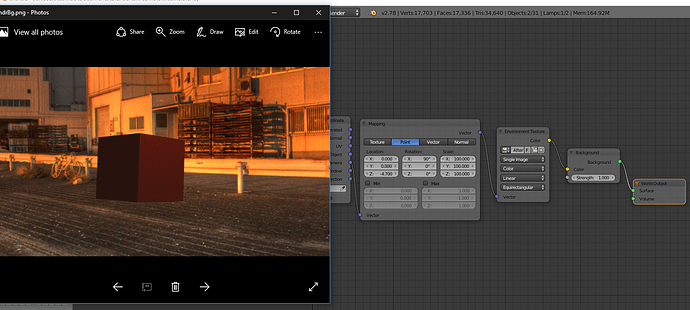

Hello. I have added an HDR image I picked up off Wikipedia (https://upload.wikimedia.org/wikipedia/commons/b/b6/Afterglow_of_a_sunset.jpg) and applied it to the world as an environment texture.

But it looks poor to me. For now I have left the sampling at 128.

How can I improve its looks?

And how can I use it for lighting? (Feel free to point me to a good tutorial.)

Thank you

It’s not HDR for a start. It’s a JPG, which doesn’t have the dynamic range of a true HDR, not to mention that JPG is a lossy format anyway, meaning you lose even more detail. That’s not to say you can’t use them, but don’t expect great results.

Start here for real HDR images http://www.hdrlabs.com/sibl/archive.html

Thank you. Headed there and will grab a true HDR.


May I ask why you are using Blender Internal rather than Cycles?

Quick disambiguation of HDR and HDRI:

HDR means High Dynamic Range. I am not talking about the detail/tonemapping fever from ten years ago. What you have is tonemapped, detail-craze 8-bit garbage from 10 years ago. Nothing personal, lemme explain:

Tonemapping is taking one image and tweaking the values within that one image to come closer to the look of HDR. HDR is still made from multiple exposures, though there is a camera being developed at MIT to tackle half of that problem.

You can tell it’s just a tonemap when the clouds are black. Also makes it completely useless, as you noticed.

Photographically, HDR is still merging several photos into one.

For photographic arts it comes from physical limitations of equipment to capture light in bright and dark areas at the same time - point your phone out the window and you’ll probably see what happens to the interior. Any image made in HDR is an HDRI (HDR Image).

HDRI in CG has one important difference from the photographic kind: it is a 32-bit image format. For photos there is no reason to display stuff in more than 8-bit format, so after all the calculations are made to create the HDR, the image gets written to a JPG, PNG, TIFF… in 8-bit format. To use an HDRI for lighting a scene you need 32 bits. Maybe 16. 8 is just a placeholder.

So when you look for HDRs, pay attention to large files (my most recent HDRI is an 850MB TIFF, but that’s really extreme) in TIFF, EXR, HDR formats. PNG can support 16-bit, so yeah, maybe.

One last thing: you’ll see tutorials saying you can make a 32b HDR from a single raw photo exposure. You can’t. You can try, but it’s not the same.

Edit: I said quick. lol.

Tonemapped images aside (which is another topic not related to lighting reproduction or actual dynamic range) a high dynamic range image is an image that has high dynamic range. The question is, high relative to what. It can be taken in two ways: higher than some other, low dynamic range or high enough to capture all the data we need.

If we call standard video, which has about 6-7 stops’ worth of range, the low dynamic range image, we can call everything above that high dynamic range data. This includes both modern still and digital cinema cameras with 14 to 16 stops.

If we need to capture for example 30 stops of range, we have to merge different exposures because we don’t yet have sensors capable of capturing that range.
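To put rough numbers on the stops mentioned above, here is a minimal sketch. Each stop doubles the light, so N stops is a 2^N : 1 contrast ratio. The bracket count is an idealized estimate: the function names and the 2-stop spacing between exposures are my own assumptions, and it ignores the overlap you want in practice to keep noise down.

```python
import math

def contrast_ratio(stops):
    """Each stop doubles the light, so N stops span a 2**N : 1 ratio."""
    return 2.0 ** stops

def exposures_needed(scene_stops, sensor_stops, step_stops):
    """Idealized number of bracketed exposures, spaced step_stops apart,
    needed for a sensor covering sensor_stops to span scene_stops."""
    if scene_stops <= sensor_stops:
        return 1
    extra = scene_stops - sensor_stops
    return 1 + math.ceil(extra / step_stops)

print(contrast_ratio(7))             # standard video, about 7 stops
print(exposures_needed(30, 14, 2))   # 30-stop scene, 14-stop sensor, 2-stop steps
```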

Bit depth is important for storing the image data. We can store an HDR image with 8 bits per channel, but we get horrible banding due to big quantization steps. With 16bpc we can already either save the data directly as scene-linear data (using half-float EXR, for example) or use a transfer curve to map the scene-linear data into the limited range of integer storage that TIFF, PNG, DPX etc. use. This is one of the main purposes of the different log curves.
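A small sketch of why linear integer storage bands in the shadows: it counts how many integer code values land in each stop below white. The function names are made up for illustration, and the "log" case is an idealized pure-log curve, not any specific camera curve.

```python
def codes_per_stop_linear(bits, stops):
    """Integer code values available in each stop below white
    when scene-linear data is stored as plain integers."""
    hi = 2 ** bits - 1
    counts = []
    for _ in range(stops):
        lo = hi // 2          # one stop down is half the value
        counts.append(hi - lo)
        hi = lo
    return counts

def codes_per_stop_log(bits, stops):
    """An idealized pure-log curve spreads the codes evenly across stops."""
    return (2 ** bits - 1) / stops

print(codes_per_stop_linear(8, 8))   # brightest stop first
print(codes_per_stop_log(8, 8))
```

With 8 bits the brightest stop gets half of all the codes and the eighth stop down gets a single one, which is where the banding comes from. The log version gives every stop the same share.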

32 bits per channel is nice, but for color data storage (not manipulation as in compositing) it actually is overkill, especially for linear float data. For integer storage it could be necessary in some situations but I would say there is no real practical difference in appearance.

From above I would argue against that. A camera that can capture 15 stops is an HDR camera by most definitions.

I’ve yet to get an environment map which casts a crisp shadow from a single layer of footage. I’ll try it out. What I meant was when you see people taking a raw image, making three or five or seven from that one and merging them back into one, that does not work. Be it noise in the image or what it may, results were never good for me.

Yeah, splitting up a camera raw image into multiple low dynamic range JPEGs or whatnot and then merging them together is just dumb. The camera raw image is in itself already a linear HDR image (within the camera sensor’s dynamic range limits, of course); it just has to be scaled properly because sensor data is fit into the 0-1 range (no signal up to maximum signal from a photosite). Most camera raw processing software meant for photography (like Lightroom) doesn’t give you a pure debayered raw image. It already applies a tone mapping curve, and any kind of nonlinear tonemapping breaks the relationship between code values and light intensity, which you need for proper rendering and lighting reproduction. But using dcraw you can extract the original scene-linear data from camera raw files and do whatever you want with it.
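As a rough sketch of the scaling step described above: map raw sensor values into the 0-1 range, then apply an exposure gain in stops. The `black_level` and `white_level` parameters and the sample numbers are hypothetical; real values depend on the camera and come from the raw file's metadata.

```python
def normalize_raw(raw_values, black_level, white_level):
    """Map raw sensor counts to 0-1: black_level is 'no signal',
    white_level is the maximum signal from a photosite."""
    span = white_level - black_level
    return [max(0.0, (v - black_level) / span) for v in raw_values]

def scale_exposure(linear_values, stops):
    """Scale scene-linear values by a whole exposure gain (2**stops).
    Values above 1.0 are kept, not clipped, so the data stays linear."""
    gain = 2.0 ** stops
    return [v * gain for v in linear_values]

# Hypothetical 14-bit sensor counts
linear = normalize_raw([512, 8448, 16384], black_level=512, white_level=16384)
print(linear)
print(scale_exposure(linear, 1))
```

The point is that only a single multiplication is applied, so code values stay proportional to light intensity.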

Getting a crisp shadow from an HDR image needs, first, an image with high enough resolution to get a light source small enough to produce crisp shadows in the first place. Second, it needs light intensity differences big enough to produce a strong shadow. Third, the render engine must be capable of efficiently sampling that small light source area in the HDR image to actually produce that shadow.
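To get a feel for the resolution point, here is a back-of-the-envelope sketch. It assumes an equirectangular map (the usual layout for environment textures, though the thread does not say so) whose width covers 360 degrees, and uses the sun's angular diameter of roughly 0.53 degrees. The function name is mine.

```python
def light_width_px(map_width_px, angular_diameter_deg):
    """Width in pixels of a light source on an equirectangular map
    whose full width spans 360 degrees."""
    return map_width_px * angular_diameter_deg / 360.0

SUN_DIAMETER_DEG = 0.53  # approximate angular diameter of the sun

for width in (2048, 8192, 16384):
    print(width, round(light_width_px(width, SUN_DIAMETER_DEG), 1))
```

On a 2048-pixel-wide map the sun is only about three pixels across, so any blur or resampling spreads its energy over a noticeably larger area and the shadows soften.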

Most downloadable free HDRs are still clipped, especially if the sun is present. The sun in them is very bright, but nowhere close to the real deal. I’ve also seen HDRs that are technically LDRs because all pixels fit within the LDR range (no overbright pixels). In this case using a JPG would actually work just fine. Also, an HDR tonemapped to LDR can still be used for camera rays unless you want to tonemap it yourself (or choose to live with burned-out brightness). Useless? No. But I wouldn’t use it for lighting.
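A quick way to check whether a file is really "technically LDR" is to look at its pixel values once loaded as floats. This is a minimal sketch working on a plain list of scene-linear values; loading the image is left out, and the function names and the 1.0 white point are my assumptions.

```python
import math

def has_overbright(pixels, white=1.0):
    """True if any pixel exceeds display white, i.e. the image
    holds data an 8-bit LDR file could not store."""
    return any(v > white for v in pixels)

def range_in_stops(pixels):
    """Stops between the darkest non-zero and the brightest pixel."""
    positive = [v for v in pixels if v > 0.0]
    if not positive:
        return 0.0
    return math.log2(max(positive) / min(positive))

ldr_like = [0.1, 0.9, 1.0]
hdr_like = [0.25, 1.0, 4.0]
print(has_overbright(ldr_like), has_overbright(hdr_like))
print(range_in_stops(hdr_like))
```

This will not tell you whether the sun was clipped at capture time, only whether the stored values go beyond what an LDR file could hold.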

In my own setup I have a node group where I can scale up the brightest parts of the scene (like the sun). It goes linearly up to a certain threshold of choice (varies per HDR), and then switches to exponential rate of change until a certain max brightness of my choice. This makes it easy for me to preview certain HDR looks (with hard shadows and proper brightness), but once I have settled on a look I replace it with a mimicked lighting setup using area lights (or portals or sky texture) combined with a sun lamp. I’m usually doing progressive GPU renders and I have to keep memory usage in a strict regime. That approach also converges way faster (less noise) than using actual HDRs.
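For anyone wanting to try something similar, here is one possible shape for such a curve, written as plain Python rather than nodes. It is only a guess at the idea, not the actual node group: the function name, the `rate` parameter, and the exact formula are all assumptions.

```python
import math

def boost_highlights(value, threshold, rate, max_brightness):
    """Leave values up to threshold untouched, grow exponentially
    above it, and cap the result at max_brightness.
    With rate * threshold >= 1 the output never falls below the input."""
    if value <= threshold:
        return value
    # Continuous at the threshold: exp(0) == 1, so boosted == threshold there
    boosted = threshold * math.exp(rate * (value - threshold))
    return min(boosted, max_brightness)

for v in (0.5, 1.0, 2.0, 10.0):
    print(v, boost_highlights(v, threshold=1.0, rate=2.0, max_brightness=1000.0))
```

The threshold and maximum would be picked per HDR, as described above, so that only the sun and similar hot spots get pushed up.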