I like rigging. I thought I’d start a thread where I can post various tricks that I discover.

I think rigging is very modular. I collect techniques and basic tools and add them to my list of things I can do. It’s like building something mechanical: you know what gears do, you know what screws do, you know what belts do, and then you think about what you can put together out of all the basic machines you have. The basic machines themselves are very abstract.

One of the things Blender does is use a relatively smart IK algorithm. It looks at the existing angles and says, “Okay, I think you want me to apply angle restrictions to this structure like so-and-so.” That reduces twitch and reduces the amount of work you have to do.

Rather than basing these IK angles on the default pose, it looks at the current pose. Again, that’s smart: it lets animators tweak these values.

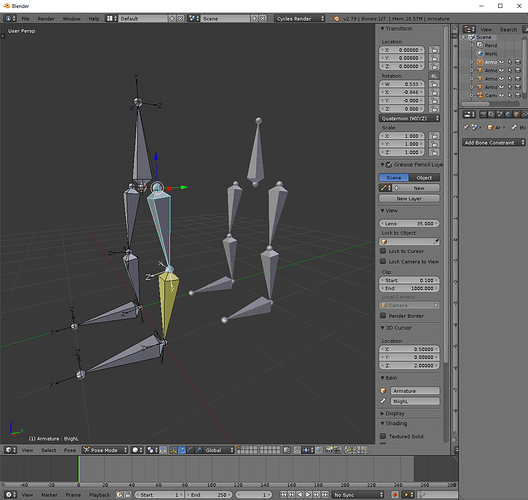

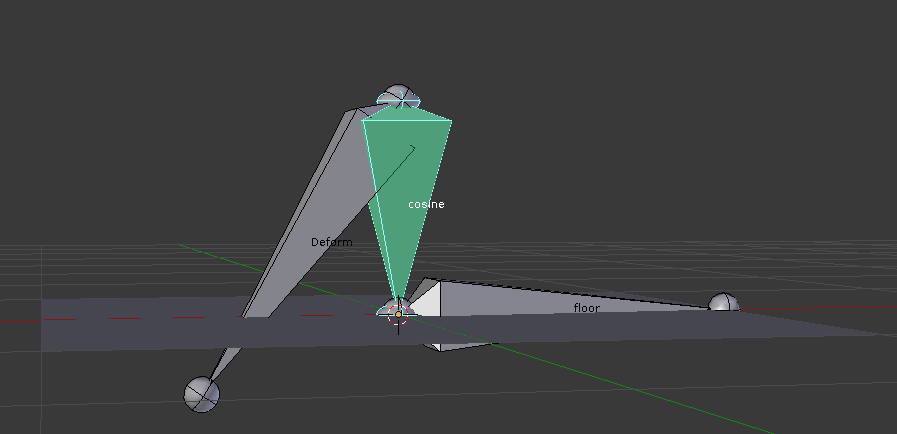

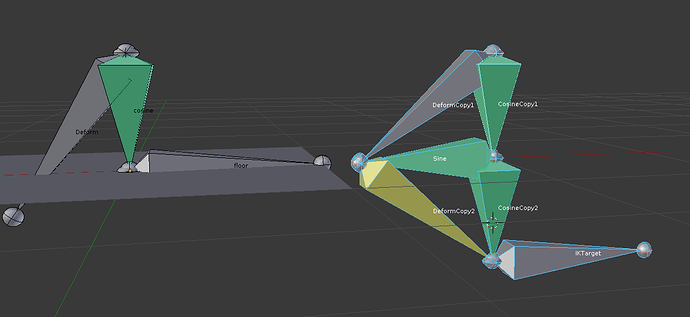

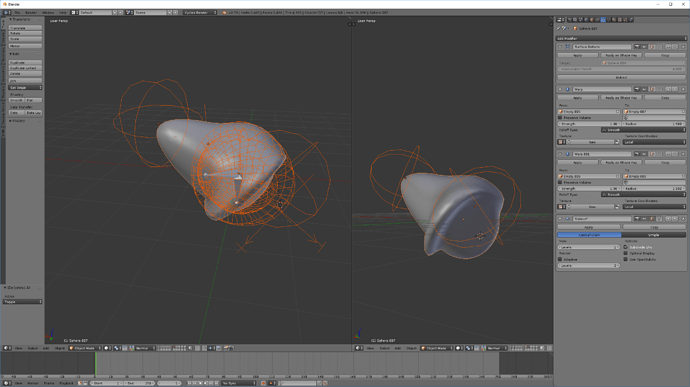

Just to demo, here are two identical skeletons, abstractions of IK legs. The rear skeleton is in its default pose. The left thigh of the front skeleton has been rotated, and you can see that the IK is reinterpreting the knee as more of a chicken-walker knee thanks to that rotation. That’s kind of handy.

But the only way to get the IK to reinterpret the angles is via manual transformation. You can’t use constraints to set the angle.

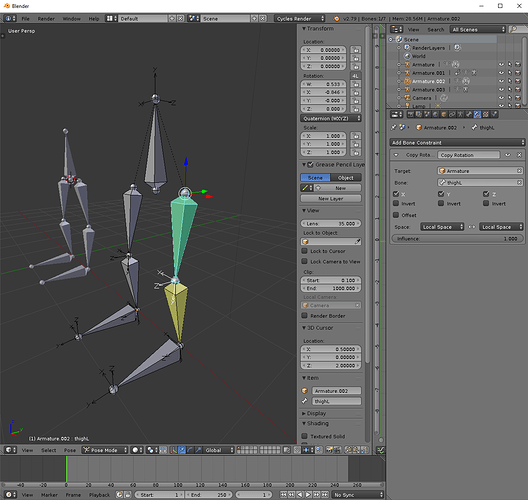

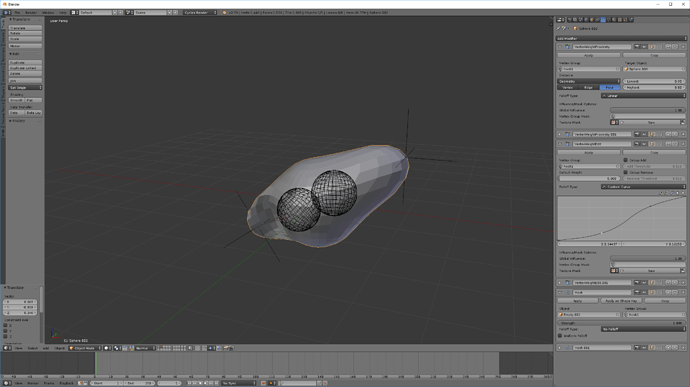

Here I’ve got another almost identical copy of the skeleton, and I’m trying to copy the rotation of the posed chicken-walker thigh to my new armature via a constraint, but it doesn’t do anything. Bones in IK chains don’t handle constraints very well.

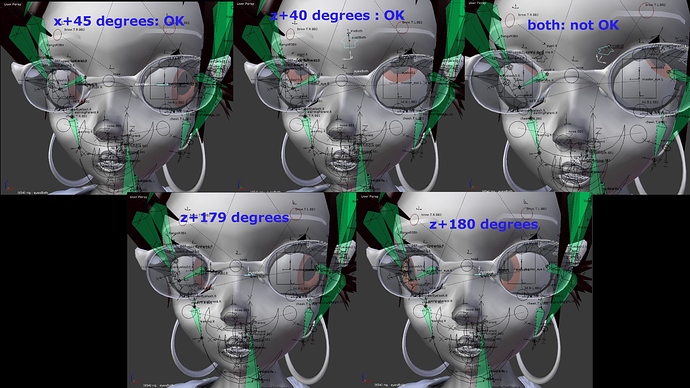

Why is this a problem? Actually posing the thigh so the leg turns into a chicken walker is kind of tough. The animator doesn’t get smooth feedback telling them they’re getting close to the IK behavior they want, because there’s a threshold effect. And what if the rigger wants to spare the animator the trouble of posing the thigh? What if they want to control the IK behavior mathematically, based on the parameters of other bones? Then this fancy IK behavior is useless to them.

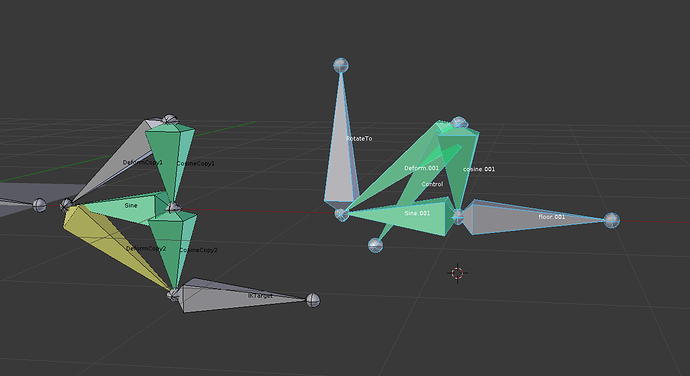

But here’s something you can do.

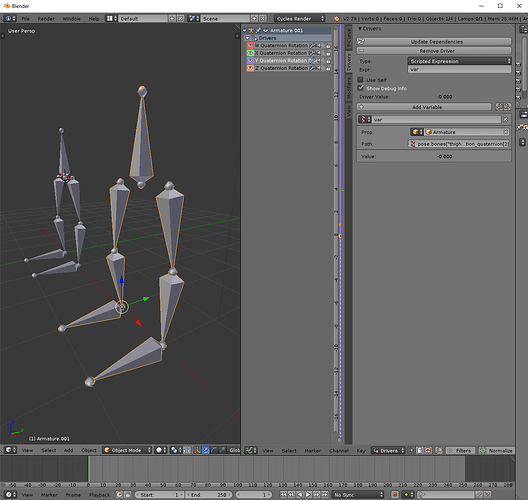

Rather than using a constraint to copy rotation, you can use a driver to drive the transformation. Because you’re driving the raw transformation, you can slip some manipulation in early, before the IK decides what its base angles are. Here, I’m driving it from a raw copied data path, but you can also drive it from the transform channel data of individual bones, including bones in the same armature.
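Here’s a minimal sketch of that kind of driver set up through Blender’s Python API. It assumes an armature object named `Armature` with an IK-chain bone `thigh.L` and a separate control bone `knee_ctrl` in the same armature. All three names are hypothetical, so rename them to match your rig. It adds a scripted driver to one Euler channel of the thigh’s raw rotation and feeds it the control bone’s local X rotation through a Transform Channel variable, scaled by a gain and shifted by an offset. The gain and offset are placeholder values, not tuned numbers.

```python
import math

try:
    import bpy  # only importable inside Blender
except ImportError:
    bpy = None

# Hypothetical names: change these to match your own rig.
ARMATURE_NAME = "Armature"
THIGH_BONE = "thigh.L"      # IK-chain bone whose raw rotation gets driven
CONTROL_BONE = "knee_ctrl"  # bone whose rotation feeds the driver


def driver_expression(gain: float, offset_deg: float) -> str:
    """Build a scripted-driver expression: scale the input, then add an offset in radians."""
    return f"var * {gain!r} + {math.radians(offset_deg)!r}"


def add_thigh_driver(gain: float = 1.0, offset_deg: float = 0.0, axis: int = 0):
    """Drive one Euler channel of the thigh from the control bone's local X rotation."""
    arm = bpy.data.objects[ARMATURE_NAME]
    thigh = arm.pose.bones[THIGH_BONE]
    # Pose bones default to quaternion rotation; switch to Euler so the
    # rotation_euler channel we drive is the one actually used.
    thigh.rotation_mode = 'XYZ'

    fcurve = thigh.driver_add("rotation_euler", axis)
    drv = fcurve.driver
    drv.type = 'SCRIPTED'

    # Transform Channel variable reading another bone in the same armature.
    var = drv.variables.new()
    var.name = "var"
    var.type = 'TRANSFORMS'
    target = var.targets[0]
    target.id = arm
    target.bone_target = CONTROL_BONE
    target.transform_type = 'ROT_X'
    target.transform_space = 'LOCAL_SPACE'

    drv.expression = driver_expression(gain, offset_deg)
    return fcurve


if bpy is not None and __name__ == "__main__":
    # Placeholder values: a one-to-one mapping with a -30 degree bias.
    add_thigh_driver(gain=1.0, offset_deg=-30.0)
```

Run it from Blender’s Text Editor; outside Blender only `driver_expression` does anything. If you’d rather drive from a copied data path, as in my screenshot, the variable would be a Single Property (`'SINGLE_PROP'`) with the pasted path in `target.data_path` instead of a Transform Channel. Either way, the driver writes the thigh’s own rotation channel rather than going through a constraint, so the IK should see it as part of the current pose.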