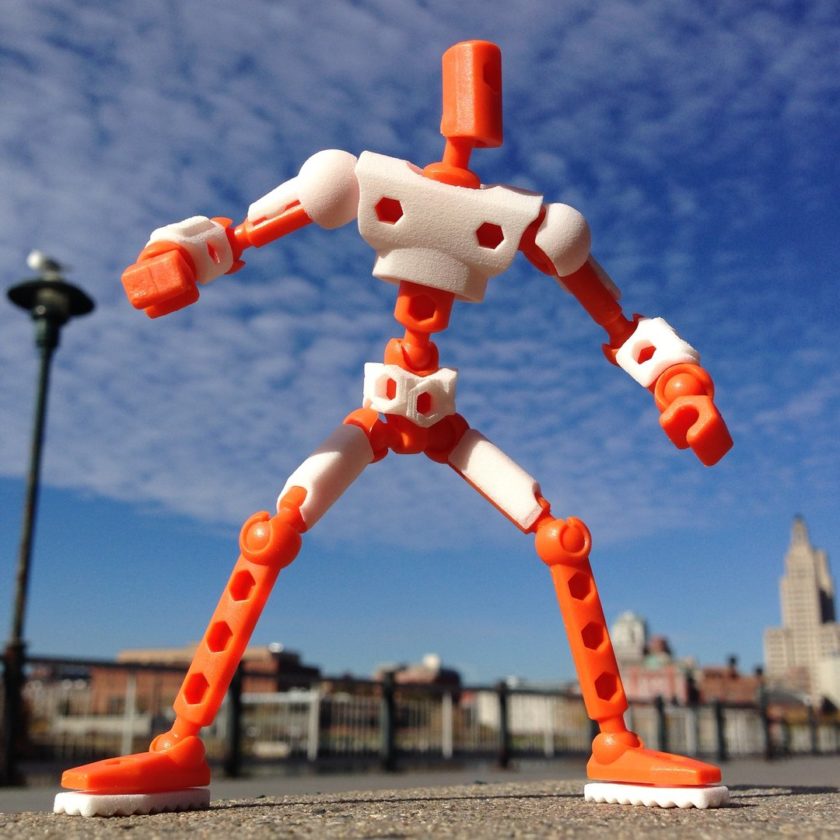

The concept is this: use 3D-printed, ball-joint snap-fit models that are fully poseable and represent your model in 3D space. A webcam would then read the figure, using simple markers on the limbs (such as plus signs, boxes, and hexagons) to identify where each limb is placed, capture its coordinates in real space, and apply them back into digital space.
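As a rough illustration of the "read the marker, capture the coordinates" step, here is a minimal sketch. It assumes an earlier detection step (not shown) has already found each marker's outline as a list of corner points in the image. It classifies the marker by corner count (box = 4, hexagon = 6, plus = 12) and then estimates the marker's 3D position with a pinhole camera model, inferring depth from the marker's known physical width. The camera intrinsics, the marker width, and the shape-to-limb mapping are all hypothetical values chosen for illustration, and the depth estimate assumes the marker roughly faces the camera.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]

# Hypothetical mapping: corner count -> marker shape -> limb it is glued to.
SHAPE_BY_CORNERS = {4: "box", 6: "hexagon", 12: "plus"}
LIMB_BY_SHAPE = {"plus": "left_forearm", "box": "right_forearm", "hexagon": "head"}


@dataclass
class Camera:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point x in pixels
    cy: float  # principal point y in pixels


def classify(corners: List[Point]) -> str:
    """Name the marker shape from how many corners its outline has."""
    return SHAPE_BY_CORNERS.get(len(corners), "unknown")


def marker_to_3d(corners: List[Point], cam: Camera,
                 marker_width_mm: float) -> Tuple[float, float, float]:
    """Estimate the marker centre in camera space (mm) from its image outline."""
    xs = [p[0] for p in corners]
    ys = [p[1] for p in corners]
    width_px = max(xs) - min(xs)
    if width_px <= 0:
        raise ValueError("marker has zero width in the image")
    # Similar triangles: apparent size shrinks in proportion to distance.
    z = cam.fx * marker_width_mm / width_px
    # Mean of the corners; equals the centre for these symmetric shapes.
    u = sum(xs) / len(xs)
    v = sum(ys) / len(ys)
    # Back-project the image centre through the pinhole model.
    x = (u - cam.cx) * z / cam.fx
    y = (v - cam.cy) * z / cam.fy
    return (x, y, z)


if __name__ == "__main__":
    cam = Camera(fx=800.0, fy=800.0, cx=320.0, cy=240.0)
    box = [(400, 220), (440, 220), (440, 260), (400, 260)]
    shape = classify(box)
    print(shape, LIMB_BY_SHAPE[shape], marker_to_3d(box, cam, marker_width_mm=10.0))
```

A 10 mm box that appears 40 px wide to this assumed camera works out to about 200 mm away. A real setup would more likely use a calibrated camera and a pose-estimation routine, but the geometry is the same idea.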

You might have multiple figures in a scene. Each figure could be attached to an arm that lets the character lift off the ground plane, with a marker and/or color on the chest registering which figure it is.

The camera and machine learning would replace the servos used in traditional puppeteering rigs, while still letting the figures be moved freely.

Advance the frame, move the figure, advance five frames, move it more, and repeat as needed to complete the sequence.
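Capturing a pose only every few frames implies the software fills in the frames in between. One simple way to do that is sketched below, assuming each captured pose is a mapping from limb name to a 3D position and using plain linear interpolation. This is an illustrative choice, not part of the original idea; a production rig would more likely interpolate joint rotations (for example with quaternion slerp) and use eased curves.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
Pose = Dict[str, Vec3]  # hypothetical: limb name -> captured 3D position


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linearly blend two positions; t=0 gives a, t=1 gives b."""
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def fill_frames(keyframes: Dict[int, Pose]) -> Dict[int, Pose]:
    """Expand poses captured at some frames into a pose for every frame."""
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    out: Dict[int, Pose] = {}
    for start, end in zip(frames, frames[1:]):
        a, b = keyframes[start], keyframes[end]
        span = end - start
        for f in range(start, end):
            t = (f - start) / span
            # Only blend limbs that were captured in both keyframes.
            out[f] = {limb: lerp(a[limb], b[limb], t) for limb in a if limb in b}
    last = frames[-1]
    out[last] = dict(keyframes[last])
    return out


if __name__ == "__main__":
    # Pose captured at frame 0, figure moved, then captured again at frame 5.
    captured = {
        0: {"head": (0.0, 0.0, 0.0)},
        5: {"head": (10.0, 0.0, 0.0)},
    }
    for frame, pose in sorted(fill_frames(captured).items()):
        print(frame, pose["head"])
```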

Take an old concept and make it new again with advanced techniques. If anyone is interested in working with me on this, please let me know.