Hey, thanks!

Indeed, most of the editing decisions are made at the storyboarding stage (done in Storyboard Pro); we then just extend or shorten strips a bit at the end, once animation is done.

Linked scenes can be useful when setting up characters and cameras in a shot, or when we want to move away from the storyboard and try different things without intermediate rendering, but that's not a common case.

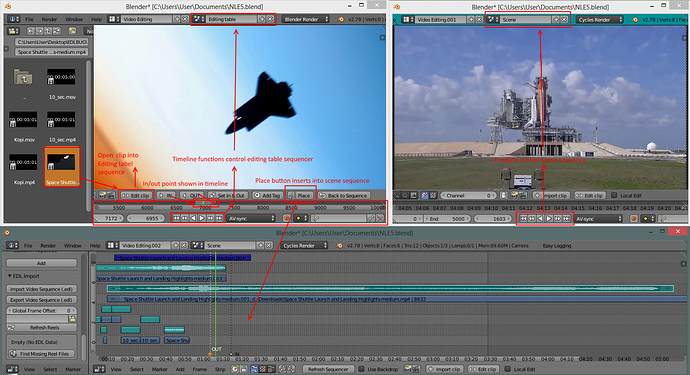

I'm currently reworking the whole thing to be more efficient, so we're no longer forced to link scenes into the edit. That was useful at the time because it let us open shots from the edit easily, but it also forced us to link every asset into the edit file, which increased loading times.

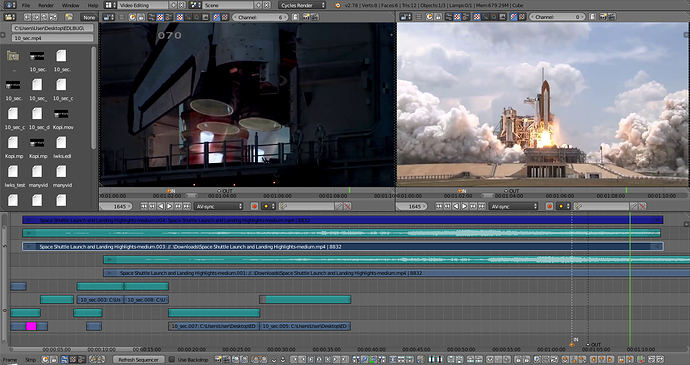

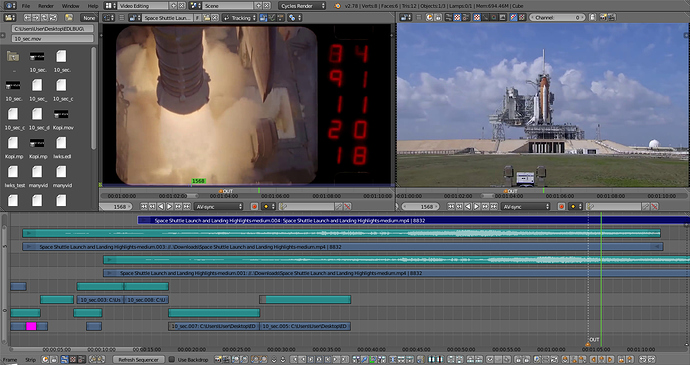

Now each shot is encapsulated in a metastrip, and it's possible to switch between full render, OpenGL, or playblast render. The filepath to the shot .blend is stored as a custom property of the metastrip, so there is no need to link the scene into the edit anymore. Everything can also be rendered from the edit file, locally or on the render farm (CGRU/Afanasy).
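A minimal Python sketch of the idea, assuming a hypothetical custom property named `shot_filepath` on each shot metastrip (the real property name and the farm submission aren't described here). It only collects the tagged metastrips and builds local background-render command lines using Blender's `-b` (background) and `-a` (render animation) command-line flags:

```python
from typing import Iterable, List, Tuple

# Hypothetical custom property name holding the shot's .blend path.
SHOT_PROP = "shot_filepath"


def collect_shots(strips: Iterable) -> List[Tuple[str, str]]:
    """Return (strip name, .blend path) for every metastrip tagged as a shot."""
    shots = []
    for strip in strips:
        # In this setup only metastrips wrap shots; other strips are skipped.
        if strip.type != 'META':
            continue
        # bpy strips expose custom properties through .get(), like a dict.
        path = strip.get(SHOT_PROP)
        if path:
            shots.append((strip.name, path))
    return shots


def render_commands(shots: List[Tuple[str, str]], blender_binary: str) -> List[List[str]]:
    """Build one command per shot: open its .blend in background (-b) and render the animation (-a)."""
    return [[blender_binary, "-b", path, "-a"] for _, path in shots]
```

Inside Blender you could pass `bpy.context.scene.sequence_editor.sequences_all` (newer releases may expose this as `strips_all`) and `bpy.app.binary_path`, then run each command with `subprocess.run`. Keeping `bpy` out of the functions makes them testable outside Blender; sending jobs to CGRU/Afanasy instead would go through that farm's own job API, which isn't sketched here.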

As for missing tools, with Python everything we need is quite doable; it just takes time to add them. I'd also like to find a cool workflow for doing storyboarding inside Blender. It's quite similar to what we have now for setting up shots, but when storyboarding you want to try many things, and that creates a lot of shots that aren't needed in the end. So it may have to work a bit differently.

What I'd love to see added, but can't be done in Python, is on the color grading side. Maybe a few more blending modes for strips: having screen, overlay, dodge, or others alongside add and multiply would be handy.
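For reference, these are the standard per-channel formulas usually meant by screen, overlay, and (color) dodge, with add and multiply for comparison. This is a generic sketch on values in [0, 1], not Blender's internal code:

```python
def add(base: float, blend: float) -> float:
    # Brightens; clamped so the result stays in [0, 1].
    return min(1.0, base + blend)


def multiply(base: float, blend: float) -> float:
    # Darkens; a white blend (1.0) leaves the base unchanged.
    return base * blend


def screen(base: float, blend: float) -> float:
    # Multiply on inverted values, inverted back: brightens, black leaves base unchanged.
    return 1.0 - (1.0 - base) * (1.0 - blend)


def overlay(base: float, blend: float) -> float:
    # Multiply in the base's shadows, screen in its highlights (raises contrast).
    if base < 0.5:
        return 2.0 * base * blend
    return 1.0 - 2.0 * (1.0 - base) * (1.0 - blend)


def dodge(base: float, blend: float) -> float:
    # Color dodge: divide the base by the inverted blend, clamped to 1.
    if blend >= 1.0:
        return 1.0
    return min(1.0, base / (1.0 - blend))


def blend_pixel(mode, base, layer):
    """Apply a per-channel blend mode to two RGB tuples."""
    return tuple(mode(b, l) for b, l in zip(base, layer))
```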

I'm using the wipe effect to create some vignetting or to add some color blends to shots at the grading/finishing stage. It's not perfect, but it works; with a few more blending modes it would be awesome.

Sadly, when adding adjustment layers for color correction or this kind of vignetting effect, sequencer playback isn't realtime anymore, even when using proxies and only one color modifier. It would be awesome to improve playback speed in these circumstances, but I guess that may be too much work for software that's supposed to do 3D in the first place. And from what I've heard, the first VSE implementation was made in a hurry without a strong design in mind; that's why even small things can be hard to add, even now.

Having more strip modifiers and fewer effect strips could be another good thing, as right now everything is a bit mixed between effect strips, the filter panel, and modifiers. There are a few things like this that don't block anyone from doing something, but would push image manipulation a bit further while staying on the realtime side, as opposed to the compositor, which is meant for heavy image manipulation.

For me, the VSE is a great tool for 3D animation and is quite complete (as long as you can do a bit of scripting to automate things). On these low-budget, small-team projects we use only Blender, and we don't lose time switching between software.

Maybe under different circumstances, like a feature-length movie or a VFX movie, it would be easier to use a true editing application and use the VSE only for sequence management or quick review. But that's another scale of project, so having more dedicated software makes sense.

If I need to do pure video editing, I prefer other software like Shotcut or Kdenlive; they are better suited for that task (at least you can play/pause with the spacebar instead of Alt+A). They've got shot management, fast playback, and all the tools needed for editing. The VSE, on the other hand, has a few unique features that make 3D animation a breeze. For me they're two different tools with different purposes.

Sorry for the long post, I can't stop myself when I'm on a cool subject. I think I should go to sleep now.