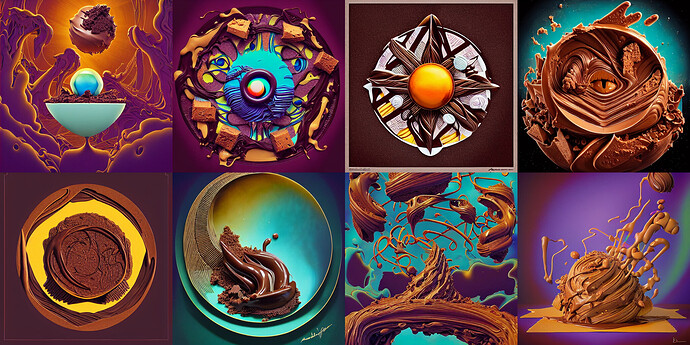

It can create these in about 10 seconds flat. It does not have to be based around food, and the possibilities are endless depending on the topic, artists and styles you feed it.

It mixes artists and styles of your choosing like a genius.

It’s superior to DALL-E 2. On release, it will be open source, unfiltered (except for illegal images) and free, and it can run on consumer graphics cards with 5 GB of VRAM.

Unfiltered, except for illegal images?

So it’s not unfiltered… How does it detect whether an image is… illegal?

It has very advanced means, like classifiers; when one is triggered, it stops iterating, so you only get a very blurry image. It’s not crude like OpenAI’s keyword bans.

But isn’t it open source? Then the filters have no meaning, since anyone can remove them.

Imagine a mad scientist who had used this technology in secret: he would have become one of the “greatest” artists of our time, due to “rarity” and limited supply (you would create only hundreds of artworks in your lifetime, and each unique painting only once).

However, now that you can get an AI to do the heavy lifting, what can you say? That it costs nothing? That everybody can click and create something?

Even though you get really great images, psychologically it looks to me like you end up accepting 1,000,000 different ideas without settling on any of them.

You can do anything except what your life purpose would be. (Even if your life purpose is something boring, such as creating 3D art, it is still something to keep you busy.)

These are just random thoughts… Don’t think of them in strict terms.

I would look at it more in the sense of preventing someone from accidentally creating/publishing something that is very similar to an existing image.

As with every tool, besides the good things one can do with it, there are harmful uses. Just as it is impossible to prevent people from writing something harmful in a text editor or creating something harmful in Blender, it is not possible to prevent someone from creating harmful results with this sort of tool.

I am getting the impression (not just from your post) that many look at this sort of technology as if it makes what they are doing less valuable. Even though that might be correct to a certain degree, more often than not, it is a sign that a change is going to take place.

When photography was invented, there was a huge shift. The same happened when sound recording was invented, and again when music synthesizers were invented. When samples started to sound very realistic, there was a lot of fear among musicians that they would become obsolete. That didn’t happen, but things changed and shifted. Even though sound recording exists and sampled music can sound almost realistic, neither beats the experience of a live concert.

It occurred to me that this is no threat to 3D artists. Some people already like using the Sketchfab or BlenderKit add-ons to load other people’s objects into the scene, saving a shed load of time and effort, and they download other people’s lighting setups and materials. It’s not so scary; it’s just a tool that changes how we do art. For 3D artists, these AI images can only be used as reference material or texture information to build on… Creative people should be able to make more use of them than non-creatives, and thus still find ways to make money.

It depends on the pictures it’s trained on.

If the source images are incredible, then the result could be incredible.

Second problem: where do you find 250 TB of incredible, licence-free source images to train your AI?

It’s already been done; it’s not a problem. It does not use the actual source images in the final model. Basically, suppose you took an artist’s famous copyrighted work, studied it and labelled each part of it (sky, dog, table, color, composition style, etc.), then compressed that knowledge down into just a tiny token of data (which is basically how your brain and these models work). If you then made your own artwork using some of these ideas, like drawing your own dog or your own color scheme based on what you saw… it does not violate anybody’s copyright, and the original artists do not need any royalties or attribution, as I understand it…

Once again, it never copies actual original works; it only uses knowledge about them. The model does not store images.

It usually doesn’t copy, that’s correct. However, it can copy. Though it clearly goes way beyond memorizing and has apparently learned useful concepts, we can never exclude the possibility of copying.

Even though this is similar to how humans do their work and are inspired by others, it is unclear how a court would rule. It is even reasonable to assume that every country will come up with different rulings, making the situation a huge mess.

I just remembered that the following Creative Commons interpretation does not align with your statement. According to their interpretation, the output of neural networks trained on CC-SA licensed work has to be released under CC-SA as well.

https://creativecommons.org/faq/#artificial-intelligence-and-cc-licenses

Yeah, I’m pretty sure Stable Diffusion has all the licensing stuff figured out… We cannot claim any kind of copyright on anything it’s producing right now; it’s CC0 1.0 public domain…

(The public domain is not a unified concept across legal jurisdictions, thus the specific affirmation you make when using the DreamStudio Beta and the Stable Diffusion services is that of the CC0 1.0 Universal Public Domain Dedication [available at https://creativecommons.org/publicdomain/zero/1.0/]. Any/all users (includes “Affirmers” as described in the Universal Public Domain Dedication) specifically agree to the entirety of the referenced and incorporated Universal Public Domain Dedication, which includes, but is not limited to, the following:

. . . .

2. Waiver. To the greatest extent permitted by, but not in contravention of, applicable law, Affirmer hereby overtly, fully, permanently, irrevocably and unconditionally waives, abandons, and surrenders all of Affirmer’s Copyright and Related Rights and associated claims and causes of action, whether now known or unknown (including existing as well as future claims and causes of action), in the Work (i) in all territories worldwide, (ii) for the maximum duration provided by applicable law or treaty (including future time extensions), (iii) in any current or future medium and for any number of copies, and (iv) for any purpose whatsoever, including without limitation commercial, advertising or promotional purposes (the “Waiver”).)

(All users, by use of DreamStudio Beta and the Stable Diffusion beta Discord service hereby acknowledge having read and accepted the full CC0 1.0 Universal Public Domain Dedication (available at https://creativecommons.org/publicdomain/zero/1.0/), which includes, but is not limited to, the foregoing waiver of intellectual property rights with respect to any Content. User, by use of DreamStudio Beta and the Stable Diffusion beta Discord service, acknowledges understanding that such waiver also includes waiver of any such user’s expectation and/or claim to any absolute, unconditional right to reproduce, copy, prepare derivate works, distribute, sell, perform, and/or display, as applicable, and further that any such user acknowledges no authority or right to deny permission to others to do the same with respect to the Content.) and so on and so on.

According to this: https://huggingface.co/CompVis/stable-diffusion, they are using “LAION-2B (en) and subsets thereof”.

According to this: https://huggingface.co/datasets/laion/laion2B-en, the license is cc-by-4.0

And again, according to the Creative Commons interpretation https://creativecommons.org/faq/#artificial-intelligence-and-cc-licenses, attribution would be required. So everyone would need to attribute the dataset. On top of that, every image from the dataset that requires attribution would need to be credited?!

Indeed, the license for all images I could see in the preview is “?”, so it appears quite tricky.

From what I can see, they are making it CC0 1.0 public domain; that’s what the beta terms of service say… I don’t know about the final product… But competitors like Midjourney and DALL-E 2 think they can give users complete commercial rights… All the Midjourney stuff is flooding the online galleries right now, with people not even mentioning that the Midjourney AI did the work… And folks are, of course, trying to sell it.

Just to make it clear: I don’t have a strong opinion regarding this topic. It is difficult, it is a mess and everyone has an opinion.

It literally doesn’t matter what this or that entity is doing and what they claim the copyright should be. Even the Creative Commons interpretation is worthless if a court rules differently.

From my point of view, using data for neural network training without very clear licensing could easily end up being a legal nightmare.

Not my problem… I’m just going to use the output for personal viewing and for ideas or inspiration. It’s coming whether we like it or not. Companies like OpenAI want revenue and need to pay their bills, and companies like MJ and others want to make money, so they are going to try to give users a reason to pay for their service; hence the apparent ability to use the outputs commercially… Companies like StabilityAI are strongly in favor of open-sourcing the tech. Once it is open source, these AI images are going to be everywhere and unstoppable, like an avalanche… What will happen to the art world and the copyright world, I don’t know.

The whole thing reminds me of the end of The Matrix.

Made by Stability AI just now.

That’s for sure not problematic, but as you stated earlier:

This clearly indicates that using the generated images for commercial work should not pose any problem. But this can be very risky, because the legal situation is not settled! More importantly, the interpretations of the companies training those neural networks and entities like the creative commons organization or the FSF don’t align, which increases the risk.

Copyright is copyright. It uses actual source images.