VR motion capture sandbox (optimized for Blender)

I create motion capture games for artists. Here’s one of my free games, created for all Blender artists!

If you have a VR headset, you can create stunning animations in minutes and use the bundled automation to render them in Blender with your own avatars!

Free! Please support me on Patreon! Thanks!!

The term “getting into character” applies here quite literally: you connect yourself to an avatar as completely as possible, then look into a (VR) mirror while acting out a script. I have also added some Unity projects on GitHub that let users include their own custom avatars, props and scenes for exactly this purpose!

What problem does this solve that many people have?

I originally developed this tool because I needed to create some VR training videos, but it has since matured into a functional storytelling system that can export entire compilations as standard file types, so applications such as Blender can import the recorded media. This is useful for anyone who would like to create VR storyboards or experiences, or for character modelers who would like to “become” their creations (FuseCC, Mixamo, Daz3D, ReallusionCC3 and MakeHuman avatars are fully supported).

It is also tailored to users who want a quick way to add character animations to a Blender movie or short film (using the included SceneLoader.blend automation script), and it can just as easily generate .fbx files for use in Unity games.
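For anyone who prefers to pull exported .fbx files into Blender by hand, here is a minimal sketch of a batch import. This is not the bundled SceneLoader.blend script, and it assumes the recordings were already exported as .fbx files into a single folder. The function names `find_fbx_files` and `import_fbx_files` are made up for this example; `bpy.ops.import_scene.fbx` is Blender's built-in FBX import operator.

```python
from pathlib import Path


def find_fbx_files(export_dir):
    """Return every .fbx file under export_dir (case-insensitive), sorted by path."""
    root = Path(export_dir)
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() == ".fbx"
    )


def import_fbx_files(paths):
    """Import each FBX file into the current Blender scene.

    Must be run inside Blender, because bpy only exists in
    Blender's bundled Python interpreter.
    """
    import bpy  # imported here so the file search above works outside Blender

    for path in paths:
        # Blender's stock FBX importer; default import settings are assumed here.
        bpy.ops.import_scene.fbx(filepath=str(path))
```

From Blender's Scripting tab, something like `import_fbx_files(find_fbx_files("/path/to/exports"))` would then load every recording found, where the folder path is a placeholder for wherever your exports were saved.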

What does it feel like to use it?

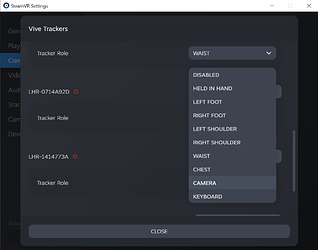

The experience varies with your hardware. If you wear feet and hip trackers (elbows, knees and chest are optional), tracking improves because the avatar can be connected to up to 11 points of body tracking. Even the Vive Pro Eye is fully supported for gaze and blink tracking. Extra trackers or not, it’s still lots of fun: being able to record and then immediately play back in VR has many interesting uses, and creating stories with multiple characters (and props) can be extremely entertaining!

Current Release:

APS LUXOR (beta) - Version: 5.0.4

- Artists are invited to join the APS Discord

- Export mocap and create scenes in Blender™ instantly.

- HTC™ Vive Trackers (Up to 11 optional points) full body tracking.

- Ability to record, play back, pause, slow-mo and scrub mocap in VR.

- Customizable IK profiles and avatar parameters.

- SteamVR Knuckles support for individual finger articulation.

- Quest 2 optical finger tracking app for individual finger articulation and finger separation.

- Vive Pro Eye blink and gaze tracking support.

- Sidekick iOS face capture app (TrueDepth markerless AR facial tracking).

- User-customizable worlds, avatars and props may be built for mocap using the APS_SDK.

- Compatible with existing Unity3D™ avatars and environments.

- Supports custom shaders on mocap avatars.

- DynamicBone support for adding hair, clothing and body physics simulation to avatars.

- Breathing simulation for added chest animation.

- Add/Record/Export VR Cameras for realistic camera mocap (e.g. VR Cameraman effect).

- Place “streaming” cameras for livestreaming avatars to OBS or as desktop overlays.

- Microphone audio recording with lip-sync visemes and recordable jaw bone rotation.

- Storyboard mode, save mocap experiences as pages for replaying or editing later.

- Animatic video player, display stories and scripts, choreograph movement.

- Dual-handed weapon IK solvers for natural handling of carbines.

- Recordable VTOL platform for animating helicopter flight simulation (e.g. news choppers).

- VR Camcorders and VR selfie cams may be rigidly linked to trackers.

- VR props and firearms may be rigidly linked to trackers.

- Ghost curves for visualizing the future locations of multiple avatars in a scene.

Sidekick Face Capture iOS app:

APS Sidekick iPhone app - Version: 1.2.4
