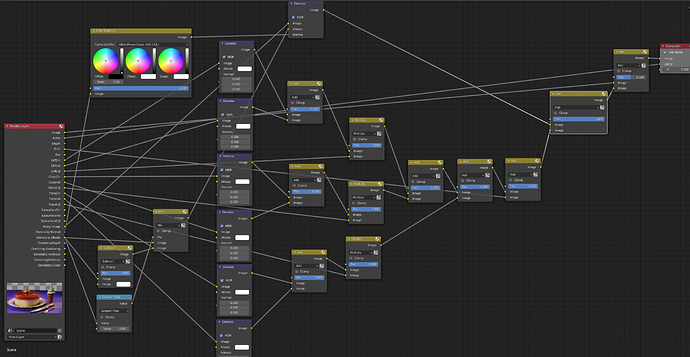

Also screenshot of compositing nodes if possible…

I don’t think so; the render times are very high even for smaller scenes.

Well, now I have to go.

no-denoiser–64-samples–14sec

no-denoiser–512-samples–1min34sec

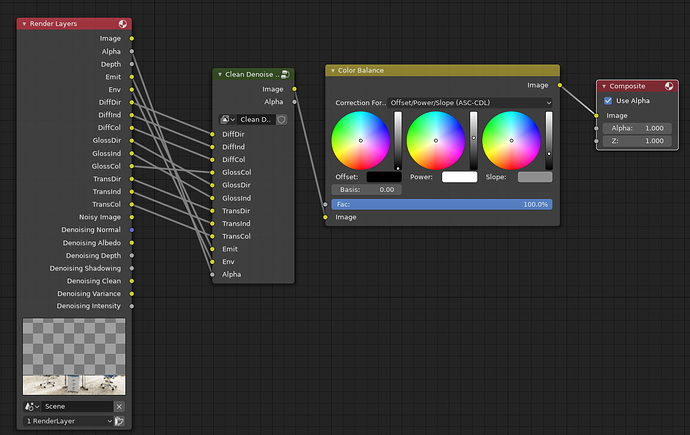

I’m getting great results combining the Blender internal denoiser with Lord ODIN. I combine the two with a color mix node set to multiply at 0.5.

Hi. Nice test. Did you make sure that the OIDN albedo input is between 0 and 1?

It would be nice if you could post screenshots of the OIDN image, albedo and normal inputs for the cake.

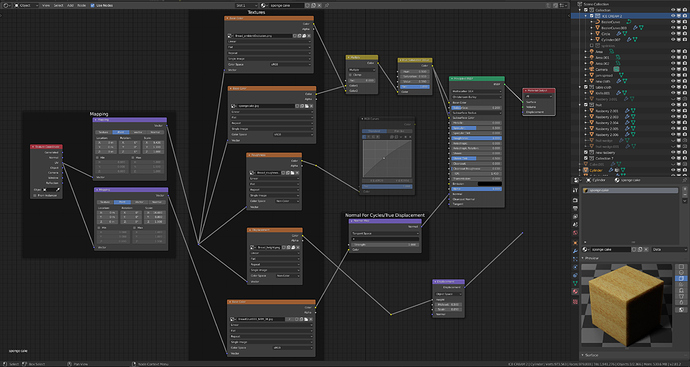

Under RT, Albedo and Normal

https://openimagedenoise.github.io/documentation.html

Some constraints for OIDN.

When you have values over 1 in your Albedo pass, you should bring them down. This is the case, e.g., for spec>1 in materials.
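To illustrate "bringing the values down", here is a minimal Python sketch that clamps an albedo pass to the 0 to 1 range. Clamping is only one way to do it and is my assumption; the function name `clamp_albedo` and the list-of-RGB-tuples layout are made up for the example and are not Blender or OIDN API.

```python
def clamp_albedo(pixels):
    """Clamp every RGB channel of an albedo pass to the [0, 1] range.

    `pixels` is a list of (r, g, b) float tuples (hypothetical layout).
    """
    return [tuple(min(max(c, 0.0), 1.0) for c in px) for px in pixels]


# Example: the 1.4 and -0.1 channels are out of range and get clamped.
albedo = [(0.2, 0.5, 1.4), (1.0, -0.1, 0.8)]
clamped = clamp_albedo(albedo)
```

In the compositor the same idea would be a clamp on the albedo pass before it goes into the denoiser.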

More info here:

https://developer.blender.org/D4304

Thank you.

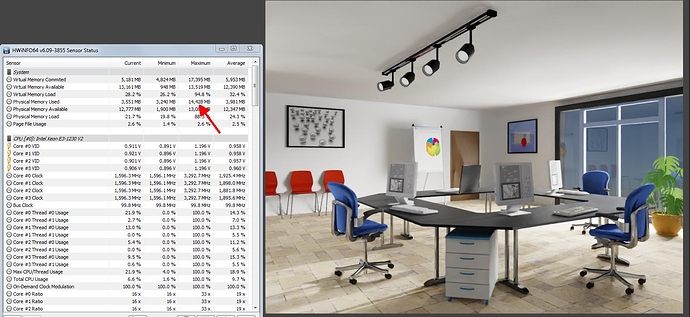

I meant the difference in render time between plain OIDN and the node group. I saw no difference in RAM usage.

Cheers, mib

Nobody told me that, lol. Shouldn’t this be built into the code? I was seeing large RAM usage when denoising big images; I thought it was just the usual case of new code not being written very efficiently. I may need to fill the other two DIMM slots. I only have 32 GB, and I dread to think what it’s like for gamers with only 16 GB systems.

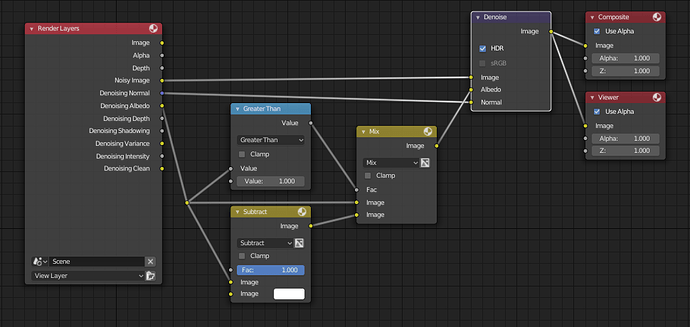

The docs also say that normal data needs to be symmetrical (i.e. it extends to the same value in both the positive and negative directions). Normal data with no negative values at all is considered unacceptable.

In an initial test, remapping the denoised normal pass so that values go below 0 for symmetry appears to improve the appearance of bumps and other details (i.e. the result has a notably less impressionist look).
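As a rough illustration of that remap, here is a small Python sketch. It assumes the normal pass is stored in the 0 to 1 range and maps it to a symmetric -1 to 1 range with `2 * v - 1`; that encoding is my assumption, and if your pass already contains signed values this step would not be needed. The name `decode_normal` is hypothetical.

```python
def decode_normal(encoded):
    """Map a normal stored in [0, 1] per channel to the symmetric [-1, 1] range.

    Assumes the common n * 0.5 + 0.5 encoding; this is an assumption,
    not something confirmed for every pass.
    """
    return tuple(2.0 * c - 1.0 for c in encoded)


# Example: 0.5 is the midpoint and decodes to 0.0; 0.0 and 1.0 become -1.0 and 1.0.
normal = decode_normal((0.5, 0.0, 1.0))
```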

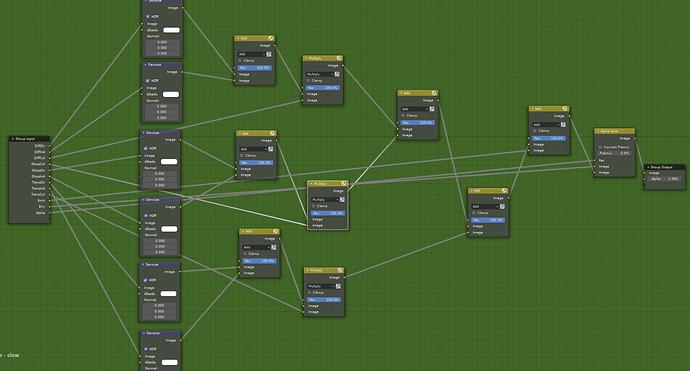

I think I’ve found a perfect combination of the Blender internal denoiser and OIDN in this node setup. Make sure you have BI denoise on with standard settings, 8px and relative filter on. Then follow this exactly.

It’s just combining the Blender internal denoiser render with the OIDN result (which came from the noisy data and its own passes) using a 50% color mix node. Make sure everything is unclamped or you will lose data in bright areas.

Here it is ungrouped. Ignore the earlier images; they had a mistake.
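For anyone who prefers to see the 50% mix as arithmetic, here is a minimal Python sketch of an unclamped linear blend of two pixel values. The function name `mix` and the tuple layout are just for illustration; it mimics what a mix at factor 0.5 without clamping would do and is not the compositor’s actual code.

```python
def mix(a, b, factor=0.5):
    """Linear blend of two RGB pixels without clamping the result.

    factor=0.0 returns a, factor=1.0 returns b, 0.5 is an even mix.
    """
    return tuple((1.0 - factor) * x + factor * y for x, y in zip(a, b))


# Example with bright (HDR) values above 1.0: nothing is clipped.
builtin_pixel = (2.0, 0.0, 1.0)
oidn_pixel = (4.0, 1.0, 1.0)
blended = mix(builtin_pixel, oidn_pixel)
```

Because nothing is clamped, the red channel in the example ends up at 3.0 instead of being cut off at 1.0, which is the point about not losing data in bright areas.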

Are you the user Malte S (pandrodor)? I have seen that you have experimented with animation. What is your best node setup for animation? Have you experimented with more complex scenes, like interiors? I’m not having any luck with temporal stability; there are differences between frames (at least when the sample count is not very high).

Ace, what does that mean? Is it possible to do it in Blender? What would be the correct node setup for Normal then?

You are bound to see inconsistencies between frames with a neural-network-based denoiser. It’s like asking an impressionist painter to paint each frame: each one will differ, because the result is not computed from the physics of light; it’s a guesstimate based on the images it’s been trained on. The result will always be a guess, even if a very good one, and especially so with low sample counts.

That is what I supposed. But I have seen that the user there has experimented with animations and does not seem to have major problems. Maybe that is because of the simplicity of the scene.

HolyDenoisy.

Please send this to the OIDN devs.

Would like to know what they say.)

3 questions about your setup:

What is your thinking behind using already denoised passes as input for the OIDN ones?

What does the color balance do on the normals?

What is the difference compared to an OIDN setup according to the OIDN docs, with

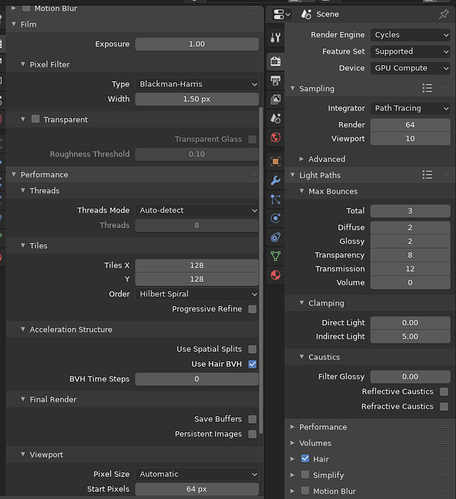

image: (no pixel reconstruction filter, box filter like Blackman-Harris and Gaussian ok)

normals: (corrected normals [−1, 1])

albedo: corrected Albedo between 0 and 1

I think Brecht did mention he is interested in trying to combine the two denoising solutions so that each will play to its strengths and fight the other’s weaknesses. In other words, a denoiser to rule them all.

It would be neat if OIDN and Lukas’ denoiser were merged together in Blender and not a single weak point was left.

I have seen your nodes. At low sample rates I don’t see any benefit in mixing the result with the internal denoiser. The internal denoiser is designed only to eliminate residual noise at high sample rates. At low sample rates, I find the patches generated by the internal denoiser even more annoying than grain noise.

Could you share the yellow/orange material of that tasty dessert? I do not understand why OIDN is having so many problems in preserving details there.

I wrote down the differences in the render time in my first post.

OIDN Denoiser - 23sec

LordOdin Node-Setup - 55sec

Or do you mean something else?

I also don’t fully understand the RAM consumption.

I have tested a few scenes where there was hardly any difference in RAM consumption between Internal, OIDN and LordOdin.

But in the scene from my first post, it goes through the roof.

Since it’s a very old scene, I guess it has a material node setup that doesn’t scale properly (rendering at 75% of Full HD resolution).

If Brecht combines these two into one, I propose we call it LOKI.

I have done an overnight test that attempts to combine the Cycles built-in denoiser and OIDN.

The group node just combines all of the different passes, but what you will notice is that the two denoising results are combined with what is essentially a ‘difference map’ that finds the completely unprocessed pixels in the result from the built-in denoiser (which is a result of the low detail weights I used). I then try to get rid of that remaining noise with OIDN, which denoises the noisy image pass generated again by the built-in denoiser.

For this type of scene which is somewhat difficult to trace due to the lighting, it is a very good result for what was just 5 hours of rendering (as opposed to this rendering nearly 2 days with the built-in denoiser at higher settings as was the case with the official version of the image).

There’s still just a tiny bit of noise in spots, but taking care of that manually would be a heck of a lot easier and faster than trying to denoise the original noisy image in post.
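One possible reading of the "difference map" idea, as a hedged Python sketch: mark the pixels that the built-in denoiser left (almost) unchanged from the noisy input, then take the OIDN value there and the built-in value everywhere else. The function names, the threshold and the single-channel pixel lists are all assumptions made for the example; the actual node group may work differently.

```python
def difference_mask(noisy, denoised, threshold=1e-4):
    """Return 1.0 where the denoiser left a pixel (almost) untouched, else 0.0."""
    return [1.0 if abs(n - d) <= threshold else 0.0
            for n, d in zip(noisy, denoised)]


def combine(builtin, oidn, mask):
    """Use the OIDN value where mask is 1.0 and the built-in value where it is 0.0."""
    return [m * o + (1.0 - m) * b for b, o, m in zip(builtin, oidn, mask)]


# Example with three single-channel pixels (hypothetical values).
noisy = [0.9, 0.2, 0.5]
builtin = [0.9, 0.25, 0.5]   # first and last pixel were not processed
oidn = [0.6, 0.3, 0.45]
mask = difference_mask(noisy, builtin)
result = combine(builtin, oidn, mask)
```

A soft mask (values between 0 and 1) would give a smoother transition than this hard 0/1 version.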

I have nearly no idea what I’m doing, and I’m not a programmer either. I’m just going on the node groups and suggestions that were given in this thread. I have no idea what the color balance does, but it was suggested. I just want to combine the Blender internal denoiser with OIDN to get the best of both; I’m certainly not going to find the best algorithm, just something that kind of works.