Very cool! Looking forward to seeing it…

A couple of fixes to the script in post #14 to make it run in 2.56… add_fileselect becomes fileselect_add, and there was a matrix * vector multiplication to fix.

see post #28

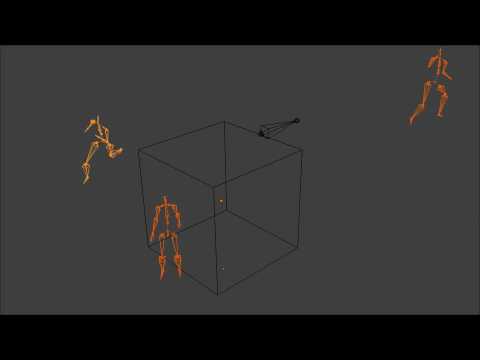

@batFinger: nice, I have to take a look at it. And btw, this is a sample of “your running bvh-skeleton”… but this is what I want.

Take part of an animation (the action keyframes from->to) and patch those parts together at a new position and direction. So a single run step… stomping around in a circle looks like this…
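A minimal pure-Python sketch of the patching idea, assuming one step can be reduced to a 2D root displacement in its own local frame plus a fixed turn per step. The function name chain_steps is hypothetical, and the sketch ignores the bone keys themselves; it only shows how the start position and heading of each copy accumulate.

```python
import math


def chain_steps(step, turn, count, start=(0.0, 0.0), heading=0.0):
    """Place `count` copies of one step end to end.

    step    -- (dx, dy) root displacement of a single step in its own local frame
    turn    -- heading change in radians applied after each step
    Returns (x, y, heading) at the start of every copy, followed by the end pose.
    """
    x, y = start
    dx, dy = step
    poses = [(x, y, heading)]
    for _ in range(count):
        c, s = math.cos(heading), math.sin(heading)
        # Rotate the local displacement into world space, then move by it.
        x += c * dx - s * dy
        y += s * dx + c * dy
        heading += turn
        poses.append((x, y, heading))
    return poses


# Twelve steps of 0.8 units, turning 30 degrees after each one, close a loop.
poses = chain_steps((0.0, 0.8), math.radians(30), 12)
for x, y, h in poses[:3]:
    print("%.3f %.3f %.1f" % (x, y, math.degrees(h)))
```

Because the twelve displacements rotate through a full 360 degrees, the last pose lands back at the start; along a real path the turn per step would come from the path instead of a constant.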

About the calculation: I posted a question in the Python scripting forum, because I am not sure about it. It looks ok… but I have to check it with different actions and different armature setups (masterbone/rootbone).

If it works, it would be nice to have a UI to let such repeated animation parts run along a path…

test-dr: I am interested in what you show here… but I do not understand how you did it.

Did you write a python script to change the linear / straight line BVH action to run in a circle? Is that script posted somewhere?

No, there was only the older version I made for Blender 2.49, but then came the brand-new 2.5… and the ever-changing API…

I would only post it now if someone else would put some effort into it too.

At the moment it has no UI. And today I got stuck on a jump where one arm was always broken. I thought it was my fault… but the imported BVH animation showed the same broken arm.

@batFinger: I used your shorter simple BVH importer (posted above), but only stored one keyframe per frame instead of all the intermediate keys. Maybe someone can verify whether the problem is in the import or in the BVH file.

It's the jump from cmu-mocap subdir-01/01_01.bvh.

Ok… the video shows the problem better than words…

It's the left arm of the jumping armature that's broken, but it was already broken after the import… (98-} I will blame BatFinger for the broken BVH import… don't know…

- And I did post in the Python forum section about the calculation I use, but got no answer so far. It looks like the conversion with the masterbone rotation matrix and its inverse fits… but I cannot say I understand this whole pose-action-fcurve-quat-mat thing…
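The masterbone conversion mentioned here boils down to a change of basis. A minimal pure-Python sketch, using plain lists in column-vector convention rather than mathutils (whose 2.5x multiplication order may differ), shows that conjugating with a bone's rest rotation turns a rotation about the armature's Z axis into a twist about the bone's own Y axis. The rest orientation here is hypothetical: a bone whose Y axis points along armature Z.

```python
import math


def matmul(a, b):
    """3x3 matrix product a * b (lists of rows)."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(m):
    return [list(row) for row in zip(*m)]


def rot_x(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_z(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def to_bone_space(rotation, rest):
    """Re-express `rotation` (given in armature axes) in a bone's rest axes.

    `rest` is a pure rotation, so its inverse is its transpose and the result
    is rest^-1 * rotation * rest.
    """
    return matmul(matmul(transpose(rest), rotation), rest)


rest = rot_x(math.radians(90))    # hypothetical bone: its Y axis points along armature Z
world = rot_z(math.radians(30))   # a 30 degree turn about the armature's Z axis
local = to_bone_space(world, rest)
for row in local:
    print([round(v, 3) for v in row])
```

The printed matrix is a 30 degree rotation about Y: the same turn, just described in the bone's own axes.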

Update for the “broken left arm”:

I tried the import with the default BVH importer (Blender 2.56), and the arm is broken backwards too.

@batFinger: it might be in the BVH data; I need to check it with a BVH player.

…

Did a check with “bvhplay”, and the arm is not broken.

But the same error seems to be in the default BVH import module, because it showed the same backwards-broken arm.

I will try some others from the cmu-bvh-source…

- EDIT-

Tried some other files and looked at “jump 01_01.bvh” in bvhplay again. The arm is broken there too, so it looks a lot like an error in the BVH data.

This thing is driving me nuts when I don't know whether the matrix/quat calculations are right or wrong…

I saw something similar when I was working with Messiah and BVH; it's a gimbal lock problem. The way I had to get around it in Messiah was to change the starting rest position. Not sure how you would do that with Blender.
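Gimbal lock is easy to reproduce numerically. A pure-Python sketch, assuming a hypothetical Z-X-Y channel order composed in listed order (the way addFrame multiplies mats[0] * mats[1] * mats[2]); any three-axis order locks the same way when its middle angle reaches ±90 degrees. With X at 90 degrees, the whole Y rotation can be moved into the Z channel without changing the final matrix, so the three channels no longer describe three independent rotations.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def euler_zxy(z, x, y):
    """Compose channels listed as 'Zrotation Xrotation Yrotation', in that order."""
    return matmul(matmul(rot_z(z), rot_x(x)), rot_y(y))


def max_diff(m1, m2):
    return max(abs(m1[i][j] - m2[i][j]) for i in range(3) for j in range(3))


z, y = math.radians(20), math.radians(35)
for x_deg in (80, 90):
    x = math.radians(x_deg)
    # Fold the Y channel into Z: only equivalent when X sits at 90 degrees.
    print(x_deg, max_diff(euler_zxy(z, x, y), euler_zxy(z + y, x, 0.0)))
```

At 80 degrees the two matrices clearly differ; at 90 degrees the difference is only floating-point noise.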

Whoops… I'm going to blame the cut and paste on Chrome.

```python
#----------------------------------------------------------
# File simple_bvh_import.py
# Simple bvh importer
#----------------------------------------------------------

import bpy, os, math, mathutils, time
from mathutils import Vector, Matrix

#
# class CNode:
#

class CNode:
    def __init__(self, words, parent):
        name = words[1]
        for word in words[2:]:
            name += ' '+word
        self.name = name
        self.parent = parent
        self.children = []
        self.head = Vector((0,0,0))
        self.offset = Vector((0,0,0))
        if parent:
            parent.children.append(self)
        self.channels = []
        self.matrix = None
        self.inverse = None
        return

    def __repr__(self):
        return "CNode %s" % (self.name)

    def display(self, pad):
        vec = self.offset
        if vec.length < Epsilon:
            c = '*'
        else:
            c = ' '
        print("%s%s%10s (%8.3f %8.3f %8.3f)" %
            (c, pad, self.name, vec[0], vec[1], vec[2]))
        for child in self.children:
            child.display(pad+" ")
        return

    def build(self, amt, orig, parent):
        self.head = orig + self.offset
        if not self.children:
            return self.head
        zero = (self.offset.length < Epsilon)
        eb = amt.edit_bones.new(self.name)
        if parent:
            eb.parent = parent
        eb.head = self.head
        tails = Vector((0,0,0))
        for child in self.children:
            tails += child.build(amt, self.head, eb)
        n = len(self.children)
        eb.tail = tails/n
        self.matrix = eb.matrix.rotation_part()
        self.inverse = self.matrix.copy().invert()
        if zero:
            return eb.tail
        else:
            return eb.head

#
# readBvhFile(context, filepath, rot90, scale):
#

Location = 1
Rotation = 2
Hierarchy = 1
Motion = 2
Frames = 3

Deg2Rad = math.pi/180
Epsilon = 1e-5

def readBvhFile(context, filepath, rot90, scale):
    fileName = os.path.realpath(os.path.expanduser(filepath))
    (shortName, ext) = os.path.splitext(fileName)
    if ext.lower() != ".bvh":
        raise NameError("Not a bvh file: " + fileName)
    print( "Loading BVH file "+ fileName )

    time1 = time.clock()
    level = 0
    nErrors = 0
    scn = context.scene

    fp = open(fileName, "rU")
    print( "Reading skeleton" )
    lineNo = 0
    for line in fp:
        words= line.split()
        lineNo += 1
        if len(words) == 0:
            continue
        key = words[0].upper()
        if key == 'HIERARCHY':
            status = Hierarchy
        elif key == 'MOTION':
            if level != 0:
                raise NameError("Tokenizer out of kilter %d" % level)
            amt = bpy.data.armatures.new("BvhAmt")
            rig = bpy.data.objects.new("BvhRig", amt)
            scn.objects.link(rig)
            scn.objects.active = rig
            bpy.ops.object.mode_set(mode='EDIT')
            root.build(amt, Vector((0,0,0)), None)
            #root.display('')
            bpy.ops.object.mode_set(mode='OBJECT')
            status = Motion
        elif status == Hierarchy:
            if key == 'ROOT':
                node = CNode(words, None)
                root = node
                nodes = [root]
            elif key == 'JOINT':
                node = CNode(words, node)
                nodes.append(node)
            elif key == 'OFFSET':
                (x,y,z) = (float(words[1]), float(words[2]), float(words[3]))
                if rot90:
                    node.offset = scale*Vector((x,-z,y))
                else:
                    node.offset = scale*Vector((x,y,z))
            elif key == 'END':
                node = CNode(words, node)
            elif key == 'CHANNELS':
                oldmode = None
                for word in words[2:]:
                    if rot90:
                        (index, mode, sign) = channelZup(word)
                    else:
                        (index, mode, sign) = channelYup(word)
                    if mode != oldmode:
                        indices = []
                        node.channels.append((mode, indices))
                        oldmode = mode
                    indices.append((index, sign))
            elif key == '{':
                level += 1
            elif key == '}':
                level -= 1
                node = node.parent
            else:
                raise NameError("Did not expect %s" % words[0])
        elif status == Motion:
            if key == 'FRAMES:':
                nFrames = int(words[1])
            elif key == 'FRAME' and words[1].upper() == 'TIME:':
                frameTime = bpy.context.scene.render.fps*float(words[2])
                print(frameTime)
                #frameTime = 1
                status = Frames
                frame = 0
                t = 0
                bpy.ops.object.mode_set(mode='POSE')
                pbones = rig.pose.bones
                for pb in pbones:
                    pb.rotation_mode = 'QUATERNION'
        elif status == Frames:
            addFrame(words, frame, nodes, pbones, scale)
            t += frameTime
            frame += frameTime
    fp.close()
    time2 = time.clock()
    print("Bvh file loaded in %.3f s" % (time2-time1))
    return rig

#
# channelYup(word):
# channelZup(word):
#

def channelYup(word):
    if word == 'Xrotation':
        return ('X', Rotation, +1)
    elif word == 'Yrotation':
        return ('Y', Rotation, +1)
    elif word == 'Zrotation':
        return ('Z', Rotation, +1)
    elif word == 'Xposition':
        return (0, Location, +1)
    elif word == 'Yposition':
        return (1, Location, +1)
    elif word == 'Zposition':
        return (2, Location, +1)

def channelZup(word):
    if word == 'Xrotation':
        return ('X', Rotation, +1)
    elif word == 'Yrotation':
        return ('Z', Rotation, +1)
    elif word == 'Zrotation':
        return ('Y', Rotation, -1)
    elif word == 'Xposition':
        return (0, Location, +1)
    elif word == 'Yposition':
        return (2, Location, +1)
    elif word == 'Zposition':
        return (1, Location, -1)

#
# addFrame(words, frame, nodes, pbones, scale):
#

def addFrame(words, frame, nodes, pbones, scale):
    m = 0
    for node in nodes:
        name = node.name
        try:
            pb = pbones[name]
        except:
            pb = None
        if pb:
            for (mode, indices) in node.channels:
                if mode == Location:
                    vec = Vector((0,0,0))
                    for (index, sign) in indices:
                        vec[index] = sign*float(words[m])
                        m += 1
                    pb.location = (scale * vec - node.head) * node.inverse
                    for n in range(3):
                        pb.keyframe_insert('location', index=n, frame=frame, group=name)
                elif mode == Rotation:
                    mats = []
                    for (axis, sign) in indices:
                        angle = sign*float(words[m])*Deg2Rad
                        mats.append(Matrix.Rotation(angle, 3, axis))
                        m += 1
                    mat = node.inverse * mats[0] * mats[1] * mats[2] * node.matrix
                    pb.rotation_quaternion = mat.to_quat()
                    for n in range(4):
                        pb.keyframe_insert('rotation_quaternion',
                            index=n, frame=frame, group=name)
        else:
            # No pose bone for this node: still skip its channel values so
            # the following nodes read the right columns.
            for (mode, indices) in node.channels:
                m += len(indices)
    return

#
# initSceneProperties(scn):
#

def initSceneProperties(scn):
    bpy.types.Scene.MyBvhRot90 = bpy.props.BoolProperty(
        name="Rotate 90 degrees",
        description="Rotate the armature to make Z point up")
    scn['MyBvhRot90'] = True
    bpy.types.Scene.MyBvhScale = bpy.props.FloatProperty(
        name="Scale",
        default = 1.0,
        min = 0.01,
        max = 100)
    scn['MyBvhScale'] = 1.0

initSceneProperties(bpy.context.scene)

#
# class BvhImportPanel(bpy.types.Panel):
#

class BvhImportPanel(bpy.types.Panel):
    bl_label = "BVH import"
    bl_space_type = "VIEW_3D"
    bl_region_type = "UI"

    def draw(self, context):
        self.layout.prop(context.scene, "MyBvhRot90")
        self.layout.prop(context.scene, "MyBvhScale")
        self.layout.operator("object.LoadBvhButton")

#
# class OBJECT_OT_LoadBvhButton(bpy.types.Operator):
#

class OBJECT_OT_LoadBvhButton(bpy.types.Operator):
    bl_idname = "OBJECT_OT_LoadBvhButton"
    bl_label = "Load BVH file (.bvh)"

    filepath = bpy.props.StringProperty(name="File Path",
        maxlen=1024, default="")

    def execute(self, context):
        import bpy, os
        readBvhFile(context, self.properties.filepath,
            context.scene.MyBvhRot90, context.scene.MyBvhScale)
        return{'FINISHED'}

    def invoke(self, context, event):
        context.window_manager.fileselect_add(self)
        return {'RUNNING_MODAL'}
```
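The rot90 option in the importer above swaps axes by hand. A small pure-Python check (plain lists instead of mathutils) shows that the OFFSET swap (x, y, z) -> (x, -z, y) is exactly a +90 degree rotation about X, and that the same rotation sends the old Y axis to +Z and the old Z axis to -Y. That matches channelZup mapping Yrotation to ('Z', +1) and Zrotation to ('Y', -1), and the Yposition/Zposition indices likewise.

```python
import math


def rot_x(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def apply(m, v):
    """Multiply a 3x3 matrix (list of rows) by a column vector."""
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


def y_up_to_z_up(v):
    """The swap used for OFFSET when rot90 is set: (x, y, z) -> (x, -z, y)."""
    x, y, z = v
    return (x, -z, y)


r = rot_x(math.radians(90))
print(apply(r, (1.0, 2.0, 3.0)), y_up_to_z_up((1.0, 2.0, 3.0)))
# Where the old rotation axes end up: Y goes to +Z, Z goes to -Y.
print(apply(r, (0.0, 1.0, 0.0)), apply(r, (0.0, 0.0, 1.0)))
```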

@test-dr

I imported 01_01.bvh using this with no problems… I attached the first 80-odd frames of the animation (to keep it below the 940k limit) as “proof”: no elbow breaks, no worries.

Part 2… I went over my 10K character limit… can't take a trick tonight…

Also, I have attached my “normalised” running BVH with a follow path constraint. I use “normalised” for want of a better term… it starts and ends the motion of the BVH at (0,0,0) and makes it relative to the rig object, which moves in the direction of the motion at a linear speed… like being in a car going at a constant speed beside a runner whose speed may vary… but you start right beside them and end right beside them.

Because the motion is now relative to a linear motion, dragging the rig around a path at that speed gives a reasonable result IMO.
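A minimal sketch of the “normalising” idea as described here, not batFinger's actual script (which is linked in this thread): it assumes one root position per frame and subtracts a straight line from the first to the last position, so the leftover motion starts and ends at zero while the straight-line part becomes the constant-speed drag. The function name and sample data are hypothetical.

```python
def normalise(path):
    """Split per-frame root positions into a straight-line drag plus a residual.

    path -- list of (x, y, z) root positions, one per frame, at least two
    Returns (residual, step): residual starts and ends at (0, 0, 0), and step
    is the constant per-frame displacement of the straight-line drag.
    """
    n = len(path) - 1
    first, last = path[0], path[-1]
    step = tuple((b - a) / n for a, b in zip(first, last))
    residual = [
        tuple(p - a - k * s for p, a, s in zip(pos, first, step))
        for k, pos in enumerate(path)
    ]
    return residual, step


# A hypothetical runner whose forward speed varies around 1 unit per frame.
path = [(0.0, 0.0, 1.0), (0.0, 1.2, 1.1), (0.0, 1.8, 1.0), (0.0, 3.0, 1.0)]
residual, step = normalise(path)
print(step)
print(residual)
```

The residual keeps the runner's speed-up and bounce relative to the “car”, while step is what a path or constraint would supply.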

I put together a quick sample to show it, and it looks ok… it is only a 44-frame mocap, so it jumps at its mismatching endpoints… but if it was a proper cycle it “should” work fine. Also, I didn't pay much attention to the actual speed of the mocap once normalised… it will be in the file as an action on the rig object… the follow path constraint can be adjusted to mimic this timing exactly.

The script i use to “normalise” actions is here http://blenderartists.org/forum/showthread.php?t=204377&pagenumber=

Thought I'd post this little “hint” before you guys drove me nuts… btw, never close the manage attachments dialog with a file select box running… lol.

The previous one was a Max conversion of the CMU BVH. Here is the same with the MotionBuilder conversion of CMU 01_01, which has a star pose as its rest position… once again no busted elbow… did you even try to import it with the importer I posted?

I'm using the mocaps from https://sites.google.com/a/cgspeed.com/cgspeed/motion-capture and resampled them from a frame time of 8.2ms to 50ms (20fps) in bvhacker. If your mocap is busted, I suggest getting another one from there.

Hey BatFINGER, thanks for the hints… The script you used to normalize the BVH file… I took a look at the thread, and in it you seem to indicate that you are having problems “getting the fcurve from the RNA path.” Was that eventually solved, or is that still an issue… and how does it affect the normalization you are doing here?

(I'm going to try to play with this tomorrow… I was going to wait until Richard's tutorial hit the newsstands, but I am too curious now to wait!)

Thanks!

@MarkJoel60

Accessing the fcurve directly via the data_path isn't possible, it seems… but a simple if structure that tests data_path for the desired pose bone and then checks array_index suffices.
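The if structure described here might look like this minimal sketch. Blender F-curves expose data_path and array_index; the namedtuple stand-ins and the bone name "Hips" are hypothetical, only there so the sketch runs outside Blender. Inside Blender the first argument would be something like an action's fcurves collection.

```python
from collections import namedtuple


def find_fcurve(fcurves, bone_name, prop, index):
    """Return the F-curve animating pose.bones[bone_name].<prop>[index], or None.

    Works on anything whose items carry data_path and array_index attributes.
    """
    wanted = 'pose.bones["%s"].%s' % (bone_name, prop)
    for fc in fcurves:
        if fc.data_path == wanted and fc.array_index == index:
            return fc
    return None


# Hypothetical stand-ins so the sketch runs outside Blender.
FakeFCurve = namedtuple("FakeFCurve", "data_path array_index")
curves = [FakeFCurve('pose.bones["Hips"].location', i) for i in range(3)]
print(find_fcurve(curves, "Hips", "location", 1))
```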

@batFinger: i used the cmu-daz-friendly hip-corrected from here:

> https://sites.google.com/a/cgspeed.com/cgspeed/motion-capture/daz-friendly-release

but I did not run it through “bvhacker”.

Is it right that you always run it through “bvhacker” first?

(Please don't put too much effort into this; it's enough for me to know that there are differences, and that it's not the coding (mine… or yours…).)

For the import I used your “simple_bvh_importer.py”, but with the following modifications (mostly because vector*matrix multiplication has to be in this order since rev. 34xxx, I think):

The diff output:

```diff
> MyFPS = 20
126c127
<                     node.offset = scale*Vector((x,-z,y))
---
>                     node.offset = Vector((x,-z,y)) * scale
128c129
<                     node.offset = scale*Vector((x,y,z))
---
>                     node.offset = Vector((x,y,z)) * scale
164,165c165,168
<         elif status == Frames:
<             addFrame(words, frame, nodes, pbones, scale)
---
>         elif status == Frames: #modified this part to read only 1 keyframe per frame - and skip restpose
>             if t >= frame+1 and t > 0: # this could lose some action at the end, if next fps is not reached
>                 addFrame(words, frame+1, nodes, pbones, scale)
>                 frame += 1
167c170
<             frame += frameTime
---
>             # frame += frameTime
226c229
<                     pb.location = (scale * vec - node.head) * node.inverse
---
>                     pb.location = (vec * scale - node.head) * node.inverse
293c296
<         context.window_manager.fileselect_add(self)
---
>         context.window_manager.fileselect_add(self) #change since rev.34130
295c298,300
<
```
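The modified Frames branch in the diff keeps at most one BVH sample per whole scene frame. The same selection rule as a standalone sketch; the function name is hypothetical, and it assumes frame_time (scene frames per BVH sample) is at most 1, since the loop can add only one key per sample.

```python
def one_key_per_frame(n_samples, frame_time):
    """Return (sample_index, scene_frame) pairs chosen like the modified Frames branch.

    frame_time -- scene frames per BVH sample (scene fps * BVH Frame Time)
    The first sample (t == 0) is skipped, as in the diff.
    """
    keys = []
    frame = 0
    t = 0.0
    for i in range(n_samples):
        if t >= frame + 1 and t > 0:
            keys.append((i, frame + 1))
            frame += 1
        t += frame_time
    return keys


# A 96 fps capture played in a 24 fps scene: 0.25 scene frames per sample.
print(one_key_per_frame(13, 24 / 96))
```

Every fourth sample becomes a key on the next whole frame; samples in between are simply dropped.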

Back to tweaking animations. First (maybe I'm using the wrong words), I don't think “animation in place” means fixing the hip location at (0,0,0) all the time.

It's more like this:

> http://www.biomotionlab.ca/Demos/BMLwalker.html

(I used this kind of animation for the generation in my other script samples; the blend with the script is in my signature, or I may have to look up the correct post again.)

So again, first: what do you call this animation in place (like walking in the air)?

(english is not my first language, sorry)

Next, what I noticed from my tests (am I wrong?): if I bend such an animation along a curve, there will always be some sliding of the foot contacts, not in the walking direction, but from the small turning of the hip bone (= masterbone).
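A back-of-the-envelope estimate of that sliding, assuming the planted foot is carried rigidly by the hip's turn about a vertical axis (real rigs with IK or foot roll will behave differently): a foot at horizontal distance r from the axis moves along a chord of length 2·r·sin(Δθ/2). The function name and numbers are hypothetical.

```python
import math


def foot_slide(foot_offset, yaw_change_deg):
    """Chord length a planted foot travels if it turns rigidly with the hip.

    foot_offset    -- horizontal distance from the hip's vertical turning axis to the foot
    yaw_change_deg -- how far the hip turns about that axis while the foot is down
    """
    return 2.0 * foot_offset * math.sin(math.radians(yaw_change_deg) / 2.0)


# Hypothetical numbers: foot 0.15 units off the axis, hip turns 30 degrees.
print(round(foot_slide(0.15, 30.0), 4))
```

So even a modest turn per step moves the contact by several centimetres at human scale, which is why some sideways slide shows up on tight curves.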

For example, in this test of walking along an up/down curve:

> http://blenderartists.org/forum/showthread.php?t=205784&p=1768763&viewfull=1#post1768763

(the second one with the TUX on the walls)

I did not only change the rotation of the masterbone (= hip); I also did a rotation at the base, vertically at the “ground contact”. It's like leaning the character forward or backward according to the steepness of the current path.
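One way to get the angle for that lean is the slope of the path at the character's position. A minimal sketch, assuming Z is up and the path is available as sampled points (the function name is hypothetical); the result would then be applied as a rotation about a sideways axis through the ground contact.

```python
import math


def lean_angle(p0, p1):
    """Pitch in radians of the path segment p0 -> p1 (Z up); positive when climbing."""
    dx, dy, dz = (b - a for a, b in zip(p0, p1))
    return math.atan2(dz, math.hypot(dx, dy))


# Hypothetical path samples: flat, then a 45 degree climb.
print(math.degrees(lean_angle((0.0, 0.0, 0.0), (0.0, 1.0, 0.0))))
print(math.degrees(lean_angle((0.0, 1.0, 0.0), (0.0, 2.0, 1.0))))
```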

I will look up this sample again, check whether I can get it under the blend upload limit, and post it here, fixed for Blender 2.56 (they changed some things again… keywords instead of the old magic numbers for keyframe_insert).

Finally: What are the pros/cons to use an “animation-in-place”:

cons: one needs to store the travel length and direction to use it.

cons: one always needs to transform the normal animation into such a location-stripped one, and after any later changes one has to do the conversion again.

cons-question: can I add other animation parts in the NLA editor the normal way, e.g. put a “hand-wave” on top, and will it be blended in like with normal animations?

… others …

pros: a longer animation will not consume much memory.

pros: a change in the path only requires recalculating the parent object (maybe a path or an animated empty).

pros: scaling does not change the animation very much (or can I just not see it, or is there really no change?); it scales the size of the armature the same way as the animation (for example, try scaling the circle of batFinger's samplecirclewalk…).

… others …

The attachment generates the walk around a curved path going up/down, with the TUX pictures on the walls. You have to run the script “create_walking” to generate the animation. You can edit the curved path and run the script again to patch the walk onto the curve and see when it breaks; funny things happen if the curve gets too steep up or down…

(and you can change the type of walk … more male, more heavy, more lazy … …)

Attachments

py_walking.blend (145 KB)

Not sure about your modifications, test-dr, given the update I have in post #28, which was supposed to be #22, but I pasted the old one like a fool…

Scale is not a matrix, only a scalar, so its order in the multiplication is not important.

Lastly, for frameTime I'm using

frameTime = bpy.context.scene.render.fps*float(words[2])

i.e. the fps of your scene multiplied by the frame time read from the BVH. Perhaps the frame rate base could be included… does anyone use it? This way I can resample the BVH files way down… I run at 24fps but often use an old version of bvhacker that lets me resample to 20fps with the click of a button… so I get keyframes every 1.2 frames… but that's ok… they needn't be on every frame; forcing them onto every frame would jam up the timing.
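With that formula every BVH sample lands on a fixed, possibly fractional, scene frame. A small sketch of the arithmetic; the function name is hypothetical, start=0 matches frame = 0 in the importer, and the fps_base parameter is the optional frame rate base mentioned here, included on the assumption that the effective scene rate is fps / fps_base.

```python
def key_frames(n_samples, scene_fps, bvh_frame_time, fps_base=1.0, start=0.0):
    """Scene frame for each BVH sample, following frameTime = fps * Frame Time.

    fps_base is an assumption: the effective scene rate is taken as fps / fps_base.
    """
    step = scene_fps / fps_base * bvh_frame_time  # scene frames per BVH sample
    return [start + i * step for i in range(n_samples)]


# BVH resampled to 20 fps (Frame Time 0.05 s), scene at 24 fps.
print(key_frames(4, 24, 0.05))
```

The keys come out 1.2 frames apart, which keeps the original timing instead of squeezing every sample onto a whole frame.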

The biomotion stuff and what you are doing with it looks cool… something I'm definitely going to have a look at. I'm coming at it from a different angle… using the normalised mocaps with some drivers for toe roll etc., which can be applied to the rig quickly because they are not offset at all, and then applied to a path just as quickly.

There is an application to cycles here for sure, but currently for a longer walk I just use a longer mocap.

PS… as for scaling the path in my example… a moot point… it uses follow path parenting, so the rig is parented to the path, and scaling the path will scale the rig, being a child of the circle… I could have used the follow path constraint instead… or my preferred method of using a curve modifier to drive objects along a path.

Ok,

I hope this little blend file shows what I don't know.

How should the animation be set for the curve path's evaluation-time F-curve?

The little blend file contains batFinger's script to strip off the animation movement, in my modified version, which duplicates the action and returns the movement vector.

Then there are two stripped actions to combine, one jump and one walk, plus one simple hand wave, only to test the blending in the NLA.

The NLA editor has those actions combined into one longer action strip.

The path evaluation-time F-curve has some points which nearly suit the animation. It's a short walk, one jump, walking again, and a jump followed by standing still for a short time while waving the hand, then walking the rest.

The bending along the very sharp path curve shows other problems. I made the animation run in one plane only, with no steep ups/downs.

How can I easily calculate the positions for the evaluation-time points? I don't get the relation between the path length and the evaluation-time F-curve entries. And worse, I couldn't even find the path length. I am sure there was such an entry in Blender 2.49… but where is it now?
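One possible relation, stated as an assumption rather than documented behaviour: the curve's evaluation time runs from 0 to its path duration (path_duration in the Python API, 100 frames by default), and the follower covers the path in proportion. Under that assumption, a distance along the path converts to an eval_time value as in this sketch (function name hypothetical; the curve length still has to be measured separately).

```python
def eval_time_keys(distance_keys, curve_length, path_duration=100.0):
    """Turn (frame, distance along path) pairs into (frame, eval_time) pairs.

    Assumes the follower's position is proportional to eval_time / path_duration,
    i.e. eval_time == path_duration puts it at the end of a path of curve_length.
    """
    return [(frame, dist / curve_length * path_duration)
            for frame, dist in distance_keys]


# Hypothetical 20-unit path: walk 5 units, stand still, walk to the end.
print(eval_time_keys([(1, 0.0), (41, 5.0), (61, 5.0), (141, 20.0)], 20.0))
```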

The attached file is for Blender 2.56 rev. >34xxx (maybe I'll need a new update if there are more Python API changes…)

Attachments

action_script2.blend (633 KB)

This is looking cool bananas, test-dr… a little tip: move the curve to 0,0,0, then the rig follows the curve without an offset. Also, having the rig's object origin between the feet makes it walk on the curve.

Here's how I'm approaching this. I'm using a curve modifier instead… attached is a simple example. This way, the speed obtained from the “normalisation” script can be applied while that action is playing. I've always found follow path a bit problematic. However, another way is to play with the evaluation time curve for follow path, which is what you have done… I'll get back to you on this… this may be an easier way to do it… the gradient, or linear rig speed, can probably be applied to this F-curve. Perhaps a combination of the two… the curve modifier removes the need to know the length of the curve, as it seems to give pretty much a one-to-one relationship between the distance the object is moved and the distance the mesh is moved as it is “modified” along the curve.

In the addendum to the normalise script there was this:

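```python
# Excerpt: ob, action and transformVector come from the rest of the normalise script.
print(transformVector)
print(transformVector.magnitude)
print(bpy.context.active_object.name)
# Note: fc is the new Action here, not an F-curve.
fc = ob.animation_data.action = bpy.data.actions.new("linearSpeed")
# Key location Y at 0 on the first frame and at the travelled distance on the last.
ob.location[1] = 0.0
ob.keyframe_insert('location',index=1,frame=action.frame_range[0])
ob.location[1] = transformVector.magnitude
ob.keyframe_insert('location',index=1,frame=action.frame_range[1])
# Straight line between the two keys, continued beyond them.
fc.fcurves[0].keyframe_points[0].interpolation = 'LINEAR'
fc.fcurves[0].keyframe_points[1].interpolation = 'LINEAR'
ob.animation_data.action.fcurves[0].extrapolation = "LINEAR"
```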

Ignore most of it… The code assumes it's going in the Y direction (location[1]); however, transformVector.magnitude / (action.frame_range[1] - action.frame_range[0]), the gradient of the linearSpeed F-curve, gives the straight-line speed to drag the rig after “normalisation”. Make this a custom property on the rig named after the action… this can then be used in the driver for the object that is moving along the curve using the curve modifier… note this handy link http://aligorith.blogspot.com/2011/01/rigging-faq-addedum-info-on-drivers.html … I'm hoping that with this technique the active action in the NLA track can be matched to the property and the value returned to the driver…
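For a two-key linear F-curve, the gradient is just the distance divided by the number of frames between the keys, so a driver only needs the stored speed and the current frame. A sketch of that arithmetic with hypothetical function names; in Blender the speed would be the custom property and the frame the one available to scripted driver expressions.

```python
def linear_speed(distance, frame_start, frame_end):
    """Gradient of the two-key linearSpeed F-curve: units travelled per frame."""
    return distance / (frame_end - frame_start)


def drag_distance(frame, speed, frame_start):
    """Distance along the path at `frame`; with LINEAR extrapolation the
    straight line simply continues past the last key."""
    return speed * (frame - frame_start)


# Hypothetical action: 6 units of travel between frames 1 and 13.
speed = linear_speed(6.0, 1, 13)
print(speed, drag_distance(7, speed, 1), drag_distance(25, speed, 1))
```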

I'm using the same process for a car rig (the curve modifier, that is) and have a floor that moves along so the tyres can be shrinkwrapped to the road surface… The same thing applies here to some degree and has some application to using waylow's footroll driver, for instance… Have the rig parented to the floor, the floor object driven along the curve and shrinkwrapped to the ground, and foot-sole planes parented to the feet and shrinkwrapped to the floor… then some IK could be used to overlay the feet to keep them from digging through… not there yet. And I'm a horror at creating cyclic dependencies… so using constraints may be wiser than parenting.

Hope this makes sense… otherwise you know where I am… lol… hey, it's looking good… you're taking it exactly where I want it to be… I'm still stuck modelling my character's head…

Now again with a handmade calculation of the length of a Bezier curve. It only works with a BezierCurve as the path object, because I still don't know everything about BSplines.
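One way to measure a Bezier path by hand is to sample each cubic segment and add up the chords. A minimal pure-Python sketch, assuming the control points are given as (handle_left, co, handle_right) tuples like Blender's Bezier points (plain tuples here, so it runs anywhere); it ignores cyclic splines and the curve's own resolution setting, and the function names are hypothetical.

```python
import math


def bezier_point(p0, p1, p2, p3, t):
    """Point at parameter t on a cubic Bezier segment."""
    u = 1.0 - t
    return tuple(u * u * u * a + 3 * u * u * t * b + 3 * u * t * t * c + t * t * t * d
                 for a, b, c, d in zip(p0, p1, p2, p3))


def distance(a, b):
    return math.sqrt(sum((y - x) ** 2 for x, y in zip(a, b)))


def segment_length(p0, p1, p2, p3, samples=64):
    """Approximate arc length by summing `samples` straight chords."""
    total, prev = 0.0, p0
    for i in range(1, samples + 1):
        cur = bezier_point(p0, p1, p2, p3, i / samples)
        total += distance(prev, cur)
        prev = cur
    return total


def spline_length(points, samples=64):
    """points -- (handle_left, co, handle_right) per control point, in order.

    Each segment runs co -> handle_right -> next handle_left -> next co.
    """
    return sum(segment_length(a[1], a[2], b[0], b[1], samples)
               for a, b in zip(points, points[1:]))


# A quarter circle of radius 1 using the usual handle length k ~ 0.5523.
k = 0.5522847498
quarter = [((1.0, -k, 0.0), (1.0, 0.0, 0.0), (1.0, k, 0.0)),
           ((k, 1.0, 0.0), (0.0, 1.0, 0.0), (-k, 1.0, 0.0))]
print(spline_length(quarter), math.pi / 2)
```

The chord sum slightly underestimates the true length; more samples tighten it.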

Attached blend-file (blender-2.56 rev.34130)

… from the script, it sets the “speed-fcurve-keypoints” for jump, wait, walk 2 times, jump, wait, walk, wait-till-end…

The BezierCurve can be edited; then the script has to be run again, which calculates the length and sets a new curve eval_time with the new points.

The NLA needs the action strips at the right points… and now it's possible to edit the BezierCurve, subdivide it, let the skeleton go up/down… etc.

Still ERRORS with the creation of the eval_time animation data, and I often ran into the problem of those RED-coloured animation data entries in the action editor and F-curve editor. What are these, and how do I fix/avoid them?

Update: a nice thing to try is this:

Change the “follow-path-constraint” from the armature object to the hip bone in pose mode. The animation will be the same, but you have to set the direction to -Y in the follow-path-constraint.

Then press the space bar, select “bake action”, and bake the action from frame 1 to 280, making it bake all bones (remove the checkmark).

Now you have all the patched animations in one big action. Duplicate the armature, delete all constraints, and set this baked action as its action.

Attachments

action_script2.blend (524 KB)

Update again: it now uses the NLA strips of the armature to calculate the eval-time keyframes for the bezierCurve.

So just move the action strips in the NLA, run the script, and it updates the eval-time entries according to the position of the NLA strips.

(This still doesn't work with strip scaling, but strip repeat works… and of course only for the strips that were processed for animation in place with the script.)
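Deriving the keys from the strips can be sketched as accumulating travel distance strip by strip. A minimal sketch, assuming each strip is reduced to a hypothetical (frame_start, cycle_frames, repeat, distance_per_cycle) tuple; in Blender the first three would come from the NLA strip's frame_start, the action's frame range and the strip's repeat, and the distance from the in-place conversion. The resulting (frame, distance) pairs would still need converting to evaluation time using the path length.

```python
def strip_distance_keys(strips):
    """Cumulative (frame, distance) keys from NLA-like strips.

    strips -- (frame_start, cycle_frames, repeat, distance_per_cycle) tuples sorted
              by frame_start; use distance_per_cycle = 0.0 for waits or waves.
    Strip scaling is not handled, only repeat.
    """
    keys = []
    distance = 0.0
    for start, cycle, repeat, per_cycle in strips:
        if not keys or keys[-1] != (start, distance):
            keys.append((start, distance))  # hold the distance through gaps
        distance += per_cycle * repeat
        keys.append((start + cycle * repeat, distance))
    return keys


# Hypothetical layout: walk twice, wait, walk once more.
strips = [(1, 20, 2, 1.5), (41, 30, 1, 0.0), (71, 20, 1, 1.5)]
print(strip_distance_keys(strips))
```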

Attachments

action_script2.blend (527 KB)

Just to keep some of the OP in the discussion… Hey Richard, I have been on the lookout for the 3D Artist Magazine… so far, no joy. Many of the bookstores around here don't seem to carry it, and those that do still have the Tron issue out… I went online to order it, but they want to ship it (adding as much in shipping as the magazine costs… yikes!)

I’ll keep an eye out for it though…

Yeah, I think it is due out on the 15th, so that is a day away, for what it is worth. I need to find a copy too. I need to get on it. I'll probably have to travel to a city center to find it in a news/magazine shop. I meant to ask the editor if I could get a comp copy, but I forgot. Still time, I suppose. Then I did another one on another subject; I think that is due out next month, but I will have to confirm.