Alright:

1, Working on VFX in large studios does not automatically equal competency. In fact, it's often quite the contrary. Large studios have brute-force pipelines set up, which usually trade performance for being error-proof and not requiring any technical skill from the artists. That's how Arnold-based pipelines mostly work. I have been surprised how many people working in large VFX studios, with shiny titles such as Senior VFX TD, completely lack an understanding of even basic sampling optimization strategies. If those people are supposed to "Technically Direct" anything, then I am scared to even think about what kind of technical skill set non-TD employees possess.

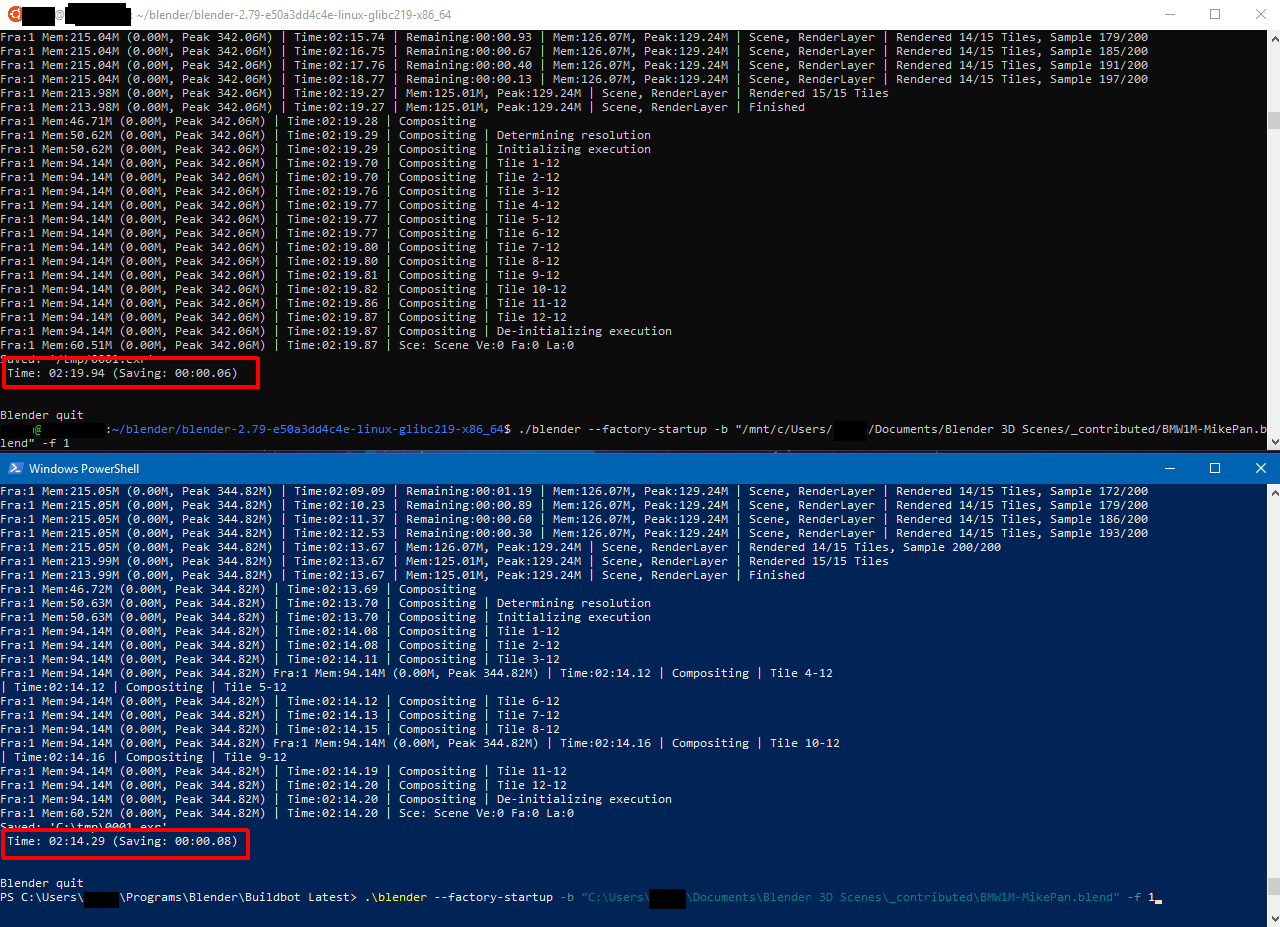

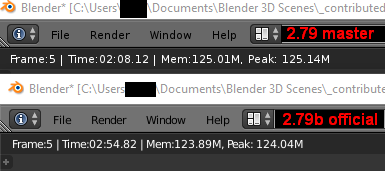

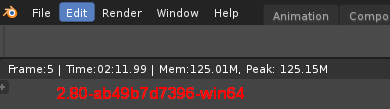

2, I never stated whether I was comparing Cycles to V-Ray and Corona using cached secondary GI or using full path tracing. I actually compared Cycles to Corona using pure PT+PT in Corona, and Corona still performed about 3-4× faster in an identical scene on the CPU. On the GPU, Cycles on a GTX 1080 Ti was slightly slower than PT+PT Corona on an i7-5930K, a 4-year-old 6-core CPU.

3, The days when approximate methods in either Corona or V-Ray caused artifacts, in static or animated scenes, are long gone, which is why these setups are also the defaults these days. In the past 3-4 years I haven't seen a single animated scene, regardless of complexity, that could not be handled by V-Ray's light cache or Corona's UHD cache. Not one. These days, the retracing techniques for these caches have been refined to perfection.

4, The reason Cycles doesn't have cached secondary GI has nothing to do with caching being unable to produce artifact-free results. The main reason is that cached GI is hard to implement on its own, and very, very hard to implement on the GPU. It's possible, as Redshift has proven, but challenging nonetheless. Even large rendering companies have a hard time pulling it off, so it's understandable that for the Cycles dev team, with its limited resources, it's not on the roadmap.

5, What this means in practice is that the only reason not to use a cached solution for secondary GI (possible artifacts) is no longer valid, and there is never any excuse not to use one. This, in turn, gives renderers with such caching methods an even bigger advantage over the others. So while Corona and V-Ray are "just" faster than Cycles in pure PT+PT mode, they are much, much faster in PT+cached mode. In this thread, however, I was initially referring only to the difference between Cycles and Corona or V-Ray in PT+PT mode, as I generally tend to compare apples to apples too.

6, RenderMan, Arnold and Manuka are made to handle complexity at the expense of performance while being error-proof, as I pointed out in my first post. It has absolutely nothing to do with the instability of approximate solutions. For example, ILM's generalist department uses V-Ray heavily, and they are able to get their shit done easily.

7, As for the argument that these methods are fine for archviz but not for animation, I don't even know what to say about that… I've delivered so many animated shots over the past years using approximate methods for secondary GI, and never had issues. I think you are talking about V-Ray from 6 or more years ago.

Now, my main point:

Blender's main demographic, at this point, is smaller studios and individual freelancers, exactly the kind of people who can't afford those giant pipeline-oriented rendering solutions which trade performance for reliability. Sure, we need our renderer to be reliable too, but first and foremost, we need to be able to afford to render at all. Render times are still an issue, especially for animations. Trust me, I know it… this is one of my latest personal projects:

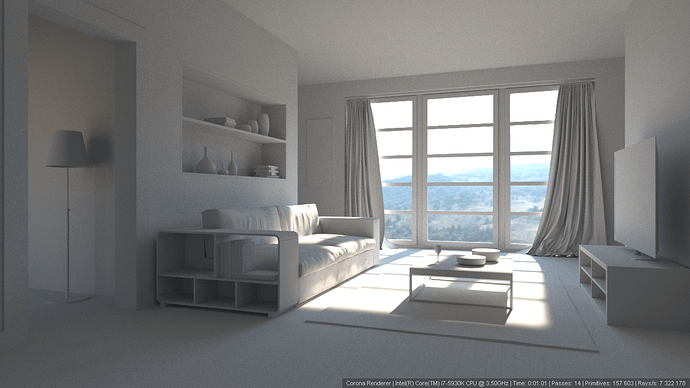

Rendered in Corona with cached secondary GI: 3400 frames at Full HD of a scene mostly covered in very dense, reflective and translucent foliage. It was completely stable. I know there is some small flickering here and there, but that's not from the GI, that's from the antialiasing. Wanna know why? Because I had to render it at my own expense, so I couldn't afford to push the sampling any higher. Believe it or not, at Full HD, each frame of this scene took just 15 minutes on my 4-year-old 6-core CPU. The scene took about 56 GB of memory, so it would never fit on any GPU.

Despite using a renderer as fast as Corona, and getting an incredible render time of just 15 minutes for such complex frames, including in-render motion blur and DoF, I still had to pay over €1500 (about $1750) out of my own pocket to get this scene rendered. If I had tried to do this in Cycles, I would have failed, because I could not have spent something like $7000 just on rendering a personal project. So even these days, it's still borderline impossible for a freelancer to render a short movie of a complex scene on their own. For this to change, we need to take advantage of every optimization available, and get rid of that misguided mentality of "it has to be unbiased" or "caching is cheating".
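The cost figures above can be sanity-checked with a quick back-of-envelope calculation. This is a minimal sketch, not an actual farm quote: it assumes that rendering cost scales linearly with render time, and that Cycles needs the 3-4× render time quoted in point 2 for PT+PT mode. Both are simplifications, and the helper names are made up for illustration.

```python
# Back-of-envelope render budget for the Corona animation described above.
# Inputs from the post: 3400 frames, ~15 min per frame, ~$1750 total cost.
FRAMES = 3400
MINUTES_PER_FRAME = 15
CORONA_COST_USD = 1750

# Assumption: Cycles needs 3-4x the render time (the PT+PT estimate),
# and farm cost scales linearly with render time.
CYCLES_SLOWDOWN = (3, 4)


def total_render_hours(frames: int, minutes_per_frame: float) -> float:
    """Total render time in hours, as measured on the author's 6-core CPU."""
    return frames * minutes_per_frame / 60


def scaled_cost(base_cost: float, slowdown: float) -> float:
    """Cost of the same job if it took `slowdown` times longer to render."""
    return base_cost * slowdown


if __name__ == "__main__":
    hours = total_render_hours(FRAMES, MINUTES_PER_FRAME)
    print(f"Corona: {hours:.0f} machine-hours, ${CORONA_COST_USD}")
    for s in CYCLES_SLOWDOWN:
        cost = scaled_cost(CORONA_COST_USD, s)
        print(f"{s}x slower: {hours * s:.0f} machine-hours, ${cost:.0f}")
```

Run as-is, it prints 850 machine-hours and $1750 for Corona, then $5250 and $7000 for the 3× and 4× cases. The "around $7000" figure corresponds to the 4× end of that range.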