It looks like the BMW scene’s HIP performance with your card is similar to an RTX 2070 Super, which is not bad.

For almost half the price?

Does the AMD accelerated rendering play nicely with the Intel denoiser? I recall having issues combining the two back in the day when using OpenCL.

Although maybe it won’t matter if they don’t end up being able to support Vega hardware anyway.

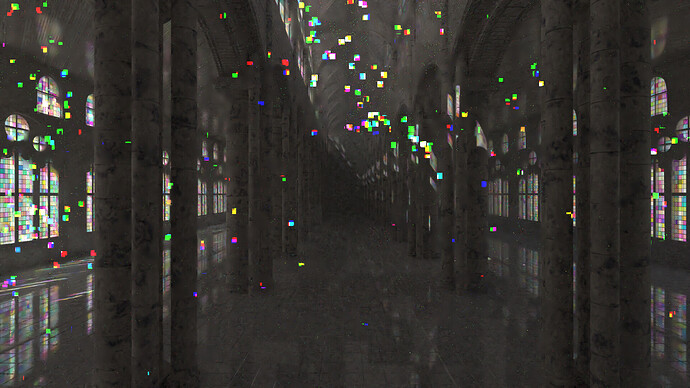

Any issues I had with OIDN when rendering with OpenCL were not actually issues with OIDN, but with the OpenCL drivers themselves, as the render would produce artifacts (depending on driver), confusing the denoiser. They’d look broken even without denoising.

If you’re on Windows, then that’s likely the issue you had as well - I got a friend of mine to render with a Vega 56 and he too had artifacts. He did a CPU+GPU render though, so not all tiles had artifacts. This issue didn’t happen on Linux, which actually has good OpenCL drivers (can’t say the same of the AMDGPU-PRO OpenGL driver though - for workbench/the viewport).

Since my 8GB RX 480 isn’t compatible with the drivers, and since I can’t get a 6800XT for $650 even if I wanted (which I still don’t, I don’t have faith in AMD’s drivers), I got another friend to render the scene with HIP with a 5700 and it didn’t have any artifacts, so it’s likely fine. For now. Of course, HIP is still a WIP. The HIP task shows there were some artifacts on the BMW scene that have been fixed now.

It’s worth noting that the artifacts can also be much less obvious to spot than that picture.

Has anyone gotten benchmark results of the 6900XT?

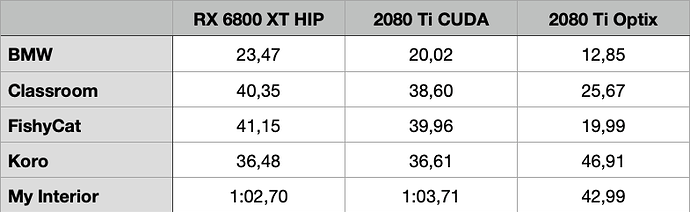

Radeon RX 6800 XT results:

BMW – 23,57

Classroom – 39,76

FishyCat – 42,82

Koro – 36,62

Barcelona – 1:11,83

Victor – 3:01,95

Barbershop – 3:05,66

Italian Flat – 2:01,25

Lone Monk – 5:07,20

Monster under the bed – 1:02,96

Party Tug – 0:48,92
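For anyone who wants to sort or compare these numbers, here is a minimal sketch that converts the times above into plain seconds. It assumes the comma is a decimal separator and the colon separates minutes from seconds; the function name `parse_render_time` is made up for this example.

```python
def parse_render_time(text: str) -> float:
    """Convert 'SS,ff' or 'M:SS,ff' (comma as decimal separator) to seconds."""
    parts = text.strip().replace(",", ".").split(":")
    seconds = 0.0
    for part in parts:
        # Each colon-separated field to the left is worth 60x the next one.
        seconds = seconds * 60 + float(part)
    return seconds


# RX 6800 XT results exactly as posted above.
results = {
    "BMW": "23,57",
    "Classroom": "39,76",
    "FishyCat": "42,82",
    "Koro": "36,62",
    "Barcelona": "1:11,83",
    "Victor": "3:01,95",
    "Barbershop": "3:05,66",
    "Italian Flat": "2:01,25",
    "Lone Monk": "5:07,20",
    "Monster under the bed": "1:02,96",
    "Party Tug": "0:48,92",
}

times = {scene: parse_render_time(t) for scene, t in results.items()}

# Print the scenes from fastest to slowest.
for scene, secs in sorted(times.items(), key=lambda kv: kv[1]):
    print(f"{scene:<22} {secs:8.2f} s")
```

With the times in seconds, comparing against results from other cards is a simple division per scene.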

Overall, at the moment it is at a performance level close to the 2080 Ti with CUDA. But for the Koro scene, the 6800 XT is much faster, even faster than the OptiX version.

Consider that this is still an early development version with no support for hardware raytracing.

Is anyone going to mention the power usage numbers in this graph?

Woooo, I only noticed it now.

Same result as 3080 with 43% less energy. That’s insane!

According to the Opendata page, the HIP version is much faster than the 2080 Ti CUDA BMW render. That is about a 30% difference. The BD page does not list 3.0 benchmarks, so we will need to wait a while for true comparisons from there.

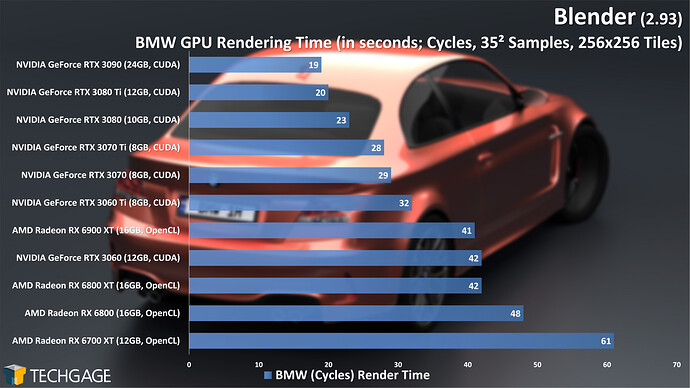

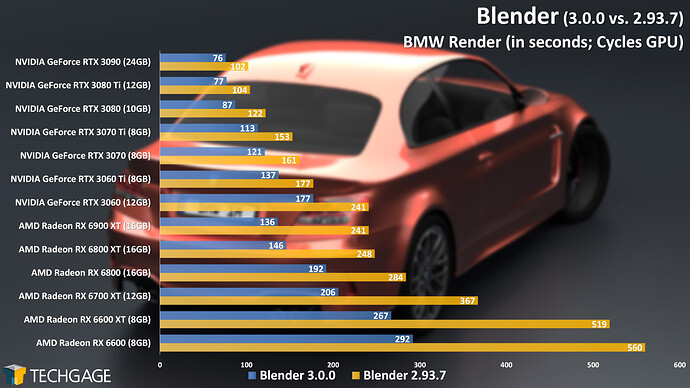

So, all this talk of HIP and the cards got even slower compared to OpenCL in 2.93? Not to mention a bunch of older models got left behind. Perhaps they use different settings between the versions? Even so, Nvidia cards got faster with 3.0, while AMD took another step back. Pretty disappointing to see a 6800 XT only barely competing with a 3060 both in outright rendering and viewport performance.

I don’t understand those BMW27 test values. Are they at a different resolution from what the scene has set up by default?

Blender 3.0 (i9-7900X, ASUS Sage WS X299 10G, 64 GB)

Power consumption:

RX 6800 XT – 160-180 W

RTX 2080 Ti – 220-240 W

So at this point RX 6800 XT HIP performance is similar to 2080Ti/3070 CUDA.
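As a rough sketch of what those power figures mean per render: energy is power multiplied by time, so if both cards finish in about the same time, the energy difference comes straight from the wattage. The example below takes the midpoints of the ranges above and assumes (this is not measured) that both cards take the 6800 XT's posted BMW time of 23.57 s.

```python
def energy_wh(power_watts: float, seconds: float) -> float:
    """Energy in watt-hours for a constant power draw over a duration."""
    return power_watts * seconds / 3600.0


def midpoint(low: float, high: float) -> float:
    return (low + high) / 2.0


rx_6800_xt_watts = midpoint(160, 180)   # from the range posted above
rtx_2080_ti_watts = midpoint(220, 240)  # from the range posted above

# ASSUMPTION: identical render time for both cards (23.57 s is the
# 6800 XT BMW result; the 2080 Ti time here is hypothetical).
render_seconds = 23.57

rx_energy = energy_wh(rx_6800_xt_watts, render_seconds)
rtx_energy = energy_wh(rtx_2080_ti_watts, render_seconds)
saving = 1.0 - rx_energy / rtx_energy

print(f"RX 6800 XT : {rx_energy:.3f} Wh")
print(f"RTX 2080 Ti: {rtx_energy:.3f} Wh")
print(f"Energy saved by the 6800 XT: {saving:.0%}")
```

Under that equal-time assumption the saving is just 1 - 170/230, a bit over a quarter. Real numbers would need the actual render time and a measured power draw for each card.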

BTW: Opendata page is useless.

Thoughts:

Tile sizes? 2.93 should be 512 and I think AMD likes 256?

3.0 should be 0, and I don’t know about HIP.

OptiX power consumption can’t be very high, because renders never turn on the fans and I can play Gwent while it’s rendering. It’s just magic, rendering it out without energy.

Yes, and it’s something I should have made more obvious in the text. I used to keep that information in the graph title, but it just became too crowded up there. I made these changes to each tested project in 2.93 (except Sprite Fright, which I only used in 3.0):

BMW: Changed output resolution to 2560x1440 @ 100% (default is 50%).

Classroom: Changed to GPU compute, 2560x1440, 256x256 tiles.

Still Life: Disabled denoise and noise threshold, 256x256 tiles.

Sprite Fright: Disabled denoise and noise threshold.

Red Autumn Forest: As-downloaded.

Splash Fox: As-downloaded.

Thanks for checking out the article.

Regarding your article: Eevee does not use CUDA/HIP.

Eevee is an OpenGL renderer at the moment. The Blender Institute is working on a Vulkan version, but it is scheduled for late 2022.

Hmm… I’m not 100% sure, but I think you are comparing the older 2.93 tests to the newer ones, which “might” have been changed between the tests, as stated by the author (with the recent tests using a higher resolution/sample count).

What makes me think so is that if I take the older BMW 2.93 test and compare it to this newer one, it would look like everything got slower, when in reality everything got faster (CUDA, OptiX, HIP).

3090 = 19 sec (on older 2.93 tests)

3090 = 76 sec (on newer 3.0 vs 2.93 tests)


So yeah, either my theory is correct, or there might be some regression on that specific classroom test. (I don’t have an AMD card, but maybe someone else can test on both versions and confirm it).
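A quick sanity check of the resolution theory, as a sketch only. It assumes the older test rendered the same base resolution at 50% scale and the newer one at 100%, which the thread does not confirm; the 2560x1440 base is taken from the author's listed BMW change, and `pixel_count` is a helper invented for this example.

```python
def pixel_count(width: int, height: int, scale_percent: int) -> int:
    """Number of pixels rendered at a given resolution percentage."""
    return int(width * scale_percent / 100) * int(height * scale_percent / 100)


# ASSUMPTION: same base resolution in both tests, only the percentage changed.
old_pixels = pixel_count(2560, 1440, 50)
new_pixels = pixel_count(2560, 1440, 100)
pixel_ratio = new_pixels / old_pixels

# 3090 BMW times quoted above, in seconds.
old_time, new_time = 19, 76
time_ratio = new_time / old_time

print(f"Pixel ratio: {pixel_ratio:.1f}x")
print(f"Time ratio : {time_ratio:.1f}x")
```

Doubling the percentage scale quadruples the pixel count, which lines up with the jump from 19 to 76 seconds. That is only consistent with a settings change, not proof of one, since the Blender version and possibly the sample count differ between the two tests as well.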

I used the reference link to the 2.93 results at the top of the article about 3.0. If we take into account that the resolution was increased, that still means all Nvidia cards rendered the harder scene quicker than before, enjoying a very healthy boost in performance. At the same time, AMD dropped down quite a few tiers relative to the competition, making it a rather poor value. How do you drop from the top of the stack (CUDA versus OpenCL) to only matching a heavily cut-down card like a 3060? It means the code is still not as good as CUDA, let alone OptiX.

I regret missing your earlier comment about the discrepancy. Voidium is correct in that the project was adjusted from one article to the next. I updated the test methodology section in the article to better reflect this.

In pre-testing, I did check out the performance using the previous project configurations. With an RX 6700 XT, the Classroom project went from 60s in 2.93 to 34s in 3.0.0. I’ve put together performance articles like these for each Blender release since 2.80, and NVIDIA’s always had the stronger performance - especially with the viewport.
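To put the Classroom numbers in perspective, here is a small sketch of the arithmetic using only the two times quoted above (60 s in 2.93, 34 s in 3.0.0 on the RX 6700 XT). The helper names are invented for this example.

```python
def speedup(old_seconds: float, new_seconds: float) -> float:
    """How many times faster the new render is."""
    return old_seconds / new_seconds


def time_saved(old_seconds: float, new_seconds: float) -> float:
    """Fraction of the old render time that is no longer needed."""
    return 1.0 - new_seconds / old_seconds


classroom_293 = 60.0  # seconds, RX 6700 XT in 2.93
classroom_300 = 34.0  # seconds, RX 6700 XT in 3.0.0

print(f"Speedup   : {speedup(classroom_293, classroom_300):.2f}x")
print(f"Time saved: {time_saved(classroom_293, classroom_300):.0%}")
```

So with the project configuration held constant, the same AMD card did get noticeably faster between the two releases; the apparent drop in the charts is relative to NVIDIA's larger gains.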

AMD has told me that it will continue to eke out additional performance as time goes on, but I feel like it’s going to take another RDNA generation before we see the company become a real threat to NVIDIA.

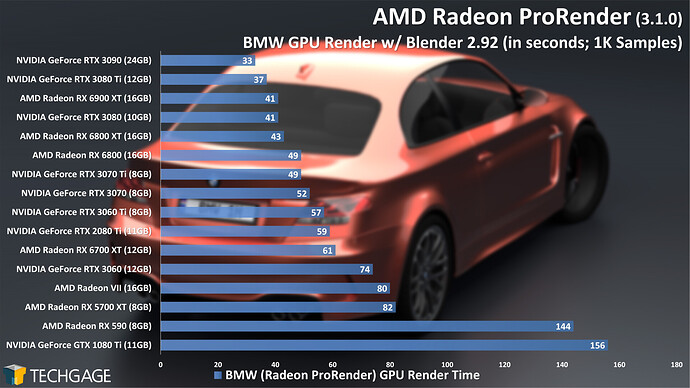

HIP doesn’t currently take advantage of hardware ray tracing, but Radeon ProRender does. Despite that, NVIDIA still manages to win:

That said… the performance is a LOT closer to what we’d hope to see in other render engines.

This leads me to a question (and apologies if this is a bad place for it): it’s been suggested to me that, since OptiX is the de facto choice for NVIDIA RTX owners, CUDA could be skipped over. On one hand, I agree that this might be a fair idea, because if OptiX is indeed faster, and the end result is 1:1 with CUDA, then it’d be a no-brainer to use. On the other hand, I feel like it might make it look like I’m picking on AMD… even if it’s ultimately fair.

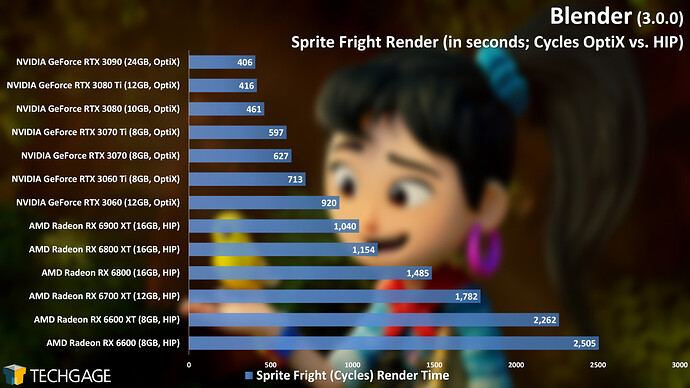

Here’s what Sprite Fright would look like if I compared OptiX to HIP:

Is this a fair way to go? Or should CUDA still be included? Once AMD implements hardware RT in HIP, then would that become a great time to change it up like this? Thanks for any suggestions.

I have been following your articles since 2.80 and they have been a great help in making a purchasing decision. Might I add, they are the best reference available on the internet. It is a bit disappointing to see AMD still so far behind, especially after this long-awaited rewrite of the code. Perhaps I had too much hope. Right now the choice of hardware is rather unfortunate: you either have to spend a small fortune on a slow card, or on a card with a RAM count from 5 years ago. As you said, despite the promises from AMD, any future gains in performance will be insignificant unless ray tracing is activated.

Speaking of ray tracing, I would say that including the CUDA graph is still pretty helpful to those coming from older hardware, say a 1000 series card. Your older articles, using CUDA, may serve as a record of progress, helping the person visualize how much of an upgrade it would be to jump to a 3000 series card under CUDA, and then, again, under OptiX.

The same can be said for somebody with a 6000 series card. Knowing how the cards align on a more “basic” level could establish a starting point from which to extrapolate the potential score under a ray tracing implementation. As you said, in AMD’s own ProRender, the gap between the two companies is a lot narrower. One could expect a similar result under Cycles, perhaps.