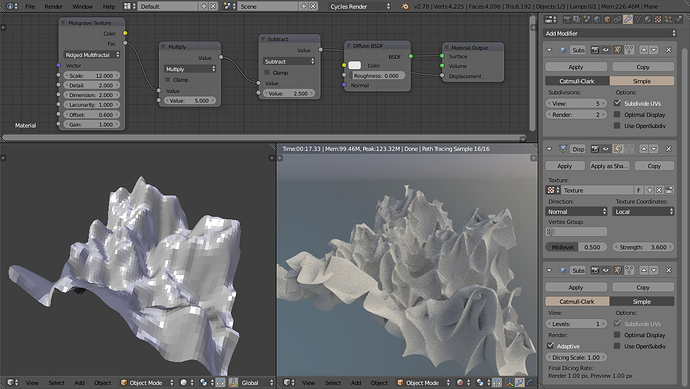

@soul You can apply a first, coarse displacement with a Displace modifier, then add an adaptive subdivision below it in the modifier stack and a displacement in the material nodes:
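Conceptually, both stages push surface points along the normal by a height value. The sketch below assumes the midlevel/strength convention of the Displace modifier (offset = (height - midlevel) * strength, with midlevel 0.5 and strength 1.0 assumed as defaults). `displace` and `two_stage_displace` are hypothetical helpers, not Blender API, and points and normals are plain tuples.

```python
def displace(position, normal, height, strength=1.0, midlevel=0.5):
    """Move one point along its (unit) normal by (height - midlevel) * strength.

    Hypothetical helper: a height equal to midlevel leaves the point in place.
    """
    offset = (height - midlevel) * strength
    return tuple(p + n * offset for p, n in zip(position, normal))


def two_stage_displace(position, normal, coarse_height, fine_height,
                       coarse_strength=1.0, fine_strength=0.1):
    """Coarse pass (modifier on the base mesh), then a fine pass
    (material displacement on the adaptively subdivided surface).

    Simplification: the same normal is reused for both passes; a renderer
    would use the normal of the already-displaced surface for the fine pass.
    """
    coarse = displace(position, normal, coarse_height, coarse_strength)
    return displace(coarse, normal, fine_height, fine_strength)


# A point on a flat, upward-facing plane.
print(displace((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 1.0))  # pushed up by 0.5
print(two_stage_displace((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 1.0, 1.0))
```

The fine pass uses a much smaller strength, which is the usual split: the modifier handles large shapes cheaply, and the dense adaptive geometry only has to carry small detail.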

Thank you, but I can’t render this. Is it limited by my PC’s memory?

I can only render it on a flat plane without the Displace modifier.

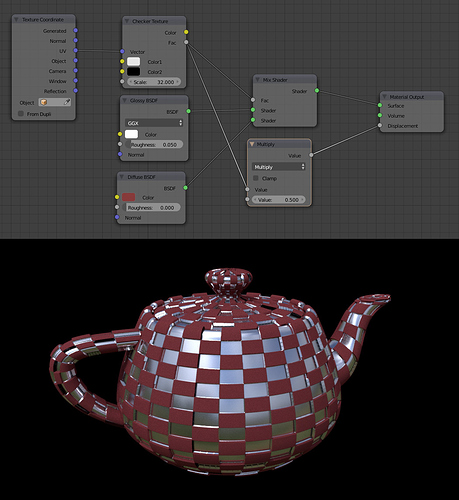

I have another question. When using a checker texture for displacement, how can I assign one material to the darker areas of the texture and another material to the brighter areas?

Yes. Your render is limited by memory. Adaptive subdivision greatly increases the amount of geometry, and so it also makes ray paths more expensive to trace.

You have to limit the number of bounces and use every trick that can decrease memory use.

In Cycles, a material is just a node tree. Mixing two materials amounts to connecting both node trees to a Mix Shader node, with the texture as its factor, inside a single material used by the whole mesh.
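As a rough illustration of what the Mix Shader does with a checker as its factor, here is a minimal sketch. `checker` is a simplified stand-in, not Cycles’ actual Checker Texture code, and the "shaders" are plain RGB tuples rather than BSDFs; both are assumptions for the example.

```python
import math


def checker(u, v, scale=4.0):
    """Simplified 2D checker on UV coordinates: 1.0 on one set of cells, 0.0 on the other.

    Not Cycles' exact Checker Texture; it only reproduces the alternating pattern.
    """
    i = math.floor(u * scale)
    j = math.floor(v * scale)
    return 1.0 if (i + j) % 2 == 0 else 0.0


def mix_shader(shader_a, shader_b, fac):
    """Linear blend in the spirit of a Mix Shader: fac=0 gives A, fac=1 gives B.

    Shaders are stand-ins here: RGB tuples instead of BSDF closures.
    """
    return tuple((1.0 - fac) * a + fac * b for a, b in zip(shader_a, shader_b))


RED = (1.0, 0.0, 0.0)   # hypothetical material where fac = 0
BLUE = (0.0, 0.0, 1.0)  # hypothetical material where fac = 1

for uv in [(0.1, 0.1), (0.3, 0.1)]:
    fac = checker(*uv)
    print(uv, fac, mix_shader(RED, BLUE, fac))
```

Because a checker only produces two values, every point gets exactly one of the two materials. With a grayscale texture the factor falls in between and the materials blend, so you would threshold it first (for example with a Math node set to Greater Than) to get a hard split between dark and bright areas.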

My PC is an old toaster, so unless yours is even worse, the only reason you haven’t been able to render this is that you set one of the subdivision modifiers too high or added the first subdivision to an already high-poly model.

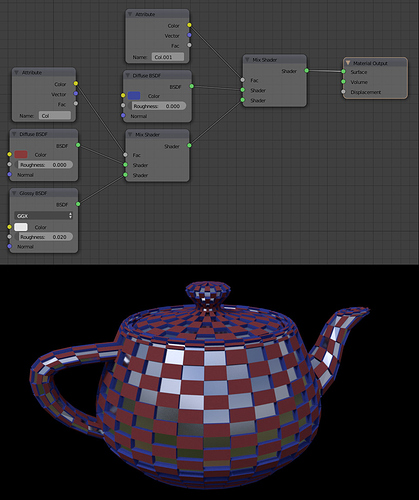

However, unless your topology doesn’t allow it, I strongly advise making the displaced checker by extruding faces in Edit Mode. It’s much lighter on resources, looks cleaner and gives you control over the vertical faces (the side walls created by the extrusion). Here I assigned them an additional blue material. Nothing is plugged into Displacement and there’s no modifier:

There is a function to quickly select faces in a checker pattern: simply select everything and go to Select > Checker Deselect.
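The resource argument is easy to see by counting faces. The sketch below picks every other quad of a regular grid and counts the result of extruding them. `checker_faces` and `face_count_after_extrusion` are hypothetical helpers; Blender’s Checker Deselect works on the mesh’s own element order, not on (row, col) indices, and the count assumes each selected quad is extruded individually (4 new side quads each).

```python
def checker_faces(rows, cols):
    """Grid indices (row, col) of every other quad, like a checkerboard selection.

    Hypothetical helper, not Blender's Checker Deselect operator.
    """
    return [(r, c) for r in range(rows) for c in range(cols) if (r + c) % 2 == 0]


def face_count_after_extrusion(rows, cols, selected):
    """Assumes each selected quad is extruded individually: the original face
    becomes the raised top and 4 new side quads are created around it."""
    return rows * cols + 4 * len(selected)


sel = checker_faces(4, 4)
print(len(sel), face_count_after_extrusion(4, 4, sel))
```

The face count grows linearly with the number of checker cells, whereas subdivision multiplies every face, which is why the extruded version is so much cheaper. The new side quads are also the faces that can get their own material, such as the blue one mentioned above.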

Am I being blind, or is there no way to use microdisplacement with the new Principled shader?

I have ‘Experimental’ on and ‘Adaptive’ enabled in the Subsurf modifier, but I can’t see any options in the Material properties…

You need to set Displacement to True in the material Settings panel.

Ah yes, there it is… Cheers!

I’m hoping the True/Bump/Both displacement setting will end up in a more convenient place. I feel like I have to jump all over Blender to enable that one function.

@bigbad that’s because it’s not one function. Adaptive subdivision is one thing, true displacement is another. And there are cases when you may want to use one without the other.

I saw these renders by Lee Griggs made with Arnold’s volume displacement.

Could this be “volume microdisplacement”, in terms of how it would work in Cycles?

No, it’s not. He is using volume rendering (volume scattering/absorption). You can probably do something similar in Cycles; the only challenge is converting the mesh to a volume texture. The rendering will be quite slow, though.

He is using VDB volumes. These VDB volumes can be displaced as a 3D texture.

Blender only uses OpenVDB grids for smoke, read through the Attribute node.

But the Attribute node has no input socket for a vector, so you cannot displace smoke that way.

You can displace a Point Density texture, which does have a Vector input socket.
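Displacing a 3D texture through its Vector input means sampling it at a perturbed position instead of the original one. A minimal sketch of that idea, assuming a toy sphere field in place of real point-density data and a hypothetical sine-wave offset:

```python
import math


def sphere_density(p, radius=1.0):
    """Toy stand-in for a density field: 1 inside a sphere, 0 outside."""
    x, y, z = p
    return 1.0 if x * x + y * y + z * z <= radius * radius else 0.0


def wave_offset(p, amplitude=0.2, frequency=3.0):
    """Hypothetical displacement vector: a sideways sine wave driven by height z."""
    return (amplitude * math.sin(frequency * p[2]), 0.0, 0.0)


def displaced_density(p, offset_fn=wave_offset):
    """Sample the field at p + offset(p), like feeding a modified vector
    into the texture's Vector socket."""
    d = offset_fn(p)
    return sphere_density((p[0] + d[0], p[1] + d[1], p[2] + d[2]))


# A point just outside the undisplaced sphere falls inside once the lookup is warped.
print(sphere_density((-1.05, 0.0, 0.3)), displaced_density((-1.05, 0.0, 0.3)))
```

Note that the volume appears to move opposite to the offset: adding a positive x offset to the lookup shifts the visible shape toward negative x.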

You can combine a mesh using a volume material with another one by overlapping them in the scene.

You can displace a mesh surface through a displace modifier.

But none of these methods is as simple as deforming a VDB volume.

I don’t know if it is possible with an OSL script. Maybe Cycles only allows VDB grids to be used for smoke.

This is so cool.

I found that OpenVDB will be implemented in Blender.

https://wiki.blender.org/index.php/User:Jehuty/GSoC_2013/Proposal

No. What you found is a wiki page about a GSoC project that happened 4 years ago.

As I said, in 2.79, OpenVDB is used for smoke only.

So this was already done, but not with a Voxel Data texture as in the initial proposal.

There is no practical tool to convert a mesh to a VDB 3D texture, no plan for one and no developer working on it.

With this new patch: CUDA support for rendering scenes that don’t fit on GPU

I wondered whether this also works with microdisplacement. Can someone shed some light on this?

I haven’t tested it yet, but even if it does: as soon as geometry, and not just textures, has to be kept outside GPU memory, calls become very, very slow, because parts of the ray intersection have to wait for new data. Copying that over the bus for something complex like a high subdivision/microdisplacement mesh will probably bring the GPU to its knees.

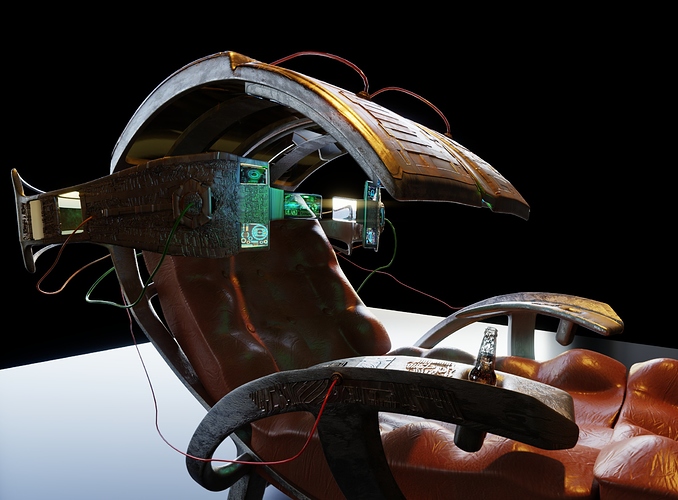

Well, if anyone cares, here are a few things I did using displacement.

and

The sand in the first image made the render use 29 GB of RAM, and building the BVH took about 30 minutes, probably using the page file as well. I could easily have solved this, though, by raising the dicing scale on the adaptive subdivision.
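Raising the dicing scale helps a lot because the micropolygon count falls roughly with the square of the micropolygon size. A back-of-envelope sketch, assuming micropolygons end up about (dicing rate × dicing scale) pixels across and ignoring off-screen geometry, patch edges and back faces:

```python
def estimate_micropolygons(covered_px, dicing_rate_px=1.0, dicing_scale=1.0):
    """Rough on-screen micropolygon count for adaptive subdivision.

    Assumption: each micropolygon is about dicing_rate_px * dicing_scale
    pixels across, so it covers that length squared in pixels.
    """
    edge_px = dicing_rate_px * dicing_scale
    return covered_px / (edge_px ** 2)


full_hd = 1920 * 1080  # surface assumed to cover the whole frame
for scale in (1.0, 2.0, 4.0):
    print(scale, round(estimate_micropolygons(full_hd, dicing_scale=scale)))
```

Under these assumptions, doubling the dicing scale cuts the on-screen geometry by about 4×, and quadrupling it by about 16×, at the cost of coarser detail.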

@Thesonofhendrix Make sure to optimize the geometry for your scene. In the case of this sand, you could remove all the geometry that’s outside the camera frame. Microdisplacement isn’t smart enough to concentrate its subdivision inside the frame. The densest geometry is always the part closest to the camera, and in the case of this sand, that’s the area right below the camera, which isn’t visible in the image. So it’s a big waste of memory.
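Why the area right below the camera is so expensive: with screen-space dicing, the density per square metre grows with 1/distance². The sketch below assumes a pinhole camera, a patch facing the camera and a hypothetical focal length given in pixels; `in_frame` is a simple visibility test of the kind you could use to decide which geometry to delete.

```python
def micropolygons_per_m2(distance_m, focal_px, dicing_rate_px=1.0):
    """Approximate micropolygons per square metre for a patch facing the camera.

    A 1 m patch at distance d spans about focal_px / d pixels per side
    (pinhole model); each micropolygon is assumed dicing_rate_px pixels across.
    """
    side_px = focal_px / distance_m
    return (side_px / dicing_rate_px) ** 2


def in_frame(point_cam, focal_px, width_px, height_px):
    """Pinhole visibility test in camera space.

    The camera sits at the origin looking down -Z (Blender's camera convention);
    points behind the camera or projecting outside the image are rejected.
    """
    x, y, z = point_cam
    if z >= 0.0:
        return False
    u = focal_px * x / -z
    v = focal_px * y / -z
    return abs(u) <= width_px / 2 and abs(v) <= height_px / 2


FOCAL_PX = 1000.0  # hypothetical focal length expressed in pixels

# Sand half a metre away is far denser than sand 20 m away.
print(micropolygons_per_m2(0.5, FOCAL_PX) / micropolygons_per_m2(20.0, FOCAL_PX))

# Keep only the camera-space sample points that land inside a 1920x1080 frame.
points = [(0.0, 0.0, -5.0), (0.0, -3.0, -0.5), (2.0, 0.5, -20.0)]
visible = [p for p in points if in_frame(p, FOCAL_PX, 1920, 1080)]
print(visible)
```

The second point sits well below the view and close to the camera: exactly the kind of geometry that is diced very finely yet never appears in the image.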

@Thesonofhendrix Now that’s a chair! Is this your chair at home, where you watch movies and work in Blender? :)

@ChameleonScales Well, Blender wasn’t smart enough, but now it is, with the addition of an offscreen dicing scale and a dicing camera.
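The idea behind an offscreen dicing scale is to dice geometry outside the view more coarsely. The sketch below is a hypothetical model, not Cycles’ implementation: the rate is unchanged inside the frame and ramps linearly up to `offscreen_scale` times the base rate over an invented `ramp_px` distance beyond the frame border.

```python
def effective_dicing_rate(dicing_rate_px, offscreen_scale, px_outside_frame, ramp_px=500.0):
    """Coarser dicing for geometry outside the camera view.

    Hypothetical model: unchanged inside the frame, then a linear ramp from
    1x at the frame border to offscreen_scale x at ramp_px pixels beyond it.
    Cycles' real falloff may differ; ramp_px is made up for illustration.
    """
    if px_outside_frame <= 0.0:
        return dicing_rate_px
    t = min(px_outside_frame / ramp_px, 1.0)
    return dicing_rate_px * (1.0 + t * (offscreen_scale - 1.0))


for dist in (0.0, 250.0, 1000.0):
    rate = effective_dicing_rate(1.0, 4.0, dist)
    # Micropolygon count scales roughly with 1 / rate**2.
    print(dist, rate, 1.0 / rate ** 2)
```

With a scale of 4 in this model, geometry far outside the frame ends up with roughly 1/16 of the micropolygons it would otherwise get, which addresses exactly the wasted sand below the camera.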

It is also possible to render panoramic cameras with adaptive subdivision.