Your result looks better than Lukas' denoiser (judging by your example), or are those just artifacts of scaling in the bitmap image?

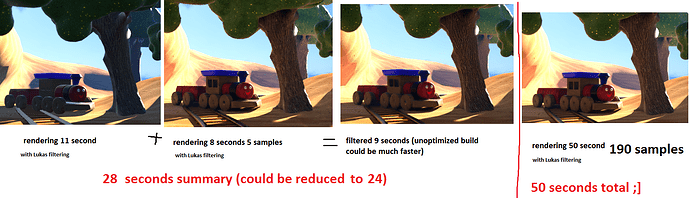

The image doesn’t have any scaling artifacts. The base image for denoising is rendered with low samples (this is the reason it has artifacts). The result is better, but I used a render already processed with Lukas' denoiser as input for my denoiser. Without Lukas' denoiser, using a plain noisy render, my denoiser's result is still smooth but darker. So my denoiser could be an extra step in the denoising process, as an extension to Lukas' denoiser. But it has to be optimized. I don’t know if anybody uses it, so I don’t see a reason to optimize it.

The denoiser uses an OpenGL render as additional information about the scene, but in many cases, such as glass, transparent areas, or hair, that is not enough. It would be much better if the viewport could render these things; then the denoiser could use them.
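To illustrate the general idea of denoising with a clean guide image, here is a minimal sketch of a cross (joint) bilateral filter in plain Python. This is not the denoiser from this thread, whose internals are not described here; it is a standard textbook technique swapped in for illustration. The function name `cross_bilateral`, its parameters, and the grayscale list-of-lists image format are all assumptions made for the example.

```python
import math


def cross_bilateral(noisy, guide, radius=1, sigma_guide=0.1):
    """Smooth `noisy` using similarity weights taken from `guide`.

    Both inputs are 2D lists of floats (grayscale) of identical size.
    `guide` stands in for a clean helper image such as an OpenGL render.
    All names here are hypothetical, chosen only for this sketch.
    """
    height, width = len(noisy), len(noisy[0])
    result = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0.0
            weight_sum = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if not (0 <= ny < height and 0 <= nx < width):
                        continue  # skip neighbours outside the image
                    # Weight depends only on the guide, so noise in the
                    # render itself cannot create false edges.
                    diff = guide[ny][nx] - guide[y][x]
                    w = math.exp(-(diff * diff) / (2.0 * sigma_guide ** 2))
                    total += w * noisy[ny][nx]
                    weight_sum += w
            # The centre pixel always contributes weight 1, so
            # weight_sum is never zero.
            result[y][x] = total / weight_sum
    return result
```

The sketch also shows why glass or hair is a problem: wherever the guide image is missing a feature, the weights treat that region as flat and the filter blurs straight across it.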

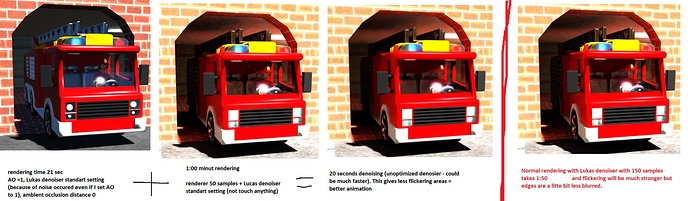

As I pointed out to Lukas some time ago, if you take a render without any lights but with AO strength=1 and distance=0, you get a blazing fast render that retains all the material peculiarities, such as textures, reflections and refractions. That could be useful info for a denoising algorithm like yours.

By the way, about your denoiser: I have the feeling that so far it might be useful only for your style (and even there I see a big loss of detail along object contours). In general people throw hundreds of samples at a render, not just tens as you tend to do. After all, even Lukas' denoiser is meant to “clean the last bit of noise”; it is not expected to magically rebuild things that are not already there in the render.

As ChameleonScales pointed out, it is worth testing with some other scenes rather than your extremely cartoony ones.

Thank you very much. I forgot about the AO settings. I have tried AO=1 (without removing all lights) and this gives a much better result. It removes the problems with transparent areas and maybe even the problems with denoising hair, because the OpenGL view didn’t contain hair. So, in theory, using a normal render with AO=1 as the OpenGL input could fix this, and even bump mapping could now be possible (I haven’t tried to denoise a scene with hair yet). But rendering takes additional seconds because it’s no longer a simple OpenGL view.

edit: I have also tried removing all lights in the scene and setting distance to 0 (as you said). That gives a good result, but there are still some problems with lighting, so I just set AO=1 and the denoising result is still great. One problem is that the result contains light gradients (on the edges of objects) from this AO=1 render.

To render the pseudo-OpenGL scene I had to use Lukas' denoiser, even though I was rendering with AO=1 and dithered Sobol with 0 scrambling distance.

The OpenGL render takes 1 second but lacks the details needed in the scene, so it’s useless. If only I had time to experiment with passes x] / EXR files. When the scene is rendered with AO=1 in addition to the low-sample base image, in many cases the total takes almost as much time as rendering with a higher sample count (with a few exceptions - it can reduce flickering with some settings). So the algorithm is sentenced to death - although I had fun with it :D.

btw. it’s hard to view my attached images - downloading is a better option

Thank you for testing! This algorithm doesn’t work perfectly with standard noisy input, but as I said before, it’s better to render at low samples and prefilter with Blender's denoiser by Lukas; this gives a much better result. But in your scene, Lukas' denoiser alone could probably denoise perfectly.

I have used your images as input for my newest denoiser and here is my result (some details are lost)

Did you use the latest version? (btw the latest version contains a bug - the blur settings are disabled - I have to upload a new version :))

I will update the first post and it will now contain all versions

I have fixed the controls in the denoising tool, so you can now disable blur and reduce the filter ratio for images like the previous one.

The best denoising result comes from using a fast render instead of a simple OpenGL render. By fast render I mean one with AO=1, scrambling distance 0, and dithered Sobol.

here is version 4 of denoiser

floydkids: I have used your OpenGL render and noisy render with the fixed denoiser and here is the result:

Your denoising approach is looking really good right now, but I do wonder if the dependence on the OpenGL data will limit its use to a small subset of the Cycles feature set (if you want the best results, noting the limitations you already acknowledged with reflective/refractive surfaces).

Blender’s OpenGL display for Cycles currently can’t render much in the way of shadows, micropoly-displacement, SSS, hair shading, complex node materials with layers, glass shading, etc. It may not be much of a problem for your scenes since they don’t seem to push the engine that hard, but I can see a myriad of issues with more complex scenes that would not have a clear-cut fix (Blender 2.8 might go a long way in resolving this, but it’s still a ways off from a production-ready state and the most complex effects may still not see OpenGL support).

The main road to improvement may lie in actually looking at the rendered image itself and the passes that now exist because of Lukas’ denoiser (or maybe it could be seen as a polishing off stage for pixels that don’t get processed because of a very low Neighbor Weight value).

Thanks. You’re right. The current version is useless in many cases because of the limitations of OpenGL. Of course there is the option of rendering the frame with AO=1 and other optimizations and using this as the OpenGL input for the denoiser (proposed by lsscpp). This fixes the problems with simple transparency and other things that are only available in render mode, and gives a good result.

But rendering a low-sample image as the noisy input and a higher-sample image with AO=1 as the OpenGL input takes, in the end, only a little less time than rendering a normal higher-sample image. Then there is the additional cost of denoising these images with my algorithm, so all three processes together take almost the same time as a normal render with a higher sample count. Additionally, my denoiser gives lower quality than a normal render with higher samples. This makes it useless in many cases :/.
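The time trade-off described above can be written down as a small calculation. This is only a sketch of the arithmetic; the function names and any timings you feed in are hypothetical, since no measured times are given in this thread.

```python
def pipeline_time(noisy_render_s, guide_render_s, denoise_s):
    """Total seconds for the three-step pipeline:
    low-sample noisy render + AO=1 guide render + denoising pass."""
    return noisy_render_s + guide_render_s + denoise_s


def time_saved(noisy_render_s, guide_render_s, denoise_s, reference_render_s):
    """Seconds saved versus a plain higher-sample render.

    A result near zero (or negative) means the pipeline is not worth it
    on speed alone, before even considering the quality loss.
    """
    total = pipeline_time(noisy_render_s, guide_render_s, denoise_s)
    return reference_render_s - total
```

Measuring the three steps separately for a given scene and plugging them in makes it easy to see which step eats the budget; from the description above, the AO=1 guide render is the likely culprit.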

Of course I could use the passes from Blender, but I gave up :). I started another project (an Android game), so it will be harder to find time to improve or rewrite this algorithm. I am not as good as Lukas at math, so I will leave programming denoisers (unless I have some interesting idea).

Thank you all for the testing and the ideas for improvement :)!

Just curious, rather than using an OpenGL render as the sample image, would it be practical to use a neural network to extrapolate details from 2 images of the same frame but with different seeds?

ex: given 2x 64 sample renders, estimate the ground truth.
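For comparison, here is what two seeds give you without any neural network: a minimal sketch, assuming the two renders are independent, have equal sample counts, and are stored as 2D lists of floats. It is a simple statistical baseline, not the network proposed above, and the name `combine_seeds` is made up for this example. Averaging the two renders behaves roughly like one render with twice the samples, and the per-pixel difference gives a cheap estimate of how noisy each pixel still is.

```python
def combine_seeds(render_a, render_b):
    """Average two equal-sample renders and estimate per-pixel noise.

    Returns (mean, noise), both 2D lists with the input's shape.
    `noise` is |a - b| / 2, the standard error of a two-sample mean,
    assuming the two seeds produce independent estimates.
    """
    mean = []
    noise = []
    for row_a, row_b in zip(render_a, render_b):
        mean.append([(a + b) / 2.0 for a, b in zip(row_a, row_b)])
        noise.append([abs(a - b) / 2.0 for a, b in zip(row_a, row_b)])
    return mean, noise
```

A noise map like this could, in principle, tell a denoiser (learned or not) where to filter harder and where to leave pixels alone. With correlated sampling patterns the independence assumption may not hold exactly.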

As I said before, I am not that good at math, so I probably couldn’t write such an algorithm, but if I am not wrong, “Razorblade” said something about network training, which looks like a similar topic. Anyway, it sounds interesting, but who will have time to do it :).

Very interesting. Thanks