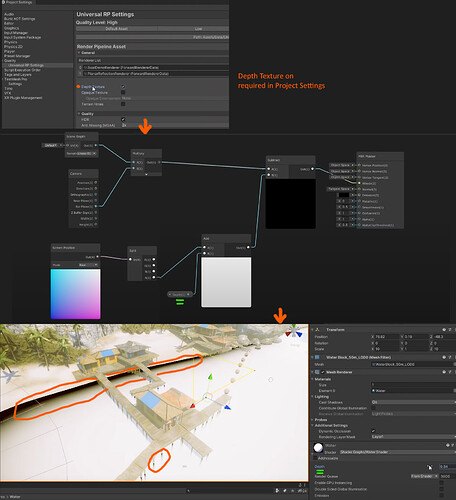

Is it possible to get this kind of depth mask for water planes in EEVEE?

The source of the screenshots I stitched together is https://youtu.be/gRq-IdShxpU?t=94

Thanks!

Texture Coordinates > Generated > Separate XYZ > Z

Yes, I know about it, but it’s not the solution presented here. Every arbitrary object creates a live, fading silhouette beneath the water plane that you can use as a transparency factor. I imagine it’s possible with some vector math on the Camera Data and Light Path nodes, but I’m not that experienced.

Yeah, you can do that too: just use the Object output of the Texture Coordinate node, or use dynamic paint, or use geometry nodes… quite a few options.

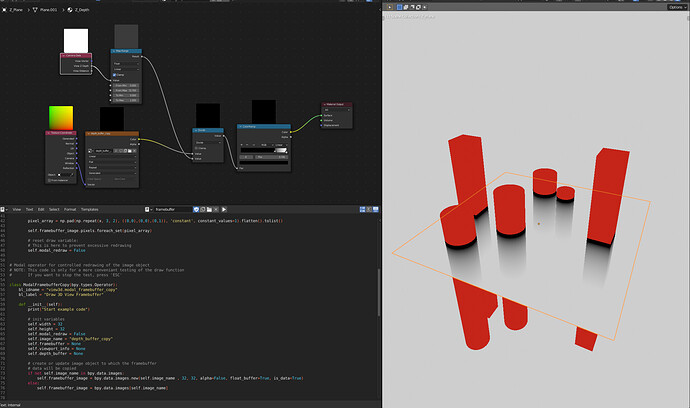

As far as I know, there’s no really usable way to do it in the shader alone. (I tried it a few years ago; see the thread “Z Depth as a input to shader (water shader)”.)

There’s the Camera Data node, but there is no access to scene depth in a shader for surfaces that aren’t included in the depth pass.

Thanks, I had already scanned that post and, not seeing a solution, I kept digging. It seems there may be some progress on exposing the depth buffer to Python, as mentioned here: https://blender.stackexchange.com/questions/177185/is-there-a-way-to-render-depth-buffer-into-a-texture-with-gpu-bgl-python-modules

It looks like it might be possible, then, to loop it back in as a texture. The PyGPU API is a bit of a mystery to me, but streaming textures into a shader via Python in combination with it sounds feasible. I’m not sure how practical it would be performance-wise. (I found a thread with what seems to be render-to-texture through the Python API: “Live Scene Capture to Texture”.)

There’s also the realtime compositor in newer Blender versions, which could generate the mask itself if the mask is only needed outside of the material. Current Eevee renders the compositing passes separately, though. The rewrite should have the passes available at the same time.

Overall, it’s a bit frustrating to have this limitation in material shaders, since several game engine techniques aren’t possible in Eevee because of it (z-feathered alpha, for example).

Edit: Tried the script from your link. The depth from there still includes alpha blend surfaces, but enabling “show backface” gives the depth pass without that surface.

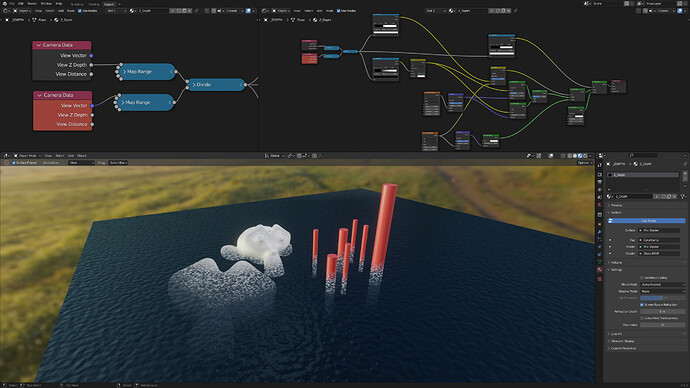

Edit 2:

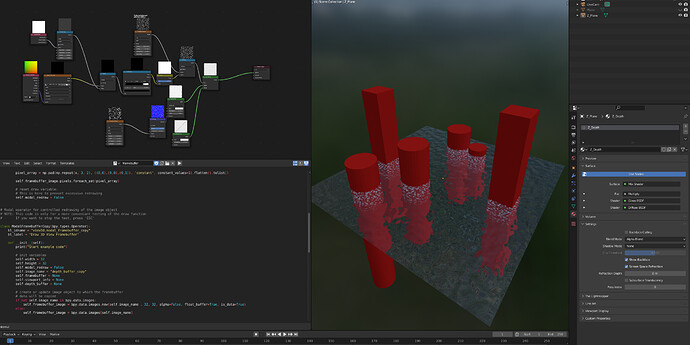

Range is random between the 2 depth buffers, but the idea works. Performance isn’t really interactive though.
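For anyone wanting to see the arithmetic behind the mask, here is a minimal sketch in plain Python (not Blender API code). It assumes the two depth values are raw [0, 1] depth-buffer samples from a standard OpenGL-style perspective projection, which may or may not match what the script reads back. The names `linearize_depth`, `depth_fade`, and `fade_distance` are made up for illustration. Converting both samples to scene units first is one way to get them into a comparable range.

```python
def linearize_depth(d, near, far):
    """Convert a raw [0, 1] depth-buffer value to distance along the view axis.

    Assumption: standard OpenGL perspective projection with the given
    near/far clip distances.
    """
    ndc = 2.0 * d - 1.0  # remap [0, 1] to normalized device range [-1, 1]
    return 2.0 * near * far / (far + near - ndc * (far - near))


def depth_fade(scene_depth, surface_depth, fade_distance):
    """Mask from two linear depths, clamped to [0, 1].

    0 where the geometry behind touches the water surface,
    1 where it is fade_distance or more behind it.
    """
    t = (scene_depth - surface_depth) / fade_distance
    return max(0.0, min(1.0, t))


# Hypothetical samples: water surface and the geometry behind it.
near, far = 0.1, 100.0
water = linearize_depth(0.95, near, far)
floor = linearize_depth(0.97, near, far)
mask = depth_fade(floor, water, fade_distance=2.0)
```

The resulting `mask` can drive transparency directly, and its inverse is the kind of value that would work as a shoreline foam factor.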

And as an input for foam:

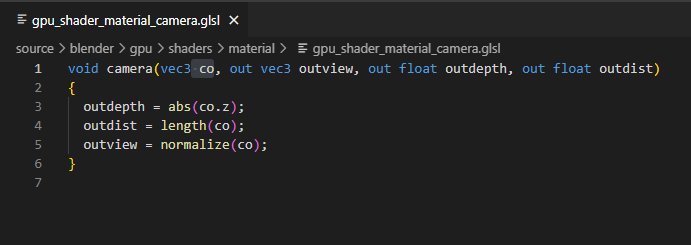

Also, as a side note, here’s the actual difference between the Camera Data node outputs:

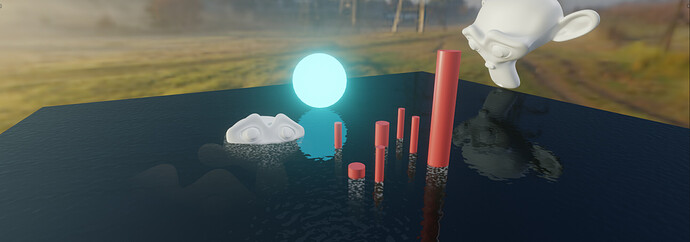
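In numbers, my understanding of the two outputs is roughly the following. This is a plain-Python sketch, not Blender code: the function name `camera_data` is hypothetical, and the shading point is assumed to be given in camera space with depth as a positive value along the viewing axis.

```python
import math


def camera_data(point_cam):
    """Return (view_z_depth, view_distance) for a camera-space point.

    Assumption: point_cam is (x, y, z) relative to the camera, with z
    measured as a positive depth along the viewing axis.
    """
    x, y, z = point_cam
    view_z_depth = z                                 # distance along the view axis only
    view_distance = math.sqrt(x * x + y * y + z * z)  # straight-line distance to the point
    return view_z_depth, view_distance


# A point off to the side of the view axis: same depth, larger distance.
z_depth, distance = camera_data((3.0, 0.0, 4.0))
```

The two values only agree for points exactly on the view axis. Toward the edges of the frame, View Distance grows while View Z Depth stays constant for a flat plane facing the camera.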

I have to say that I’m impressed that you got it working. Thanks a lot; this will have to do for now as a proof of concept.

I also tried modifying the Camera Data node in Blender’s source to give it access to the depth buffer (I hijacked the View Vector output for it), but currently I can only get it to work with materials that use alpha hashed blending and refraction. That also means there are no SSR or planar reflections. There’s no noticeable performance hit in the viewport apart from material compilation times.

Edit:

Made it compatible with alpha blend and planar reflections: