I have tried Stable Diffusion and Midjourney, and they seem able to create quite realistic 3D-looking images with shadows and lit surfaces. What I wonder is whether those A.I. actually understand the objects’ 3D shapes and apply light and shadow according to that 3D information, or whether they have no idea about 3D and just mimic the light and shadow they have seen in similar photos.

I’d be very curious whether even their inventors can answer that question.

As far as I can see, just as with the human brain, no one really understands what exactly is happening inside these large-scale models while they do what they do.

Typically, human artists don’t work algorithmically while they draw or paint either - there’s no virtual 3D model that’s projected into 2D based on mathematical rules, and there’s no raycasting happening. Instead, artists have contextual knowledge about the objects they draw - they just know, if in a fuzzy manner, how those objects ought to look in order to look best (which may even differ from reality).

I reckon generative AI works the same, basically. Only it doesn’t learn from real-world experience, but has learned to associate certain string tokens with certain classes of shapes instead.

Side note: obviously this implies there’s no contextual understanding whatsoever happening on the AI’s side. Hence, from a human perspective, it lacks any ability to add deeper meaning to its creations. Everything in that regard one might spot in AI-generated images is either parroted or a mere byproduct of chance.

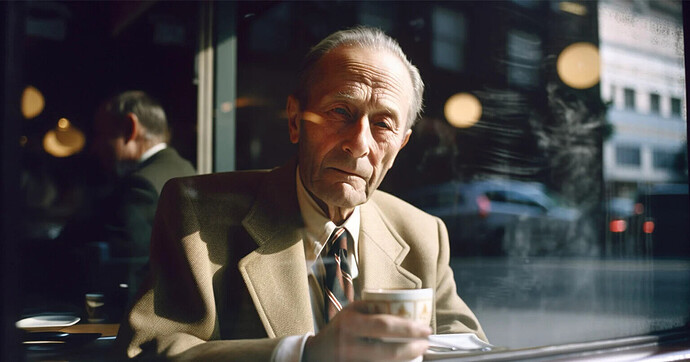

Of course, we humans don’t do the kind of ray-tracing that Blender does, but in a way, don’t we do ray-tracing inside our brains? I mean, if we were to draw an old man near a window where the light is coming from, we don’t picture a flat, paper-like 2D human being perpendicular to our line of sight. We imagine the 3D form of the man and the light as a line, and we reason that the line of light is blocked by things like his head, so the head casts a shadow on the side opposite to where the light is coming from.
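For comparison, here is roughly what the explicit "light as a line, blocked by the head" reasoning looks like when a renderer does it. This is only a minimal sketch, not how Blender is implemented: the head is assumed to be a sphere, the light a single point, and the function names `ray_sphere_distance` and `in_shadow` are made up for illustration.

```python
import math

def ray_sphere_distance(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest sphere hit, or None.

    Assumes the ray origin is outside the sphere (enough for this sketch).
    """
    # Vector from the sphere center to the ray origin.
    oc = [o - c for o, c in zip(origin, center)]
    # Quadratic t^2 + b*t + c = 0 (the t^2 coefficient is 1 for a unit direction).
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None  # the line misses the sphere entirely
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 1e-9 else None  # ignore hits behind the origin

def in_shadow(point, light, center, radius):
    """True if the sphere blocks the straight line from `point` to `light`."""
    to_light = [l - p for l, p in zip(light, point)]
    dist = math.sqrt(sum(v * v for v in to_light))
    direction = [v / dist for v in to_light]
    t = ray_sphere_distance(point, direction, center, radius)
    # Only a blocker that sits between the point and the light casts a shadow.
    return t is not None and t < dist

# Light at the "window" on the left, head at the origin, two points on the right.
print(in_shadow((5, 0, 0), (-5, 0, 0), (0, 0, 0), 1.0))  # directly behind the head
print(in_shadow((5, 3, 0), (-5, 0, 0), (0, 0, 0), 1.0))  # off to the side
```

The question in this thread is whether an image generator ends up with anything equivalent to this internally, or only with the 2D patterns such a computation tends to produce.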

AI image generators start with a 2D noise texture and iterate over it using pattern matching rules. It’s entirely foreign to how humans make things- we do one part at a time, AI does the whole thing at once, many many times. It has no conception of 3D or 2D or anything else- it has no conception of anything, actually; the only difference between the 2D noise and the final image to the AI is that the final image has a higher probability of being “object X in scene Y” than the noise. No AI outputs results with 100% confidence- they usually get up to 98-99% and call it a day.
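To make the "start from noise and refine the whole image many times" idea concrete, here is a deliberately tiny Python sketch. It is not how Stable Diffusion or Midjourney is implemented: `predicted_clean` stands in for what a trained network would predict from the noisy image and the prompt, and the names `toy_denoise_step` and `generate`, the step count, and the strength value are all assumptions made up for illustration.

```python
import random

def toy_denoise_step(image, predicted_clean, strength):
    """Move every pixel a fraction of the way toward the predicted clean image."""
    return [p + strength * (c - p) for p, c in zip(image, predicted_clean)]

def generate(target, steps=50, strength=0.2, seed=0):
    """Refine pure noise into `target` by repeating the same whole-image update."""
    rng = random.Random(seed)
    # Start from pure noise with the same number of "pixels" as the output.
    image = [rng.gauss(0.0, 1.0) for _ in target]
    for _ in range(steps):
        # A real model would *predict* the clean image from the noisy one and
        # the prompt; here the known target is used as a hypothetical stand-in.
        image = toy_denoise_step(image, target, strength)
    return image

target = [0.0, 0.5, 1.0, 0.5]  # a four-pixel "image"
result = generate(target)
print(max(abs(r - t) for r, t in zip(result, target)))  # remaining error
```

Note that every pixel is updated on every pass, which is the "whole thing at once, many times" part. Everything interesting about a real generator lives in the prediction step that this toy replaces with a lookup.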

To be honest, the reason why I wondered this question is that if the A.I. is thinking in 3D, I wondered if it could generate a 3D scene instead of a 2D image as the output. I mean, for example, instead of the 2D image of the old man in a cafe, the A.I. creates a Blender file of that scene.

So, if the A.I. does not understand 3D, it would not be possible to output 3D files?

There are AIs that can make 3D models, but they operate using entirely different instructions and pattern matching than image generators. They’re also not very good at it, most of what they make is broken lumpy blobs.

Again, you have to remember that AI generators are just replicators- what we see as “original” output is one of a nearly infinite number of permutations of millions of individual pieces from their dataset. You can easily test this by finding or training an AI with a limited dataset and giving it instructions outside the scope of its training- it will return complete and utter nonsense, usually in the form of erratic noise (as I said, they start with noise and refine down; if they can’t refine, they just return the noise, which is where things like weird smudged hands come from).

Here’s an excellent example of this:

If Midjourney could “understand objects in 3D”, it could extrapolate what the back of a PS5 controller looks like. However, since it can’t and there are no images of the back of a PS5 controller in the training database, it can’t output a spatially correct controller.

Short answer is no.

Stable Diffusion basically runs noise through a filter until an image comes out; it does this over and over until you get a good enough result.

Generative models are able to create images of subjects in poses they have not seen during training. To me, it is more than obvious that those models conceptually have to learn about shapes, depth and how light interacts with them. I don’t know how else those models would be able to generate new images.

When training neural networks, they sort of take every shortcut they can. They sort of learn the bare minimum of what is required.

What you are asking for is highly specific and quite far away from how neural networks work. What I have seen are experiments where they created NeRFs or something similar from a single image (though my memories are very cloudy about that!). They were able to slightly shift and rotate the view.

Not being able to produce a reasonable (spatially correct) image of the back of a PS5 controller doesn’t mean those models are not learning any kind of 3D understanding. It means the 3D understanding is clearly not perfect.

I think that’s potentially inaccurate, because you’re building an incorrect analogy. What generative AI does has nothing in common with drawing or painting, indeed, because it isn’t an analogy to that. If anything, it’s an analogy of how we invent or dream. If we ask Stable Diffusion or Midjourney to create an image, what we get in return isn’t a painting - there’s no additional translation process in between the image it has come up with in its “mind” and the output, no hand that has to do some (tedious & inaccurate) work. Instead we get an accurate snapshot of what it came up with, a glimpse of the inside of its “head”. If you want to compare, the much better analogy is how we dream, how our brains come up with all kinds of images out of - seemingly - nothing. They never appear on a per-object basis, but are just there.

Who knows, the process that makes them appear might be similar …

In any case, if you want to compare to what artists do, you have to look to those who draw/paint from imagination only.

Obviously one has to work in a linear fashion once one starts to paint or draw, but that’s just due to obvious physical limitations. I’m pretty sure that for most of them ideas manifest themselves in a holistic manner, just like in the dream example - in fact, if you bother to watch some of them work, there are artists who paint/draw all over the place in their “world”, in a seemingly chaotic way, in as nonlinear a fashion as their hands allow.

Indeed.

As a human artist, your ability to fill gaps totally depends on your knowledge of context and your visual library as well.

There already seem to be A.I.-generated videos. Nvidia showed something like a swimming turtle at an event held last month, right? Also, I think I saw an A.I.-generated pizza commercial in which people were moving. Without the A.I. knowing the 3D shapes of things, I wonder how it can create such videos. Is the technology fundamentally different from image-generating A.I.?

AI Models have been trained on 2D pixels. They understand 2D pixels.

What you call 3D is also nothing more than 2D pixels… your screen is flat, right?

So there is NO 3D on computers. Not the way you seem to understand it.

Blender, Max, Maya, ZBrush etc. of course offer amazing ways to work with X, Y and Z. But in the end, all you SEE on your screen or on printed paper is an X,Y 2D image.

So AIs for images (Stable Diffusion, etc.) understand only what they’ve been trained on:

X,Y 2D pixels.

But of course there’s a very thin line between the 2D representation of a sphere and an actual REAL sphere with volume. That’s why we already have AIs that manage to generate 3D meshes from a prompt, which means AIs that will be able to generate a “3D” mesh on a screen (so 2D pixels), and from there control articulated arms to 3D print a REAL, this time, 3D object for the real world.

And only this final object that you can touch with your fingers will really be 3D.
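The "X, Y, Z in the software, X, Y on the screen" point can be shown with a few lines of Python. This is a minimal sketch of a pinhole-style perspective projection, not the camera code of Blender or any other package; the function name `project` and the `focal_length` parameter are assumptions for illustration.

```python
def project(point, focal_length=1.0):
    """Project a 3D point (x, y, z) onto a 2D image plane, pinhole style."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    # Dividing by depth is what makes distant things look smaller.
    return (focal_length * x / z, focal_length * y / z)

near = (1.0, 1.0, 2.0)
far = (2.0, 2.0, 4.0)   # twice as far away and twice as far off-axis
print(project(near))    # (0.5, 0.5)
print(project(far))     # (0.5, 0.5), the same pixel position
```

Two different 3D points land on the same 2D position, so the depth is gone once the image is flat. A model trained only on such images never sees Z directly; whatever it knows about depth has to be inferred from 2D cues like size, occlusion and shading.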

As I understand it, the video generators are the same as the image generators, except they try to keep the previous image in mind when filtering the new image to keep it temporally consistent for each frame.

I also want to point out, since no one seems to anymore, that the AIs we see today are not “true” AI. They don’t think, they don’t have consciousness; they are algorithms performing work based on massive datasets and training.

The image Memm shared isn’t an analogy, it’s literally what the AI does, and that’s all it does; there is no greater understanding behind it. Generate noise → get told the noise contains whatever text prompt is inputted by a human → try to find that text prompt in the noise → iterate until the image isn’t noisy.

With that noise-refining method, it can already generate realistic still images, as you see in my OP. But do you think it will be able to create realistic videos with this method? I mean, people are talking about A.I. soon generating a movie, but to create a realistic video, I kind of feel like the A.I. needs to understand 3D, not just refine 2D noise.

It already can. Obviously it’s not very good yet, but I’m sure it’ll get better. Things are moving really fast.

I wish I could explain the video stuff a bit better but I don’t understand it as well as the image process, as I haven’t really looked into it too much.

There are NeRFs (neural radiance fields), however, which are pretty cool. Half photogrammetry, half neural network…

Those models learn implicitly what is needed for the task they have been trained on. In the case of image generators, it is necessary to have some form of 3D understanding, or we could call it 2.5D or whatever. Without this sort of implicit knowledge, it is not possible to generate those sorts of images.

This is factually wrong. It has been demonstrated many many times that neural networks internally learn concepts that are relevant for the task they have been trained on. They are building up some kind of understanding during the training.