It already works. Many of my users make games and use OIDN for low-sample bakes, and the out-of-the-box speed-up applies there too, so you can stack the average 2x CUDA speed-up with a 4 to 8 times lower sample count, for up to 16x faster rendering.

I’ll make an example.

Hi,

so I made a quick video of what you asked for, at 16 samples. It shows the difference between the standard and denoised version and how I denoised the bake in the compositor. I tried to do the same at 4096 spp with the buildbot build, but after 1 minute it was still at 1%, so I cancelled. This bake took around 30 seconds plus 4 seconds of denoising on E-Cycles at 4096x4096.

Hi, this post may be interesting for you:

Cheers, mib

E-Cycles was really optimized for archviz and animation until now. I’ll add some new features for game developers, archviz UE4 fly-through and people baking in E-Cycles for Eevee GI (as it is a much faster workflow, free of light leaks and also free of all the tricks like creating volumes for GI, allowing much faster changes and better results).

In the meantime, and to get more feedback from professionals who use baking, you can get E-Cycles with 25% off for 24 hours with the code bake. The feature updates to speed up bake-based workflows should come in the coming weeks. By using 16 spp instead of 4096, you can already render 256x faster using CPU or OpenCL, or up to 512x faster using CUDA, which is a good start.
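For anyone who wants to plug in their own numbers, here is a minimal sketch of the arithmetic behind those figures. It assumes bake time scales linearly with the sample count and ignores fixed costs such as scene preparation and the denoising pass itself; `bake_speedup` and `backend_factor` are hypothetical names used only for this illustration.

```python
def bake_speedup(reference_spp, denoised_spp, backend_factor=1.0):
    """Estimated speed-up from baking at fewer samples and denoising.

    Assumption: render time is proportional to samples per pixel.
    backend_factor is an extra multiplier for a faster backend,
    e.g. 2.0 for the average CUDA speed-up quoted in the thread.
    """
    return (reference_spp / denoised_spp) * backend_factor


# 4096 spp reference vs. a 16 spp denoised bake
print(bake_speedup(4096, 16))       # CPU / OpenCL case
print(bake_speedup(4096, 16, 2.0))  # with the 2x CUDA speed-up stacked on top
```

In practice the real gain will be a bit lower, since the denoising pass adds a few seconds per bake regardless of sample count.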

2.8x here

and 2.79+2.8x here

Thanks. An example would be great. Probably similar to

this nice one starting around 18:10 towards baking.

There are also some impressive interactive viewport ones at the beginning.

https://software.intel.com/en-us/videos/open-source-denoising-for-lightmaps

The question is more: when I use e.g. BakeTool, which allows nice Cycles batch baking, can OIDN be called after every direct diffuse and indirect diffuse bake (a common lightmap)?

Thanks for the link. You have a new customer.

There is some really big potential.

If you give me a file of your choice, ready to be baked, I’m happy to show you how fast and clean the denoised version is.

In the meantime, here is the denoised version in the live viewport:

I already optimized the code a bit so that the baking only takes 15sec now instead of 30sec.

Regarding BakeTool, @vitorbalbio has E-Cycles, so maybe he can update his addon to work with it, but I’m also working on an addon to make it a few-click solution, so either way it will work.

That looks great.

It’s hard to strip down a real-life test file for you because everything depends on lots of bake tool sets. But I’ll try.

Some more info. It’s all about light transfer to the interior through an IBL, where MIS is very important.

Bake it down.

Baked Realtime result in Unity is almost the same

like the Cycles realtime preview

So the most important thing is to iterate fast with denoised bakes up to the quality you need, do your lighting and remove errors.

For this, there is a lot of potential in OIDN with low sample counts.

For the final-quality bake I do not really care. It could run up to a week on many GPUs.

Regarding the test you showed: in case the light there is an emissive plane,

is it possible to simply use an environment sky IBL and make a hole in the cube where the light plane is?

Could be 4 holes too. One is harder.

So that the light in your test room comes from outside instead of from the emissive plane.

That’s a fast real-life scenario for archviz or car interiors.

Good afternoon (o;

The AI denoiser is really great for images…but…is there a way to use the denoiser successfully with animation?

Especially with object further away in the scene you see high flickering…or on textures like wood.

This was done with 300 samples and AI denoiser quality set to 3:

I really recommend temporal denoising in the composite for stuff like this!

No great free options AFAIK - I use DaVinci Resolve (which has a free version without temporal denoising) and others have mentioned Neat Video.

Good idea (o;

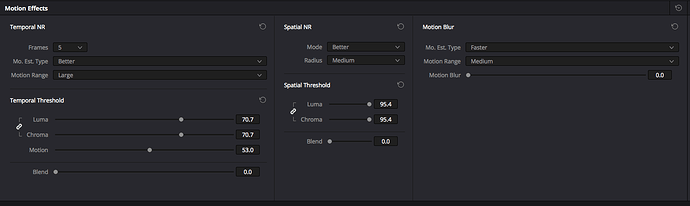

Temporal alone doesn’t do a good job in DSR…added spatial NR as well:

NR off:

NR on:

Settings:

Will upload the video here once it has finished rendering (o;

And also try with lower sample settings…this 1280x720 was done with 800 samples…and takes 75 seconds/frame.

So…Still images → AI denoiser

Animations: DaVinci Resolve Studio Denoiser (o;

Cool! I’m gonna try that workflow out.

Here’s the weird thing - I actually feed the AI denoised output to the temporal denoiser and it tends to eliminate the flickers and patchiness without reducing much detail. It’s worth a shot as well!
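To give a rough idea of why a temporal pass calms down flicker, here is a toy sketch that averages each pixel with the same pixel in the neighbouring frames. It is plain temporal averaging and only makes sense for a static camera; it is not what DaVinci Resolve or Neat Video actually do (those use motion estimation), and `temporal_average` and `radius` are hypothetical names for this illustration only.

```python
def temporal_average(frames, radius=1):
    """Average each pixel over a window of neighbouring frames.

    frames: list of frames, each frame a flat list of pixel values.
    radius: how many frames before and after the current one to include.
    Assumption: static camera and scene, so the same index is the same
    surface point in every frame.
    """
    smoothed = []
    for i in range(len(frames)):
        lo = max(0, i - radius)
        hi = min(len(frames), i + radius + 1)
        window = frames[lo:hi]
        # zip(*window) groups the values of one pixel across the window
        smoothed.append([sum(px) / len(window) for px in zip(*window)])
    return smoothed


# One flickering pixel and one stable pixel over four frames
flickering = [[3.0, 2.0], [9.0, 2.0], [3.0, 2.0], [9.0, 2.0]]
for frame in temporal_average(flickering):
    print(frame)
```

The flickering pixel ends up with a much smaller frame-to-frame swing while the stable pixel is untouched, which is the effect you want on top of a per-frame denoiser.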

You use the AI denoised footage in DSR?

I just took the raw output…

Apparently there was some discussion here about inter-frame denoising…but it will maybe be implemented in Blender 3.8

Is the Intel AI denoiser for still images only or does it offer some inter-frame analysing?

Hi, some examples in the first post show archviz animations without flicker. It may only be a settings problem.

Cheers, mib

The Intel AI denoiser is trained only for stills, but when used well it works for animation, as @mib2berlin said. The following advice allows fast fly-throughs:

- clamping: it is the most important parameter here to make it flicker-free. The lower, the better.

- using faster presets helps a lot too (fast or very fast).

- having a lot of light come directly into the room with a bright HDRi or a simple white emission plane.
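As a rough sketch of the clamping tip, this is how the clamp values could be set from a script. `sample_clamp_direct` and `sample_clamp_indirect` are the standard Cycles scene properties; the helper name and the values 0 and 3.0 are assumptions for illustration, not recommended settings, and the E-Cycles presets are left out because their Python property names are not given in this thread. Note that in Cycles a clamp value of 0 disables clamping, so "the lower, the better" applies to values above 0.

```python
from types import SimpleNamespace


def apply_flythrough_clamping(scene, clamp_direct=0.0, clamp_indirect=3.0):
    """Set Cycles clamping on a scene-like object.

    Inside Blender, pass bpy.context.scene. The default values here are
    hypothetical starting points; 0.0 means clamping is disabled.
    """
    scene.cycles.sample_clamp_direct = clamp_direct
    scene.cycles.sample_clamp_indirect = clamp_indirect
    return scene


# Stand-in object so the sketch also runs outside Blender
demo_scene = SimpleNamespace(
    cycles=SimpleNamespace(sample_clamp_direct=0.0, sample_clamp_indirect=0.0)
)
apply_flythrough_clamping(demo_scene)
print(demo_scene.cycles.sample_clamp_direct, demo_scene.cycles.sample_clamp_indirect)
```

Inside Blender the call would simply be `apply_flythrough_clamping(bpy.context.scene)` after `import bpy`.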

Hi,

I’m not sure I understand what you mean. The example I showed you is exactly what you describe: a cube with a single hole (visible at the top right) lit by a single HDRi. No emission plane, no portal, the most difficult scenario, yet it looks good at 16 spp:

Note that the old denoiser also does a great job on animation. This was rendered in 12 sec per frame on the latest E-Cycles and a single 2080 Ti (1080p, lots of SSS, glass, DOF, etc.):

What preset did you use and what amount of samples?

For me it even takes 10 - 15 seconds to prepare the scene for each frame…

Yes, only the 2.79 version has the persistent data option, which is one of the best options for fly-throughs. It is scheduled for 2.8, but someone else started to work on it. So to avoid doing the job twice, I’m waiting to see whether it gets finished, and working on other optimizations and features in the meantime.

I used 576 spp iirc and there was no preset back then, but it was something like the fast preset, so with very fast it could be reduced even further.

As many don’t believe E-Cycles can be faster than Eevee, here is a link to a video made by Boostclock.com:

Well I never used Eevee…and never will…

Leaves you with a blank screen for ages without knowing what is happening (o;

Hmm…does 2.79 do some denoising on the fly? I don’t see the additional “initialising execution” at the end of each frame…

Anyway…I have to stick with the slow preset as faster presets gave me wrong lighting in the past…like the bathroom/beach scene…nevertheless it is still faster than the master branch (which took me 50 attempts to download RC1 today ;o)

I’ll do some more testing this weekend with your suggestions and the E-Cycles/DSR 16 combo…

I’ve used DSR since version 14, but only for video editing…and now that Fusion Studio is included as well…it’s an even better software package…

I assume denoise-wise there is no difference between the 20190709 version and the last Threadripper version?

PS: Even this mp4 in the link is unplayable with Quicktime player…seems some wrong settings with ffmpeg/avutils during encoding…even vlc on macOS complains…but plays…

Maybe as a side note…this render took 140 seconds on AMD threadripper with latest E-Cycles and 2 * 2080Ti @ 1920x1080, 300 samples, AI denoise set to 3, dunno if the same quality can be achieved much faster:

The AI denoising on the wooden surface looks rather unnatural…some patches just look flat and glossy…