I really hope they get rid of the ridiculous shader compilation times, so I hope it’s more than a “nice” improvement. I still sometimes have to wait up to 2 minutes before I can work on a scene I’ve opened. That’s not great.

I believe they’re aiming to match the features of current Eevee before they get too crazy adding in goodies we’re all waiting for. So SSGI is something that will likely be developed after Eevee-Next is ready for use. Additionally, SSGI is not ideal for some cases, so they’ll probably still keep light probe baking as an option even after SSGI is deployed.

Unless, of course, they take an alternate route for GI: one that doesn’t require baking but also doesn’t risk creating unwanted shadowing artifacts from things outside of screen space.

All of this feels like they’re chasing outdated tech. It would have been OK if EEVEE Next had been ready a year ago, but at this pace, by the time they actually release it with new bells and whistles to differentiate it from the EEVEE we have today, it will be completely outdated and out of step with what other engines can do.

Oh well!

Any news about Parallax Occlusion Mapping (POM) being planned for Eevee?

No, it’s an Extended target, so it hasn’t been and won’t be worked on until all minimum-viable-product features are done.

I think Eevee could also take a look at the toon shaders people have created with it… most people prefer Eevee for toons. I think it would be great to pursue this idea, since Cycles already does realism pretty well.

Yeah, Eevee is more flexible in that respect; the MALT render engine looks super promising too.

Workbench allows for simple but interesting render options too.

The problem with Eevee is feature parity with Cycles: it’s not mandatory, but both engines are almost compatible. Still, since Eevee is probably going to be the weapon of choice for Grease Pencil as well, it will likely get more NPR features…

Would eevee still be on par or ahead of viewports and real time renderers in Maya, Max, Modo, etc?

For that, they need to work on the Shader to RGB node…

I think the plan is to use Gpencil-Next (at the moment basically the current engine) for rendering Grease Pencil and only use Eevee Next for cast shadows.

Nick F.: Is the plan still to use eevee-next for GP 3.0 rendering?

Falk: No, this is not the plan. GP 3.0 will likely use the gpencil-next renderer (currently mostly a port of the old renderer). In order to render shadows for fills the proposed solution is to be able to use Geometry Nodes to convert fills to meshes and then render them using EEVEE and EEVEE materials.

Regressions

- The fix to support Eevee on Intel HD4400-HD5500 on Windows introduced a regression that made Eevee unusable on macOS/AMD using the OpenGL backend. This has been fixed. Thanks to the Blender community for validating that the fix works on the problematic platforms. The fix has been marked for backporting to Blender 3.5.1.

Planning

We spent time during the meeting to go over the current state of all the projects. Here is an overview.

Eevee-next

- Indirect lighting
  - Indirect light baking is working, but it still needs to be hooked up so it is used during drawing/rendering.
  - Expect several weeks of polishing to reach an MVP. More polishing is needed after that, but it will be postponed until all core features have been implemented.
- Volume
  - Expected to land in the upcoming week.
  - Smaller changes are needed afterwards.
- Other
  - SSS has been ported but still needs to be reviewed.
  - SSRT and reflection probes would be prioritised once the core features are available in main.
- Polishing
  - Shadows still require more work to be usable (bugs, performance). We can delay this a bit to focus on other areas first.
  - After the bigger changes have landed, the properties that need to be controlled by users should be clear, and work on the UI/properties can start.

Overlay and selection

- Overlay and selection will be merged into a single engine. The selection will be done by swapping out the overlay shaders with selection shaders.

- The selection shaders won’t use GPU queries anymore; instead they will use atomics to match the previous behavior.

- Initial feedback from Campbell is wanted to validate the approach. After that, the implementation can be extended to other selection types before users can test it.

- Overlay and selection isn’t a requirement for Eevee-next and could also land in a different release.
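The notes don’t say exactly how the atomics are used. As an illustration only, here is a CPU-side C++ analogue of one plausible scheme: rather than issuing one occlusion query per selectable ID, every fragment that passes the depth test atomically increments a counter for its ID, so a nonzero count plays the role of a query reporting “samples passed”. Worker threads stand in for shader invocations. Fragment and count_visible_samples are hypothetical names, not Blender code.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <thread>
#include <vector>

// Hypothetical fragment emitted while drawing with a selection shader.
struct Fragment {
  uint32_t select_id;      // ID of the object/element that produced it
  bool depth_test_passed;  // whether it survived the depth test
};

// Counts, per selection ID, how many fragments passed the depth test.
// Each worker thread plays the role of a group of shader invocations.
std::vector<uint32_t> count_visible_samples(const std::vector<Fragment> &fragments,
                                            std::size_t id_count, unsigned worker_count) {
  assert(worker_count > 0);
  std::vector<std::atomic<uint32_t>> hits(id_count);
  for (std::atomic<uint32_t> &hit : hits) hit.store(0, std::memory_order_relaxed);

  std::vector<std::thread> workers;
  const std::size_t chunk = (fragments.size() + worker_count - 1) / worker_count;
  for (unsigned w = 0; w < worker_count; ++w) {
    const std::size_t begin = std::min(fragments.size(), w * chunk);
    const std::size_t end = std::min(fragments.size(), begin + chunk);
    workers.emplace_back([&, begin, end] {
      for (std::size_t i = begin; i < end; ++i) {
        const Fragment &f = fragments[i];
        assert(f.select_id < id_count);
        if (f.depth_test_passed) {
          // Same role as atomicAdd() on a storage buffer inside a shader.
          hits[f.select_id].fetch_add(1, std::memory_order_relaxed);
        }
      }
    });
  }
  // join() orders all increments before the reads below.
  for (std::thread &t : workers) t.join();

  std::vector<uint32_t> result(id_count);
  for (std::size_t i = 0; i < id_count; ++i) result[i] = hits[i].load(std::memory_order_relaxed);
  return result;
}
```

For fragments {0, passed}, {1, failed}, {2, passed}, {2, passed} with three IDs, the counts are 1, 0 and 2, so IDs 0 and 2 would be reported as hit. The atomics make the result independent of how fragments are split across workers, which is what lets a single pass replace many per-ID queries.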

Workbench

- Workbench-next is almost finished; curve rendering and some tweaks are still to be done.

Grease pencil

- Grease pencil next is handled by the GP team members.

Eevee-next

The previous section was about the overall state. This section is about the developments that have happened since the last meeting.

- The irradiance cache is now stored per object.

- Volumes are working with some limitations. Volumes are implemented as graphics shaders and need to be ported to compute shaders.

- Running Eevee-next on Metal still has some unknowns; the biggest risk is platform compatibility. The SSBO and bind point PR needs to be reviewed and its feedback addressed. Broader platform support could be done after the initial Apple Silicon implementation.

- Supporting Intel iGPUs could be a challenge, and we might also bump the minimum requirement for them to match the Windows/Linux bump for Blender 4.0.

Vulkan back-end

- Initial development was done on the graphics pipeline (#106224 - WIP: Vulkan: Initial Graphics Pipeline - blender - Blender Projects). This includes immediate mode support. The initial development was done to find areas that require engineering. The following areas have been found so far:
  - Some Vulkan commands have to run inside a VkRenderPass, while other commands (including data transfers) cannot run inside a render pass. This requires changes to VKCommandBuffer so it keeps track of what is needed and performs late render pass binding to reduce unneeded context switches.
  - A better internal API is required to support both the graphics pipeline (using VKBatch/VKVertexBuffers) and immediate mode (using VKImmediate/VKBuffers).
  - Reuse temporary buffers to reduce allocation/deallocation. As buffers are constructed with a specific memory profile, they also need to be managed in different pools. The idea is to have a VKReusableBuffer that moves its ownership back to the pool once it has been used. This solution is expected to work together with the submission/resource tracker that was introduced for the push constants fallback.
- A parameter has been added to GPUOffscreen to specify the required texture usages of its internal textures. This was needed to reduce validation warnings in the Vulkan backend when it is used in test cases where the data is read back for validation; by default the host visibility flag wasn’t set. (#106899 - GPU: Add Texture Usage Parameter to GPUOffscreen. - blender - Blender Projects)
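The notes only describe two of these ideas in words: late render pass binding and buffers that return themselves to a pool. The C++ sketch below illustrates both under stated assumptions. LazyRenderPassRecorder, Buffer, BufferPool and ReusableBuffer are hypothetical stand-ins, not Blender’s VKCommandBuffer or VKReusableBuffer; the recorder logs command names instead of calling the Vulkan API, and a Buffer only tracks its size.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Late render pass binding: begin/end a render pass only when a command needs it.
// Records command names instead of calling vkCmd* so the sketch runs anywhere.
class LazyRenderPassRecorder {
 public:
  // Draw-like commands must run inside a render pass.
  void draw(const std::string &what) {
    set_render_pass_active(true);
    log_.push_back("draw " + what);
  }
  // Transfer-like commands (e.g. buffer copies) must run outside a render pass.
  void copy(const std::string &what) {
    set_render_pass_active(false);
    log_.push_back("copy " + what);
  }
  // Close any open render pass before the command buffer is submitted.
  void finish() { set_render_pass_active(false); }
  const std::vector<std::string> &log() const { return log_; }

 private:
  void set_render_pass_active(bool active) {
    if (active == in_render_pass_) return;  // already in the required state
    log_.push_back(active ? "begin_render_pass" : "end_render_pass");
    in_render_pass_ = active;
  }
  bool in_render_pass_ = false;
  std::vector<std::string> log_;
};

// Hypothetical buffer; a real one would own device memory of this size.
struct Buffer {
  std::size_t size;
};

class BufferPool;

// Move-only handle whose destructor hands the buffer back to its pool.
class ReusableBuffer {
 public:
  ReusableBuffer(BufferPool &pool, std::unique_ptr<Buffer> buffer)
      : pool_(&pool), buffer_(std::move(buffer)) {}
  ReusableBuffer(ReusableBuffer &&other) noexcept = default;
  ReusableBuffer &operator=(ReusableBuffer &&) = delete;
  ~ReusableBuffer();
  Buffer &get() {
    assert(buffer_ && "handle was moved from");
    return *buffer_;
  }

 private:
  BufferPool *pool_;
  std::unique_ptr<Buffer> buffer_;
};

// One pool per memory profile; the profile itself is left implicit here.
class BufferPool {
 public:
  ReusableBuffer acquire(std::size_t size) {
    for (auto it = free_.begin(); it != free_.end(); ++it) {
      if ((*it)->size >= size) {  // reuse any free buffer that is big enough
        std::unique_ptr<Buffer> buffer = std::move(*it);
        free_.erase(it);
        return ReusableBuffer(*this, std::move(buffer));
      }
    }
    ++allocation_count_;  // nothing reusable: allocate a new buffer
    return ReusableBuffer(*this, std::make_unique<Buffer>(Buffer{size}));
  }
  void release(std::unique_ptr<Buffer> buffer) { free_.push_back(std::move(buffer)); }
  int allocation_count() const { return allocation_count_; }
  std::size_t free_count() const { return free_.size(); }

 private:
  std::vector<std::unique_ptr<Buffer>> free_;
  int allocation_count_ = 0;
};

ReusableBuffer::~ReusableBuffer() {
  if (buffer_) pool_->release(std::move(buffer_));
}
```

Recording draw, draw, copy, draw yields one begin/end pair around the first two draws instead of one pair per draw, which is the kind of context-switch saving late binding aims for. On the pool side, a second acquire after the first handle goes out of scope reuses the same buffer without a new allocation; keeping a separate BufferPool per memory profile would mirror the note that differently-profiled buffers need separate pools.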

I think most of us have seen the latest videos of Cyberpunk 2077 with path tracing.

As we now have the realtime compositor, I was wondering if it is possible to combine some of Cycles’ path tracing functions with Eevee through the compositor?

Now please don’t get me wrong, I don’t expect the developers to jump on this anytime soon, but I’m just wondering whether something like this is actually possible.

This isn’t the thread for feature requests, this is just a thread for sharing updates to development. For feature requests, you’re welcome to make a request here:

It’s probably going to be complicated, since the two engines are quite different. It would probably mean running both engines at the same time, which means twice the amount of geometry, textures and everything else needed in memory. I’m not sure the two engines are able to share data.

You can do that with the regular compositor by having one scene for Cycles and one for Eevee and combining them. But right now the viewport compositor doesn’t support passes or layers, and scenes are probably the last step in its development; I’m not even sure it’s planned, for the reasons I mentioned above.

So don’t expect a realtime solution here, but maybe at some point Eevee will allow more realtime stuff natively.

Multipass rendering is planned for realtime compositing, but I’m not sure if it’s for a single render engine or many.

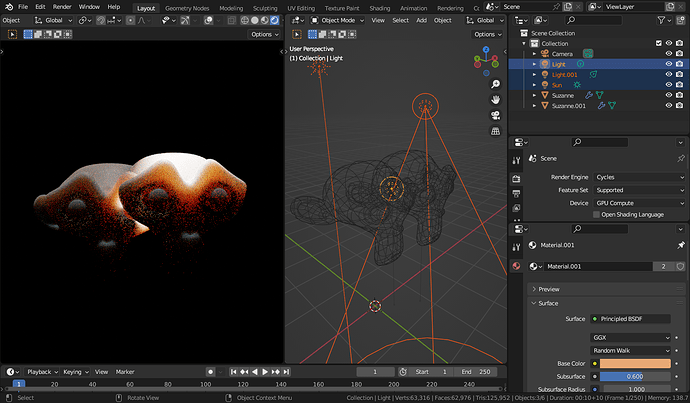

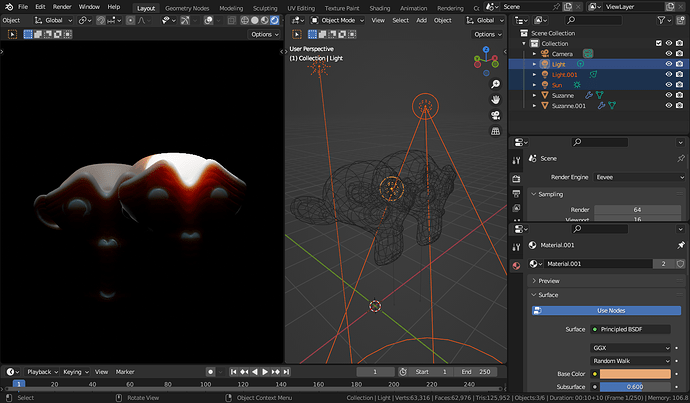

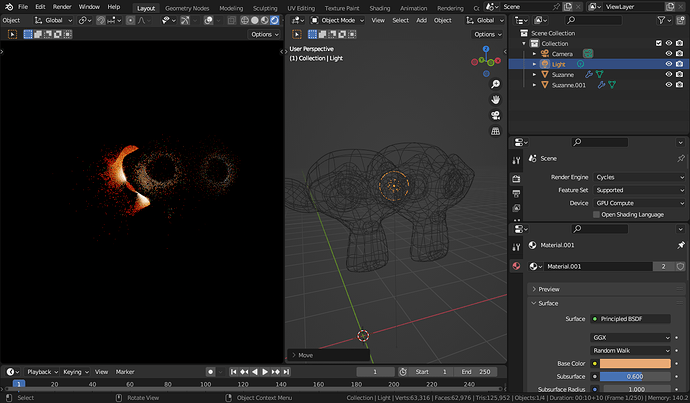

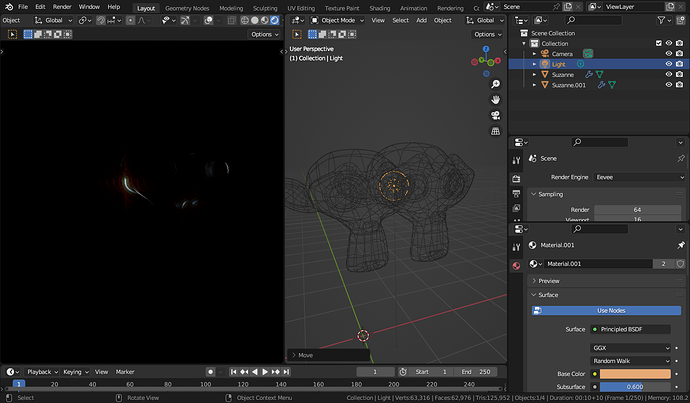

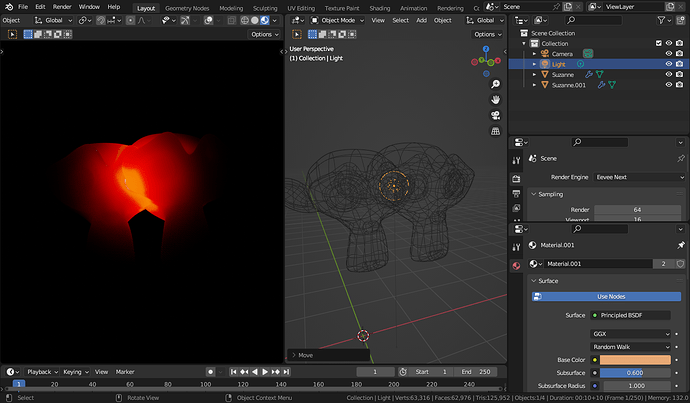

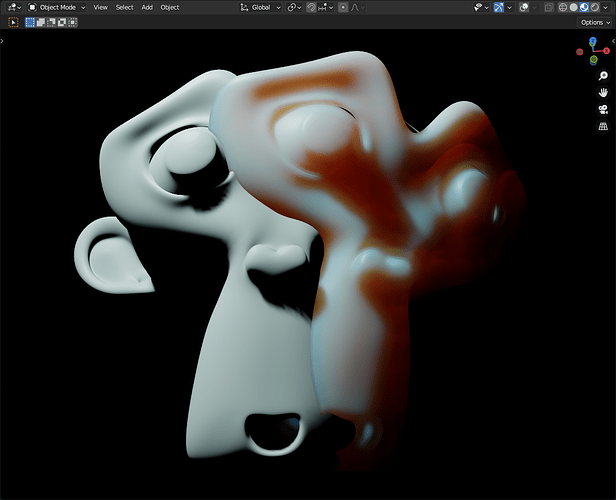

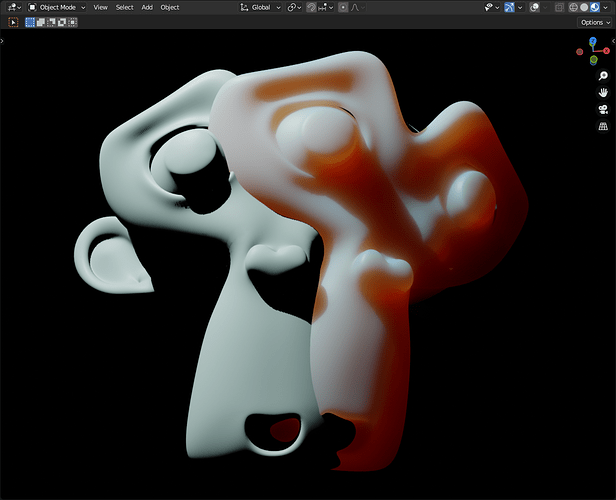

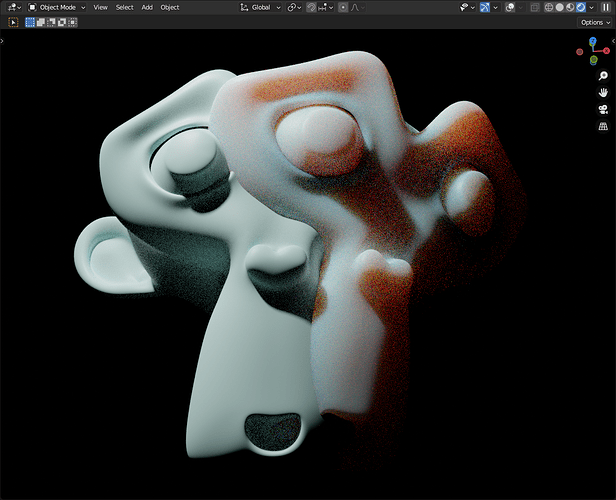

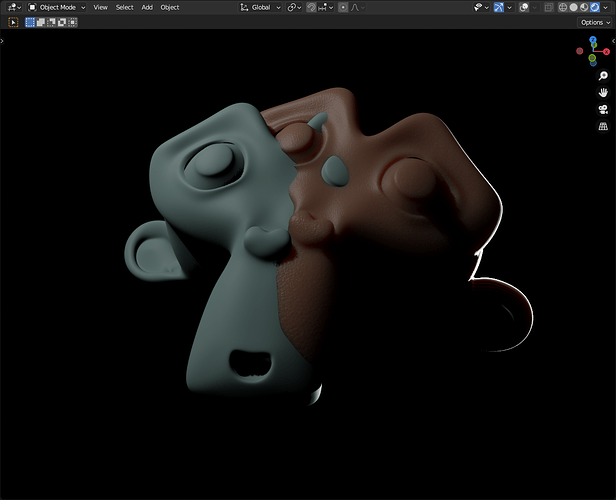

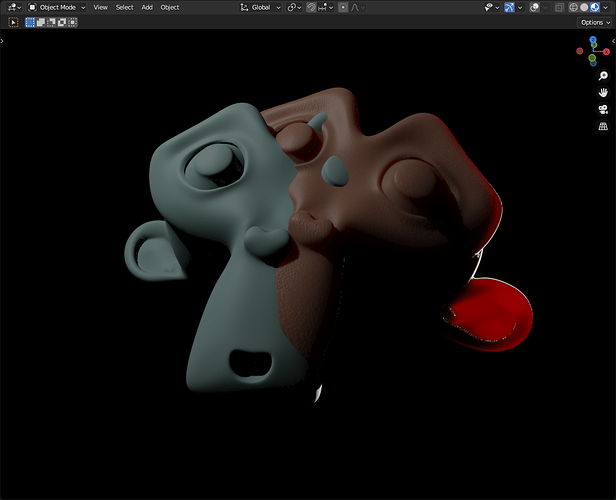

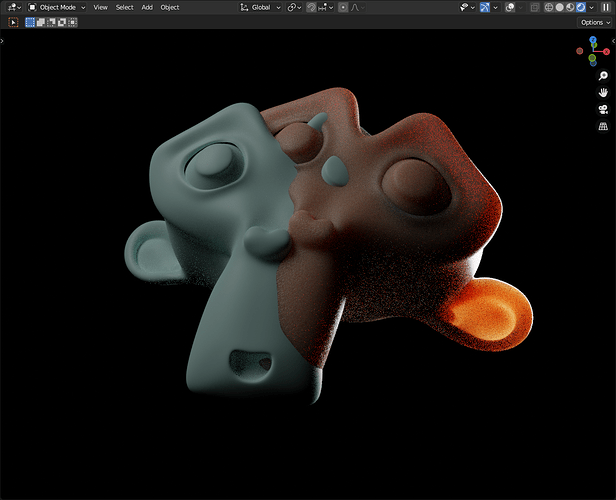

Tried out Subsurface Scattering in Eevee Next. Setup: black background, strong spotlight, a point light inside two meshes, and a weak sunlight.

Cycles

Eevee

Eevee Next

Simpler comparison. The right one is Eevee Next. Disregard the shadow difference, because Next doesn’t have soft shadows yet.

With Cycles.

Interesting, Eevee Next looks far more translucent (assuming it’s the same settings) than either old Eevee or Cycles. More often than not I’m using SSS for subtler skin effects, and I’m not sure I’d always want the whole mesh to be glowing.