I have just read this entire thread.

And what did I learn? That we are all about as close together on this as electrons are to a nucleus, in relative size terms.

One issue I had with Animation Nodes (AN) is how simple the individual nodes are in terms of what each one does. It was often hard to remember how I had built a node tree to do a simple (on the face of it) job. So I experimented with Script Nodes and Expression Nodes. Put this in an Expression Node:

```python
((x + pi) * sin(y-z)) + (sqrt(a**2 + b**2 + c**2) * ((e-d)**3))
```

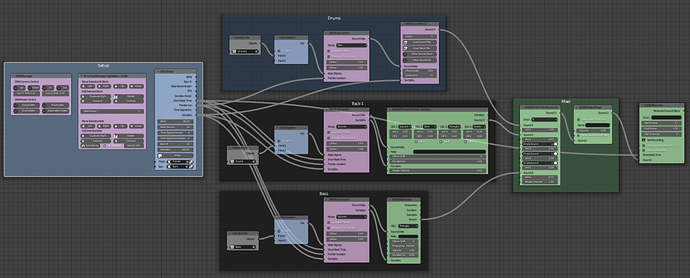
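For a rough answer to the postcard question that follows, this Python sketch walks the expression's syntax tree with the standard `ast` module and counts its binary operators and function calls. It assumes that each of these would need about one native AN math node, so the result is an estimate, not a figure taken from AN itself. It also writes the same formula once as a plain function, the kind of logic that would end up inside a custom node; the AN-specific node registration is left out, and `count_operations` and `combined_formula` are hypothetical names.

```python
import ast
from math import pi, sin, sqrt

# The expression typed into the Expression Node above.
EXPR = "((x + pi) * sin(y-z)) + (sqrt(a**2 + b**2 + c**2) * ((e-d)**3))"

# Names in EXPR that are constants or functions rather than node inputs.
NOT_INPUTS = {"pi", "sin", "sqrt"}


def count_operations(expr: str) -> tuple[int, int, set[str]]:
    """Return (binary operators, function calls, input names) found in expr."""
    tree = ast.parse(expr, mode="eval")
    nodes = list(ast.walk(tree))
    binops = sum(isinstance(node, ast.BinOp) for node in nodes)
    calls = sum(isinstance(node, ast.Call) for node in nodes)
    inputs = {node.id for node in nodes
              if isinstance(node, ast.Name) and node.id not in NOT_INPUTS}
    return binops, calls, inputs


def combined_formula(x, y, z, a, b, c, d, e):
    """Hypothetical helper: the same formula written once as a plain function."""
    phase_term = (x + pi) * sin(y - z)       # offset x, scaled by sin(y - z)
    magnitude = sqrt(a**2 + b**2 + c**2)     # length of the vector (a, b, c)
    return phase_term + magnitude * (e - d) ** 3


binops, calls, inputs = count_operations(EXPR)
print(f"{len(inputs)} inputs, {binops + calls} one-operation nodes")
# prints "8 inputs, 14 one-operation nodes"
print(combined_formula(0, pi / 2, 0, 3, 4, 0, 1, 2))   # about 8.14 (pi + 5)
```

Twelve operators plus two function calls is fourteen single-purpose nodes and their links, against one Expression Node or one custom node.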

A bit extreme, I know (8 inputs), but how many standard AN nodes would you need to do this? Answers on a postcard, please. Then consider that you still have to remember the formula. So where do you go from here? Answer: write a node to do it. I know that very few of us write our own AN nodes; I have personally written over 150 so far. Example tree below; only the grey nodes are native AN:

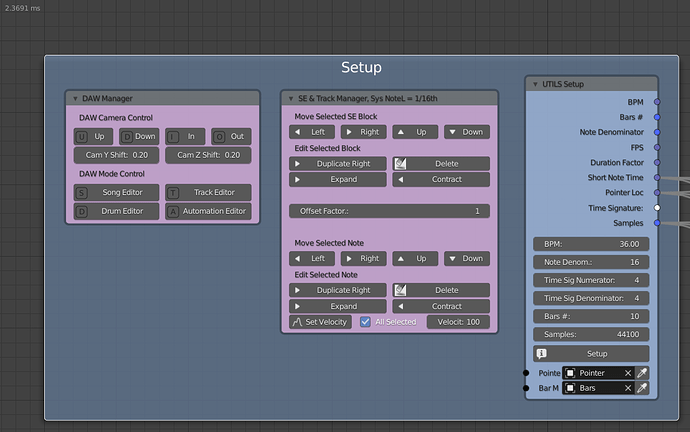

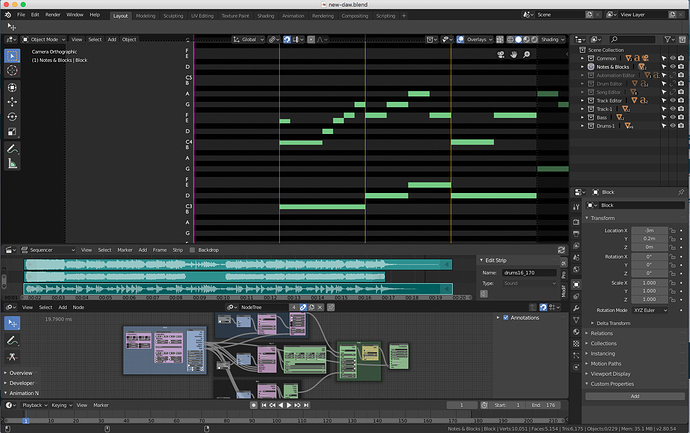

This complete node tree implements a Digital Audio Workstation (DAW) function for three channels in Blender, runs in real time, and writes the result to a .FLAC file. This cannot be done with native AN. The way to make things more “Artist Friendly” is to go big on the nodes themselves, so that each one performs a complex, often-repeated function in a simple fashion. Detail of the Setup Section below:

There are some large nodes in there, but they are easy to use and keep the tree manageable; note the execution time, for those who think complex nodes are bad. This could be done with a modified GUI, but then I would need to build and register a full add-on rather than write some supplementary nodes, and I know which I would prefer.

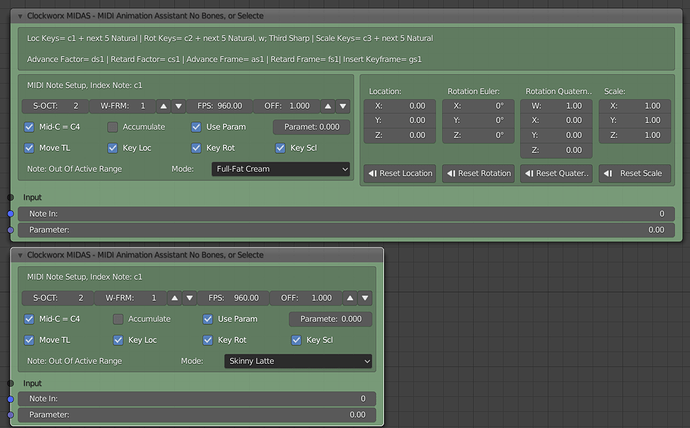

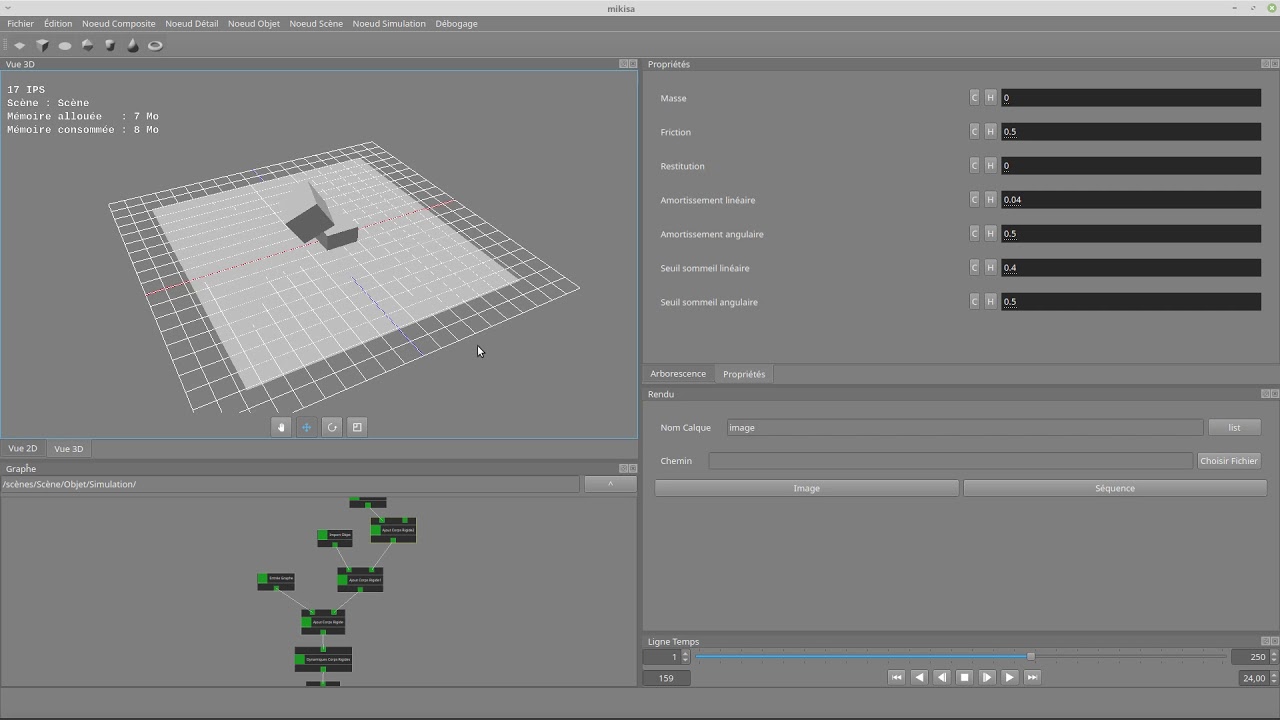
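The post doesn't show what happens inside the DAW nodes, and the real ones are built on the AudaSpace API and write FLAC. As a hypothetical illustration of just the mixing step for three channels, here is a pure-Python sketch: each mono channel (floats in [-1, 1]) is scaled by its own gain, the channels are summed sample by sample, and the result is hard-clipped so it cannot exceed full scale. The channel names and `mix_channels` are made up for the example.

```python
from typing import Sequence


def mix_channels(channels: Sequence[Sequence[float]],
                 gains: Sequence[float]) -> list[float]:
    """Sum equal-length mono channels, each scaled by its gain, then hard-clip.

    Samples are floats in [-1.0, 1.0]; clipping keeps the mix in that range
    so it can later be written to a file without wrapping around.
    """
    if len(channels) != len(gains):
        raise ValueError("need exactly one gain per channel")
    length = len(channels[0])
    if any(len(ch) != length for ch in channels):
        raise ValueError("all channels must have the same number of samples")

    mixed = []
    for i in range(length):
        total = sum(gain * ch[i] for ch, gain in zip(channels, gains))
        mixed.append(max(-1.0, min(1.0, total)))  # hard clip to [-1, 1]
    return mixed


drums = [0.5, -0.5, 0.25]
bass = [0.25, 0.25, -0.25]
lead = [0.5, 0.5, 0.5]
print(mix_channels([drums, bass, lead], [1.0, 1.0, 0.5]))  # [1.0, 0.0, 0.25]
```

Hard clipping is the simplest guard against overload; a real mixer might instead lower the master gain or apply a limiter.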

Here is another example: a single node that operates in two modes, “Full Fat Cream” and “Skinny Latte”:

This single node allows me to control and keyframe a complex animation from my MIDI keyboard, very quickly and very efficiently.
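The post doesn't say how the node receives keyboard input. Assuming the raw 3-byte MIDI channel messages have already been read by some MIDI input library (not shown), this hypothetical Python sketch decodes note-on and note-off messages using the standard MIDI 1.0 status bytes and scales velocity to the range 0.0 to 1.0, the sort of value that could then be keyframed. `NoteEvent` and `decode_message` are made-up names.

```python
from typing import NamedTuple, Optional


class NoteEvent(NamedTuple):
    channel: int      # MIDI channel 0-15
    note: int         # MIDI note number 0-127 (60 = middle C)
    pressed: bool     # True for note-on, False for note-off
    value: float      # velocity scaled to 0.0-1.0, usable as a keyframe value


def decode_message(message: bytes) -> Optional[NoteEvent]:
    """Decode one 3-byte MIDI channel message; return None if it is not a note event."""
    if len(message) != 3:
        return None
    status, note, velocity = message
    kind = status & 0xF0          # high nibble: message type
    channel = status & 0x0F       # low nibble: channel
    if kind == 0x90 and velocity > 0:
        return NoteEvent(channel, note, True, velocity / 127)
    if kind == 0x80 or (kind == 0x90 and velocity == 0):
        # Many keyboards send note-on with velocity 0 instead of note-off.
        return NoteEvent(channel, note, False, 0.0)
    return None


print(decode_message(bytes([0x90, 60, 127])))  # middle C pressed at full velocity
print(decode_message(bytes([0x80, 60, 64])))   # middle C released
```

Treating note-on with velocity 0 as a release matters in practice, because many devices send it instead of a separate note-off message.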

At least a year ago I found that there was no simple way to control Armatures from within AN, so I wrote a “Bone” socket file and several nodes that control bones directly. It works very well and very reliably.

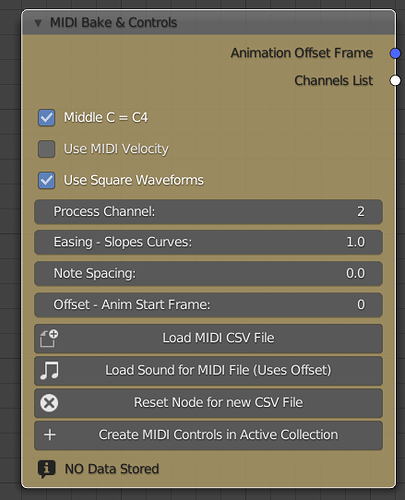

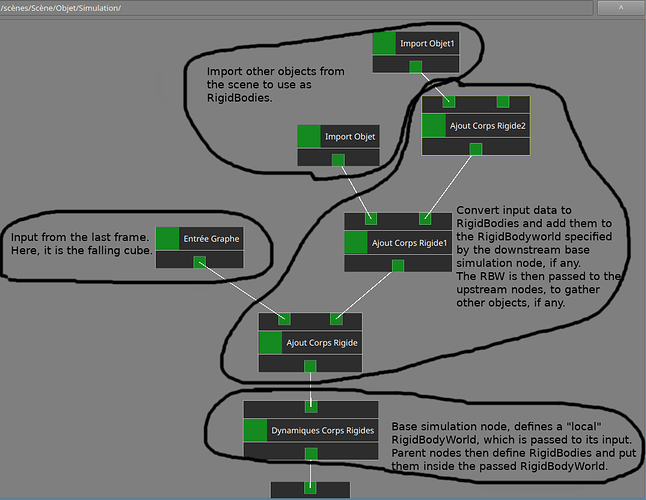

I also built a system that reads MIDI files and animates objects from them. My first music video took me 4 weeks to animate a single instrument over a 2-minute song. One node I wrote takes 0.5 seconds to animate 72,700 note events from a MIDI file for a 4.8-minute song playing 55 different notes on a piano. I rest my case, M’Lud. Here is that one node:

There are 286 lines of code in this node, BTW.
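The node's code isn't shown, so the following is not it. It is a minimal sketch of three small pieces that a MIDI-file-to-animation step typically needs: reading the variable-length delta times used in Standard MIDI Files, converting tick times to animation frames (assuming one constant tempo, whereas real files may contain tempo changes), and pairing note-on/note-off events into notes with a start and end. Chunk parsing, running status and tempo maps are left out, and all function names are hypothetical.

```python
from typing import Iterable


def read_vlq(data: bytes, pos: int) -> tuple[int, int]:
    """Read a MIDI variable-length quantity starting at data[pos].

    Each byte carries 7 bits of the value, most significant first; a set top
    bit means another byte follows. Returns (value, position after it).
    """
    value = 0
    while True:
        byte = data[pos]
        pos += 1
        value = (value << 7) | (byte & 0x7F)
        if not byte & 0x80:
            return value, pos


def ticks_to_frame(ticks: int, ticks_per_beat: int,
                   tempo_us_per_beat: int, fps: float) -> float:
    """Convert an absolute tick time to a (fractional) animation frame.

    Assumes one constant tempo, given in microseconds per beat as in MIDI
    Set Tempo meta events; songs with tempo changes need a tempo map.
    """
    seconds = ticks / ticks_per_beat * tempo_us_per_beat / 1_000_000
    return seconds * fps


def pair_notes(events: Iterable[tuple[int, str, int]]) -> list[tuple[int, int, int]]:
    """Turn (tick, "on"/"off", note) events into (note, start_tick, end_tick) notes."""
    open_notes: dict[int, int] = {}
    notes = []
    # Sorting by (tick, kind, note) puts "off" before "on" at the same tick,
    # so a note that ends and restarts on one tick is closed before reopening.
    for tick, kind, note in sorted(events):
        if kind == "on":
            open_notes[note] = tick
        elif kind == "off" and note in open_notes:
            notes.append((note, open_notes.pop(note), tick))
    return notes


print(read_vlq(bytes([0x81, 0x00]), 0))          # (128, 2)
print(ticks_to_frame(960, 480, 500_000, 24))     # 24.0: 2 beats at 120 BPM = 1 s
events = [(0, "on", 60), (480, "off", 60), (480, "on", 60), (960, "off", 60)]
print(pair_notes(events))                        # [(60, 0, 480), (60, 480, 960)]
```

Once notes have start and end frames, keyframing an object per note is a loop over that list, which is why a single node can handle tens of thousands of events quickly.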

So we should not prejudge Everything Nodes until we see it. Very complex tasks can be written into very easy-to-use nodes, if someone is prepared to do the donkey work, as I am. For the DAW suite I have written a shed load of nodes using the AudaSpace API, including Sound Generators, Synthesisers, many Sound Effects and so on. It is possible to make a node-based system that is easy to use, does not look like a mess of spaghetti, and is very fast and efficient.
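The AudaSpace API has its own sound generators; this pure-Python sketch does not use it and is only meant to show the arithmetic behind a simple sound generator node: converting a MIDI note number to an equal-temperament frequency (note 69 = A4 = 440 Hz) and filling a buffer with sine samples. The function names and defaults (48 kHz sample rate, amplitude 0.5) are assumptions for illustration.

```python
import math


def note_to_frequency(note: int) -> float:
    """Equal-temperament frequency of a MIDI note number (69 = A4 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def sine_wave(frequency: float, duration: float, rate: int = 48_000,
              amplitude: float = 0.5) -> list[float]:
    """Generate a mono sine tone as float samples in [-amplitude, amplitude]."""
    count = int(duration * rate)
    step = 2 * math.pi * frequency / rate   # phase advance per sample
    return [amplitude * math.sin(step * n) for n in range(count)]


tone = sine_wave(note_to_frequency(60), duration=0.5)   # middle C for half a second
print(len(tone), round(note_to_frequency(60), 2))
```

Each octave doubles the frequency (12 semitones up), which is why the exponent is divided by 12.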

Just my two cents' worth, and I am sure this will kick the hornet’s nest nicely.

Cheers, Clock.