Warning! A wall of text and a lot of rambling from an old dude…

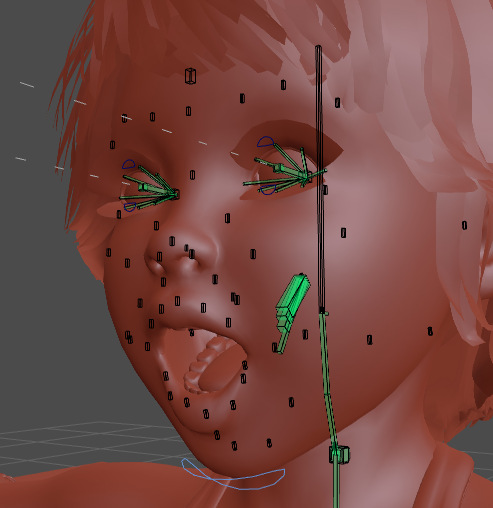

The Sintel rig was completely driven with shapekeys. Depending on the version you were looking at, it could have had custom properties driving the shapekeys in the N panel, or shapekeys driven by bones with custom shapes and drivers on the face.
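For what it's worth, here is a rough sketch of what a driver between a control and a shapekey boils down to. It is plain Python, not Blender API code, and the function name, the `travel` distance and the 0 to 1 range are all assumptions I made up for illustration. In Blender you would set this up as a driver on the shapekey's value instead.

```python
def shapekey_value(control_offset, travel=0.1, lo=0.0, hi=1.0):
    """Map a control's offset (bone location or custom property) to a shapekey value.

    Hypothetical helper: 'travel' is how far the control moves to go from
    shape fully off to shape fully on. The result is clamped to [lo, hi].
    """
    raw = control_offset / travel
    return max(lo, min(hi, raw))


# Control moved halfway along its travel: shape is half on.
half_on = shapekey_value(0.05)

# Control pushed past its travel: the value is clamped, shape stays fully on.
fully_on = shapekey_value(0.2)

# Control pulled the wrong way: shape stays off.
off = shapekey_value(-0.1)
```

Whether the input is a custom property in the N panel or a bone on the face, the idea is the same: one number in, one shapekey value out.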

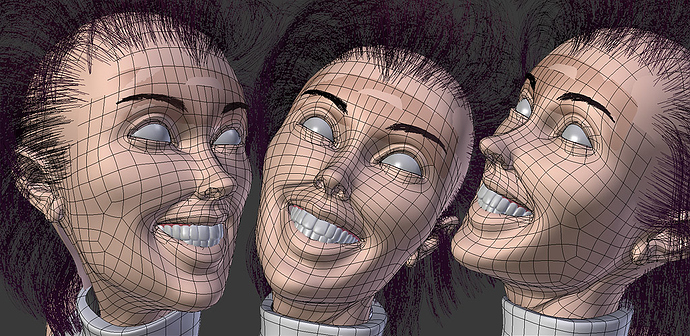

The action I think you were looking at in the video was simply a set of keys going from shapekey to shapekey to test whether the shapes would work well together. Because shapekeys blend linearly (each vertex travels in a straight line from one shape to the next), it can sometimes be a pain to “morph” from one to another and still get a good deformation in between.
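To show what I mean by linear, here is a tiny sketch in plain Python (no Blender needed). The `blend` function and the single made-up vertex are just assumptions for illustration. Picture a point on an eyelid or jaw that should swing through an arc: the basis and the target shape both sit on the arc, but the halfway blend cuts straight across it.

```python
import math


def blend(basis, target, value):
    """Linear shapekey blend per vertex: basis + value * (target - basis)."""
    return [
        tuple(b + value * (t - b) for b, t in zip(bv, tv))
        for bv, tv in zip(basis, target)
    ]


# One hypothetical vertex that should rotate 90 degrees around the origin.
basis = [(1.0, 0.0)]   # rest shape, distance 1 from the pivot
target = [(0.0, 1.0)]  # fully "on" shape, also distance 1 from the pivot

# Halfway through the blend the vertex sits on the straight line between them.
half = blend(basis, target, 0.5)[0]
radius_half = math.hypot(*half)  # distance from the pivot at the halfway point
```

At the halfway point the vertex is noticeably closer to the pivot than it is in either shape, which is the kind of in-between collapse you are checking for when you key from shape to shape.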

The upside to shapekey driven facial rigs is that the final shapes will be very accurate, or at least as accurate as your modeling skills allow. Since you are using DAZ figures, there should be enough morphs available to create a complete shapekey driven facial rig.

A downside to a shapekey driven facial rig is that you cannot change the topology of the mesh after you start creating shapekeys for it without possibly breaking the shapekeys. Also, you cannot apply modifiers to a mesh with shapekeys. You’ll need to be certain the mesh will not need topology changes before spending the time to add the shapekeys.

Also, shapekey driven rigs are not reusable. At least, the time invested in creating the shapekeys is not reusable from one character to the next. Each mesh/character will need time invested to create shapekeys specific to that character.

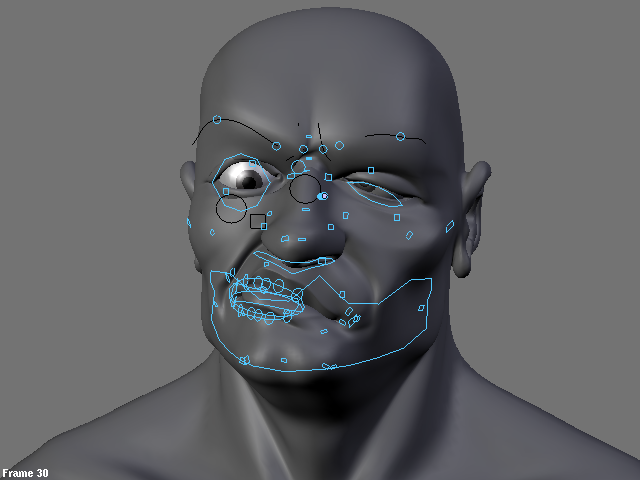

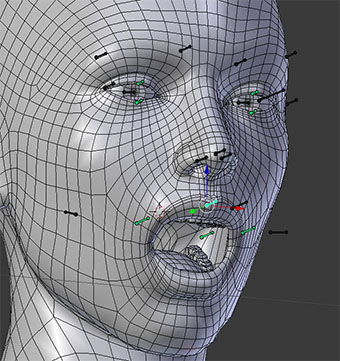

The Pitchipoy facial rig and the one chipmasque linked for his Othello rig are bone driven facial rigs. Bone driven rigs can be good if you ever need to change the topology of the mesh because they rely on bones and vertex weights to work. They can work very well and are often reusable for multiple characters.

One downside of bone driven rigs is that they can be very complex. The standard Pitchipoy facial rig has 296 bones in it. I did not count the ones from chipmasque’s rig, but I’m betting it’s pretty high up there as well. Bone driven rigs also depend on good weight painting. My experience tells me that is not a skill that most people possess.

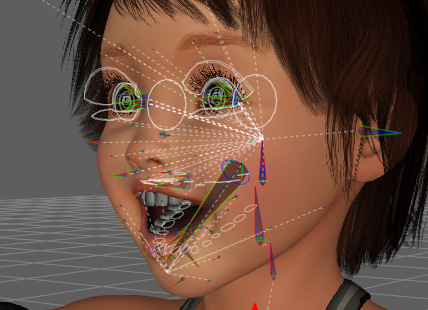

I prefer a combination of shapekeys and bones for my facial rigs. It gives you the best of both worlds. The best example I can give is the Flex Rig by Nathan Vegdahl, Beorn Leonard and CGCookie.com.

http://www.blendswap.com/blends/view/61707

The jaw is based on this: https://www.youtube.com/watch?v=jEQoQ5DzPMI

At the core, there is a pretty simple (if you are comparing it to the Pitchipoy rig, at least) bone driven rig for the jaw, eyes, eyelids and nose. Then shapekeys and controls were added on top to give finer control over each area. The results are very good. Also, the number of shapekeys needed is vastly reduced because most of the vertices are being moved by bones.

Transformation constraints! My second favorite constraint. Action Constraints are still number one in my book.

Anyhoo…I’m just rambling.

I hope some of this helps you, SkpFX.