@eye208

I’m no coder and I’d like to know more about your example #3. How can this be achieved? Can you explain it a bit further?

tia

Regarding AO and noise, Marco GZT posted a very interesting material-based approach:

Some Questions:

Why is BI faster than Cycles at rendering non-GI scenes?

Can this method be ported to Cycles? For example, render the direct pass in a BI kind of way, then just sample the GI bounces over that?

Could, and will, Cycles get different GI algorithms in the future?

I have this weird idea for an approximate, noise-free indirect light algorithm. I am not a programmer and I have no idea how things work, but here’s my train of thought:

Use Cycles ray tracing to calculate the indirect light colour only on a sparse set of pixels with gaps between them, then interpolate the colour between the calculated pixels, using sharp shaded edges and projected mesh edges as boundary guides. Then multiply this approximate GI result over the direct pass. Would that work?

-

Cycles is a raytracer; BI is a rasterizer at its core. That means it draws triangles on screen and interpolates across their surfaces. Until the triangles become smaller than the pixels, this is faster than shooting a ray into the scene per sample.

-

Cycles is plenty fast at rendering direct lighting, so there’s no point. It wouldn’t speed anything up, but it would add significant complexity.

-

Brecht might add light caching of some kind at some point, but I wouldn’t hold my breath.

If I understood correctly, your idea is similar to irradiance caching. It’s an option, but it would fit better in a rasterizer like BI.

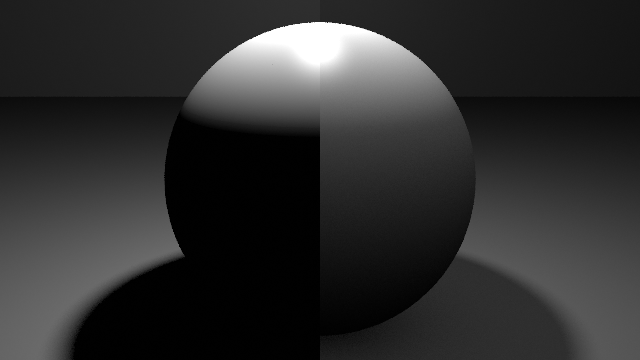

Example #3 uses shader-based fake GI. The diffuse shader is a modified Lambert that “wraps around” objects to illuminate the backsides which would otherwise be pitch black under direct lighting conditions. For comparison, the following scene uses the default Lambert on the left and the modified one on the right. Rendered in Cycles without diffuse and glossy bounces:

The same scene rendered in BI:

Note that BI lamps use a very different distance falloff curve. Other than that, the overall look is pretty much the same, and so is the render time. Without raytraced indirect lighting, the only noise you’re dealing with is from raytraced AO and soft shadows per lamp. Branched path tracing allows you to control their sample depths separately, just like in BI.

Here’s the shader as a node group for both BI and Cycles:

The “Prev” and “Next” sockets allow multiple group instances to be chained. You’ll need one instance per lamp.
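For those who asked how the "wrap around" works: here is a rough Python sketch of the general idea, not the actual node group. The formula `(N·L + wrap) / (1 + wrap)` is a commonly used wrap-lighting term and is my assumption, as are the function names and the `wrap` parameter. Normals and lamp directions are assumed to be unit vectors.

```python
def lambert(n_dot_l):
    """Standard Lambert term: zero on surfaces facing away from the lamp."""
    return max(0.0, n_dot_l)


def wrapped_lambert(n_dot_l, wrap=0.5):
    """Wrapped diffuse term (assumed formula, not taken from the node group).

    wrap=0 reduces to plain Lambert; wrap=1 keeps some light on the surface
    all the way around to the point facing directly away from the lamp.
    """
    return max(0.0, (n_dot_l + wrap) / (1.0 + wrap))


def shade(normal, lamp_dirs, wrap=0.5):
    """Sum the wrapped term over all lamps, similar to chaining one group per lamp."""
    total = 0.0
    for lamp_dir in lamp_dirs:
        n_dot_l = sum(a * b for a, b in zip(normal, lamp_dir))
        total += wrapped_lambert(n_dot_l, wrap)
    return total
```

A point just past the terminator (N·L = -0.2) is black with plain Lambert but still receives some light with the wrapped version, which is what fills in the backsides without any bounce light.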

Uhm… you should probably try to learn the difference between a rasteriser and a raytracer.

BI is at heart a rasteriser. Rasterisers, roughly put, draw every triangle in a scene, starting with the triangle furthest from the camera and ending with the closest one. It is “unaffected” by resolution, but affected by polycount.

Cycles is a raytracer, which checks every pixel and tries to calculate how the light travelled from a light source to that pixel on the screen. It is “unaffected” by polycount, but very much affected by resolution and samples (how often a pixel is checked).

Drawing triangles is computationally much cheaper than checking every pixel. Hence BI being so much faster.

Video cards with their GPUs are really, really good at drawing triangles, hence most games use rasteriser technology as well. Some game engines use a combination of both techniques, often a bit of raytracing over the rasterising.

However, implementing such raytracing methods in BI didn’t go very smoothly, because rasterisers use all sorts of cheats to get things working, and raytracing conflicted with that. On top of that, raytracing in BI was very slow.

BI is a little difficult to understand because you need to know what kinds of cheats it uses and how they affect each other, but the effort is repaid in efficient rendering. Cycles is a little easier to understand because the method is more all-encompassing, but it’s also much more brute force, which leads to slower rendering.

Yes, that would work.

It’s pretty much what mental ray did for 25 years, where the interpolation algorithm commonly used is from 1988:

http://radsite.lbl.gov/radiance/papers/sg88/paper.html

If I understand that correctly, it’s also what was initially intended to be implemented in BI before the decision was made to pursue Cycles instead.
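To make the "sample sparsely, then interpolate" idea concrete, here is a very simplified Python sketch. It is not the algorithm from the linked paper (which places and weights samples adaptively on surfaces); it only evaluates an expensive function on a regular pixel grid and fills the gaps with bilinear interpolation. The edge guides from the original question are left out, and `trace_indirect` is a hypothetical stand-in for whatever computes indirect light at a pixel.

```python
from bisect import bisect_right


def grid_coords(size, step):
    """Every `step`-th coordinate, always including the last pixel."""
    coords = list(range(0, size, step))
    if coords[-1] != size - 1:
        coords.append(size - 1)
    return coords


def bracket(coords, v):
    """Return the two grid coordinates around v and the blend factor between them."""
    i = min(bisect_right(coords, v), len(coords) - 1)
    lo, hi = coords[max(i - 1, 0)], coords[i]
    t = 0.0 if hi == lo else (v - lo) / (hi - lo)
    return lo, hi, t


def approximate_indirect(width, height, step, trace_indirect):
    """Trace only the grid pixels, then bilinearly interpolate all others."""
    xs, ys = grid_coords(width, step), grid_coords(height, step)
    samples = {(x, y): trace_indirect(x, y) for x in xs for y in ys}
    image = []
    for y in range(height):
        y0, y1, ty = bracket(ys, y)
        row = []
        for x in range(width):
            x0, x1, tx = bracket(xs, x)
            top = samples[(x0, y0)] * (1 - tx) + samples[(x1, y0)] * tx
            bottom = samples[(x0, y1)] * (1 - tx) + samples[(x1, y1)] * tx
            row.append(top * (1 - ty) + bottom * ty)
        image.append(row)
    return image, len(samples)
```

With a 9x9 image and `step=4`, only 9 of the 81 pixels are actually traced. The result is smooth rather than noisy, but any indirect detail smaller than the grid spacing is lost, which is why real implementations need extra rules about where samples go.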

I do understand that BI and Cycles use two completely different methods of rendering. What I am thinking of - why not make a hybrid?

Some game engines use a combination of both techniques, often a bit of raytracing over the rasterising.

If real-time games can do it, why would it be impossible for Cycles?

Games use rasterized graphics in real time. Modern real-time shaders don’t look any worse than BI.

Rasterized non-GI clay renders are far faster, noiseless, and look identical to raytraced non-GI clay renders.

Cycles could be sped up like this:

Rasterize the direct pass on the GPU instead of raytracing it (it should take about 1/60 of a second, as games demonstrate).

Now Cycles kicks in with raytracing, rendering only the raytraced indirect pass.

Multiply the raytraced indirect pass over the rasterized direct pass.

And there ya go: a similar but faster result. Not sure how much faster, though.

Some non-GI renders take a long time to clear up in Cycles because of environment lighting and soft shadows. If they were rendered on the GPU like game graphics, they should look similar but be almost instant.

Could it work in real time in the render preview? I think it could. Just draw the direct pass, and then let Cycles kick in, rendering and multiplying the indirect light samples over that.

I think Cycles should have the possibility to fake or approximate as many things as possible.

Often speed is far more important than accuracy.

Hey guys, I see there is a big discussion going on here! Glad that my post brought in people who know how to further optimize rendering with Cycles. As I was saying, I’m a RenderMan artist… that means lots of RSL programming at work. Lately I started to learn OSL… and it is so similar to RSL, though I find OSL kind of limited because there is no easy way to design custom BRDFs, access lights and fake heavy BRDFs. But still, OSL is a great thing. I was reading the OSL specification and I understood that it is quite easy to make a custom, production- and speed-friendly IBL occlusion (fake realistic GI, if you will) with OSL. I’ve started to roughly prototype the idea… but then I ran into a problem: my Blender 2.7 crashes every time I enable the OSL feature and try to render. Can someone please help me with that? I want an IBL occlusion solution so bad!


Blender shouldn’t crash under any circumstances. File a bug report and provide a sample scene with the shader that causes the crash.

If you’re using the 2.70 release, it’s worth trying the nightlies; perhaps your problem is already fixed.

Hi Piotr! Thanks for your reply. The thing is that I’m not even using any custom shader. Blender just crashes when I tick OSL and try to render the startup scene. I installed version 2.7a but the problem is still there. My setup is Win7 64-bit, 16 GB RAM, Quadro K4000.

You do realise that many of the effects seen in games are pre-computed, baked into textures, or faked in other ways that aren’t really suited to 3D work in the same way, or that make the assets take a lot longer to create?


I don’t really understand you.

Yes, a lot of things are baked, faked and what not, but for render engines (like BI, for example), things also get faked and baked… So what’s your point?

He’s saying that a lot of the performance of real time renderers stems from the shortcuts they take. Dropping a 4 million poly mesh with complex shaders into a real time engine isn’t going to automatically result in 60 fps rendering.

Of course. The same logic goes for any renderer.

I would never use a 4 million polygon mesh for my renders, unless it’s grass, hair, something like that.

When it comes to such high-polygon meshes, raytracers like Cycles come in handy. I think they are faster than rasterizers for that, right? Which is why rasterization should be an option, not a replacement…

To substantially speed up rendering, you have to take shortcuts on indirect lighting, because that is the primary source of noise and the reason why Cycles is slow. Without light bouncing, Cycles is almost as fast as BI.

And BI is far slower than Unreal Engine 4, but doesn’t look any better…

Completely different architectures. A Ferrari is faster than a lawnmower, but they accomplish different tasks.

To see how quickly Blender renders using “real time” techniques, try the OpenGL render mode. Set up all your materials to use GLSL shading and set the viewport mode to only render. It’s still not that fast. It has to write images not to the screen buffer in video RAM, but to an actual file on a hard drive. There are dozens of reasons why a modern real-time engine would look better than a BI render. Most of them have to do with the artist, not the engine. It also might have something to do with the millions and millions of dollars that companies spend developing those engines.

So Freemind, please consider all of the reasons why Blender doesn’t render at 60 fps. Actually consider them, don’t just say “why not?”. Ask yourself first before asking the entire internet. There are real reasons for all of the things that you ask, and if you took the time to consider them, you’d look a little less ignorant.

If you use Cycles for indirect lighting as suggested, it doesn’t matter how fast your direct lighting pass is. Indirect lighting already consumes 99% of a frame’s render time to eliminate noise. Your suggested hybrid approach to speed up the direct pass would increase the renderer’s complexity without solving the actual problem.

You’ll see a measurable speed increase only if you fake the global illumination and skip the indirect lighting pass altogether.
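A quick back-of-the-envelope check of that argument, using Amdahl's law. The 99% / 1% split is the rough figure quoted above, not a measurement, and the function name and the 1000x factor are just mine for illustration.

```python
def overall_speedup(fraction_accelerated, factor):
    """Amdahl's law: total speedup when only part of the work gets faster.

    fraction_accelerated: share of the original render time that is sped up.
    factor: how many times faster that share becomes.
    """
    remaining = 1.0 - fraction_accelerated
    return 1.0 / (remaining + fraction_accelerated / factor)


# Direct pass is about 1% of the frame time; make it 1000x faster by rasterizing.
hybrid = overall_speedup(0.01, 1000.0)

# Indirect pass is about 99% of the frame time; drop it entirely (fake the GI).
fake_gi = overall_speedup(0.99, float("inf"))
```

Under those assumed numbers the hybrid comes out at roughly 1.01x overall, however fast the rasterized direct pass is, while skipping the indirect pass comes out at about 100x.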