How do I get the pixel coordinates of an object’s location after rendering? I have an object, and in front of it a glass material with some refractive index. So while rendering, the apparent position of the object changes due to refraction. I want the position of the object center as seen in the rendered image.

What is rendered is only a 2D image, not a blend file!

What do you want to do with this location in the image?

happy bl

I want to do some calculations relating the 3D location of an object’s center to the pixel coordinates of that same center in the rendered 2D image.

Right-clicking on the rendered image shows the pixel coordinates in the bottom line. I have no idea, though, how to find the corresponding position in the viewport camera view; perhaps with the help of an add-on (I know one exists) aimed at getting precise measurements of the render border region.

Hi and welcome to BA!

If you are talking about Python scripting to go from a 3D vertex to 2D, I am currently having the exact same problem and haven’t found a solution yet. With no refraction it’s very easy (using the object’s world matrix and the vertex coordinates). When there is refraction… that is a very good question!
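For the no-refraction case, here is a minimal sketch of the projection in plain Python. It assumes an ideal pinhole camera looking down its local -Z axis with +Y up (the convention Blender cameras use), square pixels, the sensor width mapped to the image width, and a point already transformed into camera space. The name `project_to_pixel` and its parameters are made up for illustration; inside Blender, `bpy_extras.object_utils.world_to_camera_view(scene, camera, coord)` does the equivalent job and returns normalized coordinates.

```python
def project_to_pixel(point_cam, focal_mm, sensor_width_mm, res_x, res_y):
    """Project a camera-space point (x, y, z) to pixel coordinates.

    Assumptions (not from the thread): ideal pinhole camera looking
    down -Z with +Y up, square pixels, sensor width fitted to the
    image width. Returns (px, py) with the origin at the top-left.
    """
    x, y, z = point_cam
    if z >= 0:
        raise ValueError("point is behind the camera")
    depth = -z
    # Focal length expressed in pixel units
    f_px = focal_mm / sensor_width_mm * res_x
    px = res_x / 2 + f_px * x / depth
    py = res_y / 2 - f_px * y / depth  # image Y grows downward
    return px, py


# A point on the optical axis lands in the image center.
center = project_to_pixel((0.0, 0.0, -5.0), 50.0, 36.0, 1920, 1080)
```

This only covers straight rays; once glass sits between the camera and the object, the result is the unrefracted position, not the one seen in the render.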

Well, I use renders done with Blender as much more than “only” 2D images! All the passes (material, object index, depth, etc.) can be very useful in a LOT of cases.

A solution could be to do a first render with a refractive index of 1.0 (no bending of the rays), where the 2D position can be computed. Then do the “normal” render with refraction, and use an optical flow algorithm to track the pixel movement between the two renders and recover the final 2D position.

I’ve been having the same issue trying to find the distorted/refracted pixel coordinates from the camera POV. I just asked stackexchange here https://blender.stackexchange.com/questions/228281/finding-image-coordinates-of-rendered-vertex-after-refraction-distortion-etc. Did you ever find a solution for this?

I have looked at your stackexchange link. TBH I haven’t played with such 3D-to-2D code yet. However, refraction is a shader calculation in a closure system. This means you don’t have direct access to the shader data, except for the shader inputs in the UI of course.

Refraction is an optical effect, as you know, which you could calculate from the camera view direction, the object normal, the thickness of the mesh in question, and the IOR of the mesh/material.

You of course need the equation for refraction to get the “optically bent” effect projected onto the view.

This seems to be the missing link in your 3D-to-2D math.

Don’t ask me how, it’s just an idea to think about.

Edit: if you want to get the bent red ball result from stackexchange, I guess it’s not possible because, as said, it’s all done in the closure shader calculation with refraction and reflection, which includes all objects and materials in the scene.

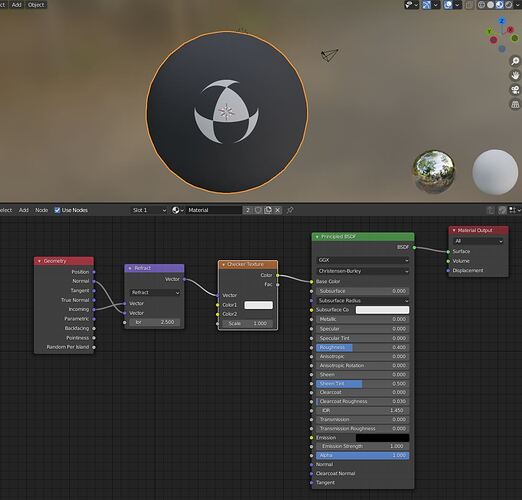

I think there is a refract mode in vector math node. I’ve never toyed with it though, can’t even remember if it has an IOR setting.

In theory you can calculate everything. But how can you reconstruct a red ball with all its own material through a refractive glass, for example? This is the task of the shader during the rendering process.

The math of refraction on its own is quite simple.
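As a rough illustration of that math, here is Snell’s law in vector form as a small plain-Python sketch. The function name `refract` and the argument convention (unit incident direction pointing toward the surface, unit normal pointing back toward the incoming side, `eta` as the ratio of the IOR on the incoming side to the IOR on the outgoing side) follow the GLSL `refract` built-in; I am assuming, not asserting, that the Vector Math node uses the same convention.

```python
import math


def refract(incident, normal, eta):
    """Refract a unit direction at a surface using Snell's law.

    incident: unit vector pointing toward the surface.
    normal:   unit surface normal pointing toward the incoming side.
    eta:      n_incoming / n_outgoing (e.g. 1.0 / 1.5 for air to glass).
    Returns the refracted unit vector, or None on total internal reflection.
    """
    cos_i = -sum(i * n for i, n in zip(incident, normal))
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None  # total internal reflection, no transmitted ray
    factor = eta * cos_i - math.sqrt(k)
    return tuple(eta * i + factor * n for i, n in zip(incident, normal))


# Ray hitting a glass surface (IOR 1.5) from air at 45 degrees.
angle = math.radians(45.0)
bent = refract((math.sin(angle), 0.0, -math.cos(angle)), (0.0, 0.0, 1.0), 1.0 / 1.5)
```

This handles a single interface only. A pane of glass has two of them (entering and leaving), and a render also mixes in reflections and everything else in the scene.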

Another way would be to calculate the physical rays using a Python script!

But it is a bit tedious and would take a long time to do all the pixels on the object plus the lens effect.

Still not certain why it is needed and for what!

Even if you can get these pixels, what do you do with them then?

happy bl

I was hoping to find a way to get the rendered locations with arbitrary distortions. I’m building a tool for other people to construct their own scenes, so I’m hoping to make it as flexible as possible. But it looks like I might have to do it manually, calculating refraction using IOR, glass thickness, etc.
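For the manual route, the simplest case has a closed form: a flat, parallel-sided pane of glass surrounded by air shifts a ray sideways without changing its direction. The sketch below computes that lateral shift from the thickness, the IOR and the angle of incidence. The function name `slab_lateral_shift` is made up, and the flat-pane-in-air setup is an assumption; curved or wedge-shaped glass would need actual ray tracing.

```python
import math


def slab_lateral_shift(thickness, ior, incidence_deg):
    """Sideways shift of a ray passing through a flat glass pane in air.

    thickness:     pane thickness (same unit as the returned shift).
    ior:           refractive index of the glass (surroundings assumed 1.0).
    incidence_deg: angle between the ray and the pane normal, in degrees.
    The shift is measured perpendicular to the ray direction.
    """
    theta = math.radians(incidence_deg)
    sin_t = math.sin(theta)
    cos_t = math.cos(theta)
    # From Snell's law: d = t * sin(a - b) / cos(b), with sin(b) = sin(a) / ior
    return thickness * sin_t * (1.0 - cos_t / math.sqrt(ior * ior - sin_t * sin_t))


# 0.1 unit thick pane, IOR 1.5, ray hitting it at 45 degrees.
shift = slab_lateral_shift(0.1, 1.5, 45.0)
```

The shift is in scene units along the ray’s perpendicular, so it still has to be projected through the camera to become a pixel offset.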

Maybe there’s a more general way, given the compositor nodes and the material nodes of objects obscuring the target object from the camera, to do this?

E.g. would there be a way to find all the 3D coordinate “cells” that map to a certain pixel? That’s ultimately what I need.
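Without any refraction, the set of 3D points that map to one pixel is simply a ray leaving the camera. A minimal sketch of that inverse mapping in plain Python follows, under the same kind of assumptions as a basic pinhole model: camera looking down -Z with +Y up, square pixels, sensor width fitted to the image width, pixel origin at the top-left. The name `pixel_to_ray` and its parameters are hypothetical.

```python
import math


def pixel_to_ray(px, py, focal_mm, sensor_width_mm, res_x, res_y):
    """Return the unit direction, in camera space, of the ray through a pixel.

    Every point camera_origin + t * direction (t > 0) projects to (px, py)
    as long as nothing bends the ray on the way.
    """
    # Focal length expressed in pixel units
    f_px = focal_mm / sensor_width_mm * res_x
    x = (px - res_x / 2) / f_px
    y = -(py - res_y / 2) / f_px  # image Y grows downward, camera Y grows upward
    length = math.sqrt(x * x + y * y + 1.0)
    return (x / length, y / length, -1.0 / length)


# The center pixel looks straight down the optical axis.
direction = pixel_to_ray(960, 540, 50.0, 36.0, 1920, 1080)
```

With glass in the way, the ray would have to be bent at each surface it crosses, so the “cells” behind a pixel lie along a broken line rather than a straight one.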

Fair enough. Why not use a random node setup based on location, material, object index or whatever works for you, and maybe use a Map Range node to remap the random values to different IOR values for your refraction variations?

It’s probably simple, and I did check and it does indeed have an IOR setting. I have just never conjured up a practical thing where I needed these (refract, reflect, project, face forward - all those new ones). Would love to see some examples where these would be absolutely needed to get the job done.

Yes, I have seen this too. Maybe the first vector is for the camera view, then the surface normal, and the output is the refracted vector?

https://docs.blender.org/manual/en/latest/render/shader_nodes/converter/vector_math.html

I made a quick test. I just put the view vector and the object normal vector into the node.

Hmm… Interesting… I’ve been trying to use them to calculate/modify normals in shaders.

This is pretty much like very old-school map lookups (can also use texture coord/reflection) on environment maps, but you also get “refraction”, allowing fake glass.

The use-case for that approach I think is nonexistent today though.