Holy fu- Retarget animation!!!

Hello everyone,

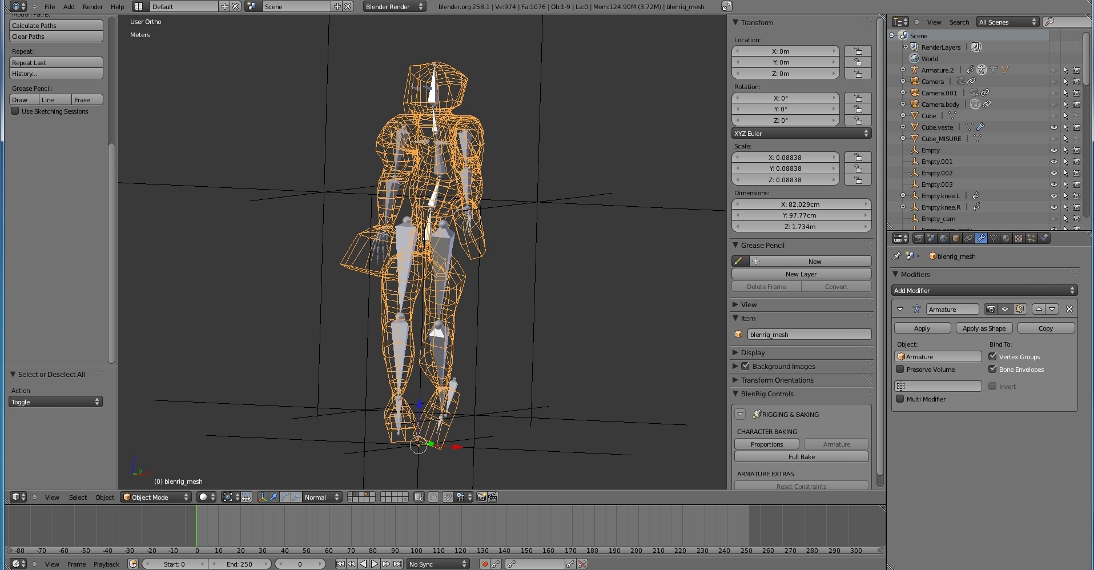

A tutorial / demo showcasing the functionality already coded has been created and is available here: http://www.youtube.com/watch?v=qVCaiFX1Mkw

I’m looking forward to any and all feedback!

@Richard: You’re correct, my inspiration for this project has been the features in Motion Builder and other commercial software. Regarding the “library” of motions, it remains to be seen at what level I will be able to implement this, as it lies outside my original proposal. I can promise you this, however: the code will “remember” the mappings you’ve done, so you’ll be able to retarget further animations from the same source quickly and store them as Actions within Blender. Also, it will not be difficult to write a quick Python “batch” script to do this for you, provided the mapping already exists.

Benjy

Thanks Benjy.

Yeah that is looking real good so far. I am wondering how/if this will be able to be used with Rigify or any other rig for that matter with a more complex control rig built in.

I am seeing the groundwork for a great system so far. One thing I was wondering is if you were going to try and implement any other tools similar to human IK where you can pull on a limb and it solves down the entire rig. This is especially useful after you have re-targeted and you need to make contact point adjustments for a character who is a different size.

Hi everyone!

A new, in-depth tutorial/overview is now online!

Feedback, as always, is more than welcome!

Very interesting indeed… Great video Benjy!

Great video. This is coming along nicely!

Is improving motion paths within the scope of this project?

Looks great! When linking the bones, would it be possible to click on the actual bones in the 3D window, as a faster workflow alternative to the comboboxes?

Hi Benjy

I tested Blender’s Pepper branch r38331 (64-bit Ubuntu build by Fish). I must say that I’m impressed with your progress. Here are some comments.

There is no button labelled Load mapping in the version I tested.

It would be nice if Blender could rescale the BVH rig automatically. In MH I based the scale on the left thigh, for two reasons. 1. Pretty much every rig does have a well-defined thigh. 2. Leg size is important to get walk cycles right.
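A minimal sketch of the thigh-based scaling idea, in plain Python so it runs outside Blender. The function names and the head/tail coordinates are made up for illustration; this is not the MH code or anything in Pepper.

```python
import math

def bone_length(head, tail):
    """Euclidean distance between a bone's head and tail (3D tuples)."""
    return math.dist(head, tail)

def thigh_scale_factor(source_thigh, target_thigh):
    """Factor by which to scale the BVH rig so its thigh matches the target's.

    Each argument is a (head, tail) pair of 3D coordinates.
    """
    src_len = bone_length(*source_thigh)
    tgt_len = bone_length(*target_thigh)
    if src_len == 0:
        raise ValueError("source thigh has zero length")
    return tgt_len / src_len

# Hypothetical example: a BVH thigh 40 units long, a target thigh 0.5 units long.
bvh_thigh = ((0.0, 0.0, 0.0), (0.0, 0.0, -40.0))
rig_thigh = ((0.0, 0.0, 0.0), (0.0, 0.0, -0.5))
scale = thigh_scale_factor(bvh_thigh, rig_thigh)
print(scale)
```

The resulting factor would be applied uniformly to the BVH armature before retargeting, so that stride length roughly matches the target's legs.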

The BVH files come from ACCAD, and the target rig is Rigify, both the metarig (available as Add armature > Advanced human when Rigify is enabled) and the generated rig. I used Rigify both because it is readily available and because it is not in T-pose.

ACCAD female to Rigify:

Metarig: Some problems with arms, probably because metarig is not in T-pose.

Generated rig:

Did not get translation part for some reason. Hips was retargeted to torso (not hips as the video shows), and the toes were marked.

Deform arms and legs are broken apart. Not sure if this is a problem with retargeting or with rigify.

I also tried to replace foot.L with foot_ik.L in the mapping list. The IK chain was not detected.

ACCAD male to Rigify:

Retargeting not perfect, but surprisingly good considering the ACCAD male’s weird rest pose. Much better than I managed to do, anyway.

Hi again.

Next I tried to retarget the cartwheel animation from ACCAD to the Sintel Lite rig. The idea was to redo the animations at http://vimeo.com/20651164 with Pepper. Unfortunately, I did not get that far, because retargeting failed with the following error message:

    Traceback (most recent call last):
      File "/home/thomas/apps/pepper/2.58/scripts/startup/ui_mocap.py", line 303, in execute
        retarget.totalRetarget(performer_obj, enduser_obj, scene, s_frame, e_frame)
      File "/home/thomas/apps/pepper/2.58/scripts/modules/retarget.py", line 366, in totalRetarget
        stride_bone = copyTranslation(performer_obj, enduser_obj, feetBones, root, s_frame, e_frame, scene, enduser_obj_mat)
      File "/home/thomas/apps/pepper/2.58/scripts/modules/retarget.py", line 195, in copyTranslation
        perfRoot = end_bones[root].bone.reverseMap[0].name
    IndexError: bpy_prop_collection[index]: index 0 out of range, size 0
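The IndexError suggests that the root bone's reverse mapping was empty when it was indexed. A check before retargeting could turn that into a readable message. This is only a sketch: `reverse_map` is a plain dict standing in for the per-bone `reverseMap` collections, and `check_root_mapping` is a hypothetical helper, not part of retarget.py.

```python
def check_root_mapping(root, reverse_map):
    """Return the source bone mapped to the target root, or fail with a clear message.

    reverse_map: dict of target bone name -> list of source bone names
    (an assumed stand-in for the real mapping data).
    """
    sources = reverse_map.get(root, [])
    if not sources:
        raise ValueError(
            f"Target root bone '{root}' has no source bone mapped to it; "
            "map it before retargeting."
        )
    return sources[0]

# Hypothetical mapping in which the root was left unmapped.
mapping = {"torso": [], "thigh.L": ["LeftUpLeg"]}
try:
    check_root_mapping("torso", mapping)
except ValueError as err:
    print(err)
```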

The blend file, just prior to pressing the Retarget! button, can be downloaded from http://hotfile.com/dl/124213970/c637453/sintel-accad.rar.html. Perhaps I made some mistake with the mapping.

Actually, I tried to do this twice. The first time Blender crashed with a segmentation fault, but on the second attempt Blender survived and Sintel was animated. Alas, the resemblance to the original action is not so good. Ouch!

A general observation is that retargeting takes quite a lot of time; with the MH tool the time grows at least quadratically with the length of the animation, and Pepper is at least as bad. It would be nice if some feedback were printed in the console window, to show that something is happening. E.g., one could print something every 200 frames, or for every bone.
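Something along these lines would be enough for the console feedback. It is a sketch only: `retarget_with_progress` and the `process_frame` callback are hypothetical names, and the real per-frame work is left out.

```python
def retarget_with_progress(s_frame, e_frame, process_frame, report_every=200, log=print):
    """Call process_frame for each frame and report progress at intervals."""
    total = e_frame - s_frame + 1
    for done, frame in enumerate(range(s_frame, e_frame + 1), start=1):
        process_frame(frame)
        # Report every `report_every` frames, and once more at the very end.
        if done % report_every == 0 or done == total:
            log(f"Retargeted {done}/{total} frames")

# Usage with a do-nothing callback standing in for the per-frame retargeting.
retarget_with_progress(1, 450, lambda frame: None)
```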

One way to dramatically speed up retargeting is subsampling (at least I call it that). Just keep every nth frame and ignore the rest. Subsampling is especially useful for CMU data, which were filmed at 120 fps. To play it back at 24 or 25 fps, you need to subsample with n = 5, otherwise the clip will be in slow motion. But subsampling combined with a rescaling of the F-curves is also useful to speed up the workflow if you want a rough idea of how the animation will look.
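To make the arithmetic concrete, here is a small sketch of subsampling with frame renumbering (the "rescaling" part). Both function names are made up for the example; a real implementation would operate on keyframes rather than bare frame numbers.

```python
def subsample_step(capture_fps, playback_fps):
    """Nearest whole step n so that keeping every nth frame plays at about real speed."""
    return max(1, round(capture_fps / playback_fps))

def subsample_frames(s_frame, e_frame, n):
    """Keep every nth source frame and renumber the kept frames consecutively.

    Returns a list of (new_frame, source_frame) pairs.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    kept = range(s_frame, e_frame + 1, n)
    return [(s_frame + i, src) for i, src in enumerate(kept)]

n = subsample_step(120, 24)          # 120 fps capture, 24 fps playback
pairs = subsample_frames(1, 12, n)   # which source frame lands on which new frame
print(n, pairs)
```

For 120 fps data the step is 5 at either 24 or 25 fps, since 120 / 25 = 4.8 rounds up to 5.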

Thank you everyone for the feedback

@Thomas - I committed a number of fixes today that improve retargeting for skeletons with disconnected bones, like Rigify. Sintel Lite still has some issues I’m investigating, and I’m examining the other issues you raised. Thank you very much for the detailed feedback; it REALLY helps me work out the kinks with some of these bugs and complex end user armatures. Thanks also for the ideas regarding auto-scale and sampling/preview retarget. I’ve started working on them now.

Hi Benjy. Here are some more comments on Sintel Lite.

1. Sintel is a mixture of bones with different rotation modes, both quaternion and Euler angles, and in different orders.

2. I noticed that all bones in the target rig acquire F-curves, including the original (ORG), mechanism (MCH) and deform (DEF) bones, as well as the fingers. This is very expensive. Only the control bones should have F-curves, whether the rig is animated by hand or by mocap. Some bones also had both quaternion and Euler F-curves, but that may be because the retargeting crashed.

3. Sintel has a quite complicated notion of parenting. Consider e.g. the parenting chain from the upper arm to the spine. In the BVH rig, things are nice and simple:

- LeftArm has parent LeftShoulder

- LeftShoulder has parent Spine1

In Sintel, the chain is much more complicated:

- upper_arm.L has parent MCH-upper_arm.L_hinge

- MCH-upper_arm.L_hinge has Copy Rotation constraint to ORG-DLT-upper_arm.L

- ORG-DLT-upper_arm.L has parent ORG-shoulder.L

- ORG-shoulder.L has Copy Transforms constraint to shoulder.L

- shoulder.L has parent ORG-DLT-shoulder.L

- ORG-DLT-shoulder.L has parent ORG-neck_base

- ORG-neck_base has parent ORG-spine.04

- ORG-spine.04 has Copy Rotation constraint to spine.04

The trick is to make the script realize that these chains describe the same thing. I solved this by keeping a list of effective parents. Only bones that correspond to BVH bones are animated. If the parent of such a bone is not animated, the effective parent is its grandparent, or the parent of a bone that a suitable Copy constraint is targeted to.
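The effective-parent rule can be sketched in plain Python using the Sintel chain listed above. This is one reading of the rule, not the actual MH code: the three tables are hand-written stand-ins for what a script would read from the armature, and which bones count as animated (mapped to BVH bones) is assumed for the example.

```python
# Direct parents, copied from the Sintel chain above.
PARENT = {
    "upper_arm.L": "MCH-upper_arm.L_hinge",
    "ORG-DLT-upper_arm.L": "ORG-shoulder.L",
    "shoulder.L": "ORG-DLT-shoulder.L",
    "ORG-DLT-shoulder.L": "ORG-neck_base",
    "ORG-neck_base": "ORG-spine.04",
}
# Bones driven by a Copy Rotation / Copy Transforms constraint, and their targets.
COPY_TARGET = {
    "MCH-upper_arm.L_hinge": "ORG-DLT-upper_arm.L",
    "ORG-shoulder.L": "shoulder.L",
    "ORG-spine.04": "spine.04",
}
# Bones that correspond to BVH bones (assumed mapping for this example).
ANIMATED = {"upper_arm.L", "shoulder.L", "spine.04"}

def effective_parent(bone, parent=PARENT, copy_target=COPY_TARGET, animated=ANIMATED):
    """Walk up from `bone` until an animated bone is found.

    At each non-animated bone, follow its Copy constraint if it has one,
    otherwise step to its parent. Returns None if no animated ancestor exists.
    """
    current = parent.get(bone)
    visited = set()
    while current is not None and current not in animated:
        if current in visited:  # guard against constraint/parent cycles
            return None
        visited.add(current)
        current = copy_target.get(current, parent.get(current))
    return current

print(effective_parent("upper_arm.L"))  # shoulder.L
print(effective_parent("shoulder.L"))   # spine.04
```

With these tables the Sintel chain collapses to upper_arm.L, shoulder.L, spine.04, which has the same shape as LeftArm, LeftShoulder, Spine1 in the BVH rig.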

Only animating bones that correspond to BVH bones should also speed things up considerably.

There is of course a limit to what a script can figure out. I have seen shoulder rigs with Copy Rotation constraints with influence 0.5; how do you deal with that? A reasonable goal is to deal with the obvious cases, and discourage riggers from using solutions that do not work well with mocap.

I like the second version, Helicopter Girl!

Hi, I am very interested in these new features because I find animating by hand tedious.

I used the tools in the latest build, and I was able to retarget BVH motion from a motion capture file to my armature, which is based on the simple skeleton enabled by default in Blender 2.54.

But when I applied the armature to the skinned model, I got this strange twist in the joints.

Marcolorentz:

It looks like you have a problem in the hip area, right? I have encountered similar problems, and believe the reason might be something like this: in your rig, the pelvis bone points up, but in the bvh file it points down. So the retargeted armature looks fine, but the mesh is seriously distorted because the pelvis now points down. What happens if you apply reasonable Limit rotation constraints to the thighs?

Benjy:

I had a look at your code, and it made me embarrassed about my own. Clearly you approach the retargeting problem in a much more general fashion than I did, which renders most of my comments above irrelevant.

Thanks for the reply!

The problem was that the axes of each bone were not aligned in the same direction.

After aligning them and redoing the retargeting, I applied the armature and everything was perfect!

Motion capture in blender would be so cool! What kind of hardware do you need and how much does it cost?

Hi Benjy, great work, but I have one question: is it a set/library of functions that can be used on any imported mocap data (bvh,c3d,…), or is it specifically designed for one mocap format? (I know, stupid question.) Thanks for the answer.

This looks very good and is something I’ve been looking for (praying I would not need to implement it myself!). As such, you’ve inspired me to grab a copy of the subversion branch and test it myself on a variety of game characters. I’d grab a precompiled version of the branch, but no-one seems to compile it for OSX. -shrug-

Given that the characters are for a game (goblins, gnomes, etc.) and all use Rigify rigs, I am sure this is going to make the tests interesting (i.e. proportions are not exactly human standard, even if the rig is standard bipedal). Will report on how it goes and show off awesome results if/when they occur.

OK, I’ve built this from scratch three times now using the CMake system, and an examination of the scripts/modules & scripts/startup directories shows that the Python modules are not being copied across. Do I need to do this manually, or is this a sign that something else is going wrong (i.e. that there are native files not being compiled as well)?