Have you contacted Troy_S? He has a lot of experience with this topic too.

@Dinokaizer - thanks for posting the link to your thread. I would love to collaborate with you on taking your mockup concept there and refining it into a more specific finished mock and to-do list… then hacking it out and getting others to help. It’s going to take me some time to digest everything you’ve written there.

Hello, I have wanted the VSE to be awesome ever since I first saw it in action.

What I see as important is:

1. Snapping.
2. Dumb easy proxy workflow, much more explicit in the interface.
3. Pre-rendering and possibly background rendering.
4. A real-time option to play the video with only “easy” processing taking place, leaving complex effects out of the game, without having to disable every single “heavy” feature in the timeline manually.
5. Mute & unmute track, without necessarily adding numbering on the side; maybe using right-click.
6. Right-click is a kind of metaphysical taboo in Blender; it should be used in a more traditional fashion, to give options and tools.
7. An asset repository with folders, previews and custom names. I know it is nearly achievable with the current UI, but it doesn’t give you the necessary flexibility. The idea is to extend the file browser module to accommodate collections, which could also be used for textures and audio, for asset tracking when exporting, who knows.
8. Nodes… I think there may be two approaches. One would be creating a new class in the node editor explicitly for the VSE, which would be the cleaner approach. The other would be to create a custom node with both in and out slots, representing a single effect strip in the VSE. The strips should be listed in a dropdown menu, like the scenes in the Render Layers node. One could then build one’s own loop of nodes or a node group underneath this in/out node, which would abstract all the timing and frame concerns away from the user, who would only care about the node effects. The timing would be determined directly by the effect strip in the timeline.
9. Effect strips should have an extensible influence, with the ability to disable intermediate tracks. Like a flexible piano keyboard that you can roll out, then pull out some keys, roll up again, etc.
10. Make the VSE compatible with EDL or XML scripts and thus grant some basic interoperability with commercial software.
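Item 10 mentions EDL/XML interchange. As a rough illustration of one small building block: EDL formats such as CMX 3600 describe each cut with source and record in/out points written as HH:MM:SS:FF timecode, so an exporter has to turn VSE frame numbers into timecode and back. The sketch below assumes an integer frame rate and non-drop-frame timecode only (drop-frame rates like 29.97 are deliberately out of scope); the function names are hypothetical and not part of Blender’s API.

```python
def frames_to_timecode(frame: int, fps: int) -> str:
    """Convert an absolute frame count to non-drop-frame HH:MM:SS:FF timecode.

    Hypothetical helper; assumes an integer frame rate (24, 25, 30, ...).
    """
    if frame < 0 or fps <= 0:
        raise ValueError("frame must be >= 0 and fps must be > 0")
    total_seconds, ff = divmod(frame, fps)   # whole seconds, leftover frames
    hh, rem = divmod(total_seconds, 3600)    # hours, leftover seconds
    mm, ss = divmod(rem, 60)                 # minutes, seconds
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def timecode_to_frames(tc: str, fps: int) -> int:
    """Inverse of frames_to_timecode for non-drop-frame timecode."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if ff >= fps:
        raise ValueError("frame field exceeds the frame rate")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


if __name__ == "__main__":
    # A strip starting one hour and 25 frames in, at 24 fps.
    print(frames_to_timecode(24 * 3600 + 25, 24))  # 01:00:01:01
    print(timecode_to_frames("01:00:01:01", 24))   # 86425
```

A real exporter would still need the event-list layout itself (reel names, edit types, transitions), which this sketch does not attempt.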

These are the reasons that have stopped me many times from using the VSE for video.

I don’t really think that Blender should mimic any particular NLE on the market, as most of them have a really outdated interface. This is a consequence of the fact that people actually use them to make money, so any innovation is seen as lost time. For me, the only two that are actually looking ahead are Lightworks and Final Cut X; they are in a similar position to where Blender was with the 2.5 release. They have fresh, good concepts behind them. As for me, most of the buzz about differences between Premiere and Final Cut etc. comes down to layout and icons.

P.S. There is an awesome open-source project called slowmoVideo, which is a kind of standalone Twixtor but with a comprehensible IPO-curve-based workflow. It has GPU acceleration and maybe even its own library, so it could be used inside other software. It would be really cool if it worked inside Blender.

I still think that before redesigning the UI or UX (User Experience) of the VSE, we need to define what its uses are. Of course, there are lots of cool features that could be implemented, and there are new ways of visualizing workflow (dynamic snapping etc.).

But what do most Blender users want the VSE to do?

Originally, Ton suggested it as a string-out tool with minor trimming of animation. Thanks to solid storyboarding and pre-production, those aims are met by the rudimentary tool set now available. However, once you introduce less controllable elements like field video and use different story construction techniques (not script-based), you need much more flexibility. How much flexibility do we need, and what tools specifically should we add to meet these aims?

Dangit! I said I would make a detailed post and I didn’t; I am filled with shame, lol

As in the main points of my post: if we want the VSE to become an editor, we need to tackle the issues of functionality and UI, and many people are bringing up very good points.

I will try to explain what I like about FCP as best I can… later, but I will try to explain my idea for handling nodes now, since that is a big part of my problem with the VSE (as well as audio sync and alphas). I don’t know how good an idea this is, or whether it is even doable; feel free to criticize, I literally came up with this in a few minutes:

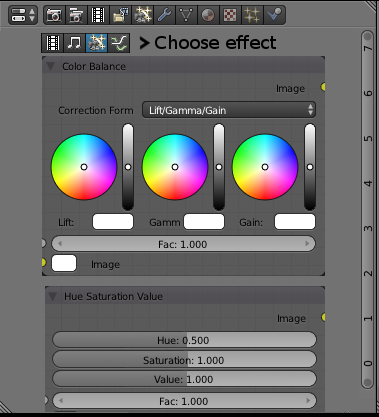

Nodes, as they are, are basically a lot like floating panels. So, to simplify things somewhat, the VSE could have an SFX tab (I promise I will explain the idea behind my mockup later; not sure how much later though ;P) where you select what type of effect you want to do (there is already an add-on for node group presets). Instead of a web of nodes, this SFX tab would show the series of node panels stacked on top of each other in their own group subset (e.g. you make a node group specifically for color correction). You could then adjust the various settings in easily accessible panels (similar to After Effects and Final Cut, where these things are also stacked), and if you need more precise control, the node editor is always there as another option, since the panel uses the data from the node editor and just arranges it in a much more straightforward manner.

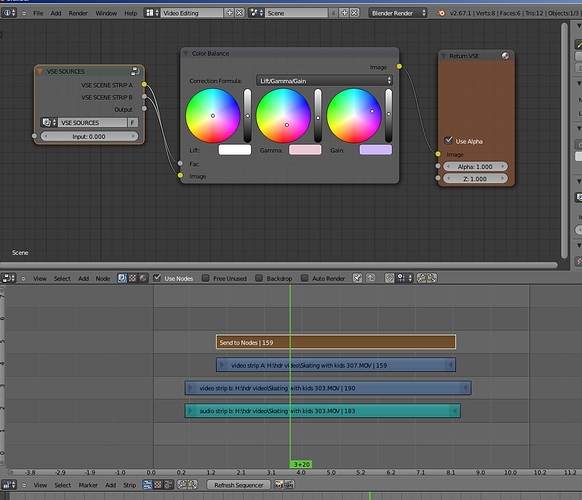

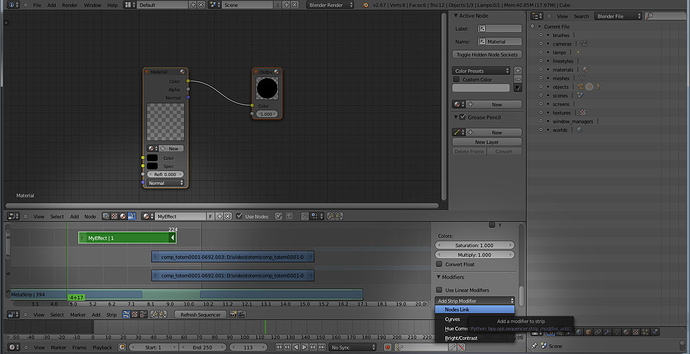

Step 1: make some nodes and pretend they form a group (yes, I know this setup would do nothing; I was in a hurry).

Step 2: the settings for these nodes are now found in a panel, probably under the name of the node group that was created (I didn’t show this in this joke of a mockup).

Step 3: Profit?

Of course, this raises other questions, such as multiple video layers on top of each other: how will alphas be handled in this case? Could videos/image sequences using RGBA simply be placed above a clip and have the alpha work automatically, like in FCP, if such a system were in place? Not sure; this was a quick idea that requires a lot more thought about which functions and features we want/need, as well as how this would even work.

I agree with Canaldin’s proposal on many points and have many ideas of my own, but as 3pointedit pointed out, we need to know what the uses will be and what users want the VSE to do.

That’s one idea; let me know what you think.

Dinokaiser, I think the very point of having access to the nodes is to retain the operation of the compositor UI, with noodles. I get the feeling that your suggestion is a hack similar to the current strip modifiers.

I will get burned by Troy for this, but you could use the VSE to dynamically set the timing of keyframes and the relative positions of media (including animation scenes).

Perhaps you could add a node-router effect strip that promotes the video strips below it into the node space. This would allow another input node that introduces the VSE strips with their timing relative to each other. I’m not sure whether the node space should be in the same scene or in another scene, as it already is. This would be a streamlined version of the current process.

FYI, I am talking to Troy_s about the higher level design side of these issues and the VSE.

I really appreciate the detailed feature concepts and mocks. They help bring ideas from the abstract into something tangible. A couple of points:

@canaldin - your hit list here is very much the same as mine. I’m talking to Troy_S to get educated about some core trade-offs between real-time/interactive editing with proxies/originals and batch processing of high-quality, deep-compositing setups.

@3pointedit - I think there are different VSE users and devs who have different goals. I can’t speak to theirs, only to mine. My focus is the “low-end” user, not the production studio, and making the VSE more accessible to the masses. That said, it seems sensible to create a macro-level design built to achieve final output in high-end pro quality (4K, huge color range, deep compositing), because I think it is easier to scale it “down” than “up”.

As a more tangible use case… I’d like a YouTube publisher to use the VSE to lay incredible, Blender-artist-authored custom 3D titling or transitions into their web video – instead of using canned stuff in off-the-shelf NLEs. Blender has an amazing integrated feature set to do this, but it’s lacking the wire-up to make it “easy” for someone to control and use the pieces directly from the VSE.

@dinokaizer - I’m right with you when you start with direct compositing integration and a “1d vertical node layout”. In fact, we already have something vaguely like this in the vertical materials panel’s “limited” node editing. It’s just confusing and limited. I think it will benefit many areas of Blender to come up with a more suitable and generic “1d vertical node stack layout”, which lets you do more (or all) of what the full 2D node editor does and is practical for simpler, smaller node setups.

My next step is to figure out how to select and name specific “internal node property fields” and expose them onto the node-group’s “external interface”. Then we can build a compositing “macro” which is easily usable from the VSE. Do this same trick for 3d scenes and drivers, and we can “package” a 3d titling sequence into something which a VSE editor can use directly from the VSE without understanding blender 3d.

To bring in the Troy_S discussion about interactivity (if I understand it)… AFAIK, throwing the Blender compositor into the VSE is going to make it impossible to keep the real-time feel of interactively editing anything (such as compositing parameters), hitting play at any time and seeing the output. We’ll have to (as canaldin said) make a “dumb easy proxy workflow”, and even then the user will have to wait for proxies to render before they can “play” and feel the output.

Personally, I would like to approach interactivity the way we do in 3D, with the ability to quickly proxy at low res and, if it’s plausible (which it may not be), “mock” GLSL rendering. This style is already suggested by the current way the VSE works if you “add scene”. This can support interactive editing at mock/proxy resolutions, with a batch render to final res… something which I hope can work for both high- and low-end users.

The VSE can already cache a scene strip, even if it comes from a compositor setup (just play through once). But After Effects is also crippled by the lack of real-time feedback; you have to render a cache there too. Low-res proxies would be neat, though.

Have you seen my titling tutorial?

You can see the drawbacks of using other scenes for 3D asset animation: you are constantly going out to another scene and back to the VSE. Not terribly interactive. It would be cool to drive values in the other scene, but I don’t think the dependencies allow you to work that way.

BTW, I must reiterate that the key value of nodes lies in the many and varied ways to interconnect your assets and tools. I would hate to see the stacking UI (like modifiers or After Effects tracks) in the VSE. Perhaps we could add a property to every strip that allows you to send the strip to a node input, instead of the aggregator “send to nodes” effect strip that I suggested earlier.

I like the minimalistic look of this: https://popcorn.webmaker.org/

The balance should be between ease of use in editing, automation (as in transitions), and playing nicely with the compositor (each video track could be imported into the compositor).

This is an excellent demonstration of the opportunity I’m talking about. Blender and VSE can already do this awesome stuff, it’s just very inaccessible unless you know how to use ALL of blender.

I would like a 3d author to create a reusable version of that title fly-in as a “library effect”. Then I want a VSE-only user, directly inside the VSE, to be able to:

- drop in the “slide-in text title effect”

- scale the strip to control the effect length

- drag-adjust a “time curve” to adjust the speed of the slide-in

- adjust some properties like “title text”, “title material”, “backdrop material” in an easy to use property-sidebar (not 2d node editor)

This provides value to both participants. The VSE-only user, because he doesn’t have to understand blender-3d, blender-nodes, or even art-design, to make something attractive. The 3d-effect author, because he has a consumer who wants what he can create. It could also act as a stepping stone into 3d, as the VSE-only user could try to experiment with small 3d tweaks at any time.

With something like this, I think Blender would offer a capability unrivaled by Premiere/FCP/Vegas: trivially easy creation, sharing, marketplace, and use of web- or TV-quality effects. This could generate meaningful community interaction and bring in new Blender users.

> I would hate to see the stacking UI (like modifiers or After Effects tracks) in the VSE. Perhaps we could add a property to every strip that allows you to send the strip to a node input, instead of the aggregator “send to nodes” effect strip that I suggested earlier.

I’m NOT advocating a stacking-effect MODEL like AfterEffects or modifiers.

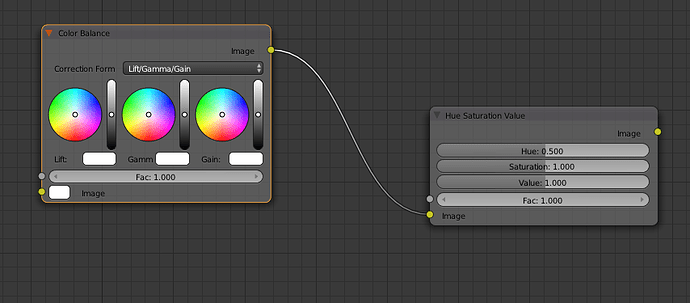

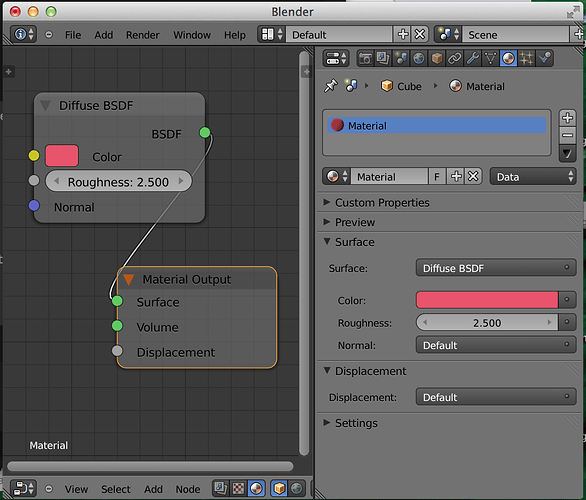

I’m talking about a unified node-model displayed in 1d or 2d, and Blender already has this! The Cycles materials Property panel already has a 1d “alternate visual layout” of simple node setups. See this mockup. These are the exact same data on both visualizations…

My idea was to turn effects into node-groups, and expose the “fields” above onto the collapsed node-group UI panel.

However, this may be thinking too far ahead. I think I could reach the goal above by doing just…

- allow a scene to export a set of simple “config parameters” (like the title-text, title-material, backdrop-material)

- allow those config parameters to be directly editable in the VSE on a scene-strip property panel

- library-browser to browse the exported “effect scenes” stored in blend files

- means to “hide” or “hierarchialize” scenes and their assets, so loading 30 “scene effects” doesn’t pollute your original authored scenes… clicking “edit this” in the VSE should un-hide the scene and assets so you can modify them as desired. (the scene-effect could be linked or appended in either case)
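The first two bullets amount to a small data model: an effect scene declares a handful of named, typed parameters with defaults, and each VSE strip that uses it stores only the values the editor overrides. A minimal sketch in plain Python, assuming hypothetical class names (`EffectParam`, `EffectTemplate`, `EffectInstance`) that do not exist in Blender; how the resolved values would be written back into the scene is left open.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass(frozen=True)
class EffectParam:
    """One parameter an effect scene chooses to expose (hypothetical)."""
    name: str
    default: Any
    kind: type


@dataclass
class EffectTemplate:
    """An authored 'effect scene' plus the parameters it exports (hypothetical)."""
    scene_name: str
    params: Dict[str, EffectParam] = field(default_factory=dict)

    def expose(self, name: str, default: Any) -> None:
        # The parameter's type is inferred from its default value.
        self.params[name] = EffectParam(name, default, type(default))


@dataclass
class EffectInstance:
    """What a VSE strip would store: a template reference and its overrides."""
    template: EffectTemplate
    overrides: Dict[str, Any] = field(default_factory=dict)

    def set(self, name: str, value: Any) -> None:
        param = self.template.params.get(name)
        if param is None:
            raise KeyError(f"{name!r} is not exposed by {self.template.scene_name!r}")
        if not isinstance(value, param.kind):
            raise TypeError(f"{name!r} expects {param.kind.__name__}")
        self.overrides[name] = value

    def resolved(self) -> Dict[str, Any]:
        """Template defaults, replaced by any per-strip overrides."""
        values = {name: p.default for name, p in self.template.params.items()}
        values.update(self.overrides)
        return values
```

For example, a “SlideInTitle” template could expose `title_text` and `slide_frames`; a strip that only sets `title_text` keeps the template’s default slide length. Storing overrides rather than full copies means a fix to the template’s defaults would reach every strip that didn’t change that value, which fits the “linked or appended” option above.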

Then I could figure out the next step, which is related to your “pipe to compositor idea”:

- figure out how to allow “video input sources” for effect-scenes, and how to wire them up to other VSE strips… So you could use an effect-scene to send VSE strips into a 3d and/or compositing setup.

This would enable your use case of compositor setups with VSE-driven strip-line-up – as well as allowing more complex elements like 3d transitions, “swirling video strips”, or whatever people dream up.

Reminds me of effect plugins for FCPX; aren’t they created in Motion? One bunch of users helping another bunch of users.

I’ve never used Motion, but exactly!

AFAIK, Blender 3d offers much higher quality rendering and more control than Motion. And using Blender VSE, you can quickly “edit” your effect-scene within the same tool. Ohh and you can do tracking and masking in the same tool as well. (some quick searches say that users dislike the clunky motion/fcp workflow and feature divisions)

We’re just missing a little wire-up to make this kind of thing easier.

P.S. just looking at Motion for the first time, I kinda like their “constrained 2d object mode”, where it manages the (ortho?) camera, keeps objects on the plane, and lets the user easily work with “layers” instead of thinking about 3d.

Bringing this back to tangible and thinking out-loud, I think we need mockups and/or mini-specs for…

- Better visual strips that show audio/video data (the VSE can show audio waveforms today, but they run over the title and look terrible)
- Snapping (@canaldin - can you explain what you want this to do exactly?)
- Mute & unmute track (@canaldin - is this for audio tracks only, or video too?)
- Linked audio-video tracks
- Viewing/editing “scene-clip parameters” in the VSE
- Creating/exporting “scene parameters” within a scene
- An asset library suitable for an “effects browser”
- Direct VSE control over render resolution and proxy resolution (without using the global 3D properties panel)
- Scene-clip pre-render status UI (to show the progress of rendering scene clips)

Question:

What do folks think about the current “headerless” and “free pan” 2d strips view? Is there a drawback to adding (toggleable) row-headers? Is there some utility to allowing the view to free-pan arbitrarily far away from current clips?

> But what do most Blender users want the VSE to do?

I’ll chime in: make it work like After Effects, yes, stacking strips and all. While node-based systems may be slightly more flexible in certain exotic production situations, everyday tasks are often needed quickly, and setting up nodes can take longer than dropping layers into a comp. (What we have now in the compositor is backwards: I always have to chant “top goes on bottom” in my head to get alphas to work right.)

We should not think of the VSE as a video editor, instead it should be a motion graphics platform that can easily process footage as well.

All the node based effects that we have in the compositor should be able to be applied to any layer in a composition.

The VSE should be the compositor.

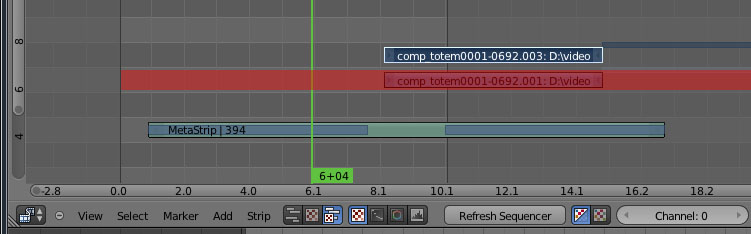

The system should support some kind of pre-composition either via scenes, groups or some other method. The net result should be that a pre-composition should be able to become a layer/bar/strip in any other composition.

We need the ability to draw directly on footage or solid to create masks or strokes.

The existing tracking system should be part of the VSE.

Motion blur should be native to the new VSE. The existing vector blur node in the compositor may be able to be leveraged for this.

Titling is not really important to me; text can easily be imported as an image. There is no need to replicate existing design tools in the VSE when most designers will use their external apps anyway.

Audio should be supported and independent of the FPS of the target. It should also always remain in sync, no ifs, ands or buts. Once again, there is no need to replicate audio effects or editing tools, as that can be done in external apps, just like graphic design.

If there is any way to leverage the GPU for faster playback or rendering, it should be implemented. Multi-threaded CPU support as well.

I like a lot of the suggestions everyone else has posted. I think automatic little features (bells and whistles) should be left off the table till the broad strokes are completed and we have a basic working system.

Real-time playback is not a showstopper for me. As an After Effects, FCP and Premiere user, I am quite comfortable looking at a grainy quarter-resolution version of my footage for real-time playback. On a modest system, After Effects previews quite quickly in my experience (even at half and full resolution). As far as previews go, there should be a way to downgrade the quality to increase the playback rate; all the major systems have this (AE, FCP, Premiere).

Also, I should be able to render a draft-quality version of my final if needed: an OpenGL playblast (with audio sync), or a downgraded resolution as mentioned above.

I think Blender should have something like NukeX (node-based digital compositing software that is scriptable in Python).

This software has been used in the movies Avatar, Tron, and many more.

Because Blender is node-based and scriptable in Python, I think it’s possible to have something like this in Blender.

+1

But as a quick solution I’d be very happy if we could use VSE strips as input in the compositor.

Hmm, I do like nodes for flexibility, but I understand the efficiency that After Effects allows with a linear UI. Perhaps it would be helpful to develop a better effect strip. Like the Uber shader in Cycles, it could have an unlimited number of inputs and effect slots (modifiers). But the real issue here is better-integrated F-curve/dope sheet editors that are more directly linked to the strip you are using. In AE, all the curves are editable in a toggled drop-down inside the strip; in Final Cut Pro 7, they reside in an effect editor window. Both are restricted by their screen real estate.

Again, there seems to be a separation of user expectations, with some wanting canned effects (easier access to basics) while others want to exclude non-core duties. But one person’s core issue is another person’s waste of time. How would the Blender Foundation decide what to include and what to discard?

My new top requirements are:

1- video source manipulation (bins and metadata)

2- linked sound to audio on timeline

3- framerate conform (just like the xy dimensions conform) but good

4- better effect integration (nodes or strips)

5- XML import/export of edit to specialist apps (audio or vision editing)
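Point 3 asks for frame-rate conforming analogous to the existing resolution conform. The simplest version, sketched here under the assumption of plain frame repetition/dropping (no frame blending or optical-flow interpolation of the slowmoVideo kind), maps each output frame to the source frame that is on screen at the same moment in time. Rates are kept as exact fractions so NTSC rates such as 30000/1001 don’t accumulate rounding error; the function names are hypothetical.

```python
import math
from fractions import Fraction
from typing import List


def source_frame_for_output(out_frame: int, src_fps: Fraction, dst_fps: Fraction) -> int:
    """Return the source frame shown at output frame `out_frame`.

    Output frame n starts at time n / dst_fps; the source frame on screen at
    that moment is floor(time * src_fps). Source frames are repeated when
    dst_fps > src_fps and skipped when dst_fps < src_fps.
    """
    if out_frame < 0:
        raise ValueError("out_frame must be non-negative")
    return math.floor(Fraction(out_frame) * src_fps / dst_fps)


def conform(src_length: int, src_fps: Fraction, dst_fps: Fraction) -> List[int]:
    """Source frame index for every output frame, preserving clip duration."""
    # Duration stays src_length / src_fps seconds at either rate.
    dst_length = math.ceil(Fraction(src_length) * dst_fps / src_fps)
    return [source_frame_for_output(n, src_fps, dst_fps) for n in range(dst_length)]
```

For instance, a 4-frame clip at 24 fps conformed to 30 fps plays source frames `[0, 0, 1, 2, 3]`: one frame is shown twice to keep the duration the same. This is the “good enough” baseline; anything smoother would need interpolation.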

We’re not yet at a point to worry about decisions like what to include, what to discard. What we need now is mockups and specs, so myself and other developers can get to the business of coding them up. Feature ideas are great, but they are not implementable without this additional clarity in place.

Trying to get clarity and visuals on each point…

1- Do you have a favorite tool/screenshot you can post about how you like this to work?

2- is a clear request, though we still need UI to unlink/re-link, as well as a better audio-strip+waveform mockup. Thoughts? Whose existing strips do you like best, and why?

3- are you talking here about need for better technology (aka slowmovideo) or a UI feature?

4- what UI do you like best for integrating effect strips and why?

5- what apps? EDL? AAF? what 2-3 workflows are most important?

Hello, first the questions:

- I was talking about snapping between two strips. The snapping targets should be the heads and tails of strips, and markers. To do this, one could introduce a snapping button like in the 3D view, with a dropdown to snap to markers, strips, or eventually BPM and frames. It would also be cool to port the Shift+Tab shortcut for enabling/disabling snapping, for consistency with the 3D view.

- Sometimes you store homogeneous information in the same track, e.g. text overlays. You may need to write titles in different languages, putting each language on its own track, and then mute/unmute a particular track across the entire timeline. I think the best way would be to select a strip on the track you want to mute and fire a shortcut, e.g. Shift+M. A more user-friendly way would be to do it like everyone else: a column on the left with an eye symbol next to each track, as in Premiere, FCP, etc.
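The snapping described in the first answer (snap to strip heads and tails and to markers, with a dropdown choosing which targets are active) boils down to a nearest-target search within a small threshold, applied to both edges of the strip being dragged. A minimal sketch with hypothetical names; it assumes a threshold measured in frames, whereas a real implementation would probably measure it in screen pixels so it scales with the timeline zoom.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class Strip:
    """Hypothetical stand-in for a VSE strip."""
    name: str
    start: int  # head: first frame
    end: int    # tail: frame just after the last frame


def snap_targets(strips: Iterable[Strip], markers: Iterable[int],
                 use_strips: bool = True, use_markers: bool = True) -> List[int]:
    """Collect snap frames; the flags mimic a 'snap to' dropdown.

    `strips` should exclude the strip being dragged, or it would snap to itself.
    """
    targets: List[int] = []
    if use_strips:
        for s in strips:
            targets.extend((s.start, s.end))
    if use_markers:
        targets.extend(markers)
    return sorted(set(targets))


def nearest_target(frame: int, targets: List[int], threshold: int) -> Optional[int]:
    """Closest target within `threshold` frames of `frame`, or None."""
    best: Optional[int] = None
    for t in targets:
        dist = abs(t - frame)
        if dist <= threshold and (best is None or dist < abs(best - frame)):
            best = t
    return best


def snap_move(moving: Strip, new_start: int, targets: List[int], threshold: int) -> int:
    """Snapped start frame for a strip dragged to `new_start`.

    Both the head and the tail are tested; the smaller correction wins.
    """
    length = moving.end - moving.start
    offsets = []
    for edge in (new_start, new_start + length):
        t = nearest_target(edge, targets, threshold)
        if t is not None:
            offsets.append(t - edge)
    if not offsets:
        return new_start  # nothing close enough: no snapping
    return new_start + min(offsets, key=abs)
```

For example, with strips at 0–100 and 150–200 and a marker at 120, dragging a 30-frame strip so it starts at 173 snaps its tail onto frame 200 (start becomes 170), while a drop at 60 stays put. Toggling snapping on and off, as with the proposed Shift+Tab, would simply bypass `snap_move`.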

Seems like there is a stack vs node war going on.

As for me, they are not really comparable. It’s like comparing philosophy to grammar: grammar tells you how to write, while philosophy lets you understand what you are writing for.

I really do appreciate the ease and speed of After Effects, but I hate it whenever you have to do something more complex than a lightsaber YouTube video. I have seen really skilled people doing really cool and complex stuff with it, but it becomes such an incomprehensible mess at some point; if you forget to name the layers, everything becomes a comp of a precomp of a precomp.

Nodes on the other hand follow your stream of thoughts and may be organized in a very clean and comprehensible fashion.

Personally, I think the VSE should combine these approaches: put all of the basic stuff in the modifier stack AND make a new modifier called Node link, or whatever you like. The image, at its current state in the stack, could then be piped into the compositor, processed, piped back into the VSE, and receive all the subsequent modifications from the stack.

From a user’s point of view, there are many cases in which one wouldn’t want to go into nodes, especially for easy edits. One goes into nodes only when one really needs to: when the stack gets messy or when the processing involves branching. This way, new users could mess around with modifiers in the stack, and advanced users would use nodes only when needed, gaining speed and usability.
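The stack-plus-“Node link” proposal can be modelled as an ordered list of image operations in which one entry hands the intermediate image to an external node tree and passes the result on to the rest of the stack. A toy sketch, assuming images are plain lists of grey values and the “node tree” is any Python callable; none of these names correspond to Blender’s actual strip modifier API.

```python
from typing import Callable, List, Sequence

Image = List[float]                    # toy frame: one grey value per pixel, 0..1
Modifier = Callable[[Image], Image]    # a stack entry maps an image to a new image


def brightness(amount: float) -> Modifier:
    """Simple stack modifier: add `amount`, clamped to 1.0."""
    return lambda img: [min(1.0, p + amount) for p in img]


def contrast(factor: float) -> Modifier:
    """Simple stack modifier: scale around mid-grey, clamped to 0..1."""
    return lambda img: [min(1.0, max(0.0, (p - 0.5) * factor + 0.5)) for p in img]


def node_link(node_tree: Callable[[Image], Image]) -> Modifier:
    """Hypothetical 'Node link' modifier: send the image at this point in the
    stack into a node tree and hand its output to the next modifier."""
    def apply(img: Image) -> Image:
        return node_tree(list(img))  # pass a copy so the tree can't mutate the input
    return apply


def evaluate_stack(frame: Image, stack: Sequence[Modifier]) -> Image:
    """Apply modifiers top to bottom, like a strip's modifier stack."""
    for modifier in stack:
        frame = modifier(frame)
    return frame
```

With `stack = [brightness(0.25), node_link(invert), contrast(2.0)]`, where `invert` is any callable standing in for a compositor tree, the contrast step operates on whatever the node tree returned, which is the “piped back into the VSE” behaviour described above. Simple edits never touch `node_link`; branching work lives inside the tree.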

I’ll try some mockups, sorry for the ugliness

So here I’ve outlined the idea of having a separate section for effect nodes in the compositor and making it work kind of like materials do. You have a node tree for every material, and the materials sit inside their own datablocks and can be accessed with the dropdown menu. Obviously, the nodes themselves should be those from the rendering section, not from the material one.

As for me, this is the least disruptive solution for now. But I would speculate that there may be an integrated solution where everything going on in the scene is passed through the VSE, thus avoiding the current duality of rendering either 3D or the VSE. The 3D scene would be locked in the first layer of the VSE, where you could decide to render OpenGL or Cycles, or disable it and go purely for video editing. This way, when you put a scene from another scene into the VSE timeline, you would get exactly the output of that other scene, whether it is OpenGL, a video or a render. You would be able to make complex and long cuts with ease, divide your work among many people who produce short sequences, and assemble them at the end like we do with 3D scenes now, etc. For now, there is really no unified image-processing pipeline in Blender, and it is probably too large a goal.