@Okidoki

It’s subtle, and it’s hard to explain without a direct comparison. But when an icon is shown on a big monitor, stationary and meant to attract the eye, even a non-artist can tell the difference between Blender’s interpolation and a standard one. I have people asking me to make it smoother, but not so smooth that it loses detail, and that’s something I can’t achieve in Blender. They see the result from Photoshop and say ‘Oh cool, you fixed the crunchy pixels’.

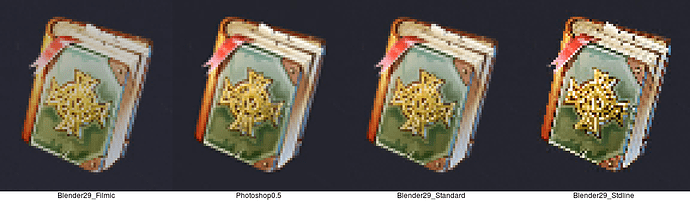

I’ve got two examples here to illustrate what I mean:

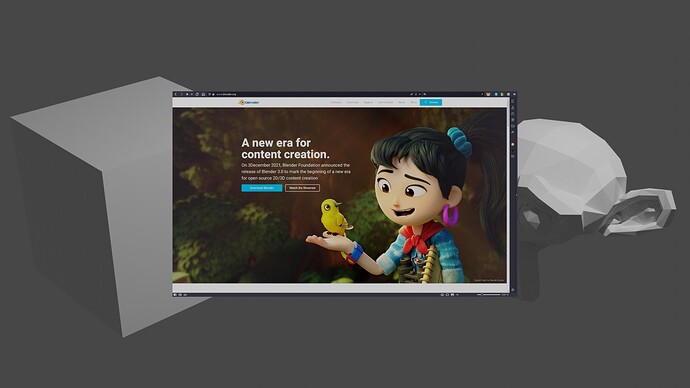

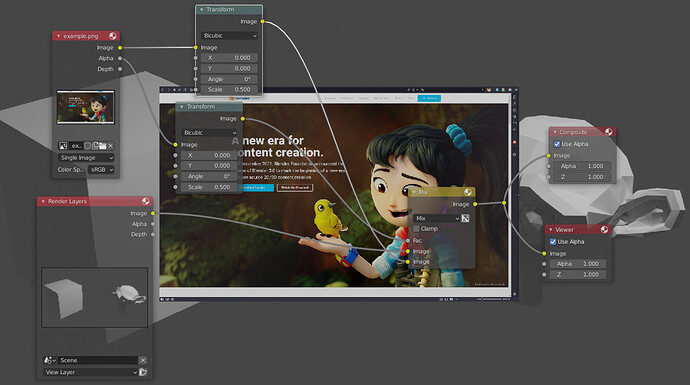

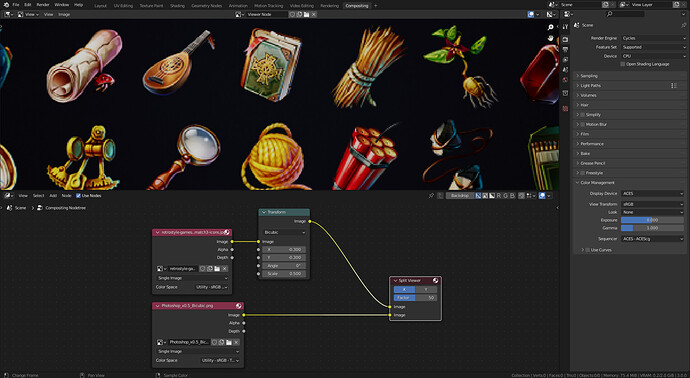

This uses the same setup as yours, a simple bicubic transform to half scale in Blender:

The result is EXACTLY the same when using bilinear, or when using nearest neighbor with a smooth or blur before it.

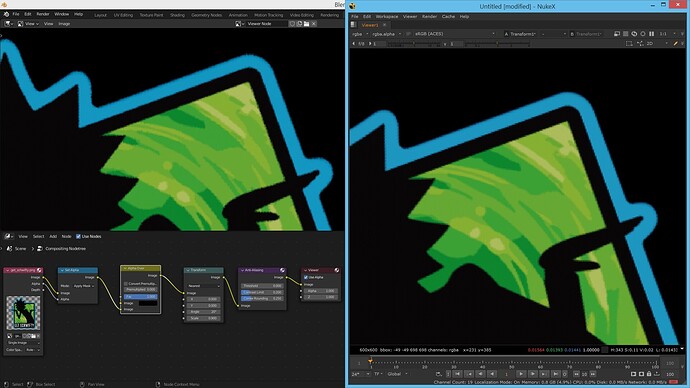

And here is Bicubic done in Photoshop (not Bicubic Sharper or Automatic, just the regular Bicubic option):

If you download that and flick it on and off over the next image, the difference starts to pop out.

Blender is losing fine detail because the pixels are being thickened, not just softened.
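One possible explanation for the thickening, offered as an assumption rather than a confirmed fact, is the choice of cubic kernel. As far as I know, Blender’s “Bicubic” has, at least in some versions, used a cubic B-spline, which is smooth and all-positive but does not pass through the original pixel values. A Keys-style cubic convolution kernel (a = -0.5, i.e. Catmull-Rom) does pass through them; whether Photoshop or Krita use exactly that kernel is also an assumption. The Python sketch below resamples a one-pixel line onto the same grid with both kernels. The function names are hypothetical, and the edge clamping is my own choice.

```python
import math


def keys_cubic(x: float, a: float = -0.5) -> float:
    """Keys cubic convolution kernel; a = -0.5 is Catmull-Rom.

    Interpolating: 1 at x = 0 and 0 at every other integer.
    """
    x = abs(x)
    if x < 1.0:
        return (a + 2.0) * x**3 - (a + 3.0) * x**2 + 1.0
    if x < 2.0:
        return a * x**3 - 5.0 * a * x**2 + 8.0 * a * x - 4.0 * a
    return 0.0


def cubic_bspline(x: float) -> float:
    """Uniform cubic B-spline kernel: smooth and all-positive, but it does
    not pass through the original samples (1/6, 4/6, 1/6 at integers)."""
    x = abs(x)
    if x < 1.0:
        return (4.0 - 6.0 * x**2 + 3.0 * x**3) / 6.0
    if x < 2.0:
        return (2.0 - x) ** 3 / 6.0
    return 0.0


def resample_1d(signal, positions, kernel):
    """Sample `signal` at fractional `positions` with a 4-tap cubic kernel.

    Positions are pixel indices (pixel centers at integers); taps that
    fall outside the signal are clamped to the nearest edge pixel.
    """
    out = []
    for p in positions:
        base = math.floor(p)
        acc = 0.0
        for i in range(base - 1, base + 3):
            j = min(max(i, 0), len(signal) - 1)
            acc += signal[j] * kernel(p - i)
        out.append(acc)
    return out


line = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]  # a one-pixel-wide feature
same_grid = [float(i) for i in range(len(line))]  # no scaling at all
print("keys:   ", [round(v, 3) for v in resample_1d(line, same_grid, keys_cubic)])
print("bspline:", [round(v, 3) for v in resample_1d(line, same_grid, cubic_bspline)])
```

With the Keys kernel the line comes back unchanged; with the B-spline it is spread to about [0.167, 0.667, 0.167] across three pixels even though nothing was scaled, which is the kind of thickening described above.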

In addition, every pixel is shifted half a pixel away from its original position.
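A uniform half-pixel shift like this could come from the coordinate convention used to map each output pixel back to the input image. The sketch below (helper names are hypothetical) compares a pixel-center convention, where pixel i is centered at i + 0.5 in both images, with a corner convention that simply multiplies the index by the scale factor. Which convention Blender actually uses is not established here; this only shows how large the discrepancy would be.

```python
def src_coord_centers(dst_x: int, scale: float) -> float:
    """Input position sampled for output pixel dst_x (pixel-center convention).

    Pixel i covers [i, i + 1) with its center at i + 0.5 in both images;
    the result is a fractional input pixel index.
    """
    return (dst_x + 0.5) * scale - 0.5


def src_coord_corners(dst_x: int, scale: float) -> float:
    """Input position sampled for output pixel dst_x (corner convention)."""
    return dst_x * scale


scale = 2.0  # half-size output: 2 input pixels per output pixel
for x in range(4):
    c = src_coord_centers(x, scale)
    k = src_coord_corners(x, scale)
    print(f"out {x}: centers -> {c}, corners -> {k}, shift = {c - k}")
```

At half scale the two conventions disagree by (scale - 1) / 2 = 0.5 input pixels for every output pixel, so mixing them up would produce exactly this kind of uniform offset.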

Krita shifts the image one pixel to the left, but otherwise produces almost exactly the same bicubic result as Photoshop, and of course it is better documented, should we want to dig into this further:

This suggests to me that the algorithms in Photoshop and Krita are based on a similar model, which makes Blender the odd one out, with something that is not the standard bicubic operation.

Take a look for yourself and you’ll see that Blender’s bicubic is not doing the standard thing that retains the optimum amount of detail. What it’s doing, and how, is what I’d like to know. I really want to break that down and understand the problem better.

I still have faith that we can at least get the same effect as Krita and Photoshop using the available nodes. Granted, you can get close but not perfect using soften/sharpen, though I still can’t retain the same level of detail as a standard bicubic scale. And the Anti-Aliasing node is KIND of OK in SOME places, but I notice it fails on a lot of edges, and you end up with gritty/noisy contours like the example above when compared to Nuke.
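If the B-spline assumption holds (it is not confirmed here), it would also explain why soften/sharpen gets close but not all the way. At integer positions a cubic B-spline acts like a fixed blur with taps [1/6, 4/6, 1/6]. A 3-tap sharpen of [-1/6, 4/3, -1/6] is only a first-order approximation of that blur’s inverse; the exact inverse is a recursive (IIR) prefilter, which a small fixed sharpen kernel cannot reproduce. The sharpen taps and helper names below are my own illustrative choices, not the values used by any Blender node.

```python
def cubic_bspline(x: float) -> float:
    """Uniform cubic B-spline kernel (all taps positive, non-interpolating)."""
    x = abs(x)
    if x < 1.0:
        return (4.0 - 6.0 * x**2 + 3.0 * x**3) / 6.0
    if x < 2.0:
        return (2.0 - x) ** 3 / 6.0
    return 0.0


def convolve_1d(signal: list[float], taps: list[float]) -> list[float]:
    """Apply a symmetric, odd-length filter, clamping indices at the edges."""
    r = len(taps) // 2
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k, t in enumerate(taps):
            j = min(max(n + k - r, 0), len(signal) - 1)
            acc += t * signal[j]
        out.append(acc)
    return out


line = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]  # a one-pixel-wide feature
blur = [cubic_bspline(d) for d in (-1.0, 0.0, 1.0)]  # [1/6, 4/6, 1/6]
sharpen = [-1.0 / 6.0, 4.0 / 3.0, -1.0 / 6.0]  # hypothetical first-order inverse
blurred = convolve_1d(line, blur)
restored = convolve_1d(blurred, sharpen)
print("blurred: ", [round(v, 3) for v in blurred])
print("restored:", [round(v, 3) for v in restored])
```

The peak climbs from about 0.667 back to about 0.833, with a little ringing (about -0.028) on either side, which matches the experience of getting close but never quite matching a true interpolating bicubic.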

Does anyone have any additional information that explains the differences here better?

Particularly if anyone wants to try matching the results in the compositor and show me what they get.

Kind regards,

Simon.

, no worries! I suspect, from the slight displacement of pixels, that the issue is, as you suggest, to do with where the pixel samples are placed. That might explain why features appear slightly ‘thicker’ after the transform.
