I think there is a general misunderstanding.

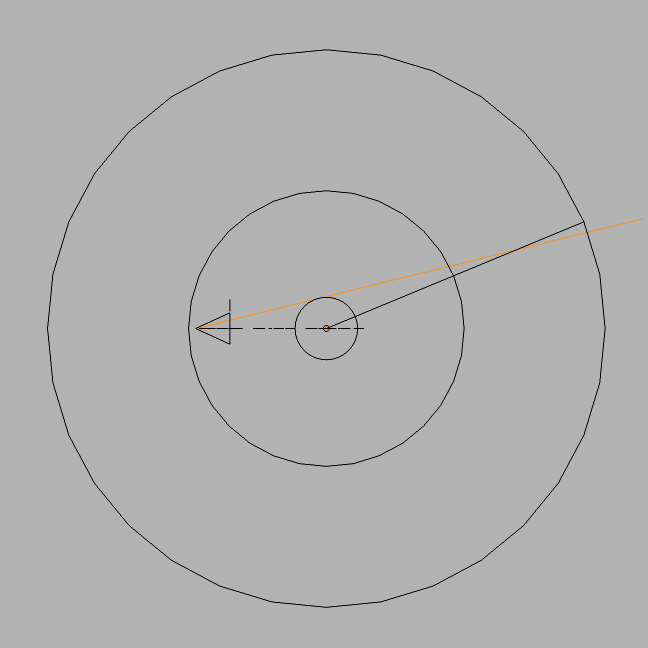

To me it seems you are not using your angular maps as an environment texture, but simply as an image affecting the mirror channel of the material?

Your method of projection might be simple, but it is wrong, or at least not proven, if the idea is to create an angular map.

You create some kind of map that works to an extent. But especially in an animation it is not usable.

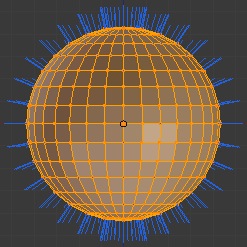

For the OP I'd recommend using my spherical2angular and simply drawing in Blender, directly on the sphere's texture.
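For reference, the math behind a spherical-to-angular conversion can be sketched roughly like this. This is not the actual spherical2angular code, just a minimal Python sketch assuming the usual light probe convention: the centre of the image looks along the forward axis, the radius grows linearly with the angle, and the rim looks straight backwards. The function names and the axis choice (+Z forward, +Y up) are my own assumptions.

```python
import math

def angular_to_direction(u, v):
    """Map angular-map coords u, v in [-1, 1] to a unit direction.

    Assumed convention: +Z is forward (image centre), +Y is up.
    Returns None for points outside the unit disc.
    """
    r = math.hypot(u, v)
    if r > 1.0:
        return None
    phi = math.pi * r            # angle away from the forward axis
    theta = math.atan2(v, u)     # angle around the forward axis
    return (math.sin(phi) * math.cos(theta),
            math.sin(phi) * math.sin(theta),
            math.cos(phi))

def direction_to_equirect(d):
    """Map a unit direction to equirectangular coords s, t in [0, 1]."""
    x, y, z = d
    lon = math.atan2(x, z)                    # -pi..pi, 0 = forward
    lat = math.asin(max(-1.0, min(1.0, y)))   # -pi/2..pi/2
    return (0.5 + lon / (2.0 * math.pi), 0.5 + lat / math.pi)

def angular_to_equirect(u, v):
    """For one angular-map pixel, find where to sample the spherical map."""
    d = angular_to_direction(u, v)
    return None if d is None else direction_to_equirect(d)
```

To build the angular image you would loop over every output pixel, convert it to `u, v` in [-1, 1], and sample the spherical map at the returned `s, t`.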

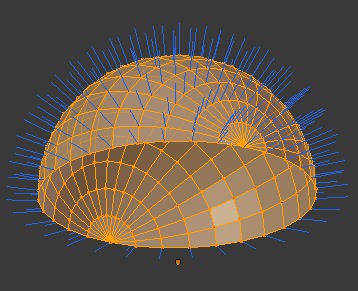

The alternative is to recreate the professional version of a lightprobe, a fisheye lens.

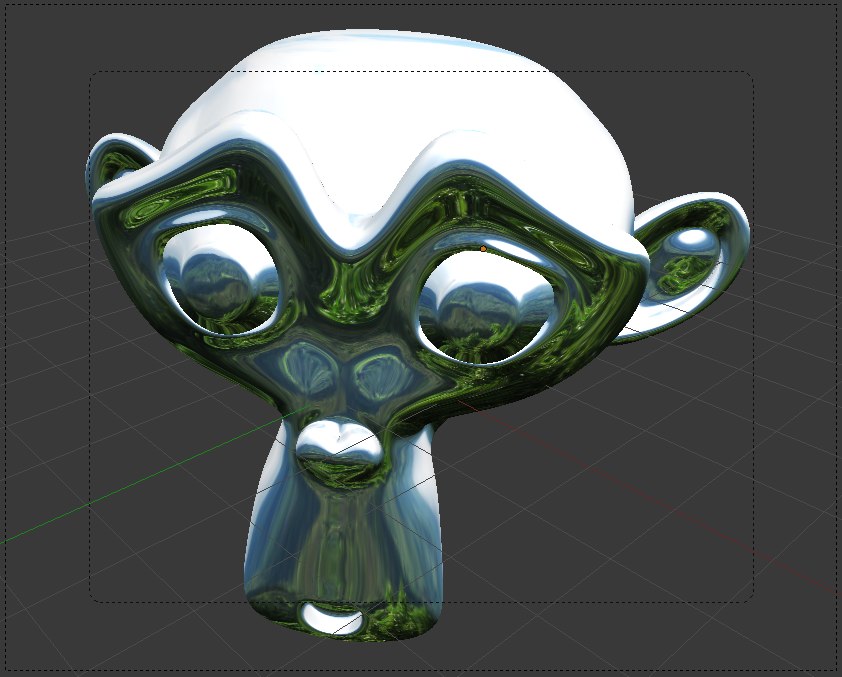

Like this:

http://www.pasteall.org/blend/7365

The IOR/Fresnel is most likely off compared to a real lens, so the distortion might be a tad off. It's something to play with, but it's at least close to an angular map. It's the best and only way to project a planar image onto a hemisphere.

But this too is at best an approximation. If the lens settings were correct, the image would hold no information towards the edges either, because the rays would bend almost perpendicular to the camera axis towards the edge of the lens, and there is simply nothing there for the ray to see.
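To put a number on that edge problem, here is a small Python sketch. It assumes an idealised 180° fisheye where the ray angle grows linearly with the image radius, and a flat image placed perpendicular to the camera axis at some distance in front of the lens. Neither assumption comes from the .blend above, and the function names are made up for illustration.

```python
import math

def ray_angle(r, fov_deg=180.0):
    """Angle (radians) between the camera axis and the ray
    for a normalized image radius r in [0, 1]."""
    return r * math.radians(fov_deg) / 2.0

def hit_offset_on_plane(r, plane_distance=1.0, fov_deg=180.0):
    """Distance from the axis at which the ray hits a plane that is
    perpendicular to the axis. Returns None if the ray runs parallel
    to the plane (or away from it) and never hits it."""
    angle = ray_angle(r, fov_deg)
    if angle >= math.pi / 2 - 1e-9:
        return None
    return plane_distance * math.tan(angle)

for r in (0.0, 0.5, 0.9, 0.99, 1.0):
    print(r, hit_offset_on_plane(r))
```

Halfway out, the ray still lands one plane-distance off axis, but close to the rim the hit point runs away towards infinity, so a finite plane has nothing to contribute there.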

You are basically mapping the plane to a hemisphere, I am projecting it onto a hemisphere. That's the huge difference. (Not sure if those are the correct English terms, though.)

The big question is whether the OP is making a still or an animation.

An angmap is a useless background IMO. It's good for reflections and IBL.

I'd simply use a plane (backdrop), or part of a cylinder if it is an animation without too much relative world movement.

Every raytracer I use (octane, indigo, luxray, thea, vray) is perfectly capable of using spherical maps… highres for background, lowres for IBL.

Only Blender still works with cubemaps for environment maps (background), and angmaps… well, I am not certain what a good use for them is at all.