I could possibly attempt that if I really needed to, but there are several parts of it that are still really perplexing to me. It may as well be brain surgery.

I don’t see this coming to master anytime soon - Sergey already voiced his disagreement…

So… can such a thing be achieved in Python with temporary outputs and running the exe in the background?
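For what it's worth, the "temporary outputs plus external exe" part can be sketched with the standard library alone. This is only a rough sketch: `run_external_denoiser` and the `{input}` / `{output}` placeholders are hypothetical names made up for illustration, and the actual executable name and its flags are deliberately left to the caller because they are not given in this thread.

```python
import subprocess
import tempfile
from pathlib import Path


def run_external_denoiser(command, noisy_bytes, timeout=60.0):
    """Run an external tool on a temporary file and return its output bytes.

    `command` is a list of arguments in which the literal strings
    "{input}" and "{output}" are replaced by temporary file paths.
    The executable and its flags are assumptions supplied by the caller.
    """
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "noisy.pfm"
        dst = Path(tmp) / "denoised.pfm"
        src.write_bytes(noisy_bytes)

        # Substitute the placeholders without touching other braces.
        args = [
            a.replace("{input}", str(src)).replace("{output}", str(dst))
            for a in command
        ]

        # Blocks until the tool exits; raises if it fails or times out.
        subprocess.run(args, check=True, timeout=timeout)
        return dst.read_bytes()
```

`subprocess.run` waits for the tool to finish. If the UI has to stay responsive in the meantime, `subprocess.Popen` with polling would be the non-blocking variant of the same idea.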

As I saw this coming (it being turned down), I started to look into the Blender Python API… while I can program quite well, I just never jumped into Blender Python - also as I can’t yet fully estimate its limitations

But it seems to me that the Python API was originally meant for ‘little tools’ and is very limited…

Maybe someone can / will do this much faster and better than me anyway, but I thought it’s a good example to ask whether it is feasible.

Questions would be:

- Google says it’s not easy (or even possible) to get access to the raw render result unless it’s saved somewhere first (if that is the case - why the hell?)

- writing a binary stream of floats to a file (the PFM file) - how fast is Python there? No point doing it if the loop is slow.

- reading the binary PFM back - same question.

- overwriting the render result / creating a new channel with the denoised result that is accessible in the compositor - possible?
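On the two PFM questions, here is a minimal sketch of writing and reading a 3-channel PFM with only the standard library. It assumes the pixels are already available as a flat, row-major sequence of RGB floats; how to get them out of the render result is a separate question and not covered here. `write_pfm` and `read_pfm` are illustrative names. The bulk `array.tofile` / `array.fromfile` calls move the whole buffer at once, so there is no per-float Python loop on the I/O side.

```python
import array
import sys


def write_pfm(path, width, height, pixels):
    """Write a flat sequence of RGB floats as a little-endian colour PFM.

    Rows are written in the order given. PFM itself stores scanlines
    bottom to top, so flip beforehand if your data is top to bottom.
    """
    data = array.array("f", pixels)
    if len(data) != width * height * 3:
        raise ValueError("expected width * height * 3 floats")
    if sys.byteorder == "big":
        data.byteswap()  # the header below declares little-endian data
    with open(path, "wb") as f:
        # "PF" = 3 channels; a negative scale means little-endian floats.
        f.write(b"PF\n%d %d\n-1.0\n" % (width, height))
        data.tofile(f)


def read_pfm(path):
    """Read a colour PFM and return (width, height, array of floats)."""
    with open(path, "rb") as f:
        if f.readline().strip() != b"PF":
            raise ValueError("this sketch only handles 3-channel PFM files")
        width, height = map(int, f.readline().split())
        scale = float(f.readline())
        data = array.array("f")
        data.fromfile(f, width * height * 3)
    file_is_little_endian = scale < 0
    if file_is_little_endian != (sys.byteorder == "little"):
        data.byteswap()
    return width, height, data
```

A full HD frame is 1920 × 1080 × 3 floats, roughly 24 MB, so the file round trip itself should be dominated by disk speed rather than by Python.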

I know this is a bit off topic, but I am curious…

BlendLuxCore has it already implemented:

https://forums.luxcorerender.org/viewtopic.php?f=5&t=840&start=130#p9547

To install it you have to merge the bins into the addon folder… at least that worked for me

(together with photoGI - which is still heavy wip)

This Blender Stack Exchange answer on adding compositor nodes via an addon seems to suggest this path is not possible

https://blender.stackexchange.com/questions/57138/can-custom-nodes-be-written-for-the-compositor

Unless someone else has a more up to date response, something that doesn’t require recompiling blender from source

Read the rest of the discussion, it’s a lot more than just Sergey saying no.

What the Blender developers are concerned about is adding a denoising option that is optimized for low sample counts, but at the cost of scaling up poorly and killing detail even with high sample numbers. This is especially so if the resultant patch adds 35 megabytes to the size of Blender itself.

The idea they have is to combine some of the new denoising technology with what Blender already has, so we keep the detail preservation while needing fewer samples than before.

So… is “realtime Cycles” near, or am I getting this wrong?

… and what is expected from the future ???

what will happen between Eevee and Cycles ???

hehehe interesting questions here …

here everything is back in play …

Why not create an addon that downloads the 35 MB bin and installs it only once in the configuration folder?

Then it would not need to be downloaded every time together with Blender.
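The download-once idea could look roughly like the sketch below. The function name `ensure_binary`, the URL, and the file name are all placeholders, since no actual download location is given in this thread. Inside Blender, the target directory could for example be the one returned by `bpy.utils.user_resource('CONFIG')`; the sketch takes a plain path so it also runs outside Blender.

```python
import shutil
import urllib.request
from pathlib import Path


def ensure_binary(url, target_dir, filename):
    """Download `url` into `target_dir` once and reuse it afterwards.

    Returns (path, downloaded), where `downloaded` is False when the
    file was already present and no network access was needed.
    """
    target = Path(target_dir) / filename
    if target.exists():
        return target, False

    target.parent.mkdir(parents=True, exist_ok=True)
    # Download to a temporary name first so an interrupted transfer
    # never leaves a half-written file under the final name.
    partial = target.with_name(target.name + ".part")
    with urllib.request.urlopen(url) as response, open(partial, "wb") as out:
        shutil.copyfileobj(response, out)
    partial.replace(target)
    return target, True
```

A real addon would also want to verify a checksum of the downloaded file and to ask the user before fetching anything.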

That, I think, is the addon path I was discussing above. Is it possible for compositing nodes?

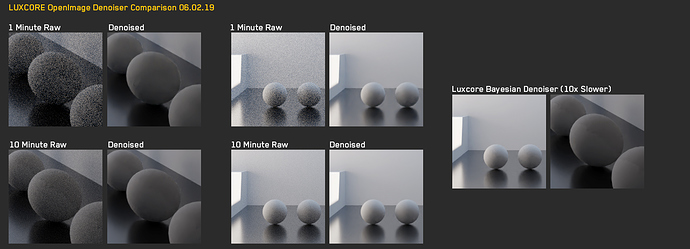

I agree with Sergey - after testing some more in LuxCore, it really is good for low sample counts, but it does not scale well… 1 min and 10 min renderings denoise to the same result (visually)

Edit: it is not usable in any production environment - but I have a hunch that rendering at a higher resolution with a lower sample count will yield better results - so I guess there is some room for improvement in the denoiser

Maybe this one together with E-Cycles by @bliblubli could be used for viewport preview only, where low sample counts are used anyway?

At least in my tests, denoising takes more than a second on a 4 core 8 thread CPU for full HD, which is a pretty common case. AVX-512 is not available on current consumer CPUs if I’m right, or only on the very latest. On top of that, the viewport render would have to generate extra passes, which would also slow things down. So it wouldn’t be very usable I think, at least not in the near future. But I’m investigating.

@Atair, I find it very hard to believe that denoising a 1 min and a 10 min render will produce roughly the same denoised image. I don’t see how that is possible (well, assuming the two noisy images are not identical for some reason ;). Could you please post both the noisy and denoised versions of these two different SPPs?

BTW we didn’t optimize the filter for low SPPs and we never said that we did. I don’t know where this comes from.

@bliblubli, AVX-512 is available on some high-end consumer CPUs, e.g. the Core i9 X-series. Denoising a full HD image on the higher core count versions of those should take ~200-300 ms.

I concentrate on things that benefit the most first, but I have it on my radar

Here is a comparison. I think that while it excels at lower sampling rates, it has a hard time getting rid of artifacts at object borders - the stock denoisers are better there, although much, much slower.

These are the default settings from the current LuxCore implementation - maybe there is a bug / wrong setting?

Most of these artifacts seem to be gone now. Reading the LuxCore forum, they tweaked the normal pass and other things a lot in the last couple of days.

I may be wrong, but I recommend taking a look at their topic

https://forums.luxcorerender.org/viewtopic.php?p=9351#p9351

Could you show these pictures in full size in a lossless format (e.g. PNG)?

@marcoG_ita

Most images in that thread are outdated. We now use an anti-aliased shading normal AOV, and the albedo AOV is now correct and also works with mirrors and glass.

@B.Y.O.B

Indeed, my intention was to suggest reading from page 7, which I linked, through to the last page, where recent tests show that those artifacts are gone and it works pretty well

@Atair, did you use albedo and normal buffers for these images? It seems to me that you didn’t (there shouldn’t be such artifacts at object borders if a normal buffer is specified too). Or maybe there was some issue with them (e.g. aliasing). Either way, using such a trivial scene (which doesn’t even have textures!), and a single image, is hardly a good way to test final-frame rendering.