Make sure that in Edit mode those edges aren’t marked as sharp (clear them if they are). Also make sure that the points there are welded - sometimes heavy meshes like that have funky UV islands. You can also increase the size of your light, which will usually help a little. That’s a Shadow Terminator issue that seems to pop up in a lot of path tracers I’ve used. In Cycles you can adjust the Shadow Terminator Offset … perhaps that’s the same as the “Epsilon” setting that @burnin mentioned?

Does anyone know why Luxcore uses so much CPU and RAM even when GPU rendering? I’m assuming this might have something to do with noise filtering, but it’s crazy how even rendering small scenes will BSOD my machine (as I watch CPU usage and RAM skyrocket - even with just an icosphere in the scene). My system isn’t wimpy - an i7 5960 8-core CPU, 32GB RAM, 8GB RTX 2070 - but trying to render some of the demo scenes at 2X resolution on GPU will crash me out every time.

I’m hoping I’m just missing a setting somewhere…

Yes, the epsilon value is there to fix the terminator issue.

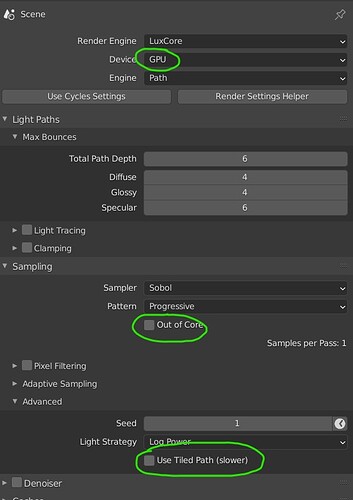

For higher-resolution renders you can use the tiled sampler and/or the Out-of-core setting, which lets the renderer spill from VRAM into system RAM.

PS

there’s also this

Out-of-core settings? Can you give more information pls?

If I change the epsilon value, it crashes Blender.

https://forums.luxcorerender.org/viewtopic.php?f=5&t=2102&sid=cff075c867f2c0b4f23114b0dcf9d518

If it’s reproducible, report the bug: https://github.com/LuxCoreRender/BlendLuxCore/issues/new/choose

Out of core uses system RAM along with GPU RAM when necessary to fit the scene into memory (as opposed to running out of VRAM on the GPU and failing). You have to have GPU set as your rendering device and then it will show up under Sampling:

LuxCore does CPU+GPU rendering by default, and I assume that is what you are observing. You can scale down the number of CPU threads if you want (i.e. down to 0, to do GPU-only rendering).

Is there something equivalent for Cycles?

AFAIK, when using CUDA, CPU RAM is supposed to be automatically used. I’m sure that over time, this will improve as I’ve still seen out of memory errors - but I have faith that it will get better.

From the Release Notes:

CUDA rendering now supports rendering scenes that don’t fit in GPU memory, but can be kept in CPU memory. This feature is automatic but comes at a performance cost that depends on the scene and hardware. When image textures do not fit in GPU memory, we have measured slowdowns of 20-30% in our benchmark scenes. When other scene data does not fit on the GPU either, rendering can be a lot slower, to the point that it is better to render on the CPU.

More here: https://wiki.blender.org/wiki/Reference/Release_Notes/2.80/Cycles#GPU_rendering

Is that ridge in the background using displacement? Displacement is a huge memory hog (something I hope they will fix - it’s kind of ridiculous)

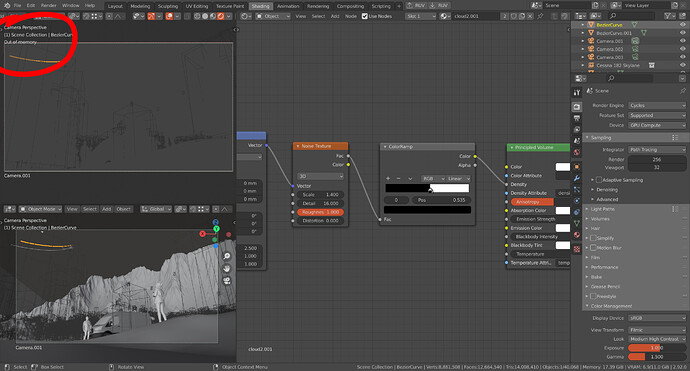

That looks like an “out of memory” for the system, not a CUDA out of memory error. Can you open up Task Manager and see if Blender is using up all of your system memory?

I have not checked up on Dade and the team much since Cycles really took off (I rendered a few images with Lux Classic), but I am left to wonder if they are now using scene-level dicing like Cycles does (with no cache implemented yet)?

Classic Luxrender used a technique where a ray hits the mesh, then the mesh is subdivided at that spot, data the engine then forgets when the ray moves on. Such a technique allows for nearly unlimited dicing with little memory, but with the drawback of being incredibly slow.

I think the thread got kind of hijacked. Ruby_en is pointing out that he ran out of memory using Cycles … and I’m wondering if Cycles stores geometry data on the GPU VRAM and textures are shunted off to CPU RAM when there isn’t space. Regardless, I’ve run out of memory a LOT when using Cycles displacement.

Interestingly enough, Octane has very fast displacement which uses very little memory. Not sure how they’re doing it, but would love to see something similar in Cycles (so, now we’ve mentioned TWO non-Luxcore renderers - lol)

The Out-of-core option doesn’t seem to work properly. I have already opened a bug report about it. Also, the Tiled Path option will lead you to a whole new set of problems. Mainly, render time can explode if you don’t know how to set the options properly, and it doesn’t save you from VRAM issues either. So if you have a normal amount of VRAM (6GB in my case), you will soon run into problems anyway if you work with bigger textures or higher resolutions.

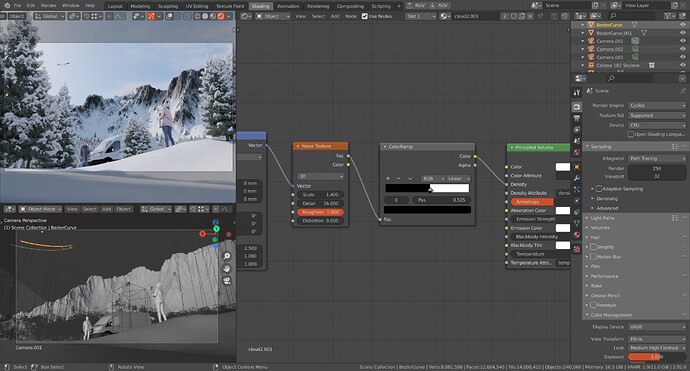
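To put rough numbers on why bigger textures and higher resolutions eat VRAM so quickly, here is a back-of-the-envelope sketch. It assumes uncompressed storage and counts a single buffer only; real renderers keep several buffers (AOVs, denoiser data, acceleration structures), so treat the results as a lower bound. The function name and the default channel/byte layout are my own assumptions, not anything taken from LuxCore or Cycles.

```python
def image_bytes(width, height, channels=4, bytes_per_channel=4):
    """Uncompressed size of one image buffer in bytes.

    Defaults assume RGBA with 32-bit floats per channel (an assumption,
    not a statement about any renderer's internal format).
    """
    return width * height * channels * bytes_per_channel


def to_mib(num_bytes):
    """Convert bytes to MiB for easier reading."""
    return num_bytes / 2**20


# One float RGBA buffer at 1080p versus the same frame at 2X resolution.
hd = image_bytes(1920, 1080)
uhd = image_bytes(3840, 2160)
print(f"1920x1080 float RGBA: {to_mib(hd):.1f} MiB")
print(f"3840x2160 float RGBA: {to_mib(uhd):.1f} MiB ({uhd // hd}x)")

# A single 8K texture stored as 8-bit RGBA.
tex = image_bytes(8192, 8192, channels=4, bytes_per_channel=1)
print(f"8192x8192 8-bit RGBA texture: {to_mib(tex):.0f} MiB")
```

Doubling the resolution quadruples the pixel count, so every per-pixel buffer grows 4x, and a handful of 8K textures can take a big bite out of a 6GB card on their own.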

In Cycles the offloading of memory from VRAM to system RAM works automatically (if the OS supports it). So while it’s still possible to max out the VRAM in Cycles, it’s harder.

Yeah, I’m also running into problems related to memory.

Even though I have enabled out of core, it doesn’t seem to change anything as I’m still getting the out of memory error.

And this is on a machine with 64GB of RAM, so it should not be an issue?

Hmmm - so - in your Preferences>System, are you setting your CPU as a CUDA device as well as the GPU? I’m just wondering if you have to have your CPU checked before it will use the system RAM to make up for VRAM.

If that doesn’t work - or you’ve already tried that, I would submit it as a bug.
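If you want to check which devices are actually ticked without clicking through the Preferences, a small helper like the one below could do it. This is only a sketch: `summarize_devices` is a hypothetical helper, and the stand-in device list (including the device names) exists purely so the snippet runs outside Blender. It relies on each device entry having `name`, `type` and `use` attributes, which is how the Cycles add-on preferences expose them.

```python
from types import SimpleNamespace


def summarize_devices(devices):
    """Split enabled device entries into (gpu_names, cpu_names).

    `devices` is any iterable of objects with `name`, `type` and `use`
    attributes, such as the Cycles add-on's device list.
    """
    gpus = [d.name for d in devices if d.use and d.type != 'CPU']
    cpus = [d.name for d in devices if d.use and d.type == 'CPU']
    return gpus, cpus


# Stand-in data (hypothetical) so the sketch runs without Blender.
fake_devices = [
    SimpleNamespace(name="Example GPU", type="CUDA", use=True),
    SimpleNamespace(name="Example CPU", type="CPU", use=False),
]

gpus, cpus = summarize_devices(fake_devices)
print("Enabled GPU devices:", gpus)
print("Enabled CPU devices:", cpus)
```

Inside Blender's Python console you would pass `bpy.context.preferences.addons['cycles'].preferences.devices` instead of the stand-in list. Whether ticking the CPU changes the out-of-memory behaviour is exactly the open question here, so this only tells you what is enabled, not what will fix it.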

This looks to me like the “terminator problem”.

Unfortunately there is no real solution. You can only try to mitigate the effect by subdividing the geometry further and/or increasing the size of the light source that is causing the problem.
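To see why subdividing helps, here is a simplified 2D sketch: a circle approximated by flat segments, where the smooth shading normal at a vertex points radially outward while the geometric normal of the adjacent flat face points toward that face's midpoint. The artifact gets worse the more these two disagree. This is only an illustration under that simplification (the function name is mine), not how any renderer computes it.

```python
import math


def normal_mismatch_deg(segments):
    """Angle in degrees between the smooth vertex normal and the
    adjacent face's geometric normal, for a circle of `segments` flat faces."""
    # Vertex at angle 0: the smooth (shading) normal points radially outward.
    shading = (1.0, 0.0)
    # The neighbouring face spans 0..2*pi/segments, so its geometric
    # normal points at the face midpoint angle, pi/segments.
    mid = math.pi / segments
    geometric = (math.cos(mid), math.sin(mid))
    dot = shading[0] * geometric[0] + shading[1] * geometric[1]
    # Clamp to guard against tiny floating-point overshoot before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


for n in (8, 16, 32, 64):
    print(f"{n:3d} segments: {normal_mismatch_deg(n):.3f} degrees of mismatch")
```

Each doubling of the segment count halves the mismatch, which is why more subdivision softens the artifact but never removes it completely.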