Isn’t it great that you can do such things on entry-level devices today?

I was pleasantly surprised. I can have a Cycles render running in the background eating up all my CPU, and my Mac not only stays responsive, it still has a good 6 hours of battery life.

Here we go:

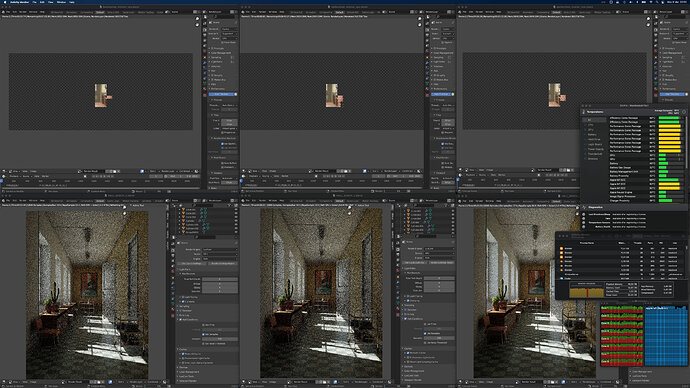

3x Barbershop (Cycles CPU, native M1 Stefan Werner’s build) + 3x HallBench (LuxCore GPU, x86 old crap - official Blender 2.92). All 6 instances rendering at the same time:

Please don’t do this to your lovely tiny machine.

Yes, absolutely. Passively cooled, don’t forget.

My M1 Mini is actively cooled, but I have never heard that fan under any circumstances.

I now hear my Trash Can when it is idling for screen sharing, where I always thought it would be totally silent (and the environment I am currently in is not totally silent either).

And each time it is fully loaded, I put my hand behind the M1 Mini’s exhaust, but the air coming out feels cooler than my room temperature.

I am really looking forward to seeing where we are 2 years from now.

One more thing - multi-GPU Eevee rendering

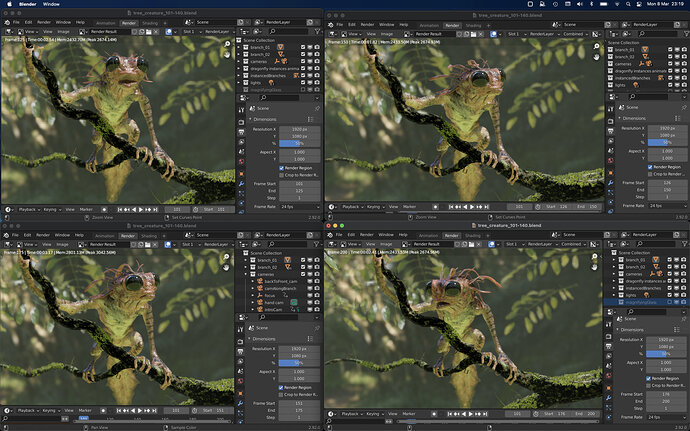

Tree Creature project. 200 frames

1 instance - 3:04,49

4 instances - 1:30,56 (50 frames each instance)
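For anyone who wants the speedup as a number, here is a quick sketch of the arithmetic. It assumes the times above are in `minutes:seconds,hundredths` format (decimal comma); `parse_render_time` is a made-up helper name, not anything from Blender.

```python
def parse_render_time(text):
    """Convert a 'minutes:seconds,hundredths' string to seconds.

    Assumption: the comma is a decimal separator, as in the times quoted above.
    """
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + float(seconds.replace(",", "."))


single = parse_render_time("3:04,49")  # 1 instance, 200 frames
quad = parse_render_time("1:30,56")    # 4 instances, 50 frames each
speedup = single / quad

print(f"{single:.2f} s vs {quad:.2f} s, speedup about {speedup:.2f}x")
```

Under that reading, four instances come out at roughly twice as fast as one, not four times.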

Wait! What? Are you running four instances at once with the frames stepped to simulate 4 GPUs completing an animation render? And it’s faster?

Hadn’t thought of this and now I’m thinking of doing some nasty stuff to my mini.

… and one more interesting thing. The playback frame rate for the Creature project is about 3 fps, but if you play back four Blender instances at once, the frame rate is 3 fps for EACH INSTANCE!

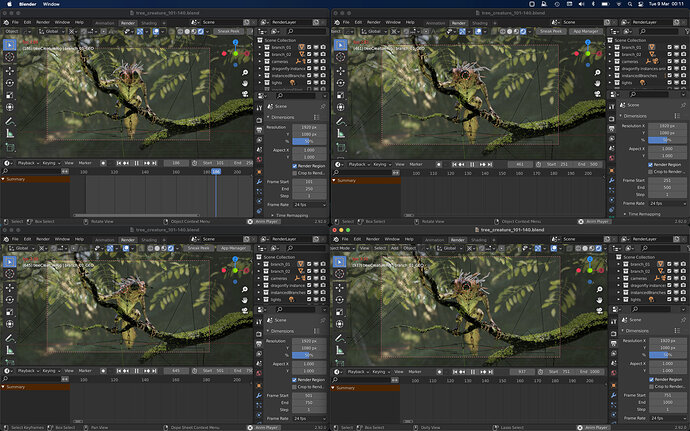

Yes. I have used this trick for a long time for Cycles rendering:

It seems there is some Apple magic in OpenGL to Metal translation or OpenGL multi-GPU implementation. I am not sure what is going on here but it works for Eevee as well.

This technique should definitely be implemented in Blender’s animation rendering. It gives a huge boost.

Awesome! Gonna try this on my next render.

Ha! What do ya know… it worked.

Four instances with 40 frames each rendered a 160-frame animation in half the time it took a single instance to render all 160.

Awesome workflow tip, thanks!

This is actually a pretty amazing trick. It never even occurred to me to have simultaneous renders in multiple instances of Blender, so what you’re saying is that on, say an 80-frame sequence, you can set Blender 1 to render frame 1-20, Blender 2 renders frame 21-40 and so on, and at the end of the process you will get all 80 frames in less time than if you had just rendered them all from a single Blender instance?
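The split described here (frames 1-20, 21-40 and so on) can be sketched in a few lines of Python. This is only an illustration: `split_frame_range` and `range_commands` are hypothetical helper names, and `scene.blend` is a placeholder file. The Blender flags used (`-b` background, `-s` start frame, `-e` end frame, `-a` render animation) are the standard command-line options.

```python
def split_frame_range(first, last, instances):
    """Split first..last (inclusive) into contiguous chunks, one per instance."""
    total = last - first + 1
    base, extra = divmod(total, instances)
    ranges = []
    start = first
    for i in range(instances):
        # The first `extra` chunks get one additional frame each.
        length = base + (1 if i < extra else 0)
        if length == 0:
            continue  # more instances than frames: skip empty chunks
        ranges.append((start, start + length - 1))
        start += length
    return ranges


def range_commands(blend_file, first, last, instances, blender="blender"):
    """Build one background-render command per chunk (nothing is executed here)."""
    return [
        [blender, "-b", blend_file, "-s", str(s), "-e", str(e), "-a"]
        for s, e in split_frame_range(first, last, instances)
    ]


for cmd in range_commands("scene.blend", 1, 80, 4):
    print(" ".join(cmd))
```

Each printed line is a command you could paste into a separate Terminal tab.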

Yeah I was shocked too!

Yes that’s the technique I used. I guess you could step the animations also. I’m guessing that all instances need to be under the GPU’s RAM limit.
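Stepping the frames instead of splitting them into blocks could look something like this. It is a sketch, not a tested workflow: each instance starts one frame later than the previous one and jumps by the number of instances using Blender's `-j` (frame jump) option. `stepped_commands` and `frames_for` are hypothetical names, and `scene.blend` is a placeholder.

```python
def frames_for(first, last, instances, offset):
    """Frames that the instance with the given offset would render."""
    return list(range(first + offset, last + 1, instances))


def stepped_commands(blend_file, first, last, instances, blender="blender"):
    """Build one command per instance; instance k renders every Nth frame."""
    commands = []
    for offset in range(instances):
        commands.append([
            blender, "-b", blend_file,
            "-s", str(first + offset),  # staggered start frame
            "-e", str(last),
            "-j", str(instances),       # skip ahead by the instance count
            "-a",                       # must come after -s, -e and -j
        ])
    return commands


for cmd in stepped_commands("scene.blend", 1, 80, 4):
    print(" ".join(cmd))
```

With interleaved frames the instances finish at about the same time even if the end of the animation is heavier than the start.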

As for it working…

I assumed it would be switching to each instance and rendering in turn, but after watching the file destination folder I could see the PNGs coming in sporadically, some back to back within a second or two. So it’s obviously rendering in tandem.

Cool new workflow for the M1s

Credit @anon80315389

Four instances is just an example of course.

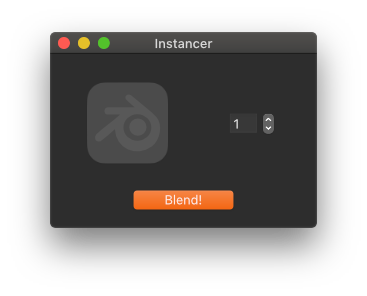

In real work I use command-line rendering with multiple Blender instances. However, it is a bit annoying to create and run the commands. It would be great to have a simple distribution app. Even something extremely primitive:

I do the same, using Terminal to launch multiple instances. Something as basic as your mock-up would be nice.

FWIW, it’s pretty common to do this. Blender even supports a means of doing this more easily. In your .blend file, go to the Output panel in Output Properties. At the bottom of that panel are two checkboxes after the Image Sequence label: Overwrite and Placeholders. Disable Overwrite and enable Placeholders. Save your file and then fire up your multiple instances of Blender for rendering (command line is fastest… I tend to use a bash script) and you don’t have to worry about setting frame counts per instance.

What’s happening when you set those toggles is that when an instance of Blender is rendering a frame, it creates an empty “placeholder” file while it processes. And since you’ve disabled overwriting, any other instance of Blender running your .blend file will see that placeholder and skip it, moving on to the next frame (and making a placeholder for that). It’s a “poor man’s render farm” technique that we’ve had since the early years of Blender and it works really quite well… especially for CPU-rendered scenes that don’t fully tax the CPU on a single frame.
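As a rough sketch of the launcher side of this: once the .blend file has been saved with Overwrite off and Placeholders on (in the Python API those correspond to `render.use_overwrite` and `render.use_placeholder`), every instance can be started with the exact same command. The helper names below are hypothetical, `scene.blend` is a placeholder, and it assumes a `blender` executable is on your PATH.

```python
import subprocess


def placeholder_commands(blend_file, instances, blender="blender"):
    """Every instance gets the same command; the placeholder files
    written by Blender decide which frame each one picks up next."""
    return [[blender, "-b", blend_file, "-a"] for _ in range(instances)]


def launch_all(commands):
    """Start all commands at once and wait for them; returns the exit codes."""
    processes = [subprocess.Popen(cmd) for cmd in commands]
    return [p.wait() for p in processes]


commands = placeholder_commands("scene.blend", 4)
for cmd in commands:
    print(" ".join(cmd))
```

Calling `launch_all(commands)` would actually start the renders. It is left out of the snippet so that running it only prints the commands.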

To add to your tip: I read somewhere in this thread a suggestion to render PNG, but I recommend using OpenEXR with Float (Half) and the DWAA (lossy) codec. You’ll have greater bit depth and roughly 6 times smaller files, with practically no visible artifacts.
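If you prefer to set this from a script, something along these lines should work. Treat it as a sketch: `use_half_float_dwaa` is a made-up helper, and the fake scene at the bottom only exists so the snippet runs outside Blender. Inside Blender you would pass `bpy.context.scene` instead.

```python
from types import SimpleNamespace


def use_half_float_dwaa(scene):
    """Switch a scene's output to OpenEXR, 16-bit half float, DWAA codec."""
    settings = scene.render.image_settings
    settings.file_format = "OPEN_EXR"
    settings.color_depth = "16"   # Float (Half)
    settings.exr_codec = "DWAA"   # lossy compression
    return settings


# Stand-in object for a dry run without Blender (assumption: it only
# mimics the attribute layout of a real scene).
fake_scene = SimpleNamespace(
    render=SimpleNamespace(image_settings=SimpleNamespace())
)
result = use_half_float_dwaa(fake_scene)
print(result.file_format, result.color_depth, result.exr_codec)
```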

Thank you so much for your valuable info.

I can’t agree with the “works really quite well… especially for CPU-rendered scenes” statement. In my opinion it works a lot better for GPU rendering, because in that case there is an exceptional waste of resources. Part of the work is done by the GPU and part by the CPU, and often one waits for the other.

It seems this placeholders functionality was introduced with multi-server render farms in mind. Now it proves very useful for rendering on a single computer with multi-GPU setups… and on M1 Macs.

I insist that such an optimization should be introduced into Blender in a way that is convenient for the user. I have no big problem with using a terminal. However, I’d prefer a one-click solution. Also, not everyone wants to become a rendering engineer, and some people feel sick when they have to use a terminal.

I’ve done a lot of testing with this. This solution isn’t great for GPUs because most GPUs don’t handle this kind of multiplexing all that well. Jobs tend to be serialized there and it’s tough to get the OS kernel to schedule a job across cores/threads like you can with CPUs. (I’ve had render jobs where I’ve run up to 50 separate instances of Blender on hyperthreaded Xeons… this isn’t something that’s exclusively useful on M1 Macs). The work-around would be to have one Blender instance dedicated to GPU rendering and the rest to CPU (or if you have multiple GPUs, one instance of Blender per GPU… but only if you’re rendering with Cycles… Eevee can’t use multiple GPUs).

I think you are completely mistaken about something. I proved (and others did too) that multi-instance GPU rendering on one machine means big time savings. It is a fact.

Do you want to dispute the facts? Just test it yourself in practice instead of theorizing. I may not have a lot of experience, but I do have machines I can test on. Do you have a multi-GPU machine? Check this out. Do you have an M1 Mac? Just test it. Then we can talk.