Is there any known way of creating MIDI-driven animation in Blender? The last thing I heard about was midilman, but that hasn’t been updated in about 2 years. I’d love to be able to do Animusic-style animation. Any help would be appreciated!

Over the last couple of months I’ve seen a couple of people do things with MIDI: one person had an animated flute or whistle, and someone did this… There are a few results if you do a full forum search for ‘midi’.

There is a way to convert MIDI notes and controller (CC) data to something Blender understands: convert it to CV (control voltage), which can be recorded or rendered into an audio file.

So, in Blender you need to give a keyframe to a parameter, then go to:

graph editor window > key > bake sound to F-curves…and browse to your WAV.

OK, but before you do this, how do you convert MIDI CC to CV?

So, as an example:

In the DAW of your choice you have a CC controlling the cut-off of a filter. This is the audio you’ll actually hear in your music.

At the same time, you need to also route this same CC to control the volume of a separate audio track. On that audio track you place an audio file that is just a stream of samples all at 0dBFS (so, full scale direct current). Then you render this audio track and you can load it into Blender and the animation will have the same curve as the CC that controlled your filter.
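If you would rather skip the DAW routing, the same idea can be sketched in a few lines of Python. This is only an illustration of the principle, not a tested Blender workflow: the function name `cc_to_cv_wav`, the `(time_s, value)` event format, and the 8000 Hz sample rate are all assumptions made for the example. It writes a WAV whose sample level follows the CC value, holding each value until the next event.

```python
import struct
import wave


def cc_to_cv_wav(path, cc_events, duration_s, sample_rate=8000):
    """Write a mono 16-bit WAV whose level follows (time_s, value) CC events.

    Each CC value (0-127) is held until the next event arrives, so the
    result is a stepped "control voltage" style signal. Hypothetical helper.
    """
    events = sorted(cc_events)
    total = int(duration_s * sample_rate)
    frames = bytearray()
    level = 0  # CC value currently being held
    idx = 0    # index of the next event to apply
    for n in range(total):
        t = n / sample_rate
        # Apply every event whose time has been reached.
        while idx < len(events) and events[idx][0] <= t:
            level = events[idx][1]
            idx += 1
        # Map 0..127 onto 0..full scale of a signed 16-bit sample.
        frames += struct.pack("<h", round(level / 127 * 32767))
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(frames))
    return total


# Example: a filter cut-off CC that jumps from 0 to full after half a second.
# cc_to_cv_wav("cutoff_cv.wav", [(0.0, 0), (0.5, 127)], duration_s=1.0)
```

The resulting file is meant to be used the same way as the rendered DAW track described above.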

There are other ways to create the CV wav like using Expert Sleepers plugins or Bitwig, but the way outlined above should work ok.

You could use a similar technique to convert MIDI note data to 1 volt/octave CV and render that to a WAV (although you’ll need something like the Expert Sleepers Silent Way Voice Controller for that).
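For reference, the 1 volt/octave convention itself is simple arithmetic: twelve semitones make one volt. A minimal sketch, assuming MIDI note 60 is chosen as the 0 V reference (that reference is my assumption, and a tool like Silent Way may calibrate differently):

```python
def note_to_volts(note, reference_note=60):
    """Convert a MIDI note number to a 1 V/octave control voltage.

    reference_note is the note that maps to 0 V (an assumed convention).
    """
    return (note - reference_note) / 12.0


# One octave above the reference is 1 V, one octave below is -1 V.
print(note_to_volts(72))  # 1.0
print(note_to_volts(48))  # -1.0
```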

One thing to be aware of is that you’ll be rendering at ultra-low, non-audio frequencies, so make sure that your DAW isn’t filtering out these frequencies (otherwise known as DC offset), because that is the very info you need in the rendered WAV!

Hope this helps!

I hope Noah is still developing midiLman. There’s also http://www.jpfep.net/pages/addroutes/ but I haven’t tried it yet.

I think that what’s needed is full mapping of MIDI channels, notes, CCs, etc. to any Blender parameter via Blender drivers. Audio (FFT) can also be used to help in some cases.

I’ve heard of MIDI to CSV data files such as https://www.fourmilab.ch/webtools/midicsv/ and associated Perl and Python scripts. I don’t know what happens to CCs.
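As far as I understand the midicsv format, controller messages are written as `Control_c` records with the fields track, time (in ticks), record type, channel, controller number and value. Assuming that layout, a rough sketch for pulling the CCs out of such a file could look like this (`read_control_changes` is a made-up helper name, and the sample data is invented for the example):

```python
import csv
import io


def read_control_changes(csv_text):
    """Return (tick, channel, controller, value) tuples from midicsv text.

    Assumes rows of the form: track, time, Control_c, channel, number, value.
    """
    events = []
    for row in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if len(row) >= 6 and row[2].strip() == "Control_c":
            _track, tick, _kind, channel, controller, value = row[:6]
            events.append((int(tick), int(channel), int(controller), int(value)))
    return events


# Invented sample data in the assumed midicsv layout.
sample = """0, 0, Header, 1, 2, 480
2, 0, Control_c, 0, 74, 20
2, 240, Note_on_c, 0, 60, 100
2, 480, Control_c, 0, 74, 90
"""
print(read_control_changes(sample))
```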

Also possible avenues:

Osculator (TouchOSC) : https://osculator.net/forum/forum/support/mobile-apps-ios-android/1086-storing-midi-cc-from-an-external-device-as-a-variable

Max from cycling74 or Plogue Bidule

Ask a VST developer to create it. In the past I had success with Christian at ddmf.eu

Regards, Uber Nemo, studio owner & amateur Blender artist

You could just use something like https://github.com/sniperwrb/python-midi and convert your MIDI into keyframes in Blender through a simple script. Animation Nodes also seems to have some MIDI support if you search for it. If you need real-time, that’s a whole other ball game.
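The core of such a script is mapping MIDI ticks to Blender frames. Here is a minimal sketch of that step only, assuming a constant tempo and notes already extracted as `(start_tick, end_tick, velocity)` tuples (the tuple format, the function names and the defaults are my assumptions, not part of any of the libraries mentioned):

```python
def ticks_to_frame(ticks, ticks_per_beat, bpm, fps, start_frame=1):
    """Convert a MIDI tick position to a frame number at a constant tempo."""
    seconds = ticks / ticks_per_beat * 60.0 / bpm
    return round(seconds * fps) + start_frame


def notes_to_keyframes(notes, ticks_per_beat=480, bpm=120, fps=24):
    """Turn (start_tick, end_tick, velocity) notes into (frame, value) pairs.

    The value is the velocity scaled to 0..1 at note-on and 0.0 at note-off.
    """
    keys = []
    for start, end, velocity in notes:
        keys.append((ticks_to_frame(start, ticks_per_beat, bpm, fps), velocity / 127))
        keys.append((ticks_to_frame(end, ticks_per_beat, bpm, fps), 0.0))
    return keys


# One full-velocity note lasting one beat at 120 BPM and 24 fps.
print(notes_to_keyframes([(0, 480, 127)]))  # [(1, 1.0), (13, 0.0)]
```

Inside Blender, each pair could then be applied by setting the property and calling `keyframe_insert(data_path=..., frame=frame)` on the object. Tempo changes in the MIDI file would need extra handling that this sketch leaves out.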

This Python code snippet seems fine for notes, but I’m not sure it will handle CCs, which are at least as important as the notes themselves for more advanced applications.

There are also similar MIDI control attempts for Adobe After Effects. Not impressive so far.

For real-time MIDI to viz (GLSL shader level) I only know TouchDesigner and Notch.one which are powerful enough to handle live data stream and render on the fly. Other apps usually are video-switchers, i.e. using pre-rendered video clips and transition, etc. between them under MIDI and/or audio control. Much easier but also more limited.

Last time I checked, Blender was able to read frequencies from WAV files just fine, which is all the information you need to calculate a note pitch. No need to convert any MIDI data.
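For what it’s worth, going from a detected frequency to a note number is the standard equal-temperament formula, with A4 = 440 Hz as MIDI note 69. A small sketch (the function name is made up for the example):

```python
import math


def frequency_to_midi_note(freq_hz, a4_hz=440.0):
    """Return the nearest MIDI note number for a frequency in Hz."""
    return round(69 + 12 * math.log2(freq_hz / a4_hz))


print(frequency_to_midi_note(440.0))   # 69 (A4)
print(frequency_to_midi_note(261.63))  # 60 (middle C)
```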

FFT will give you the frequency of the fundamental, but only for single notes from a single instrument. For chords and multiple instruments it will not be enough. Also, to go further you need to decode MIDI CCs to get articulation, effects, etc. If you also have percussion mixed in, it becomes even more difficult to separate instruments.

Animation Nodes has a MIDI file node, and it is very simple to set up a system that will take a file and convert it into an animation. Take a look at this tutorial in the docs:

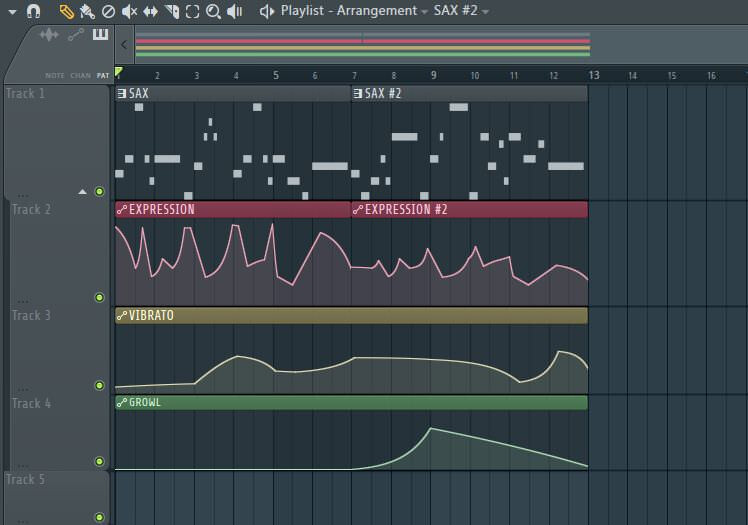

Thanks @Charles_Weaver for this interesting link. This unfortunately will also not work for anything except the simplest MIDI files. Like other solutions mentioned this one lacks the ability to decode and process CC (Continuous Controller) messages which are essential for any realistic MIDI rendering. CCs are used by musicians in their DAW to control expression, nuance, intensity, etc. More (intro) info here: https://professionalcomposers.com/midi-cc-list/ . They look like this (simple case):