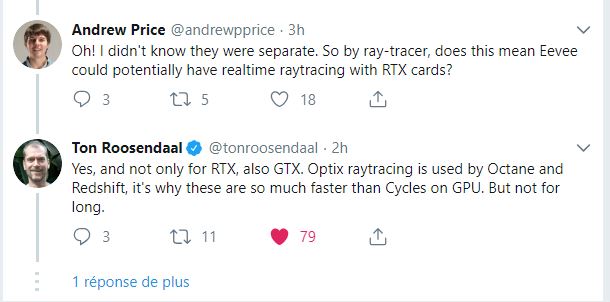

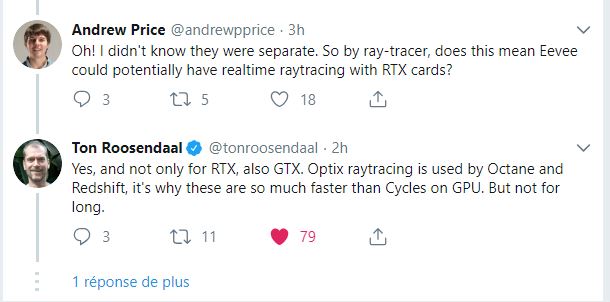

ton have the nvidia hype i think

My main PC went bust tonight - during a render

So I need a new one, fast. Possibly tomorrow, with the black friday stuff going on.

I see some RTX 2070 cards being offered/bundled with 16GB of main memory (is that enough?). I only do occasional gaming (due to hand strain).

Do I go with an RTX 2070 or a 1080 card? Even if the RTX card isn’t fully utilized by Blender yet, does it work at all for GPU rendering?

Example1.

Example2.

Can’t really afford ryzen/threadripper stuff.

Normally I’d tell you to always go with the newer card all other things being equal. This time though, I’m not so sure about the reliability of the 20 series. Maybe it’s just a vocal minority that has experienced failures. Or maybe not. Make sure to keep that receipt and have the warranty card signed and stamped if you go that route.

GTX 1080ti - If you can get it.

RTX is going to be integrated, so why a 1080ti?

Going to Mars? Hopefully you do.

I live in the present, so IMHO the best option is to get a used GTX 1080ti for ~500€ and buy RTX when the 2nd or 3rd gen rolls out and everything is proven stable. Save 1K and save yourself from being a stressed beta tester.

Another notable YouTube reviewer saw his RTX card die on him (this time, it’s a Gigabyte RTX 2070 with a custom design).

It doesn’t exactly instill confidence that a new Turing card will survive being pushed for several years, especially if this keeps up.

Nvidia has also admitted that something failed in their quality assurance regimen.

After-sales service is there for this… every card has at least two years of warranty…

If a gen 2 or 3 will even exist…

Gamers have trashed this card so much that I can hardly believe Nvidia will ever put all this technology in a consumer card again.

I have had my 2070 for about two or three weeks so far and haven’t had any issues. It’s an MSI Armor RTX 2070. I just checked my system’s uptime and the card has been running 24/7 for 25 days now. YMMV.

My suggestion is to go with the 2070. With Octane it’s faster than a 1080 and just a few points shy of the 1080ti. In fact, it’s only about 15 points below a 2080. I’m seeing similar speeds with Cycles as well. When the devs have it running on the RT cores it will be a lot faster, so you have some future proofing there. Just a thought.

Post #82 in this thread reports pretty significant issues with Cycles. Have these been resolved? Yeah, it’s about future proofing, but that’s not so attractive if it takes 5 years (why isn’t my enter key working here anymore? I can’t make new paragraphs).

Test - yeah, now enter works again.

Lukas set me up with a build that supports CUDA 10 here (Linux version):

I ran the Cycles GPU benchmark from the GPU benchmark thread and haven’t had any issues, but I don’t use Cycles on a regular basis, so YMMV. My 2070 rendered the scene in 1:31.39, which is faster than the 1080ti on the list, although there have been a lot of performance updates since then, so the 1080ti times should be better now.

Why is Turing so fast at rendering? The GTX 1080ti has way more CUDA cores, way more ROPs and more TFLOPS, but the RTX 2070 is still faster at rendering.

I’m just guessing here, but it might be because Turing is running at a faster base clock speed, also the memory is faster and at a higher bandwidth. If you run the GPU benchmark with the latest Blender build I’ll bet that it’s faster now. The times on the list are for the official Blender version and not for the current better optimized versions in master. The Octane benchmark puts the 1080ti about 15 points faster than my 2070.

Every new generation brings substantial boosts to CUDA performance, usually in the range of 25-30%. That’s why newer cards with fewer CUDA cores can compete with and even surpass previous generations. Think of it like CPUs and IPC improvements.

tl;dr: technology improves

So official 2.79 is stable to use with RTX cards without any rendering artefacts?

I don’t use Octane, but I’m guessing any new hardware will be able to outperform my 680.

I’ve used my own build of 2.8 with CUDA 10 support on several jobs (stills + animation) and I haven’t noticed any artifacts.

ROPs are only available to rasterization via OpenGL/DX/Vulkan and do not have any influence on Cycles (or any other ray tracer, to my knowledge). TFLOPS also don’t account for much, as ray tracing on GPUs is typically memory bound and doesn’t reach anywhere near peak compute occupation.

Does 2.8 support RTX cards yet? I ordered a 2070 and while I know there are 2.79 builds that support it I haven’t heard if the buildbot versions of 2.8 support it yet.

If you’re down to build your own version, yes. All you have to do is add “sm_75” to CMakeCache and it should compile the necessary cubin files for RTX support.
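For anyone who would rather script that edit than do it by hand, here is a minimal sketch of the idea. It assumes the architecture list lives in a cache entry named `CYCLES_CUDA_BINARIES_ARCH` (check your own CMakeCache.txt, as the name and type may differ between Blender versions) and that CUDA binaries are enabled in your build. The helper name `add_cuda_arch` is made up for this example.

```python
import re

# Assumption: the Cycles CUDA architecture list is stored under this cache
# variable. Verify the name in your own CMakeCache.txt before relying on it.
CACHE_VAR = "CYCLES_CUDA_BINARIES_ARCH"


def add_cuda_arch(cache_text: str, arch: str = "sm_75") -> str:
    """Return the cache text with `arch` appended to the arch list if missing."""
    pattern = re.compile(
        rf"^({re.escape(CACHE_VAR)}:STRING=)(.*)$", re.MULTILINE
    )

    def _append(match: re.Match) -> str:
        # CMake stores lists as semicolon-separated strings.
        archs = [a for a in match.group(2).split(";") if a]
        if arch not in archs:
            archs.append(arch)
        return match.group(1) + ";".join(archs)

    new_text, count = pattern.subn(_append, cache_text)
    if count == 0:
        raise ValueError(f"{CACHE_VAR} not found in the cache text")
    return new_text


if __name__ == "__main__":
    # Hypothetical cache contents, only for demonstration.
    sample = (
        "WITH_CYCLES_CUDA_BINARIES:BOOL=ON\n"
        "CYCLES_CUDA_BINARIES_ARCH:STRING=sm_52;sm_61\n"
    )
    print(add_cuda_arch(sample))
```

To use it on a real build you would read CMakeCache.txt from your build directory, pass the contents through `add_cuda_arch`, write the result back and rebuild. CMake keeps cache values between runs, so the change should persist as long as the build directory (or the cache file) isn’t deleted.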

As someone who knows nothing about coding, that goes right over my head, but thanks. Once I’ve got my new PC built I’ll try to see if I can figure it out. Is it something that will stay set each time I compile the latest version?