I know, but look at the green wall on the left where the curtains are: it’s slightly brighter with Oren-Nayar, so that’s how I know light is scattered differently.

As far as I can tell, that general purpose idea is literally yours?

I never said it’s for literally everything. Of course it isn’t!

Well… to be fair…

While in reality it’s not meant for dusty stuff as far as I can tell… Specifically for “materials with spherical Lambertian scatterers”. Which is the whole point for all my questions of just how useful that is. Is dust “a material with spherical Lambertian scatterers”?..

Well… I guess it could be… possibly… it depends on the dust…

Loads of things scatter at least approximately in this way though.

I already mentioned how Velvet materials are basically the same rough concept but for stuff that has tiny hair assumed to be perpendicular to the surface. A different assumed underlying geometry.

No base shader is gonna be a one-size-fits-all solution. If you really want “full realism”, you’d have to construct a different unique shader for every single material. Or build your objects in atomic-level detail and calculate light bounces in terms of molecules and their orbitals etc.

You have to make simplifying assumptions to get any progress at all.

The Lambert shader is the single simplest one, assuming:

- rays get scattered in any direction in a hemisphere, attenuated by the cosine of the angle between the incoming and normal directions

- rays never get bounced “down” towards or into the surface. It’s always a single scatter event.

Beyond satisfying energy conservation laws, this is extremely unphysical and simplified.
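
To make the energy conservation bit concrete, here is a minimal sketch (not how Cycles implements it; the function names and sample count are just made up for illustration). It integrates the Lambert BRDF `albedo / pi` times the cosine term over the hemisphere with plain Monte Carlo and checks that the reflected fraction comes out as the albedo:

```python
import math
import random

def lambert_brdf(albedo):
    # Lambert BRDF is a constant: albedo / pi, independent of direction.
    return albedo / math.pi

def reflected_fraction(albedo, n_samples=200_000, seed=0):
    """Monte Carlo estimate of the integral of BRDF * cos(theta) over the hemisphere.

    Uses uniform hemisphere sampling (an assumption chosen for simplicity):
    for uniformly distributed directions, cos(theta) is uniform in [0, 1)
    and the pdf is 1 / (2 * pi).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        cos_theta = rng.random()
        # Divide by the pdf 1 / (2 * pi), i.e. multiply by 2 * pi.
        total += lambert_brdf(albedo) * cos_theta * (2.0 * math.pi)
    return total / n_samples

if __name__ == "__main__":
    # Should land close to the albedo itself, and never above 1 for albedo <= 1.
    print(reflected_fraction(0.8))
```

So a surface with albedo 0.8 sends back about 80% of what it receives and absorbs the rest. That is the only physical constraint plain Lambert really satisfies.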

If we can relax those assumptions, surely that’s a good thing?

This Lambert Sphere shader does just that. At low roughnesses it should essentially be the exact same as regular Lambert, but it gives you one new parameter of artistic control, a roughness.

Of course there are very different kinds of dust. I’d wager all of them are better approximated by tiny spheres than what regular plain Lambert does.

Will the Lambert-Sphere shader cover all essential features of arbitrary dust?

No, of course not. But it WILL be closer. Because a part of the look that all dusts share is down to the nature of unstructured random piles of tiny particles. Shape-specific things do matter, but there’s gonna be a similarity in appearance between just about any type of dust that is closer to this new shader than to the default diffuse one.

If you, say, had some very fine dust of something shiny, perhaps the Mirror Spheres shader would be a better fit than the Lambert Spheres one. But the differences between those two are much smaller than between either of them and regular Lambert.

And that’s despite completely different behavior on the particle level!

You can always do better if you know more about your material. But as far as I can tell this shader is at least an excellent second approximation for a broad set of diffuse materials. (The first approximation is Lambert)

I think we could possibly agree there. If somebody defines that set of flexible analytic parametric BRDFs, like the whole set, and it’s implemented in Cycles, I think that would be awesome.

But then I have some questions about that. I mean like open questions: What would that set look like? Is a set the best way, or is it possible to maybe generalize some parameters? What would be more intuitive to use? Is it better than my way of judging the result only? In what ways? Does it matter at all? How much impact would it have on artwork?

That would possibly be a meaningful step forward.

I mean, we do have a set of BRDFs. That’s precisely what we have:

They are generalized. Maybe extending that set from the perspective of microscopic surface detail…

I don’t think that set is closed. New specialist shaders are developed all the time. Like, there is loads of work on supporting realistic metallic microflake paints for cars, for instance.

I remember Disney developed shaders specifically meant for snow and sand in their Hyperion renderer, which also would be cool to have.

And this particular shader here is just from like three years ago. Pretty recent, all things considered. There will be more interesting and useful shaders in the future too.

Just quickly checking the citations of the Lambert Spheres shader paper, at the moment there are six more papers in its wake, some of which talk about new shaders themselves:

all with various use cases.

So supporting “the whole set” isn’t gonna happen. There’s always gonna be an arbitrary cutoff, likely driven by interest or initiative. The recent addition of the Ray Portal shader, for example, is a really cool idea.

This one seems to be a survey of many related techniques. It basically just collects a ton of existing works on the topic. It’s not just a paper though, it’s an entire 746-page book (although it’s only 569 if you exclude the bibliography). Being a survey-style work, it references LOADS of things. It’s meant as a starting point where you pick out whichever piece you are interested in and then go through the further reading list of that topic to get more details.

Is this Lambert Sphere shader in development, or is this just a discussion about it?

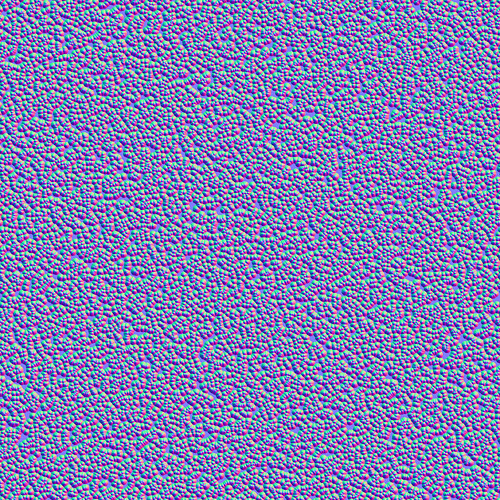

Playing with a normal map with dots to emulate something similar to the Lambertian spheres paper

and applied to the scene it looks like this

Lambert / normal map with dots

It loses a lot of energy

Comparison with corrected exposure

You can see the light distribution changes a lot when the exposure is corrected. Idk if this behavior is correct or not, but personally I think it looks a lot more realistic

This is the texture if someone wants to try something.

No, I meant to experiment how much spheres differ from other kinds of geometry. Normal maps might not be really good enough for this though, I don’t know.

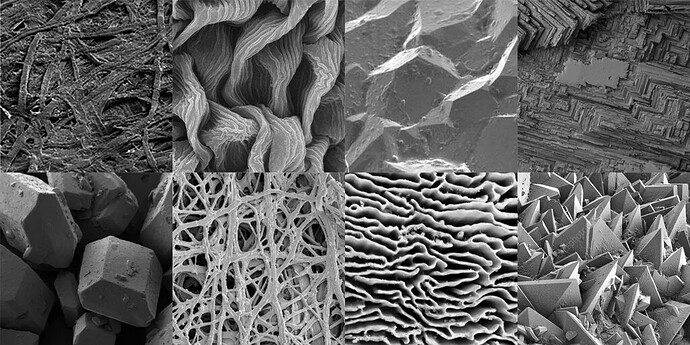

I mean like is it different if those are pill shaped details, or maybe diamond shaped, or maybe cubes, or maybe irregular crystal like stuff, or maybe Suzanne heads. Or maybe something like this:

Hm… Geometry nodes…

A normal map can actually be good for emulating this, because all that matters is what the exterior irregularities look like when emulating different kinds of light dispersion on different kinds of surfaces. I will make more normal maps. I’m using Substance Designer, so it’s easy to change the shape to something else.

Well, they don’t account for lack of surface for one thing, like if there is a hole where some light is pretty much lost. Or do they? I don’t know. They don’t account for multiple reflections, do they? Do secondary bounces matter?.. How much?..
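
One rough way to put a number on the "lost light" question, as a sketch under heavy assumptions: treat each point of the normal-mapped surface as a tiny perfect mirror whose slope is Gaussian-distributed (the `slope_sigma` parameter and both function names are made up here), reflect the incoming ray once, and count how often the reflected ray heads below the flat base surface. With real geometry those rays would go on to a second bounce. This ignores shadowing, masking and projected-area weighting, so it only shows the trend, not a physically exact value.

```python
import math
import random

def reflect(d, n):
    # Mirror the incoming direction d (pointing toward the surface) about unit normal n.
    k = 2.0 * sum(di * ni for di, ni in zip(d, n))
    return tuple(di - k * ni for di, ni in zip(d, n))

def fraction_needing_second_bounce(theta_i, slope_sigma, n_samples=100_000, seed=1):
    """Fraction of single mirror reflections that end up below the flat macro surface.

    theta_i: incidence angle from the macro normal (+Z), in radians.
    slope_sigma: standard deviation of the facet slopes (hypothetical roughness knob).
    """
    rng = random.Random(seed)
    d = (math.sin(theta_i), 0.0, -math.cos(theta_i))  # incoming ray, heading down
    below = 0
    used = 0
    for _ in range(n_samples):
        sx = rng.gauss(0.0, slope_sigma)
        sy = rng.gauss(0.0, slope_sigma)
        inv_len = 1.0 / math.sqrt(sx * sx + sy * sy + 1.0)
        n = (-sx * inv_len, -sy * inv_len, inv_len)  # perturbed facet normal
        if sum(di * ni for di, ni in zip(d, n)) >= 0.0:
            continue  # facet faces away from the light, the ray cannot hit it
        used += 1
        if reflect(d, n)[2] <= 0.0:
            below += 1  # reflected ray points into the surface
    return below / used if used else 0.0

if __name__ == "__main__":
    for sigma in (0.1, 0.5, 1.0):
        print(sigma, fraction_needing_second_bounce(math.radians(60.0), sigma))
```

The fraction grows with both roughness and grazing incidence, so for strong dot patterns a noticeable share of the light depends on what happens after the first bounce.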

I suspect some or even most of these will average pretty close to existing shader types, but then some might have patterns that differ in some ways.

This all seems in line with this to me:

BRDF measurements have shown that real world materials exhibit a wide range of reflectance behaviours [Matusik et al. 2003; Dupuy and Jakob 2018].

I already regret I visited this thread

If this gets split to a separate thread, there is potential for a lot of time to be lost…

Isn’t this similar to the discussion we had over on the microroughness thread a few years ago?

The experiments you and I did suggested that the geometry of the microsurface does indeed affect the overall result (at least in terms of microroughness falloff which is what we were concerned with at that time). It makes sense that other properties might also be affected, like anisotropy etc depending on the underlying geometry of the microsurface.

These tiny normal dots remind me of Thea Render with its micro roughness.

A graph of the pattern at 4:20 min

Btw, I guess simulating the sphere effect with a normal map only works up to the point where the scaling gets too small for a correct calculation. For this reason you need a shader with microfacets calculated as spheres, with its roughness scaling.

This whole thing started here:

or technically here:

and to my knowledge, there are no plans to implement this

Yes. But now it’s diffuse. And Geometry Nodes exist.

I guess it comes down to the question of whether diffuse actually exists, or are all reflections fundamentally glossy, with the perception of ‘diffuseness’ being emergent from the sub-visible micro geometry of the surface.

It might not be fast or efficient - but in theory, could we have a single glossy shader, with all properties like diffuseness, microroughness, anisotropy etc. being controlled instead by modification of the microsurface properties via normals or instanced geometry?

I think Maxwell Render used to be more similar to that, at least many years back when I used it briefly. It probably still is, but I don’t know. I think its materials used to have layers but basically did not have separate components for diffuse and glossy, only a roughness parameter and various other parameters, including color at 0° and 90° facing angle.

It’s also possible to make materials in Blender by mixing 2 Glossy BSDFs instead of Diffuse and Glossy. I have experimented with that a bit. It works, but is less convenient, as you cannot separate renders into passes as usefully as with a diffuse component. It’s perfectly fine to use a completely rough Glossy BSDF instead of diffuse as far as I can tell. Probably renders slower though. Thinking about it, now we have AOVs, so I might try that in my workflows.

In any case, I don’t know any renderers with shaders designed to specifically take effects of surface detail into account and I think they are possible to see with glossy objects like we have explored with micro-roughness, and also with diffuse. It would be interesting to know if there is any research related to this with measurements of materials with some more complex surface detail. I don’t know much about how people came up with currently popular shader models, maybe I am missing something.

But it should now be easy to test theories with Geometry Nodes, so I am thinking of some possible experiments maybe… Contemplating it. Just to explore if this could possibly matter at all. It probably doesn’t. It’s just interesting. It would be interesting to observe some real diffuse materials more closely with all this in mind.

fwiw there are loads of processes that, on a micro/nanoscale, cause light to be redistributed at random instead of in a strictly predictable manner.

In fact, strictly speaking, no bounce is perfectly sharp. Although the level of diffusion is on the nano-scale.

It does cause, or at least is related to wave-like thin-film interference effects like soap bubbles and such, but short of that, the inherent imprecision is so minimal that it effectively doesn’t matter in almost all cases, and we can reasonably assume perfect reflections.

These types of effects begin to matter for structures that are smaller than wavelengths of visible light, around 360-830nm ish.

Even short of that kind of randomness though, there are situations where atomic-scale randomness comes into play. Most notably, absorption and re-emission are randomized processes: An atom doesn’t remember what direction a photon it absorbed came from. It’ll emit photons after excitation in literally any direction at random.

That said, that describes fluorescence or phosphorescence, not diffuse reflections. They will also change the color of the outgoing light compared to what came in. (I.e. turning UV light into some intense visible-light glow) I’m not immediately aware of regular diffuse reflections that aren’t structural but rather down to like orbital interactions. Maybe those are a thing too, I just don’t know.

Really? I am not pretending that I understand how quantum field theory works, but light is not only a particle, it’s a wave in electric and magnetic fields as well and we should probably not think of it as a ball bouncing around. You can clearly see the directions are exact in a mirror. Let’s not go any further. Let’s also assume the light source is not a laser, but one that emits some wide spectrum of unpolarized light in some range of different directions. Let’s not worry about interference.

It doesn’t. The only thing that would be 100% realistic would be volumetric modelling down to the molecular/atomic scale. And then try to figure out a good way to do actual sampling at those levels.

Diffuse is just an approximation of the absorption component; whatever bounces into the material, picks up color (by absorption), comes back to the surface, and then bounces back out at random direction. Could also be mixed with subsurface (no light transport), translucency (light transport), or refraction.

No it can’t. It can scatter as much as you want, but it’ll never account for masking, shadowing, or loss of specular energy.

That wouldn’t even work as we can’t control sampling. There is a reason we have an anisotropic control in the glossy shader (was previously a unique shader); just like Oren-Nayar/Rough Diffuse effects, this is impossible to fake.

No. Ignore the saturation stuff and just turn up the Diffuse roughness instead. Under the same lighting, you cannot “fake” a rough diffuse surface with a Lambertian (Diffuse) shader. You NEED some sort of Oren-Nayar. We could write our own shaders back in recursive raytracing days, but with path tracing’s “enclosures” this isn’t an option.
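
For reference, here is a small sketch of the widely quoted "qualitative" Oren-Nayar approximation from the 1994 paper, to show why no single albedo tweak on Lambert can reproduce it. The assumptions: `sigma` is the facet slope standard deviation in radians as in the paper, which is not necessarily how the Roughness slider in Cycles is mapped, and the function name is made up. Cycles may well use a different variant of the model.

```python
import math

def oren_nayar_brdf(theta_i, theta_r, delta_phi, sigma, albedo=1.0):
    """Qualitative Oren-Nayar BRDF value (without the cos(theta_i) term).

    theta_i, theta_r: light and view angles from the normal, in radians.
    delta_phi: azimuth difference between light and view directions.
    sigma: roughness as facet slope standard deviation (radians, per the paper).
    """
    s2 = sigma * sigma
    a = 1.0 - 0.5 * s2 / (s2 + 0.33)
    b = 0.45 * s2 / (s2 + 0.09)
    alpha = max(theta_i, theta_r)
    beta = min(theta_i, theta_r)
    return (albedo / math.pi) * (
        a + b * max(0.0, math.cos(delta_phi)) * math.sin(alpha) * math.tan(beta)
    )

if __name__ == "__main__":
    lambert = 1.0 / math.pi
    grazing = math.radians(70.0)
    # Looking back toward the light (delta_phi = 0) versus away from it (delta_phi = pi).
    print("lambert      ", lambert)
    print("toward light ", oren_nayar_brdf(grazing, grazing, 0.0, 0.5))
    print("away from it ", oren_nayar_brdf(grazing, grazing, math.pi, 0.5))
```

At `sigma = 0` this collapses to plain Lambert. With roughness turned up, the surface gets brighter than Lambert when viewed from the light’s side and darker from the opposite side, so the change depends on the view direction and cannot be matched by scaling a Lambertian color.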

What’s this? We’ve had Oren Nayar as part of Diffuse since, like, forever. And I’ve used Oren Nayar for some 30 years (iirc the full thing rather than the approximation, and it was ridiculously expensive).

The discussion here has been about implementing Oren Nayar in Principled as it is available in the Diffuse shader (it has problems), DisneyDiffuse, which attempts to fix some problems (specular roughness is coupled to also drive diffuse roughness), or some other method from whatever render engine or research paper we compare to.

Sheen/Velvet is another specialty diffuse shader (simulates rendering microscopic cones rather than spheres), and we have no way to simulate a fabric nap direction in a shader (the hacks I use have severe issues). No, millions of actual hairs isn’t always a valid option.