When you use them to displace the surface, it’s a displacement map and not a bump map. If you use them to bend the normal, it’s a bump map. Since there are no bent normals in physics, there is no physically correct way of rendering them.

I am not sure why everyone is discussing this… It is perfectly clear that this is good for GI baking, and Cycles for production… so generally I call this a win without much need for improvement. So whether this supports bumps or not is kinda irrelevant… this is all my opinion, and we are all entitled to one…

by the time this is actually ready for a full project it will be, if not currently, obsolete.

with all that being said, I would like to see more images please :).

I agree. Why is everyone so against this addition? It’s for GI baking, not full production. What’s with this “all or nothing” attitude? Blender desperately needs GI baking and this provides it. We should support it, not tear it down. If you want a better tool for production rendering, use Cycles.

Of course there are “bent normals” in physics. The whole concept of diffuse reflection is based on “bent normals”.

Requiring people to use displacement (for the sake of physical correctness) would be a massive, possibly showstopping catch for using the GI implementation. You could perhaps use it for lightmaps, but otherwise you would need 16-32 gigabytes of RAM or more, and you would run into the fact that BI really slows down with high polygon counts, which might slow your renders down a lot more than if you used bump maps.

Even Luxrender and Maxwell, two engines made by teams that focus almost exclusively on physical correctness, allow for bump maps and normal maps on objects. I can’t fathom why anyone would try to convince people that such a bread-and-butter feature isn’t needed (frankly, questioning that is like asking if users really need to be able to use textures and specular models).

No one is against it. The current implementation is perfectly fine for GI baking. I think everyone agrees with that.

The reason why I pointed out the bump map flaw is because the OP praised his own implementation as being on par with Cycles and suggested that “physically incorrect” methods should stay away from trunk or at least be labeled “fake”. These were his words:

As a reminder, this is the “correct” method:

And this is the “fake” method:

Pay attention to the render times. Now tell me, whose time would we save by not adding both methods?

Don’t mistake the harsh words for hatred. Eye already has been jumping up and down on the patch tracker that this should be included in trunk. He’s now playing drill sergeant to Graham to prepare the man for the holes that others may poke in the implementation.

Though, this should probably be a sign to Eye that he’s using an excessive amount of angry words, and confusing people.

Add colored walls so you actually get some bounced colors, then we will see a huge difference in the implementations.

Also, in your (fake) method render, you have lightened the shadow so you don’t have full shadow there, correct? Do the same on the Ray Indirect method and you should see some of the texture come back.

Right now, my guess as to why you don’t see any bump texture there is that the area is 100% bounce lit. With no direct lighting, your bump (fake displacement) texture can’t calculate a specular (fake light reflection) or diffuse (fake light/color absorption, scatter and subsequent reflection) term because it is in 100% shadow. Also note that in reality, a lighting situation like this would actually decrease the harsh shadows that you’d get from a bumpy surface, though in reality you also get a lot more samples and actual displacement down to the atomic level.

In a nutshell, if you decrease the shadow in both renders (not just your method), you would get some direct lighting which would bring some of that texture back.

As was mentioned by others, everything in CG is fake and an approximation of real-world physics. Let’s stop arguing about that. My comment is about fake bounce lighting compared with real light/color bounce. Not real as in life, but real as in actually sampling color/light data from neighboring surfaces, thus approximating real. Of course it is going to be slower than a LERP function on the LdotN result.
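To make the "LERP on the LdotN result" idea concrete, here is a minimal sketch of what such a fake term could look like. It is only an illustration: the function name `fake_bounce_diffuse` and the `fill` parameter are made up for this example and are not taken from the soft light patch or from Blender’s source.

```python
def dot(a, b):
    """Dot product of two 3-vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def lerp(a, b, t):
    """Linear interpolation from a (t=0) to b (t=1)."""
    return a + (b - a) * t


def fake_bounce_diffuse(normal, light_dir, fill=0.3):
    """Hypothetical 'fake' diffuse term: remap the clamped N.L so that
    unlit areas fall back to `fill` instead of going black.
    `fill` is an assumed parameter, not a real Blender setting."""
    l_dot_n = max(0.0, dot(normal, light_dir))
    return lerp(fill, 1.0, l_dot_n)


up = (0.0, 0.0, 1.0)
print(fake_bounce_diffuse(up, (0.0, 0.0, 1.0)))   # surface facing the light
print(fake_bounce_diffuse(up, (0.0, 0.0, -1.0)))  # surface facing away, falls back to fill
```

This costs one dot product and one interpolation per shading point, with no rays traced, which is why it will always be faster than sampling neighboring surfaces.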

I agree with all the comments that we probably don’t need to use the bump normal since BI is primarily used for baking maps for games these days anyway.

You might be surprised how many people would want to see GI in Blender Internal that works with bump maps. There’s still a demand for legacy engines in this community for artistic purposes and other things, and if this turns out to be the last new raytracing-based feature in BI, you might as well not make it feel half-finished (even if people have to use just one bounce of GI to not slow down the engine too much).

All in all, my opinion of BI is that it could indeed get to a point of really shining and obtaining a place alongside Cycles if we start seeing more NPR/legacy-based features like the soft light patch. Eventually one may want to see the slow path-based GI replaced with a RenderMan-style point-based system (something which allowed bounced light in effects from major blockbusters over the past decade and is a lot faster and higher quality, provided that one bounce is used).

So in essence, BI sees rapid expansion in its legacy-based feature set and Cycles goes the direction of the likes of Arnold and Indigo. Then the two engines can coexist because of the difference in the way they do things and the jobs they are optimal for.

I agree with Ace Dragon, bump normal for indirect could be useful.

Of course it depends on how much time you want to spend on it sgraham.

I am not opposed to putting it in there. I don’t think it would take too long unless it adds unforeseen complications. My only concern is making it slower than it already is.

Does someone want to supply a good test-case blend file? Preferably one that makes it obvious whether normals are facing the true normal or the bump normal direction, like a plane with a wavy bump and a plane above that with stepped random colors.

Never mind, I have set up a test scene which is perfect. It shows the problem of no bump normal. Stay tuned.

I have it working, but somehow I’ve introduced NaNs into my sampling… at least I think that’s what the black dot on the green cube means. Bad conditional or divide by zero? Will take some debugging.
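For anyone following along, a common source of NaNs in shading code is normalizing a zero-length vector. In C, a float 0/0 quietly yields NaN (Python raises an exception instead). Below is a small sketch of the usual guards. The helper names `safe_normalize` and `has_nan` are hypothetical and not from the patch, and whether this is the actual cause of the black dot is unknown.

```python
import math


def safe_normalize(v, eps=1e-12):
    """Return the unit vector of v, or None if v is too short to normalize.
    Guarding on the length avoids the divide by zero that yields NaN in C."""
    length = math.sqrt(sum(c * c for c in v))
    if length < eps:
        return None
    return tuple(c / length for c in v)


def has_nan(color):
    """True if any channel of a color tuple is NaN."""
    return any(math.isnan(c) for c in color)


print(safe_normalize((0.0, 0.0, 2.0)))
print(safe_normalize((0.0, 0.0, 0.0)))
print(has_nan((0.1, float("nan"), 0.2)))
```

Checking accumulated samples with something like `has_nan` before they are added to the pixel makes it easier to find which sample introduced the bad value.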

One thing I noticed while digging in is that I have to force evaluate textures in order for my normal to get bump, however, as I was thinking about this, it would make sense for this normal to be given to my function by default/automatically/pre-evaluated, unless the normal calc/direct diffuse is happening AFTER the Indirect code. I might be able to rearrange those evals and get the bump normal for free as long as the evals take place before compositing the various colors… anyway, more digging to do, but here’s the results in the meantime.

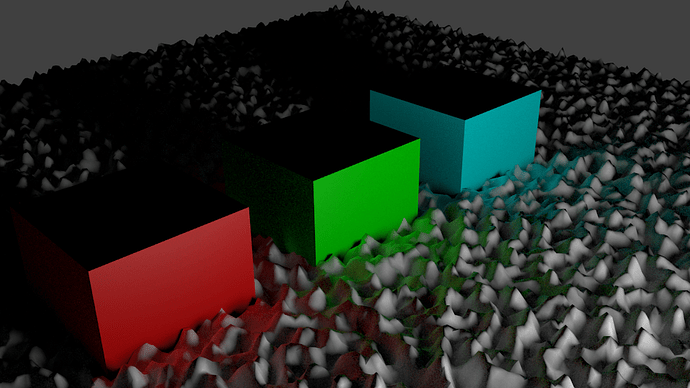

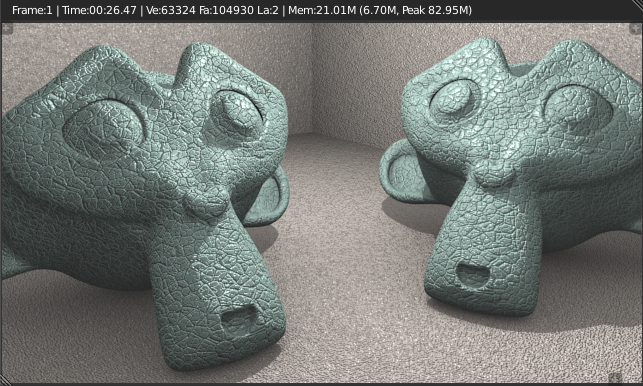

displaced surface (what the bump is emulating)

using the normal which is by default being passed to the indirect lighting function (current build/patch)

result of forcing normal to come from evaluating bump map/textures

It is subtle, but look at the floor in front of the cubes. The dark areas of the bump are facing toward the colored blocks: those normals are bent toward the blocks by the bump, so they pick up more of the blocks’ color. The brighter parts of the bump are facing toward the light, so they get whiter (less bounce hitting them from the blocks).
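A rough way to see why the bent normal picks up more bounce is to compare the cosine weight toward a colored block for the flat floor normal and for a normal tilted by the bump. This is a sketch with made-up vectors, not numbers from the test scene, and `bounce_weight` is a hypothetical helper.

```python
import math


def normalize(v):
    """Scale a non-zero 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    """Dot product of two 3-vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def bounce_weight(normal, to_block):
    """Lambert cosine weight of light arriving from the direction `to_block`."""
    return max(0.0, dot(normalize(normal), normalize(to_block)))


geometric_normal = (0.0, 0.0, 1.0)  # flat floor, ignoring the bump
bent_normal = (0.5, 0.0, 1.0)       # assumed bump normal tilted toward the block
to_block = (1.0, 0.0, 0.2)          # assumed direction from the floor point to the block

flat = bounce_weight(geometric_normal, to_block)
bent = bounce_weight(bent_normal, to_block)
print(flat, bent)  # the bent normal receives the larger weight
```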

Crazy, that is too cool

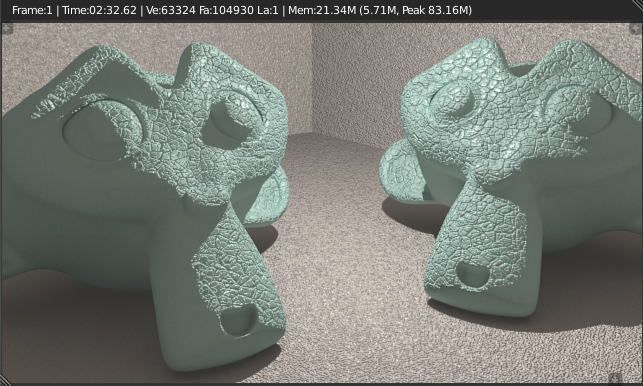

Tried it… it produces very nice results. It is a little bit slow, but OK, the results are worth it.

BI should not be abandoned in any way… it should be enriched instead and it should remain as an alternative, aimed at different kinds of works and styles. Cycles is great, surely, but it has its own way of describing things. BI is very versatile in offering means for producing a vast variety of artistic expressions. Strict photorealism is not the only needed thing in rendering… there are so many cases that someone may need to express things in some not strictly photorealistic style, so there will be always the need of a renderer rich in offering access to means for conveying a lot of visual nuances.

OK, here’s a test file where the problem is most obvious.

scene4.blend (92.7 KB)

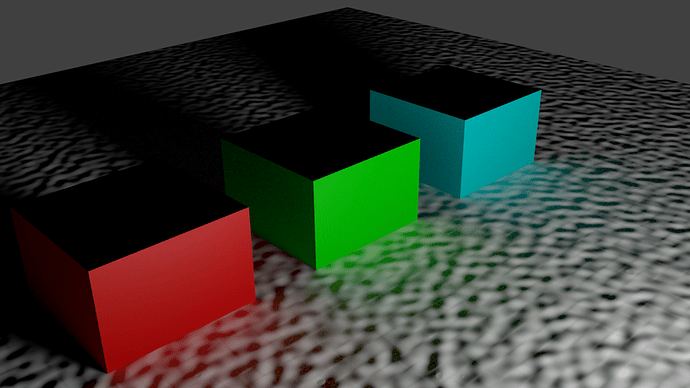

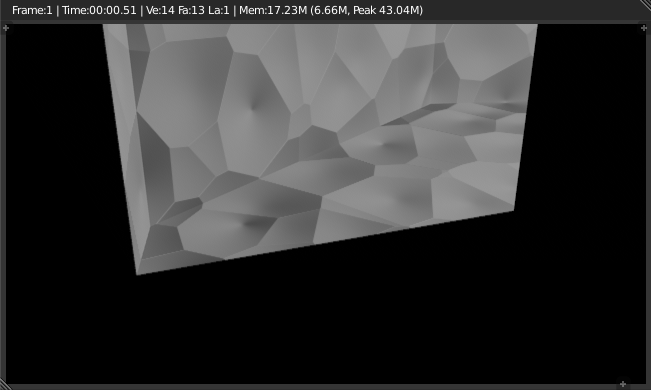

First, the direct lighting render:

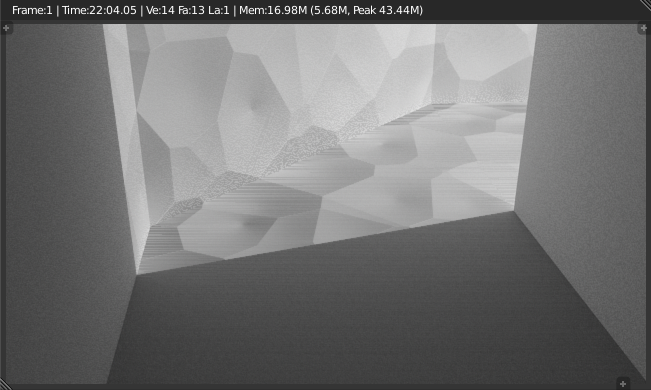

And here’s the same scene with raytraced indirect lighting (2 bounces, 8 samples):

You can see that the bump map is entirely missing. There are some artifacts as well. Run this through your build and see if there is any improvement.

If bump maps slow down rendering even more, I’d strongly recommend adding a toggle.

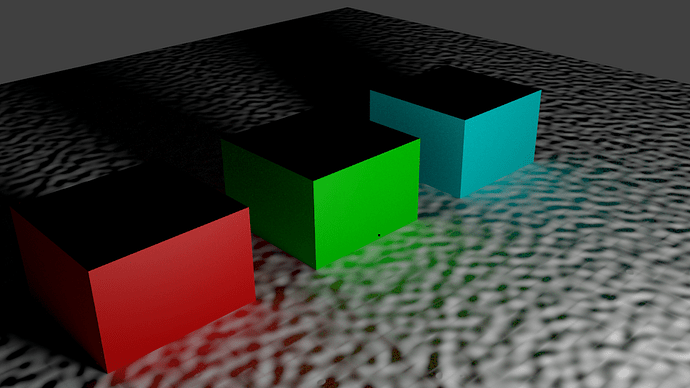

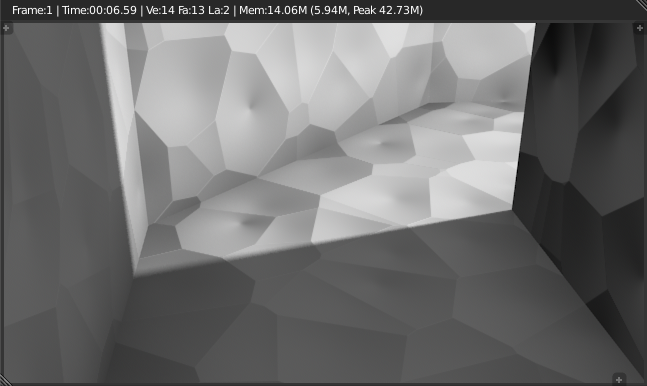

For comparison, the softlight version:

I just noticed something else interesting about the code… so normally you have a certain number of samples per pixel which gives you anti-aliasing, right? Under the render settings you see an anti-aliasing tab with the number of samples…

OK. Well, what Blender is doing right now is NOT anti-aliasing the AO/ENV/GI result. Instead it happens 1 time for the entire pixel, and only the direct shading is anti-aliased. Does that seem wrong to anyone else? Maybe it’s not a big deal because each AO has a lot of samples of its own… I dunno… but either way, if I move AO below the direct lighting/texture sample loop for AA, it can still happen once; it would just use the LAST sample’s bent normal rather than doing it for each AA sample’s bent normal (super slow)… and these are the unforeseen complications that make this process slow.

I think the only reason it matters less right now whether AO is AA sampled is that it is not using the bump normal… with a high-frequency bump, you could get a lot of different normals for 1 pixel, at which point it would become important.
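Here is a toy sketch of the difference between the two options: evaluating the indirect term once with the last AA sample’s normal versus evaluating it for every AA sample and averaging. The normals and function names are invented for illustration, and the single cosine term stands in for the full AO/GI gather.

```python
def dot(a, b):
    """Dot product of two 3-vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def indirect(normal, to_bounce):
    """Stand-in for the indirect term: cosine weight toward one bounce direction."""
    return max(0.0, dot(normal, to_bounce))


def shade_once_per_pixel(sample_normals, to_bounce):
    """Evaluate the indirect term once, using the last AA sample's normal."""
    return indirect(sample_normals[-1], to_bounce)


def shade_per_sample(sample_normals, to_bounce):
    """Evaluate the indirect term for every AA sample and average the results."""
    total = sum(indirect(n, to_bounce) for n in sample_normals)
    return total / len(sample_normals)


# Four unit-length bump normals inside one pixel (a high-frequency bump).
sample_normals = [
    (0.6, 0.0, 0.8),
    (-0.6, 0.0, 0.8),
    (0.0, 0.6, 0.8),
    (0.0, -0.6, 0.8),
]
to_bounce = (1.0, 0.0, 0.0)

once = shade_once_per_pixel(sample_normals, to_bounce)
averaged = shade_per_sample(sample_normals, to_bounce)
print(once, averaged)
```

With a smooth surface all the normals in a pixel are nearly identical and the two results agree; with a noisy bump they can differ a lot, at the cost of one full indirect evaluation per AA sample.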

25% image size, 2 bounce, 8 samples, w/bump fix

One thing I didn’t realize about path tracers until last night is that they only trace a single path per pixel sample, with no branching of rays to gather bounce lighting. So as I understand it from the Wikipedia page, if there is an opportunity to branch in a path tracer, it randomly picks one branch (hence the name path tracing) and keeps that single ray going. Without all that branching accumulating, no wonder it is so fast with lots of bounces! They invest more in the direct lighting samples than they do in secondary/tertiary bounce branching, which makes sense. Why spend so much time integrating bounces that hardly contribute? BI could theoretically be set up the same way. Would be an interesting experiment.

EDIT - also note that there are a lot more pixel samples… as in thousands rather than the 8 or so we are doing with BI by default.
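Some back-of-the-envelope arithmetic for the branching vs. single-path difference, as a sketch only. It assumes every hit in the branching scheme spawns the full number of samples again, counts only bounce rays (no shadow rays), and uses 8 samples and 1000 paths as stand-ins for the "8 or so" and "thousands" mentioned above. The function names are hypothetical.

```python
def branching_rays(samples_per_hit, bounces):
    """Bounce rays per pixel sample when every hit spawns `samples_per_hit`
    new rays: S + S^2 + ... + S^bounces."""
    return sum(samples_per_hit ** depth for depth in range(1, bounces + 1))


def path_rays(paths_per_pixel, bounces):
    """Bounce rays per pixel when each path continues along a single ray
    per bounce: one ray per bounce per path."""
    return paths_per_pixel * bounces


for bounces in (1, 2, 4):
    print(bounces, branching_rays(8, bounces), path_rays(1000, bounces))
```

The branching count grows exponentially with the number of bounces while the path count grows linearly, so the single-path approach can afford thousands of pixel samples and still scale to many bounces.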