I have a real building with lots of reflective windows. I would like to composite a CG object next to the building, and I would like the building’s windows to reflect that CG object so the result is believable. Can someone point me in the right direction as to how to do this?

You go to the finished Python script forum and get the amazing camera matcher.

This will match your scene camera to your photograph.

After that you add planes for the windows and set up a material that only mirrors but doesn’t render diffuse.

In the end you composite the render with your photo.

Make planes and place them where the windows are, and put them in a separate render layer from your object. Give the planes a highly reflective material. Have the layer with the windows include a reflection pass. Composite the reflection pass with the original footage using a node; try AlphaOver and the different blend types of the Mix node.
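To make the difference between those node choices a bit more concrete, here is a rough per-pixel sketch in plain Python. It is not Blender's implementation: the function names `alpha_over` and `mix_add` are made up for illustration, colours are assumed to be RGBA tuples in the 0..1 range, and the reflection pass is assumed to be premultiplied by its alpha.

```python
def alpha_over(background, foreground):
    """Lay a premultiplied RGBA foreground over a background pixel.

    Illustrative only: result = fg + bg * (1 - fg_alpha), per channel.
    """
    inv = 1.0 - foreground[3]
    return tuple(f + b * inv for f, b in zip(foreground, background))


def mix_add(background, layer, fac=1.0):
    """Add a layer's RGB on top of the background, scaled by fac.

    Illustrative only: alpha is taken from the background unchanged,
    and the result is not clamped.
    """
    r, g, b = (bg + fac * ly for bg, ly in zip(background[:3], layer[:3]))
    return (r, g, b, background[3])


# Hypothetical sample pixels: one from the footage, one from the reflection pass.
footage = (0.2, 0.3, 0.4, 1.0)
reflection = (0.5, 0.25, 0.0, 0.5)

over = alpha_over(footage, reflection)
added = mix_add(footage, reflection, fac=0.5)
```

Roughly speaking, alpha-over hides the footage wherever the reflection pass is opaque, while an additive mix brightens the footage by whatever the reflection contributes, which is often closer to how a reflection on glass looks.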

From experience this does suck in Blender, especially when trying to combine shadows too.

[video]http://youtu.be/tVXQ-End8DU[/video]

Good luck and I feel your pain.

Great suggestions everybody, I’ve got a lot of tinkering to do now. Can’t wait!

Hi 3pointEdit,

I too have had trouble adding a reflection node setup after camera tracking a scene in Blender 2.61. Do you have a simple explanation of how you did the reflections in the YouTube clip? I’m thinking along the lines of a shiny floor, or a varnished table top. You’re probably aware that Blender 2.61 creates a basic node setup after doing a camera track, but it only includes shadows, not reflections. I’m no master of nodes, just basic stuff, so I get lost too easily.

Jay, check out the .blend I posted in my previous post. You might find it useful.

Thanks Daccy, I’ll check it out - hope it doesn’t confuse me!

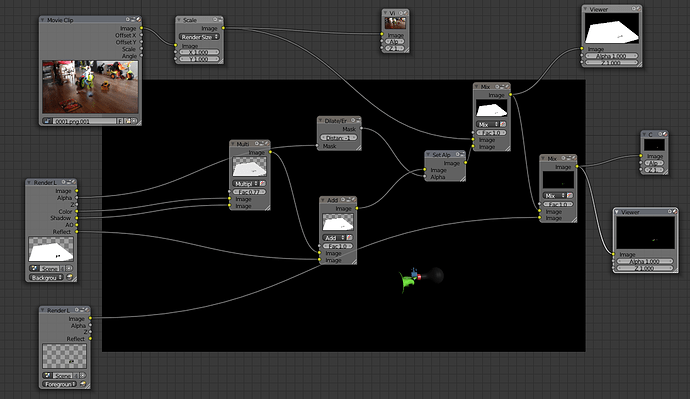

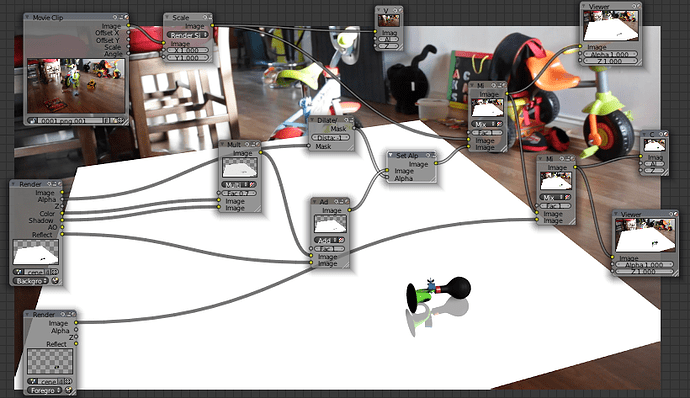

I’ve just checked out your file. I didn’t understand it completely, but I copied the node setup and render layers, and made the ground plane reflective. Here’s the screenshot:

Still not getting anything like your output, any ideas?

OK, my practical approaches: UV map all the windows on each side of the building as laid out in the image, and blend that with AO, grunge, specular and reflective layers. Alternatively, projection map the image onto them.

Your noodles look correct. You just need to activate the alpha button on the Mix nodes.

Hi Daccy, I wasn’t aware of the Alpha button on the Mix nodes. I clicked them, but now the plane is visible, albeit with the reflection.

I think I copied all the settings from your .blend, but maybe I’ve missed something? (Most probably)

You also need to assign the image as a texture to the plane. Make sure to choose ‘Window’ under the texture coordinates properties, as this will project it “from view”.

Hi Daccy, sorry for the confusion and endless questions. I think maybe we’re doing things slightly differently. I don’t have an image plane set up from the track to act as a background, as Blender’s default camera track doesn’t create one like in your example, or at least I think that’s what you’re referring to. Or am I totally confused? I asked this question before in another post, and you kindly answered it, but this is where I’m stumped: if I were to use your method after camera tracking, how would I create an image plane that lines up with the camera to do it the way you suggest? And isn’t that going against the way the camera tracker sets things up, or is your way a better way?

I’m pretty new to compositing this kind of stuff, and this was my first experiment with Blender’s new camera tracker. I feel like a dunce.

I apologize, perhaps I wasn’t clear enough. The method I used is not bound to still images only, as it’s used for sequences as well. Lining up a mesh with the object(s) in a shot is, however, an entirely different process, which is actually done in the tracking phase. Sebastian König has a pretty good tracking tutorial on CGCookie which covers these things:

(It’s around the 22 minute mark, I believe)

Regarding the texture issue: whether you’re using a still image or a sequence, in either case you need to assign it as a texture to the mesh at hand, projecting it using the ‘Window’ option under Texture Coordinates. As I wrote earlier, this will automatically project the image/sequence onto the mesh, as seen from the camera, thus blending it seamlessly with the original footage.

And don’t worry, the learning curve for tracking/compositing tends to be quite steep, especially when learning material is more or less scarce, as it happens to be right now.
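For anyone wondering why a “from view” projection lines up with the footage, here is a small plain-Python sketch of the idea. It is not Blender code: `window_coords` and its parameters are hypothetical names, the camera is assumed to be a simple pinhole looking down its own -Z axis, and the result is assumed to be normalized to 0..1 across the frame (Blender’s own coordinate range may differ).

```python
def window_coords(point_cam, focal_mm=35.0, sensor_mm=32.0, aspect=16 / 9):
    """Map a camera-space point to frame coordinates in the 0..1 range.

    Assumptions (illustrative only): x points right, y points up, the
    camera looks down -z, and sensor_mm is the horizontal sensor width.
    """
    x, y, z = point_cam
    if z >= 0:
        raise ValueError("point is not in front of the camera")
    depth = -z
    half_w = sensor_mm / (2.0 * focal_mm)  # half frame width at depth 1
    half_h = half_w / aspect               # half frame height at depth 1
    u = 0.5 + (x / depth) / (2.0 * half_w)
    v = 0.5 + (y / depth) / (2.0 * half_h)
    return (u, v)


# Two points on the same line of sight land on the same frame coordinate.
near = window_coords((1.0, 0.0, -1.0), focal_mm=16.0)
far = window_coords((2.0, 0.0, -2.0), focal_mm=16.0)
centre = window_coords((0.0, 0.0, -5.0))
```

Because the lookup depends only on where a point appears in the frame and not on how far away it is, every visible point on the mesh picks up the footage pixel that sits directly behind it, which is why the textured mesh blends into the plate.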

Let me know if you have further questions.

Hi Daccy, thanks for the info. I agree there are very few docs on tracking/compositing at the moment. Some people seem to have cracked it, but users creating reflections are few and far between.

I’ve followed all the tuts on camera tracking since I saw Sebastian’s first tuts/demos of the tomato branch. Sadly a lot has changed since his first vids. From what I recall, in the old video he creates image planes for the image sequence (like your example), but now (2.61) it’s projected into the background (3D viewport Background Image) after you build the track.

Although I could recreate the older method and add a plane to display the image sequence for the camera to look at, I feel that I’d be working against the method that’s now default in the current Blender release. And as I’m new to this type of technique, I don’t want to be thinking I know better when there may be a more logical way to generate the shadows and the reflections using the default Blender-generated node layout. Here’s a shot of the current node layout auto-generated by Blender (2.61):

To be honest, I’m not up to date with the current tracking features, the auto generated noodles being one of them. But from what I’ve read it’s limited to only catching shadows [needs confirmation]. Of course it’s up to you which method to use, as there isn’t a “wrong” or “right” one (unless you follow a deadline), it’s the end result that matters. Unfortunately I can’t help you with this new method right now, but I can hopefully answer any question regarding the old one. I might even give the new one a spin later today to see what it actually does.

Please don’t get me wrong Daccy, I don’t think there’s anything wrong with your method, it’s just that I’d love to know where I should go with the new “noodles” to get some reflections.

I doubt very much I’m the only one interested in this; the lack of renders/animations for camera tracking in 2.61 is probably testament to that. If you get a chance to try out the new method, please let us know how you get on. I’m sure it’ll be useful not just to me, but to everyone else who’s interested in Blender camera tracking/compositing.

Good luck

As I assumed, the auto-generated nodes can only catch shadows, unfortunately.

I must run off to the gym, but I’ll take a better look when I get back.

I’ve been tinkering around with the tracker/compositor and found out that the auto nodes can be used to a certain extent. To include reflections they have to be slightly modified, though. Also, the footage must be projected onto the shadow/reflection catcher for the latter to work properly. There are some other smaller details as well. If there’s any interest I can do a tutorial that covers this.