Thanks. I’ve written tuts, but I’ve always been on the academic, ivory tower side of things-- “intersection of plane and sphere” as opposed to “different kind of floor!”-- and it turns most users off. I write some tuts when I feel like it, but I wrote more when I was starting (and some of it is kind of embarrassing, because of course I got some things wrong), because I think it’s easiest to write about what I just learned. And now, what I’m learning just isn’t very interesting to the majority of peeps I see, who are more in the stage of, “How do I export my Cycles mat to Unity? Why does this boolean edge look so bad?”

So there’s like one or two people who are interested in what I know. And they know they can write me if they have issues. In the meantime, every answer here, on Reddit, on StackExchange is kind of a little tutorial. (And I hate videos anyways, I’m so grateful every time I find some text that describes something I need to know, something I can read in thirty seconds, or maybe spend thirty seconds on just one difficult sentence, instead of sitting or scrubbing through 15 minutes of “Please hit the thumbs up button!”)

Eevee might help. When people don’t need a week to render a music video for their favorite artist, they’re more likely to start animating.

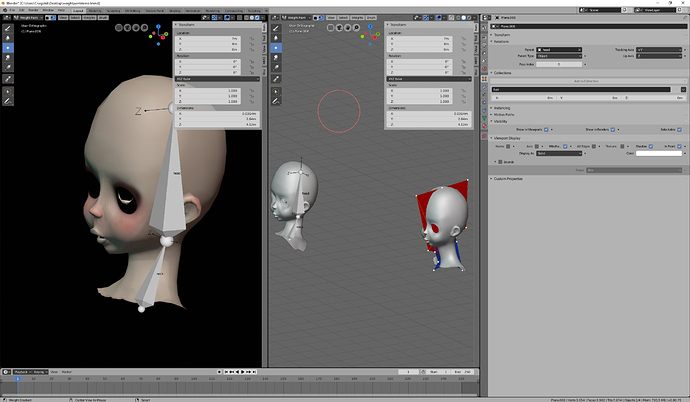

But I’m not sure that’s the entire problem. Animation, after all, is just modelling over time; animation tools like UV deforms are also modelling tools.

What I’ve noticed in my experience on that Blender suggestion site (forget its name) is that the largest group of people are focused on small things to streamline exporting to Unity and Unreal. I think that’s a huge part of the Blender base.

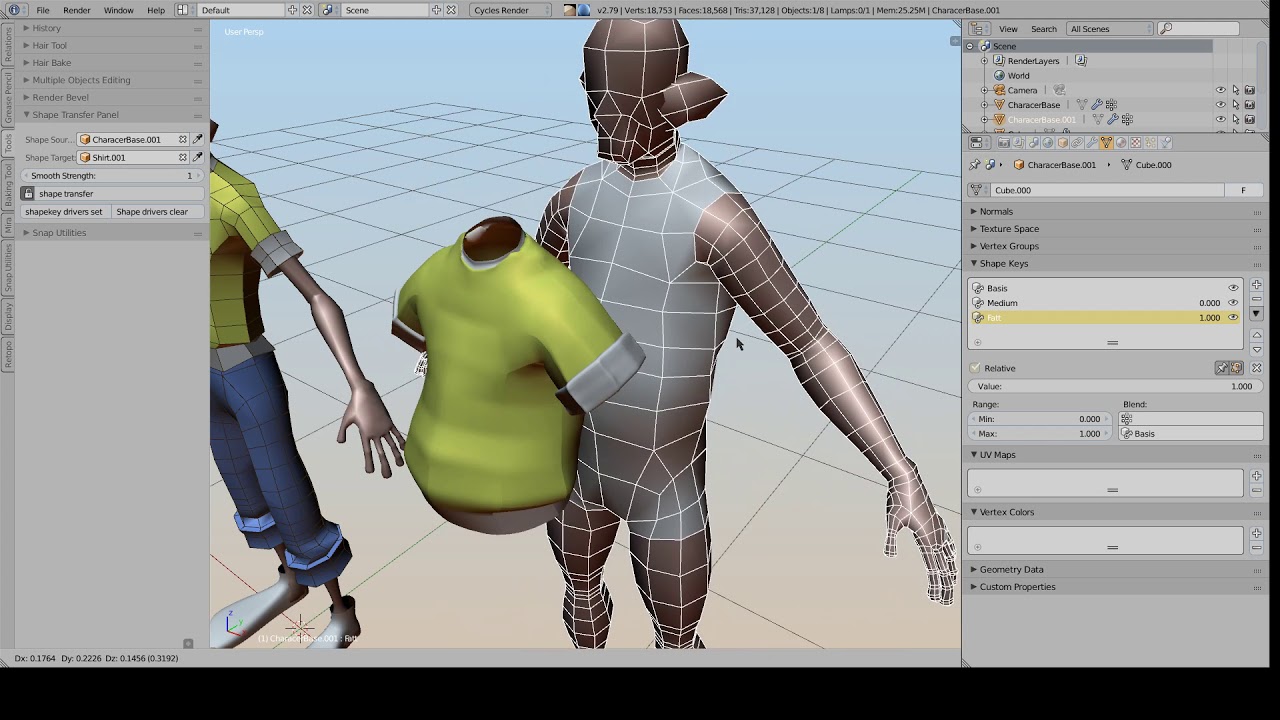

People are not trying to make Gollum, because making Gollum requires a hell of a lot of talent and experience, enough that if you had it, you’d be working in the business and using Maya. It’s kind of a chicken-and-egg problem. Nobody’s using Blender to make Gollum, so nobody much cares about Blender providing the tools you’d need to make Gollum.

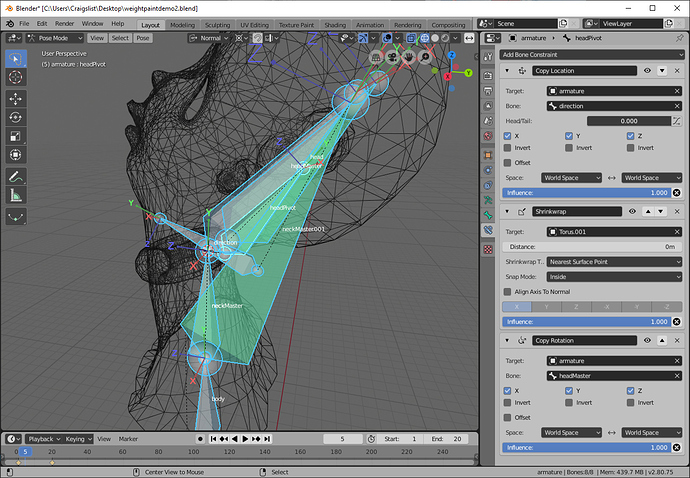

I think the ideal rigging nodes setup would have skinning nodes as well-- non-destructive access to weight paint tools, with parameters set from inputs like transforms and ray lengths and vectors, and of course, options to bake to fast, precalculated groups.

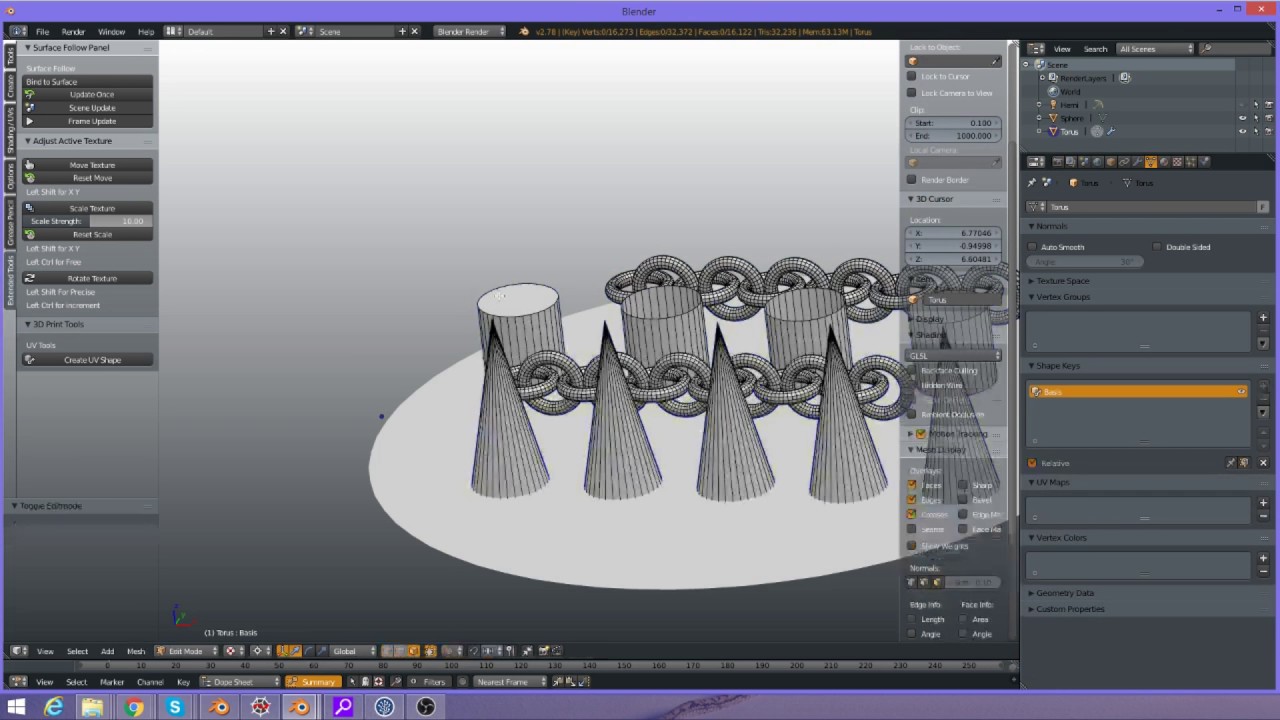

The main issue with all the proxies is just that it all contributes to the complexity ceiling. There are some things I make that are cool, but that are just too complicated to contemplate actually implementing. And all the complexity in a scene kind of adds up. (That’s part of what makes node structures good: you can modularize them arbitrarily in order to control that complexity.)

That’s essentially a UI issue. In my opinion, the bulk of it could be solved by modifier “references”, which create an invisible proxy at an arbitrary point in the modifier stack to be used as a reference by other modifiers or even future modifiers on the same object.
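To make the “references” idea a bit more concrete, here’s a rough sketch in plain Python. This is not Blender’s API and nothing like it exists in Blender as far as I know; `Reference`, `Modifier`, `evaluate`, `translate` and `blend_toward` are all made-up names. A reference entry changes nothing, it just snapshots the mesh at that point in the stack so that later modifiers can read it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Vec = Tuple[float, float, float]
Mesh = List[Vec]  # toy "mesh": just a list of vertex positions


@dataclass
class Reference:
    """Hypothetical stack entry: snapshots the mesh here, modifies nothing."""
    name: str


@dataclass
class Modifier:
    """Hypothetical modifier: sees the current mesh plus earlier snapshots."""
    name: str
    apply: Callable[[Mesh, Dict[str, Mesh]], Mesh]


def evaluate(mesh: Mesh, stack: list) -> Mesh:
    """Run the stack top to bottom, recording a snapshot at each Reference."""
    snapshots: Dict[str, Mesh] = {}
    for entry in stack:
        if isinstance(entry, Reference):
            snapshots[entry.name] = list(mesh)  # the invisible proxy
        else:
            mesh = entry.apply(mesh, snapshots)
    return mesh


def translate(offset: Vec):
    """Modifier body that moves every vertex by a fixed offset."""
    def apply(mesh: Mesh, snapshots: Dict[str, Mesh]) -> Mesh:
        return [tuple(a + b for a, b in zip(v, offset)) for v in mesh]
    return apply


def blend_toward(ref_name: str, factor: float):
    """Modifier body that pulls each vertex toward its position in a snapshot."""
    def apply(mesh: Mesh, snapshots: Dict[str, Mesh]) -> Mesh:
        ref = snapshots[ref_name]
        return [tuple(a + (b - a) * factor for a, b in zip(v, r))
                for v, r in zip(mesh, ref)]
    return apply


base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
stack = [
    Modifier("move", translate((0.0, 0.0, 2.0))),
    Reference("after_move"),                      # snapshot at z = 2
    Modifier("move_more", translate((0.0, 0.0, 4.0))),
    Modifier("pull_back", blend_toward("after_move", 0.5)),
]
result = evaluate(base, stack)
print(result)
```

The point is only that the last modifier reads the object’s own earlier state, without a second proxy object cluttering the scene.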

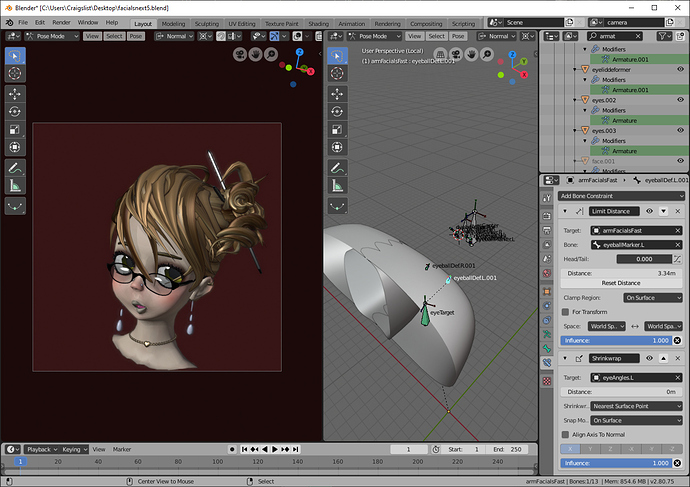

There really ought to be a structure for “handles” as well-- something that, selected and transformed in object or pose mode, is only pretending to be selected and transformed, but which is actually selecting and transforming something else-- something upon which the apparent selection depends. This takes care of a huge fraction of dependency issues. (For example, something like a no-falloff hook, located at the origin, being controlled by a vertex-parented empty.)
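A toy version of the “handle” idea, again in plain Python rather than anything from Blender’s API. `Item` and `Handle` are hypothetical names, and the assumption is that a handle stores no transform of its own: moving it moves the target, and its displayed location is derived from the target.

```python
from dataclasses import dataclass
from typing import Tuple

Vec = Tuple[float, float, float]


@dataclass
class Item:
    """Hypothetical stand-in for the thing that really gets transformed."""
    name: str
    location: Vec = (0.0, 0.0, 0.0)

    def translate(self, delta: Vec) -> None:
        self.location = tuple(a + b for a, b in zip(self.location, delta))


@dataclass
class Handle:
    """Hypothetical handle: looks selectable and movable, forwards everything."""
    name: str
    target: Item
    offset: Vec = (0.0, 0.0, 0.0)  # where the handle is drawn relative to the target

    @property
    def location(self) -> Vec:
        # The handle's apparent position depends on the target, not the reverse.
        return tuple(a + b for a, b in zip(self.target.location, self.offset))

    def translate(self, delta: Vec) -> None:
        # "Moving" the handle actually moves the target.
        self.target.translate(delta)


hook = Item("hook")
grab = Handle("grab", target=hook, offset=(0.0, 0.0, 1.0))
grab.translate((1.0, 0.0, 0.0))
print(hook.location, grab.location)
```

Because the dependency only runs one way (handle location from target), there’s no cycle to resolve, which is the whole appeal.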