Nice work with that EEVEE scene!

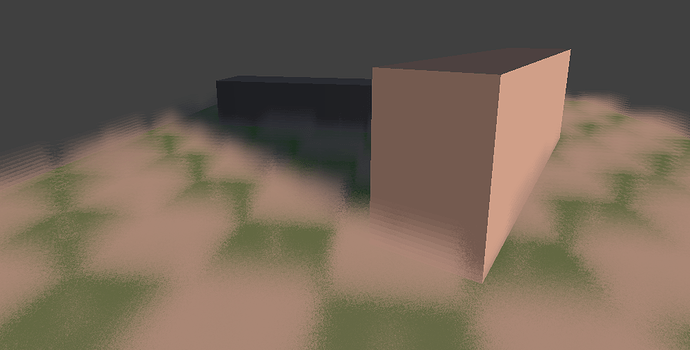

Shaded ground fog

The trick to making fog is figuring out where the normals should face: they should face upwards. This way they catch mostly the sky lighting rather than responding to where the light is coming from (which would reveal the planes themselves).

Couple that with a high roughness or low hardness and your fog will behave nicely in a variety of lighting scenarios:

In this case I’ve used a checkerboard texture to modulate the height of the fog, and 12 horizontal fog planes. I’ve also used a noise texture to distort the texture sample so that the edges of the fog texture aren’t so visible. If you have a more gradual fog shape you may not need that.

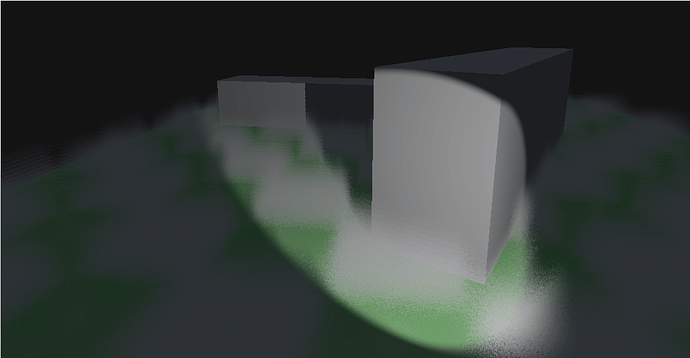

So currently this effect uses 24 texture samples per pixel (two per plane across the 12 planes), which is pretty poor really. With a smoother fog “shape” and eliminating the noise sample, that would go down to 12, which is still quite high. Oh well.
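For reference, the sample count is just planes times texture lookups per plane. A trivial sketch of that arithmetic (the function name is made up here, and it assumes the worst case where every fog plane covers the pixel):

```python
def fog_samples_per_pixel(planes, lookups_per_plane):
    """Worst-case texture samples for a pixel covered by every fog plane."""
    return planes * lookups_per_plane


# 12 planes, each sampling the noise texture plus the fog shape texture
current = fog_samples_per_pixel(12, 2)
# Same 12 planes with the noise lookup removed
without_noise = fog_samples_per_pixel(12, 1)
print(current, without_noise)
```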

If you want to use non-horizontal planes, then you can use a script (or Blender’s Normal Edit modifier) to force the normals to point vertically.
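A minimal sketch of such a script, assuming the regular Blender Python API (`Mesh.normals_split_custom_set_from_vertices`, plus `use_auto_smooth` on the versions that still have that property). The helper names are made up for this example, and it is not necessarily what the blend file below does. It also assumes the object itself is not rotated, since custom normals are stored in object space.

```python
def upward_normals(vertex_count, up=(0.0, 0.0, 1.0)):
    """Return one 'up' normal per vertex."""
    return [tuple(up) for _ in range(vertex_count)]


def force_normals_up(mesh):
    """Overwrite the custom normals of a Blender mesh datablock with +Z.

    Assumption: +Z in object space is also world up (object not rotated).
    """
    # Older Blender versions only display custom normals with auto smooth on;
    # newer versions dropped the property, hence the hasattr check.
    if hasattr(mesh, "use_auto_smooth"):
        mesh.use_auto_smooth = True
    mesh.normals_split_custom_set_from_vertices(
        upward_normals(len(mesh.vertices))
    )

# Inside Blender, with the fog object active, usage would look like:
#   import bpy
#   force_normals_up(bpy.context.object.data)
```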

Blend File:

groundfog.blend (666 KB)

I was thinking it would be interesting to use vertex color on the ground, passed to the GPU, to animate wind on grass / fog etc

(so an explosion could animate shader grass or this fog etc)

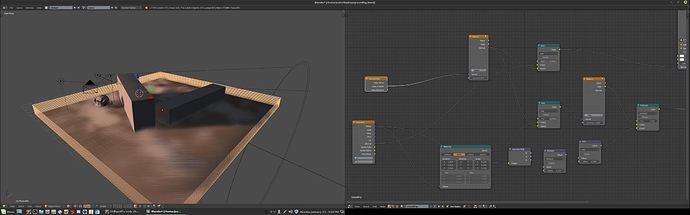

I slightly modified this using a cloud texture and setting the noise input to use the camera viewdepth…I was just messing around…as I told you before…I’m no node-ologist.

I would be interested if anyone could find a way to animate this :)…I may just throw in some UV scroll and add some vertex color transparency for the edges…ofc that would mean some insetting or subdividing…inset is probably more economical though.

I was playing with this and here is what I came out with…

https://drive.google.com/open?id=0B463PAYktLy_Vzk3ZmZTYnY5bms

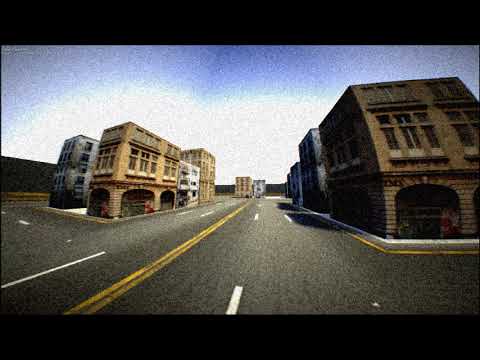

And some more Eevee goodness thanks to Yul:

Looking good !

Isn’t this the model used for the EEVEE demo they held a while back?

Yes it is. There are a whole bunch of EEVEE scenes over at: https://www.blender.org/2-8/

I’m thinking I’ll do a vehicle physics rig on the “Wanderer” scene sometime soon…

how is the frame rate? I could not make it out in the video…my children steal the bandwidth

It says 60.0 fps in the profiler, but I wonder what hardware sdgeoff is using

I guess the scene and the settings were simple enough to not drag a lot. Still looks amazing !

That was running on a GTX1060, so not the most powerful, but still in the mid-high spec region. The scene as downloaded from blender.org ran at about 10FPS. That was because it had about 12 lights (and in EEVEE all lights have shadows), so every time an object moved it did something like 72 shadow-texture-renders (assuming EEVEE uses cube-mapped shadows). In that video I stripped it back to just two lights, and it runs very well.
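The 72 figure is just lights times cube-map faces. A quick sketch of that estimate, with the same assumption as above that every light uses a six-faced cube-mapped shadow (the function name is made up for illustration):

```python
CUBE_MAP_FACES = 6  # assumption: one shadow render per cube-map face


def shadow_renders_per_update(light_count, renders_per_light=CUBE_MAP_FACES):
    """Shadow-texture renders needed when something moves in the scene."""
    return light_count * renders_per_light


as_downloaded = shadow_renders_per_update(12)  # the scene from blender.org
stripped_back = shadow_renders_per_update(2)   # the version in the video
print(as_downloaded, stripped_back)
```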

Yesterday youle put in a Python binding to disable shadows for lights, so that should allow more lights without the crazy performance hit.

fairly certain just about the only way to do point shadows is with cube mapped shadows, but I’m no shader guru…as I have said before :). I am however very curious about performance in 2.8 with similar settings to current upbge…but that is possibly for another thread(and time) when everything is in a more complete state…still, thanks for sharing.

Point light shadows are also possible with dual paraboloid mapping, but that can result in a little distortion and low detail in a certain ring. However, it requires rendering just 2 shadow textures. Later on Yul could consider having a look at this method. Also, it would be nice to be able to layer shadow rendering and receiving (e.g. making only certain layers be rendered in the shadows and only objects on certain layers receive the shadows). This would allow baking most of the scene in the many situations where dynamic light is not needed, while still letting the light cast shadows from dynamic objects like the player.
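For anyone curious, here is a rough sketch of the usual dual paraboloid projection, written in Python purely for illustration (in practice it would live in a shader, and this is not EEVEE or UPBGE code). It assumes the two maps face +Z and -Z from the light; the function name is made up.

```python
import math


def dual_paraboloid_uv(direction):
    """Map a direction from the light to (map_index, u, v).

    map_index 0 is the front paraboloid (+Z hemisphere), 1 is the back (-Z).
    u and v fall in [-1, 1].
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length
    if z >= 0.0:
        return 0, x / (1.0 + z), y / (1.0 + z)
    return 1, x / (1.0 - z), y / (1.0 - z)
```

Directions with z close to 0 land on the rim of both maps, which is the ring where detail drops off. With two renders per light instead of six, 12 lights would need 24 shadow renders rather than 72.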

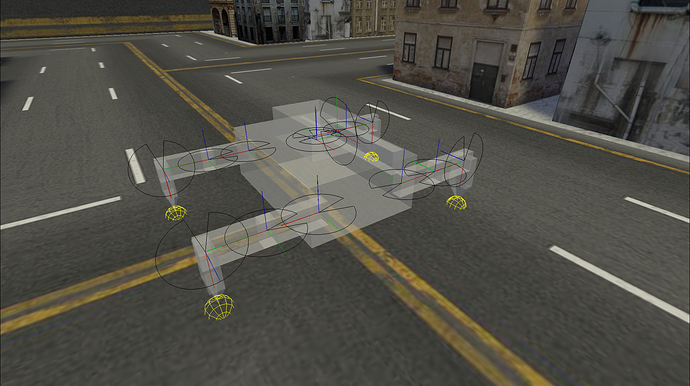

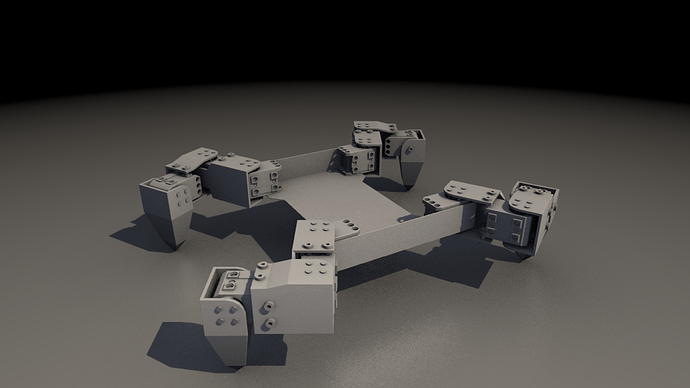

Woah, been ages since I posted here eh! Well here’s a little preview of something I’ve been working on:

It’s part of a project that also includes physical hardware. To give you some ideas what it is:

Mockup of mechanics (likely to change hugely)

My goodness, it’s going to need hundreds of bolts.

geometry shader for the greebles? (can they target a bunch of meshes w/diff transforms?)

you going with bone parenting / ik for the animation initially?

The buildings are just plain textures. Nothing fancy. The bolts on the robot are modeled in (because that’s actually going to be built). There isn’t really much fancy from a BGE side in the above images.

The interface to the hardware and controller is completely abstracted. What this means is that the controller that will control the actual robot will also control the simulation of it. Coupled with the fact that the microcontroller (an ESP32) on the robot runs MicroPython, this means that the exact code running in the simulation will run on the actual robot!

What this means is that the simulation exposes functions like set_servo_parameter(servo_id, parameter, value) that the robot’s control system then accesses.
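To illustrate the idea (this is not the project's actual code), here is a minimal sketch of how a control system can stay unaware of whether it is talking to the simulation or to hardware. Only `set_servo_parameter(servo_id, parameter, value)` comes from the description above; the class names and the `"target_angle"` parameter are made up for the example.

```python
class SimulatedServoBus:
    """Stand-in for the simulation side.

    A real version would forward values to the physics joints; this one
    just records them so the example runs anywhere.
    """

    def __init__(self):
        self.state = {}

    def set_servo_parameter(self, servo_id, parameter, value):
        self.state[(servo_id, parameter)] = value


class LegController:
    """Control code that only knows about the set_servo_parameter interface."""

    def __init__(self, bus):
        self.bus = bus

    def move_joint(self, servo_id, angle_degrees):
        # "target_angle" is a hypothetical parameter name
        self.bus.set_servo_parameter(servo_id, "target_angle", angle_degrees)


bus = SimulatedServoBus()
LegController(bus).move_joint(3, 45.0)
print(bus.state)
```

On the robot, the same `LegController` would be handed an object with the same method that talks to the real servos instead.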

So no, the IK will be done in software, manually. It may end up as a C module depending on performance on the microcontroller. (The microcontroller and servos should be arriving at my door in the middle of next week)
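As a rough idea of what "IK in software" can mean, here is a sketch of the standard analytic solution for a two-segment leg working in a plane. The leg layout, segment lengths and function names are all assumptions for illustration; the real mech's geometry isn't described here and its solver may look quite different.

```python
import math


def two_link_ik(x, y, upper_len, lower_len):
    """Return (hip, knee) angles in radians that put the foot at (x, y).

    Targets out of reach are clamped to a fully stretched or folded leg.
    """
    dist_sq = x * x + y * y
    cos_knee = (dist_sq - upper_len ** 2 - lower_len ** 2) / (
        2.0 * upper_len * lower_len
    )
    cos_knee = max(-1.0, min(1.0, cos_knee))
    knee = math.acos(cos_knee)
    hip = math.atan2(y, x) - math.atan2(
        lower_len * math.sin(knee), upper_len + lower_len * math.cos(knee)
    )
    return hip, knee


def two_link_fk(hip, knee, upper_len, lower_len):
    """Forward kinematics, handy for checking the IK result."""
    x = upper_len * math.cos(hip) + lower_len * math.cos(hip + knee)
    y = upper_len * math.sin(hip) + lower_len * math.sin(hip + knee)
    return x, y
```

Feeding the angles from `two_link_ik` back through `two_link_fk` should reproduce any reachable target, which makes a cheap sanity check before the angles go anywhere near a servo.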

In the middle image, you can see the bullet-physics joints that are used to simulate the servos. They have been set to a realistic speed/torque, and all the objects are realistic masses. So with a bit of luck, the simulation should be fairly accurate.

And the simulated version walks:

Pretty badly, but hey, it’s going places.

I like the perspective of the camera. it makes it look like you’re in a threatening machine

Looks awesome.

It is also so mind bending to think that this is a simulation, I wonder how close the actual thing will feel like

@Nicholas_A: It’s because it is a threatening machine. See the turret on top of the in-simulation mesh? Yup, the real version will have that too.

The robot will (hopefully) be an entry for Mechwarfare 2019. If you’re interested, here’s a video of mechwarfare 2018. And here’s a video of a training match from the onboard (pilot’s) camera. From the onboard cam, you can see that the simulation roughly matches, and you can also see why I am bothering to simulate the video interference.

The first physical parts are arriving this week…

And this weekend’s plan is to get a first pass at omnidirectional motion on the simulated mech.