Hi guys, I have a very weird and frustrating problem that keeps happening at random with my 2 x GTX 1080 Ti Nvidia cards in Cycles: my second (lower) GPU gets disabled after I render an animation. It happens across many projects and is not tied to a specific scene. Here is exactly how the problem occurs:

- The animation render completes with no problems.

- I try to render again, either in the same Blender project or in another instance of Blender, and I get the error “CUDA error: Out of memory”. I know this normally points to a VRAM problem, but it happens even with the simplest scenes.

- When I open Blender’s User Preferences and disable the lower GPU, the render works fine using only the upper GPU. (And when I open a new Blender project, I only get the option of one GPU.)
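In case it helps anyone narrow this down, here is a minimal sketch of how per-GPU VRAM use could be checked right after the error shows up. It assumes `nvidia-smi` (which ships with the Nvidia driver) is on the PATH; the helper names `parse_gpu_memory` and `query_gpu_memory` are made up for illustration, and I am not sure what a GPU in this stuck state would report, so non-numeric fields are simply stored as `None`.

```python
import subprocess

# nvidia-smi query: one CSV row per GPU, memory values in MiB without units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text):
    """Parse nvidia-smi CSV rows into dicts; non-numeric values become None."""
    gpus = []
    for line in csv_text.strip().splitlines():
        parts = [p.strip() for p in line.split(",")]
        if len(parts) != 4:
            continue  # skip rows that do not match the expected layout
        index, name, used, total = parts
        gpus.append({
            "index": index,
            "name": name,
            "used_mib": int(used) if used.isdigit() else None,
            "total_mib": int(total) if total.isdigit() else None,
        })
    return gpus


def query_gpu_memory():
    """Run nvidia-smi and return the parsed per-GPU memory figures."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_memory(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpu_memory():
        print(gpu)
```

If the lower card still shows most of its memory as used after Blender has closed, that would suggest the old render process never released it.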

Now here is where it gets weird: when I try to close the Blender file in which the error occurred, Blender closes, but the console window won’t close. It is stuck. Even when I try to kill it with Task Manager, it won’t exit. I even installed special software that kills a specific process, and STILL it won’t exit.

I have also become familiar enough with my desktop’s fan noise that when there is a slight noise from the case, I know a process is running in the background, because the fans are not completely silent as they are at idle. So maybe the second GPU got stuck at some point and won’t let Blender exit?

Also, even when I try to shut down or restart the computer, it won’t respond. It says Windows is shutting down and the monitor goes black, but the PC stays on and won’t shut down. I have to hold the power button until the computer is off.

When I start the PC again, everything is back to normal and the second GPU works normally. I render a few animations, it randomly happens again, and I end up having to shut down the computer with the power button many times a day.

I tried updating the graphics driver and resetting Blender to factory settings, but it keeps happening randomly, even with the simplest scene.

And weirdly, it only happens once an animation is done. I can render heavy animations for 12-20 hours with both GPUs and it won’t stop (which should rule out overheating problems); it only happens when an animation is complete.

Also, the console sometimes shows an error (CUDA error: Invalid value in cuCtxDestroy(context), line 279), but not every time.

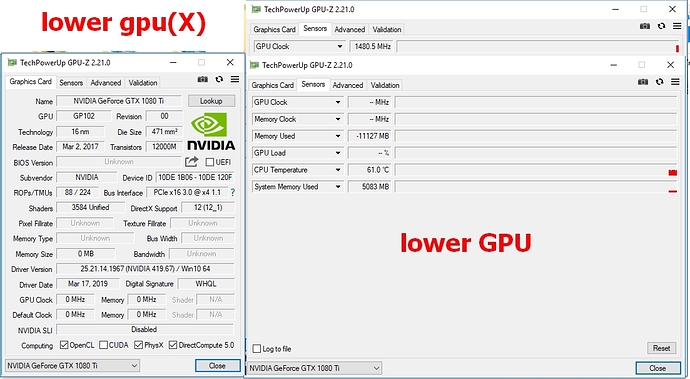

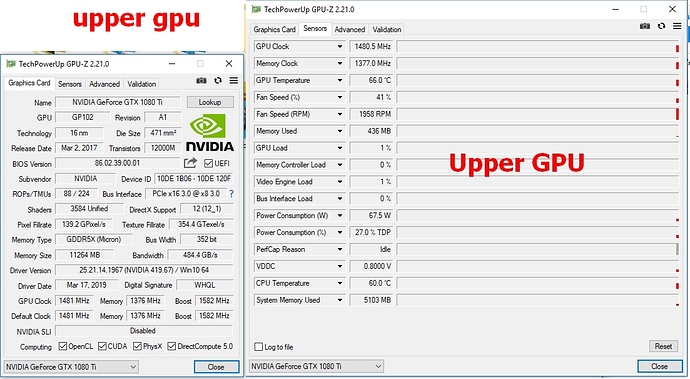

I attached GPU-Z screenshots of the upper GPU and the lower GPU (the GPU that gets disabled). Something doesn’t look right.

System specs: i7-7700K, 32 GB RAM, Windows 10, 2 x GTX 1080 Ti, EVGA SuperNOVA 1300 W, Blender version 2.79

If anyone can help or has even a slight idea of how to fix this, it would be really helpful.

Thanks!