First, HSV is an arbitrary model that is not grounded in colour science. If we think about what saturation is, we can think of it in terms of the ratio, or spacing, between channel values. If the distance between the red light at a certain level and the other lights is large, the colour is more saturated. If the differences are smaller, the lights produce a desaturated-looking output.

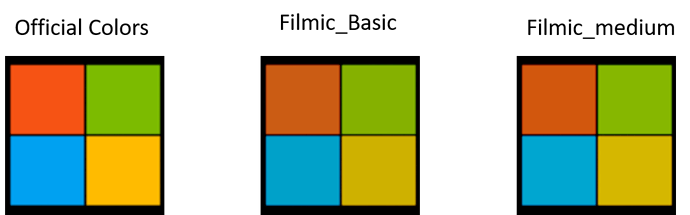
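To make the ratio idea concrete, here is a minimal sketch. The function name `channel_spread` and the sample triplets are made up for illustration, and the number it returns is a crude indicator of channel spacing, not a perceptual saturation metric.

```python
def channel_spread(rgb):
    """Relative gap between the largest and smallest channel (0.0 = neutral)."""
    hi, lo = max(rgb), min(rgb)
    if hi <= 0.0:
        return 0.0  # black has no meaningful ratio
    return (hi - lo) / hi


# Hypothetical scene-linear triplets, chosen only to show the trend.
saturated_red = (0.8, 0.1, 0.1)  # red sits far from the other two lights
muted_red = (0.8, 0.6, 0.6)      # same red level, other lights much closer

print(channel_spread(saturated_red))
print(channel_spread(muted_red))
```

The first triplet reports a much larger spread than the second, even though the red light is at the same level in both.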

Filmic does a log-like compression on the values, which immediately pulls them closer together than they were in the actual scene. There’s not much that we can do about this, as the values need to be crammed into the display-referred domain. And of course, as you cited, at the upper intensity levels we have to try to keep some semblance of the original intention of the colour without too much skew, so we tack on a controlled desaturation as we reach peak.
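A rough sketch of why a log-like encoding squeezes the ratios follows. The stop range (`MIN_EV`, `MAX_EV`), the middle grey anchor, and the function `log_encode` are assumptions picked for illustration; they are not Filmic's actual parameters or code.

```python
import math

MIDDLE_GREY = 0.18
MIN_EV, MAX_EV = -10.0, 6.0  # assumed range in stops around middle grey


def log_encode(x):
    """Map a scene-linear value to 0..1 with a plain log2 shaper."""
    x = max(x, 1e-10)  # avoid log2(0)
    ev = math.log2(x / MIDDLE_GREY)
    norm = (ev - MIN_EV) / (MAX_EV - MIN_EV)
    return min(1.0, max(0.0, norm))


# Hypothetical scene values: red is eight times the green light.
scene_red, scene_green = 0.8, 0.1

scene_ratio = scene_red / scene_green
encoded_ratio = log_encode(scene_red) / log_encode(scene_green)

print(scene_ratio, encoded_ratio)
```

With these assumed numbers, an 8:1 ratio in the scene lands somewhere around 1.3:1 after encoding, which is the crowding described above.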

In the area of the curve with a steep linear segment, the ratios are pulled apart a bit, and the result is that the original intention of the scene’s colours is closely restored. In the nonlinear curved parts near the toe and the shoulder, however, the values are going to drift.

Not many folks pay attention to the need for desaturation, hence many think Filmic is “just a tonemapper”. The higher end desaturation is equally important. If we didn’t do this, the Notorious Six (Pure Red, Pure Green, Pure Blue, Red + Green, Red + Blue, and Blue + Green) would never desaturate, and folks would be doing all sorts of contortions to cheat the values. Worse than this, we would still get quite undesirable colour skew as values get intense. Hence, a reasonable view transform absolutely must have a desaturation component.
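As a hedged illustration of what a high end desaturation can look like, the sketch below blends a scene-linear triplet toward its own luminance as the peak channel climbs. The function `desaturate_toward_peak`, the `start` and `end` thresholds, and the log2 ramp are all assumptions for this example; this is not how Filmic itself is implemented. The weights are the standard Rec.709 luminance coefficients.

```python
import math

REC709_WEIGHTS = (0.2126, 0.7152, 0.0722)


def desaturate_toward_peak(rgb, start=1.0, end=16.0):
    """Blend scene-linear RGB toward its luminance as the peak channel rises.

    Below `start` the colour is untouched; at `end` and above it is fully
    achromatic. Both thresholds are illustrative assumptions.
    """
    peak = max(rgb)
    if peak <= start:
        return tuple(rgb)
    t = min(1.0, math.log2(peak / start) / math.log2(end / start))
    luminance = sum(w * c for w, c in zip(REC709_WEIGHTS, rgb))
    return tuple(c + (luminance - c) * t for c in rgb)


# A pure red pushed up in exposure, one of the Notorious Six.
for stops in (0, 2, 4):
    red = (2.0 ** stops, 0.0, 0.0)
    print(stops, desaturate_toward_peak(red))
```

Without a step like this, the green and blue channels of a pure red stay at zero no matter how intense it gets, so it can never head toward white.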

How we achieve that, though, drifts into the domain of colour science. With Filmic, the ratios are desaturated in the linear domain. After all of the nonlinear bending via the compression and aesthetic curves, however, we run the risk that the original intention in the scene cannot be maintained. This can result in the hue drifting a bit. Worse still is the nature of colour perception.

Colour perception is a tricky creature, and part of that is the nonlinear psychophysical response to spectra. This means that hue linearity is virtually impossible to achieve. Filmic’s approach is good, but not as good as it could be. Even if it were, there would still be problems, as the entire transform chain is quite a complex beast when you dig into it like this.

The next iteration of Filmic has a more sophisticated approach to the saturation problem, but again, it will still have problems. Given the constraints, it is all about minimizing issues while giving a pixel pusher a good degree of creative control. Sadly, nothing is ideal when it comes to perception.

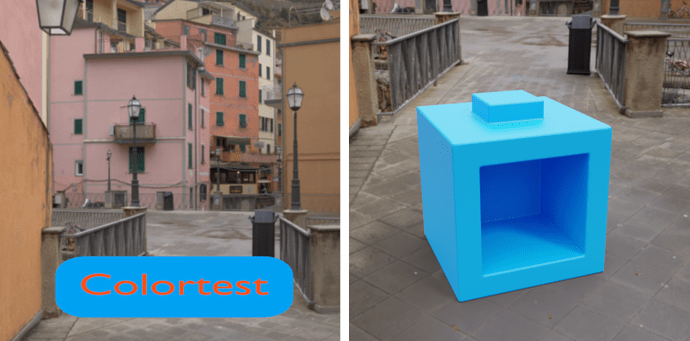

If you are trying to nail down brand identity colours, the best you can do is as follows:

- Identify the brand identity colour and the colour space it is described in.

- Translate that colour to the colour space you are working in.

- Expose the brand identity colour as best as you can within the known constraints to deliver the original ratio without destroying the creative intention of your work.

- Do a secondary grade on the key objects and try to push them more closely to the brand identity colour.
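The first two steps above can be sketched as follows, under some loud assumptions: the brand colour is published as an 8-bit sRGB hex code, and your working space is scene-linear with the same Rec.709 primaries, so only the sRGB transfer function needs undoing. A brand colour specified in another space would also need a primaries conversion. The hex value and the helper `hex_to_linear_rgb` are hypothetical.

```python
def srgb_to_linear(c):
    """Undo the piecewise sRGB transfer function for one channel in 0..1."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def hex_to_linear_rgb(hex_colour):
    """Convert an sRGB hex string such as '#RRGGBB' to linear RGB."""
    h = hex_colour.lstrip("#")
    return tuple(srgb_to_linear(int(h[i:i + 2], 16) / 255.0) for i in (0, 2, 4))


brand = hex_to_linear_rgb("#E4002B")  # hypothetical brand red

# The channel ratios are what you try to preserve while exposing the colour.
peak = max(brand)
ratios = tuple(c / peak for c in brand)

print(brand)
print(ratios)
```

The ratios give you a target to compare against after the view transform, which is where the secondary grade comes in.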

This of course might mean that you land dead on the brand identity colour, but it looks too surreal in the context of your other assets, or simply doesn’t fit the creative need of the work. If you familiarize yourself enough with the colour science side, however, you can at least make a strong case for yourself and put at ease anyone you need to. Not knowing or understanding can put you at a significant disadvantage, and make everyone uneasy.

TL;DR: brand identity colours are a challenge. I helped two studios deal with this very issue, and in the end, the easiest analogy is that you are using photographic tools to end up with a colour exactly as some designer designed it in a completely non-photographic situation. Secondary grades are the best way to dial those values in, but even then, as noted above, the overall results might not meet the creative needs of the work.