And 99 times out of 100, a 3D scene uses only surface textures. Volumetric textures, if needed, should be the exception: separate shaders (“volumetric texture maps”) that can be loaded into the Cycles kernel, but only if they are actually used in the current scene’s materials. Something like dependency injection. I am not a good programmer and don’t know the Cycles code, so I could be massively wrong about that. I don’t know if it is a valid concept in terms of the Cycles code, CUDA, or OpenCL, but to me it seems logical to load into the kernel only the texture maps that are used in the scene’s materials.

Using tons of huge 2D bitmaps puts a strain on my systems I can’t live with. And I need 2D/3D generators to provide the means for mixing smaller textures without any need for unwrapping. Probably not the best solution for everyone, but it helps me get to my goals in time.

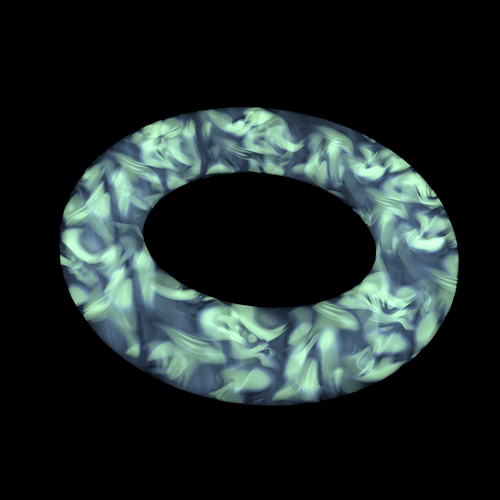

I don’t think you realize just how useful volume textures can be.

They can be used to make clouds and some types of fire without the need for simulation tools. They can also be used to enhance Blender’s own smoke and fire simulations, or to create mist with an uneven density, etc.

That’s only the start. If Cycles ever gets the ability to create iso-surfaces, then you can use textures for procedural (and solid shaded) sponges, dust-balls, packing material, etc. For more uses, check out what Houdini users are building with that app’s ability to use procedurals to create real objects based on OpenVDB technology.

They become even more useful when you start throwing emission in there (where you can have structures similar to lightning emit actual light), with all of the things above being possible with no need to actually model much of anything to boot.

I will also say this on the idea that procedurals should be limited to making 2D bitmap textures: do that and you essentially neuter their use (because you not only strip away their ability to be applied to objects completely seamlessly, but you also say goodbye to their ability to have infinite resolution without needing a lot of memory, and to be non-repeating).

Ace Dragon, I perfectly understand the difference between using volumetric textures and 2D maps. But in my opinion most artwork uses 2D images as textures almost exclusively, or treats Cycles’ volumetric procedural textures as a means of getting 2D maps in the end. As you mentioned, volumetric textures are really powerful only in complex and specific scenarios like clouds, fire, and other simulations. This kind of work should not be prioritized in Blender’s texture workflow, especially when 99% of users need much simpler solutions, similar to texture creation in Substance, Quixel, etc. In my opinion the texture node tree should be active and act like Substance Designer and similar tools, while the material node tree should be for building shaders (with the ability to create volumetric textures). But for normal use of 2D maps (what the average user creates most), and for fast creation of realistic materials, users should have access to many different 2D procedural textures, patterns, generators, etc. in the texture node tree.

But why deny access to powerful features, possibilities, and workflows just because some people might not need them for most cases? A lot of people might just be content using the new Principled shader for everything, should we get rid of the powerful and highly flexible building-block approach then?

The Substance-Designer type workflow with the texture node tree type could be implemented as an addition to the feature list instead. Look around the forum, there are lots of people who frequently use procedural textures at least as an augmentation to image textures (for things like breaking up patterns and introducing variation). A number of other people will forego image textures completely on some models if procedurals wind up as sufficient for a good level of quality.

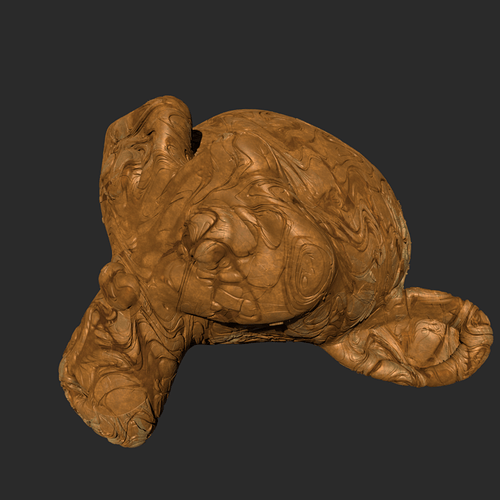

I haven’t even got to the point yet where, through microdisplacement, procedurals have also become a tool for creating high-resolution detail in models (detail that does not repeat and that holds up well in close-up shots). This is especially useful for terrain features, rough rocks, trees, etc.

I agree with that, and if I sound like I want to deny access to any features, that wasn’t exactly what I had in mind. I just want to shed some light on the simple 2D creation methods that are out there and that Blender is missing, and I think we should focus more on those possibilities. I know that some talented individuals can create complex and realistic materials in Blender, but we should give this ability to any new user by implementing proven solutions from the industry, like the Substance type of workflow.

I use Procedurals very often in my texturing. Procedural textures are very useful, in my opinion.

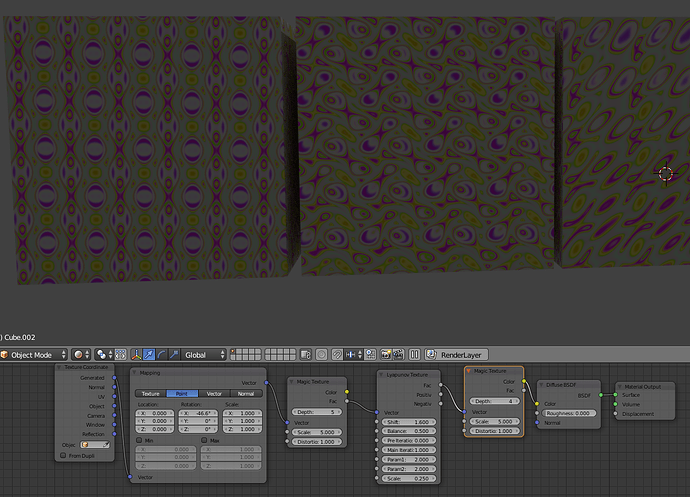

Maybe nice as wallpaper, fabric or cloth designs

Notice the cubes all use the same texture, but each shows a different side

That’s one of the reasons there is baking in Blender. Some procedurals are too heavy to calculate, and it’s better to bake them into a texture once we are happy with the whole. Substance and Mari do this automatically: the textures are baked with a texture engine and then sent to the render engine as bitmaps (things like curvature, surface exposure, etc. are calculated right when you load your model, and the other procedurals use this information).

In Blender we need to do these steps on our own, and not rely completely on the Cycles engine to do all the calculations at render time.

Things like curvature maps are better calculated offline, because the BVH tree is not suitable for dealing with geometry differentials (it’s designed for path tracing calculations!).
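To make the baking idea concrete, here is a minimal sketch in plain Python (not the Blender bake API): a procedural is evaluated once per texel and stored, so render time only needs a lookup. The `checker` function and the `bake` helper are made-up names for illustration.

```python
import math

def checker(u, v, scale=4.0):
    """Hypothetical procedural: a checker pattern over UV space, returns 0.0 or 1.0."""
    return float((math.floor(u * scale) + math.floor(v * scale)) % 2)

def bake(texture, width, height):
    """Evaluate the procedural at every texel centre and store the result.

    Returns a list of rows (height x width), i.e. a tiny greyscale bitmap.
    """
    return [
        [texture((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
        for y in range(height)
    ]

baked = bake(checker, 8, 8)
# After baking, shading needs only an array lookup instead of re-running the procedural.
```

The trade-off is the one discussed in this thread: the baked result has a fixed resolution and needs UVs, while the procedural stays resolution independent.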

On the topic of the new textures here, I’ve already posted my opinion. Maybe not fully explained, but here’s the why:

First, I don’t mind having those textures packed with Blender. Lyapunov has already been there for some time now, and in a very good place: as an OSL script! The Mandelbrot script can also be found here in the forum, or on other OSL resource sites.

In my opinion, Cycles should concentrate its strengths on providing good tools for creating new procedurals, and not be packed with procedural routines for every this and that. I do a lot of procedural textures from scratch, and the Cycles node system is one of the best I’ve seen in this matter (and the main reason I left all the other software I used in the last 20 years). But there are still lots of small things for which I need to switch to OSL to do them properly.

For example… having a simple node that could do a switch (if A, then B, else C), or having Floor and Ceil in the Math node, or even an IsEqual comparison, is far more useful than having any of the procedurals that come with Blender. Doing these operations with the nodes we have requires ‘workaround’ logic, and up to almost 10 nodes (I find this very ugly!).
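As a rough illustration of that ‘workaround’ logic, here is a Python sketch that builds a switch, a floor and a ceil out of only subtract, multiply, add, a truncating modulo and less-than/greater-than comparisons. The function names are made up, each one stands in for a single Math node, and this is just one possible construction, not necessarily how anyone in this thread wired it.

```python
import math

def less_than(a, b):
    """Stand-in for a 'Less Than' Math node: outputs 1.0 or 0.0."""
    return 1.0 if a < b else 0.0

def greater_than(a, b):
    """Stand-in for a 'Greater Than' Math node: outputs 1.0 or 0.0."""
    return 1.0 if a > b else 0.0

def switch(cond, b, c):
    """if cond then b else c, using only multiply/add (cond must be 0.0 or 1.0)."""
    return c + cond * (b - c)

def floor_nodes(x):
    """Floor built from a truncating modulo plus a correction for negatives."""
    frac = math.fmod(x, 1.0)            # keeps the sign of x, like C fmod
    return x - frac - less_than(frac, 0.0)

def ceil_nodes(x):
    """Ceil built the same way, correcting positive fractions upward."""
    frac = math.fmod(x, 1.0)
    return x - frac + greater_than(frac, 0.0)
```

For example, `switch(less_than(x, 0.5), a, b)` picks `a` when `x` is below 0.5 and `b` otherwise. Every helper here costs several nodes in a node tree, which is the point of the complaint above.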

If it were possible to have a simple loop system (even if locked to a maximum of N iterations), we could easily recreate most of the procedurals that come with Cycles, and so, so much more…
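To show what a loop locked to N iterations could look like, here is a small Python sketch of a Mandelbrot escape count with a fixed iteration budget and no early exit. The `alive` mask plays the role a comparison node would play in a node chain. The function name and the default of 16 iterations are my own assumptions, not an existing Cycles feature.

```python
def mandelbrot_fixed(cx, cy, max_iter=16):
    """Fraction of iterations (0..1) during which the point stayed bounded.

    The loop always runs exactly max_iter times; once the point escapes,
    the 'alive' mask freezes the state instead of breaking out.
    """
    zx, zy = 0.0, 0.0
    alive = 1.0
    count = 0.0
    for _ in range(max_iter):
        new_zx = zx * zx - zy * zy + cx
        new_zy = 2.0 * zx * zy + cy
        # Masked update: only advance z while the point is still alive.
        zx = zx + alive * (new_zx - zx)
        zy = zy + alive * (new_zy - zy)
        inside = 1.0 if zx * zx + zy * zy <= 4.0 else 0.0
        alive = alive * inside
        count += alive
    return count / max_iter
```

Because the iteration count is known up front, a loop like this can be unrolled into a flat chain of identical node groups, which is essentially what a bounded loop node would do for us.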

When I see some developer trying to put a new texture in the kernel, my main question is always: will this texture be a valuable resource for creating better and more interesting procedurals? If the answer is yes, I have nothing to oppose, and I welcome it (perhaps with a big smile on my face!!).

But I cannot consider Lyapunov and Mandelbrot as bricks for creating other procedurals. Not in the same way as we have Noise or Voronoi. Even the Brick texture is a bit superfluous in the kernel! Some time ago LazyDodo posted a remake of the Brick texture with brick rotations and rounded corners, all made with nodes and packed into a pynode, and it works great.

These kinds of scripts have a lot of potential! The kernel can be kept for doing the heavy lifting, and the textures are only loaded if in use. Not to mention the number of new procedural textures we could distribute with Blender, create on our own, share with the community…

i don’t really know how the whole kernel thing works, but could it be possible to make procedurals plugin-like, so if you don’t want them you don’t have to use extra ram? or would it be better to think of it like a “use small kernel” option, which cuts out memory hogs?

as far as procedural textures go, i use little other than voronoi, noise, and brick. don’t underestimate the brick texture! i use it to create offset maps to randomize the look of planks, tiles, stones, which i create from noise and voronoi.

baking doesn’t work for me, most of my objects (entire buildings) need the high resolution and randomness of computer generated textures. not to mention the pain of uv unwrapping, or keeping track of a load of textures. i do a lot of drafting and previewing, too.

btw, that volume posted earlier looked totally BA :ba:

I too think some additional maths and logic based nodes would make Cycles much more powerful.

One I suggested a while back is a generalised shader math node (in much the same way as we have the math node and colour mix nodes for values and colours). Currently we can add and mix shaders - but why not subtract, multiply, divide, difference etc - I have achieved some interesting results by making a hacky shader subtraction node group for example.

As you say - we need a comprehensive set of simple tools from which more complex behaviour can be built. Cycles node based materials are very powerful - but they still lack some basic tools that could make them even more powerful - or massively simplify the node group required to achieve a certain output.

That’s why I’m advocating for a more extensive use of Python Nodes. They are possible, and shareable, and there’s a lot we can do with them. It’s a pity that we don’t exploit this new world… Developers could focus on the kernel and SVM, the texture artists could develop more textures, and the 3D artists would have so much more in their toolbox.

as far as procedural textures go, i use little other than voronoi, noise, and brick. don’t underestimate the brick texture! i use it to create offset maps to randomize the look of planks, tiles, stones, which i create from noise and voronoi.

Voronoi and Noise are extremely difficult to do with nodes alone (though not impossible). They require some logic that we don’t have access to outside the kernel, like binary operations and loops… The Brick texture and lots of other patterns, on the other hand, are a bit easier to do with Python nodes, as their process is quite linear. The SVM could use some more improvements, but it’s already doing a good job.

You can do it to some extent. Adding or mixing shaders is a very simple process. A shader is just a function that deals with rays. Adding two different shaders means adding both functions to the material’s light reaction list. When we mix shaders, we are only scaling the colors that those functions use. This means that only the colors are changed, and subtracting two shaders is as simple as plugging a negative color into one of them (which is not physically based whatsoever). In fact, if you look at the OSL version of the Mix Shader, you’ll clearly see that the colors are scaled by the mix factor, and the closures are only multiplied by that color.
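A toy Python model of that description, just to make the bookkeeping visible: a shader is treated as a list of (closure name, color weight) pairs, Add concatenates the lists, and Mix scales the weights by the factor. This is only a conceptual sketch with made-up names; it is not the actual Cycles or OSL implementation.

```python
def closure(name, color):
    """A single closure with an RGB weight, e.g. closure('diffuse', (1, 1, 1))."""
    return [(name, tuple(float(c) for c in color))]

def scale_shader(shader, factor):
    """Multiply every closure weight by a scalar factor."""
    return [(name, tuple(c * factor for c in color)) for name, color in shader]

def add_shader(a, b):
    """Add Shader: both closures simply end up in the same list."""
    return a + b

def mix_shader(fac, a, b):
    """Mix Shader: scale the weights by (1 - fac) and fac, then add."""
    return add_shader(scale_shader(a, 1.0 - fac), scale_shader(b, fac))

def subtract_shader(a, b):
    """'Subtraction' as described above: add b with negated weights (not physical)."""
    return add_shader(a, scale_shader(b, -1.0))

mixed = mix_shader(0.25, closure("diffuse", (1, 1, 1)), closure("glossy", (0.8, 0.8, 0.8)))
```

In this model `mixed` holds a diffuse closure weighted by 0.75 and a glossy closure weighted by a quarter of its original color, which is all the mix factor ever touches.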

You can do noise with nodes? Isn’t the detail level a recursive thing? I did Mandelbrot as a chain connected set of node groups (output of one feeding into the other), but if I added the 9th ('ish) chain Blender would hang during calculating shaders stage.

Yes, you can. But creating a pseudo random generator with only the options you have in the Math node is already too expensive. There are other algorithms, which you really have to program, that are far better optimized for a render routine. Those should be in the kernel.

The SVM has its own limitations too, but with 16 recursion levels it still works. Although it is very sluggish…

There are only Math and Separate XYZ nodes in the Noise node group.
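For anyone curious how noise can come out of math operations alone, here is a hedged Python sketch: a cheap hash built from sine, multiply and a fractional part, bilinear value noise on top of it, and a fixed number of octaves for the detail levels. The sine-based hash is a well-known shader trick and is my own choice here; it is not the algorithm Cycles uses, and it is not the node group from the screenshot.

```python
import math

def fract(x):
    """Fractional part, built from floor (x - floor(x))."""
    return x - math.floor(x)

def hash2(ix, iy):
    """Cheap pseudo-random value in [0, 1) for an integer lattice point."""
    return fract(math.sin(ix * 12.9898 + iy * 78.233) * 43758.5453)

def value_noise(x, y):
    """Bilinearly interpolated lattice noise with smoothstep weights."""
    ix, iy = math.floor(x), math.floor(y)
    fx, fy = x - ix, y - iy
    sx = fx * fx * (3.0 - 2.0 * fx)
    sy = fy * fy * (3.0 - 2.0 * fy)
    a, b = hash2(ix, iy), hash2(ix + 1, iy)
    c, d = hash2(ix, iy + 1), hash2(ix + 1, iy + 1)
    top = a + sx * (b - a)
    bottom = c + sx * (d - c)
    return top + sy * (bottom - top)

def fractal_noise(x, y, octaves=4):
    """Sum a fixed number of octaves; each level doubles frequency, halves amplitude."""
    total, norm, amp, freq = 0.0, 0.0, 1.0, 1.0
    for _ in range(octaves):
        total += amp * value_noise(x * freq, y * freq)
        norm += amp
        amp *= 0.5
        freq *= 2.0
    return total / norm
```

Each octave repeats the whole `value_noise` sub-graph, so 16 levels in nodes means 16 copies of it, which goes some way to explaining why it gets sluggish.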

Well, at least you can render some nice fractal volumes

I kind of wish it had some more parameters though

Isn’t it tedious to do all that in nodes instead of writing it in OSL?

Nodes have the advantage of being able to render on the GPU.

OSL is CPU only.

I know. Shouldn’t the goal then be to get OSL running on the GPU, instead of trying to shoehorn esoteric functions into SVM?

Extending SVM and getting OSL running on the GPU are pretty much the same thing. I implemented the mathy bits of OSL on the GPU once, and most of the actual changes to SVM were just extensions to the Math node. Given this had a 0% chance of making it into the codebase, I stopped work on it. I recently wrote a compiler to turn OSL (a small subset of it; it’s still bound by any limitations in SVM) into node groups (it is being used in bricktricks). Naturally this is not as efficient as native support, but it’s better than nothing (and still faster than OSL on the CPU). I’m currently rewriting it in Python and hope to turn it into an addon one day. Currently, to check for equality I have to use 3 nodes (subtract, absolute, less than), and atan2 I have to approximate with 36 nodes. I see no reason why these things should not be ‘shoehorned’ into SVM. However, every time I try to make a change to Cycles, I’m met with an ‘oh noes, I’ll spill 3 more registers! this will make things slower!!’, yet the same devs have no issue merging the monster of a shader that is the Disney BSDF (awesome work, love it! no disrespect there). The signals are pretty clear: the main devs have no interest in improving the procedural side of Cycles, or in allowing other people to do it.
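The two workarounds mentioned above can be sketched in Python like this. `is_equal` is the three-node chain (subtract, absolute, less than). `atan2_nodes` is only one possible reconstruction, which assumes an arctangent operation is available and uses comparisons and multiply/add for the quadrant handling; it is not the 36-node version from the post, and the epsilon and the divide-by-zero convention are my own assumptions.

```python
import math

def less_than(a, b):
    return 1.0 if a < b else 0.0

def greater_than(a, b):
    return 1.0 if a > b else 0.0

def is_equal(a, b, eps=1e-6):
    """Subtract -> Absolute -> Less Than; eps is an assumed tolerance."""
    return less_than(abs(a - b), eps)

def safe_divide(a, b):
    """Assumed convention: division by zero yields 0.0 instead of an error."""
    return a / b if b != 0.0 else 0.0

def atan2_nodes(y, x):
    """atan2 from atan plus branch-free quadrant correction."""
    x_pos = greater_than(x, 0.0)
    x_neg = less_than(x, 0.0)
    x_zero = 1.0 - x_pos - x_neg
    y_neg = less_than(y, 0.0)
    y_pos = greater_than(y, 0.0)
    base = math.atan(safe_divide(y, x))
    # Left half-plane: shift by +pi for y >= 0, by -pi for y < 0.
    shift = x_neg * (1.0 - 2.0 * y_neg) * math.pi
    # On the y axis the result is +/- pi/2 (or 0 at the origin).
    axis = x_zero * (y_pos - y_neg) * (math.pi / 2.0)
    return (x_pos + x_neg) * base + shift + axis
```

Counting one node per operation, even this simplified version lands well into double digits, so a native atan2 or comparison in the Math node would save a lot of wiring.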