How do I write a script that checks which objects a camera sees and performs operations only on those objects? And how do I do that with animations?

This is a fairly deep topic, so I won't go into all of the details, but I'll give you a high-level overview that can help with the research you'll need to do to pull it off.

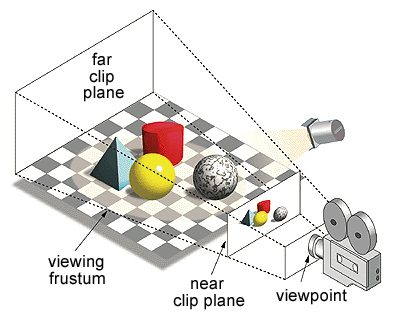

Basically, you need to do an AABB (axis-aligned bounding box) vs. frustum intersection test. Think of "everything the camera sees" as a tapered box: the view frustum.
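If you need the frustum as six planes, one common approach (the Gribb/Hartmann plane extraction, swapped in here rather than taken from this thread) is to read them off the combined projection × view matrix. Below is a minimal pure-Python sketch. It assumes a row-major 4×4 matrix given as nested lists and OpenGL-style clip space, where a point is visible when -w ≤ x, y, z ≤ w. `frustum_planes` and `point_inside` are hypothetical helper names. In Blender you could presumably assemble such a matrix from `cam.calc_matrix_camera(depsgraph)` and `cam.matrix_world.inverted()`, but verify that wiring before relying on it.

```python
def frustum_planes(m):
    """Extract six inward-facing planes (a, b, c, d) from a 4x4 matrix.

    Assumption: m is row-major (m[i] is row i) and maps points to
    OpenGL-style clip space. A point (x, y, z) is on the inside of a
    plane when a*x + b*y + c*z + d >= 0.
    """
    r0, r1, r2, r3 = m

    def combine(u, v, sign):
        return tuple(ui + sign * vi for ui, vi in zip(u, v))

    return [
        combine(r3, r0, +1),  # left:   w + x >= 0
        combine(r3, r0, -1),  # right:  w - x >= 0
        combine(r3, r1, +1),  # bottom: w + y >= 0
        combine(r3, r1, -1),  # top:    w - y >= 0
        combine(r3, r2, +1),  # near:   w + z >= 0
        combine(r3, r2, -1),  # far:    w - z >= 0
    ]


def point_inside(planes, p):
    """True if point p lies on the inner side of every plane."""
    x, y, z = p
    return all(a * x + b * y + c * z + d >= 0 for a, b, c, d in planes)


# With the identity matrix, clip space equals world space,
# so the "frustum" is simply the cube [-1, 1]^3.
identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
planes = frustum_planes(identity)
print(point_inside(planes, (0.5, 0.0, 0.0)))  # True
print(point_inside(planes, (2.0, 0.0, 0.0)))  # False
```

The extracted planes are not normalized. That is fine for inside/outside tests, but you would need to normalize them before measuring distances to the planes.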

For every object in your scene, test its axis-aligned bounding box against the planes of this view frustum to see whether any part of the box intersects it. If so, the object is at least partly inside the camera's view.
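The per-object test can be sketched with the usual "positive vertex" trick: for each plane, you only need to check the box corner that lies furthest along the plane's inward normal. If even that corner is outside, the whole box is outside. This is a minimal sketch, assuming the planes are (a, b, c, d) tuples whose normals point into the frustum and the box is given as min/max corner tuples. `aabb_in_frustum` is a hypothetical name. In Blender, the world-space box could be built from the eight `obj.bound_box` corners transformed by `obj.matrix_world`.

```python
def aabb_in_frustum(planes, box_min, box_max):
    """Return False only if the AABB lies entirely outside some plane.

    Assumption: planes are (a, b, c, d) with normals pointing into the
    frustum. Conservative: a box just outside a frustum corner may still
    return True.
    """
    for a, b, c, d in planes:
        # "Positive vertex": the box corner furthest along the plane normal
        px = box_max[0] if a >= 0 else box_min[0]
        py = box_max[1] if b >= 0 else box_min[1]
        pz = box_max[2] if c >= 0 else box_min[2]
        if a * px + b * py + c * pz + d < 0:
            return False  # even the most inward corner is outside: cull
    return True  # inside or intersecting (possibly a false positive)


# Hypothetical frustum: the cube [-1, 1]^3 described by six inward planes
cube = [(1, 0, 0, 1), (-1, 0, 0, 1), (0, 1, 0, 1),
        (0, -1, 0, 1), (0, 0, 1, 1), (0, 0, -1, 1)]
print(aabb_in_frustum(cube, (0.5, 0.5, 0.5), (2, 2, 2)))  # True (intersects)
print(aabb_in_frustum(cube, (3, 3, 3), (4, 4, 4)))        # False (outside)
```

Each object costs at most six dot products, which is why this kind of test is the usual choice for frustum culling. The occasional false positive near frustum corners is normally accepted as the price of that speed.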

Once you have a list of objects the camera can see, you can iterate through them and perform whatever operations you want.

I know the frustum stuff (but thanks, it was a good explanation). I was just hoping there were existing functions that handled a lot of it, or at least some preexisting method I could build on?

Not that I’m aware of, unfortunately.

Dangit. Thanks, though…

Modified code from the Blender Stack Exchange question "ray_cast function not able to select all the vertices in camera view":

Because these tests can take a long time in complex scenes, there are three separate functions: one tests each object's origin, one tests polygon centers, and one tests every vertex of objects in camera view. The vertex check is the most accurate but also the slowest.

As soon as any polygon center or vertex of an object is found to be visible, the object is added to the list and the loop moves on to the next object, skipping the remaining tests for that object.

```python
import bpy
from bpy_extras.object_utils import world_to_camera_view

# Deselect all polygons and edges of a mesh object (helper, not called below)
def DeselectEdgesAndPolygons(obj):
    for p in obj.data.polygons:
        p.select = False
    for e in obj.data.edges:
        e.select = False

# Get context elements: scene, camera and visible mesh objects
scene = bpy.context.scene
cam = bpy.data.objects['Camera']
vis_objs = [ob for ob in bpy.context.view_layer.objects
            if ob.visible_get() and ob.type == 'MESH']
depsgraph = bpy.context.evaluated_depsgraph_get()

# World-space camera position (cam.location is only correct if unparented)
cam_pos = cam.matrix_world.translation

# Threshold to test whether the ray-cast hit corresponds to the tested point
limit = 0.1

def in_cam_frame(co_world):
    # Project to normalized camera coordinates: x and y in [0, 1] inside
    # the frame, z > 0 in front of the camera
    co2D = world_to_camera_view(scene, cam, co_world)
    return 0.0 <= co2D.x <= 1.0 and 0.0 <= co2D.y <= 1.0 and co2D.z > 0

def ray_hits_point(co_world):
    # Cast a ray from the camera towards the point; if the first hit is
    # close to the point, nothing is blocking it
    hit, location, *_ = scene.ray_cast(
        depsgraph, cam_pos, (co_world - cam_pos).normalized())
    return hit and (co_world - location).length < limit

def objs_in_cam_vert():
    objs_in_cam = []
    for obj in vis_objs:
        # Use the evaluated object so modifiers are included in the mesh
        obj_eval = obj.evaluated_get(depsgraph)
        mWorld = obj_eval.matrix_world
        for v in obj_eval.data.vertices:
            co = mWorld @ v.co
            if in_cam_frame(co) and ray_hits_point(co):
                # Store the original object and skip its remaining vertices
                objs_in_cam.append(obj)
                break
    return objs_in_cam

def objs_in_cam_face_ctr():
    objs_in_cam = []
    for obj in vis_objs:
        obj_eval = obj.evaluated_get(depsgraph)
        mWorld = obj_eval.matrix_world
        for poly in obj_eval.data.polygons:
            co = mWorld @ poly.center
            if in_cam_frame(co) and ray_hits_point(co):
                # Store the original object and skip its remaining faces
                objs_in_cam.append(obj)
                break
    return objs_in_cam

def objs_in_cam_origin():
    objs_in_cam = []
    for obj in vis_objs:
        origin = obj.matrix_world.translation
        if not in_cam_frame(origin):
            continue
        # The origin usually sits inside the mesh, so the ray stops at the
        # surface; accept the object if it is the first thing the ray hits
        hit, _, _, _, hit_obj, _ = scene.ray_cast(
            depsgraph, cam_pos, (origin - cam_pos).normalized())
        if hit and hit_obj.name == obj.name:
            objs_in_cam.append(obj)
    return objs_in_cam

print('-------------------')
#print(objs_in_cam_vert())
#print(objs_in_cam_face_ctr())
print(objs_in_cam_origin())
```

Thank you, that is a good place for me to start learning!!

This is one of many reasons an AABB test is better in every way. While this method technically works, I don't know why anyone would choose raycasts (computationally expensive, requires edge-case testing) over a basic plane intersection test (computationally inexpensive and extremely straightforward). There's a reason basically every game engine does frustum culling this way.

That depends on whether you want to verify that the object is both in camera view and not obscured by other objects in the scene.

The check `co2D = world_to_camera_view(scene, cam, obj.location)` followed by `if 0.0 <= co2D.x <= 1.0 and 0.0 <= co2D.y <= 1.0 and co2D.z > 0:` covers the top, bottom, left and right view planes, adjusted for the camera's perspective. The z test could be refined to respect the camera's clip start and end distances. But if you need to know that the object will actually appear, partly or fully, in a rendered frame, I'm not sure you can get away with anything less than a ray-cast test.
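A sketch of that z refinement, assuming `co2D` is the vector returned by `world_to_camera_view` (whose z is the depth in front of the camera, in scene units) and that the limits come from the camera data's `clip_start` and `clip_end`. `in_camera_view` is a hypothetical helper. It accepts plain tuples, so it can also run outside Blender.

```python
def in_camera_view(co2D, clip_start, clip_end):
    """Frame test plus clipping range.

    Assumption: co2D is (x, y, z) as returned by world_to_camera_view,
    where x and y are normalized frame coordinates and z is the depth
    in front of the camera.
    """
    x, y, z = co2D
    return 0.0 <= x <= 1.0 and 0.0 <= y <= 1.0 and clip_start <= z <= clip_end


print(in_camera_view((0.5, 0.5, 5.0), 0.1, 100.0))    # True
print(in_camera_view((0.5, 0.5, 200.0), 0.1, 100.0))  # False: beyond clip end
```

In Blender, a call might look like `in_camera_view(world_to_camera_view(scene, cam, point), cam.data.clip_start, cam.data.clip_end)`.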

Granted, I did not provide a bounding-box n/p-vertex check, which would be a good compromise for filtering visible objects, but the script was only intended as an example.
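Another cheap pre-filter that can run before the ray casts works in screen space. Project the eight corners of an object's bounding box (for example `obj.matrix_world @ Vector(corner)` for each `corner` in `obj.bound_box`) with `world_to_camera_view`, then check whether their 2D extent overlaps the frame. The sketch below (hypothetical name `bbox_may_be_in_view`) only takes the projected (x, y, z) tuples as a list, so it runs without Blender. It is conservative: it may keep objects that turn out to be hidden or just outside the frame. It ignores the clipping range and occlusion, so the ray casts are still needed afterwards.

```python
def bbox_may_be_in_view(projected):
    """Conservative visibility pre-filter for a projected bounding box.

    Assumption: projected is a list of the 8 box corners as (x, y, z)
    tuples in the normalized camera coordinates of world_to_camera_view.
    """
    in_front = [p for p in projected if p[2] > 0]
    if not in_front:
        return False  # the whole box is behind the camera
    if len(in_front) < len(projected):
        return True   # box crosses the camera plane: keep it, test further
    xs = [p[0] for p in in_front]
    ys = [p[1] for p in in_front]
    # With all corners in front, the box's projection stays inside the 2D
    # extent of its projected corners; if that extent misses the [0, 1]
    # frame, the box cannot be in view
    return max(xs) >= 0.0 and min(xs) <= 1.0 and max(ys) >= 0.0 and min(ys) <= 1.0


# A box larger than the view: no corner lands in the frame, but it is kept
big = [(-1, -1, 5), (2, -1, 5), (-1, 2, 5), (2, 2, 5)] * 2
print(bbox_may_be_in_view(big))  # True
```

Using the 2D extent instead of "is any corner inside the frame" avoids dropping large objects that fill the whole view while all of their corners fall outside it.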