Does anyone have official information about this subject?

We keep speculating on game engines vs. DCCs, but it would be highly interesting to hear a lead developer’s opinion on this general direction we are heading in.

In my understanding, it just limits the maximum texture size and subdivision.

I don’t want that. I want oversized textures to be resized, always keeping the texel size smaller than the pixel size.
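To make that wish concrete, here is a rough sketch of the kind of rule I have in mind. It is purely illustrative and not something Blender or Eevee does as far as I know; `target_resolution` and `pixels_covered` are hypothetical names. The idea is to pick the smallest power-of-two size that still covers the on-screen pixels, and never upscale past the original.

```python
def target_resolution(texture_size, pixels_covered):
    """Return the smallest power-of-two size >= pixels_covered,
    capped at the original texture size (hypothetical rule, for illustration)."""
    size = 1
    # Double until the texture has at least one texel per covered pixel,
    # but stop once we reach the original texture size.
    while size < pixels_covered and size < texture_size:
        size *= 2
    return min(size, texture_size)


# An 8192 px texture on an object spanning about 900 screen pixels.
print(target_resolution(8192, 900))   # 1024
# A 2048 px texture that is already too small is left at its own size.
print(target_resolution(2048, 5000))  # 2048
```

With this rule the texel stays no larger than a pixel whenever the source texture allows it, and oversized textures shrink to the next power of two above the screen coverage.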

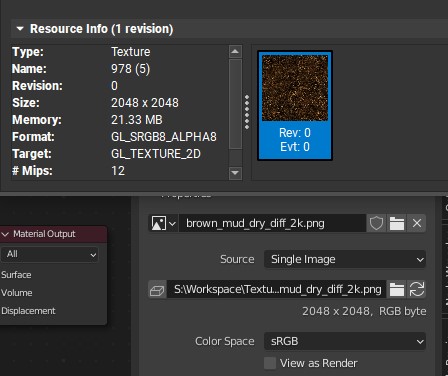

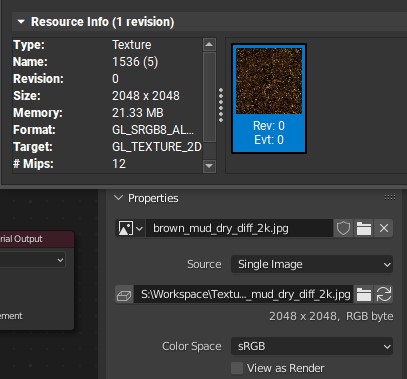

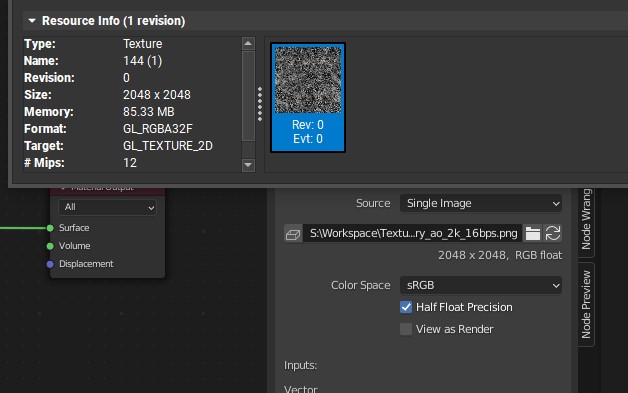

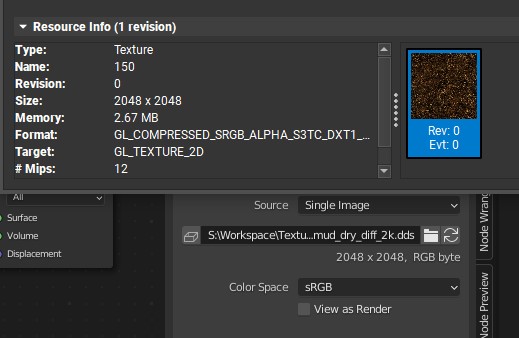

No. All image formats except DDS are loaded as plain RGBA bitmaps, 8 or 32 bits per channel, in Eevee.

And mipmaps are generated by default.

Examples / proof

2048 * 2048 * 4 (channels) * 1 byte (8 bits) = 16 MB

16 MB * 1.333(3) (mipmaps) = 21.33 MB
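The same arithmetic as a small script, in case anyone wants to plug in their own texture sizes. This is just a sketch of the calculation above, assuming an uncompressed texture with a full mip chain down to 1x1; `texture_bytes` is my own helper name, not a Blender function.

```python
def texture_bytes(width, height, channels=4, bytes_per_channel=1, mipmaps=True):
    """Approximate memory for an uncompressed texture, in bytes."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * channels * bytes_per_channel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, down to a minimum of 1.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


MIB = 1024 * 1024
base = texture_bytes(2048, 2048, mipmaps=False)
full = texture_bytes(2048, 2048, mipmaps=True)
print(base / MIB)            # 16.0
print(round(full / MIB, 2))  # 21.33
```

The mip chain adds roughly one third on top of the base level, which is where the 1.333 factor comes from.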

Screenshots from Nsight:


The only exception is DXT compression in DDS:

Perhaps the reason that you don’t understand the interest in Eevee is because you’re forgetting that just because you don’t use it, and don’t like the results you get with it, doesn’t mean that other people aren’t using it, and can’t get good results with it. This comes up ALL the time with the Cycles-only crowd, and you guys just need to stop and remember you’re not the only ones using Blender.

I’ve seen plenty of people who are good enough at Eevee to get results comparable to what some artists are doing in Unreal, and I’ve seen artists who get crappy results in Unreal because it’s still not quite the “Make Art” button people hope it will be from the tech demos and renders they’ve seen by professional artists. Like Unreal, people who care to get good at Eevee will (not that there is any need to learn to use Eevee properly if Cycles is more appropriate for your use-case). But sure, since Eevee is only a couple years old, it obviously doesn’t have the same maturity and capability as an older, much more developed real time render engine - and the smaller team ensures Eevee will likely never catch up completely.

Which puts Eevee versus Unreal in exactly the same boat as Blender sculpting versus Zbrush, Blender texture painting versus Substance, Blender cloth physics/sewing versus Marvelous. And yet, people generally don’t suggest removing or ceasing development on Blender features like these just because they can be done better in other programs.

Also remember that Cycles is only usable if you have either A) an amazing GPU that can render the scene quickly, B) a lot of time and patience to wait for a less powerful GPU, or worse, a CPU, to render, or C) a mix of the above. For users who don’t have those, and for whom perfect realism isn’t the goal, Cycles is not an adequate replacement for Eevee.

And, don’t forget, not everyone is only interested in photorealism. Cycles is for photorealism. Eevee is for NPR.

And another thing that is fundamental, imho, is that having a raster 3d engine that looks and feels similar to Unreal/Unity is important for game dev and speeds up the process a lot. When creating assets, the ability to preview and even showcase an asset in a way that is comparable to Unreal is important. It just saves a lot of time. This is the whole reason why Marmoset, for example, is so popular: you have a great real-time engine that is easy to use and looks the same as Unreal.

Once Eevee Next is here, with better global illumination and displacement support, it’ll be a game changer for me.

And btw I’m speaking about gamedev, but really, as VFX uses Unreal more and more, and VR also becomes more common, I can’t overstate how handy it is to have Eevee. And this is without speaking about animation and NPR. It was a great strategic choice by the Blender team to work on it. It’s far from a “useless piece of code”, as someone above wrote!

Not sure I would agree with that. I’ve been able to get pretty good realism from EEVEE, and so has Ian Hubert:

"This was also all (apart from the live action elements), rendered in the realtime eevee render engine, which is pretty darn cool. "

If VRAM is low, is it smart enough to reduce the VRAM accessed per draw call? What I mean is: when low on VRAM, can it keep working automatically by accessing only the smaller mip levels?

I know that Eevee requires everything a single draw call uses to be resident in VRAM, so it can work without crashing even when the scene uses more memory than there is VRAM.

Are you sure this is related to Blender and not your assets?

Games have for decades rendered scenes where you can move the camera around and have forests or monsters, but have you checked what this requires from application programming interfaces or game engines? There are limits on mesh sizes, the number of textures, shaders, recommended texel density…

As far as I know, CryEngine/DirectX 11 is limited to 128 textures and shaders, texel density is 1/512m, and everything on screen is packed to fit these limitations. Textures may use an array to access similar types of textures with the same shader, but this can be worked around in Blender by using bigger textures.

So you may need to fit your village to two textures, your forest to one texture, instance meshes in houses and trees… And all textures and geometry need to fit in VRAM.

Also, landscapes should not be one big mesh… they should be split into 64x64 or 128x128 mesh tiles like in Unreal Engine.
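As a rough illustration of the tiling idea, here is a sketch that works out the tile ranges for a landscape grid. It only computes index ranges and does not touch any Blender or Unreal API; `split_into_tiles` and the grid sizes are made up for the example.

```python
def split_into_tiles(quads_x, quads_y, tile_size=64):
    """Return (x0, y0, x1, y1) quad ranges covering the grid in tile_size chunks."""
    tiles = []
    for y0 in range(0, quads_y, tile_size):
        for x0 in range(0, quads_x, tile_size):
            # Clamp the last row/column of tiles to the grid edge.
            x1 = min(x0 + tile_size, quads_x)
            y1 = min(y0 + tile_size, quads_y)
            tiles.append((x0, y0, x1, y1))
    return tiles


# A 256x256 quad landscape split into 64x64 tiles.
tiles = split_into_tiles(256, 256, tile_size=64)
print(len(tiles))  # 16
print(tiles[0])    # (0, 0, 64, 64)
```

Each tile can then be a separate mesh object, so only the tiles near the camera need to be visible or detailed.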

Blender can’t have everything, but if you need realtime rendering then Blender + Unreal is a great combination; they complement each other. Blender for modelling / texturing / animation and Unreal to assemble the scene and render.

Plus Unreal is also free so there is no reason to not use it.

Those are good points. I often wonder how many people who express frustration that Eevee can’t do everything Unreal can are people who’ve actually used Unreal or other game engines, or people who’ve seen gorgeous tech demos designed to sell everyone on the limitless potential of the game render engine (as marketing material is supposed to do), without really recognizing what kinds of optimizations are going on behind the scenes or that they’re likely rendering the scenes on cutting edge hardware that not everyone has at home. Unreal is a good engine, and can do things Eevee can’t, but Eevee is still a very capable engine and can make all kinds of beautiful images.

In many cases people are mistaking their scene’s issues for Eevee issues and not recognizing the role their own workflow and knowledge gaps play in creating them. Changing render engines won’t fix poor optimization or gaps in knowledge of proper shaders, lighting, and render settings. There was one Blender user who uploaded Eevee and UE5 renders of their character, and they’re so good at Eevee that the Eevee ones were still better, in my opinion at least.

(Not that there aren’t also things Unreal or Marmoset or whatever can do in real time that I’m jealous of. I’d really like anisotropic shading and also a decent sky model that works in Eevee. I think even Sketchfab can do anisotropic shading. There’s no technical reason they can’t add it to Eevee; it just hasn’t been a development priority.)

Eevee is also undergoing a major rewrite that is bringing large numbers of enhancements and general improvements to all areas. This is also the reason why there has not been a lot of work on the current version. Clement just recently posted on devtalk that he has started the next stage which involves the lighting engine.

Yep. I’m really excited for that when it’s finally released, and do recognize that’s a big part of why other eevee improvements haven’t been a development priority, unless they’re adding some of them in as part of the rewrite. I know there are definite plans to convert Nishita to Eevee so that one could happen. But yes, anything they try to add to Eevee now would be twice the work since they’d have to write it for both the current and rewritten version, and it’d be less developer hours going into the rewrite slowing that down too.

It’s just that anisotropic had been discussed well before they announced the Eevee rewrite, and the last time a developer was asked about anisotropic in Eevee, it seemed like they no longer had any plans to add it anytime soon, if ever. I’ve experimented with a lot of methods of faking anisotropic for hair, and coming up with one method that looks good on all hair models in all lighting conditions has been problematic. On the other hand, I’ve had plenty of success with other things in Eevee, so while anisotropic shading remains a sore point, I’m still happy enough with it that it hasn’t driven me to Unreal or Marmoset.

Also, you wouldn’t happen to have a link to that devtalk post, would you?

EEVEE Next - Blender Development - Blender Developer Talk

To keep it clean and easy to reference, the thread is closed to feedback. The master builds from the buildbot actually allow you to taste the new Eevee with an experimental feature flag, but it does not yet do enough to make it usable for production.

Thanks. Which build? Not seeing it in the 3.3 beta.

Yes, the devs disable the experimental features for beta and release builds (in part so the bug tracker does not get filled with reports for things they do not consider ready for production).

The new Eevee for instance is definitely not something you can start using now.