Everything you mention here can already be achieved in Cycles as far as I can see. All those are tremendously expensive operations of course and they could certainly be optimized with this kind of approach.

For the Blender world, this is certainly an exotic use case. This kind of rendering technology is pointless if you don’t have the simulation tools to produce the data it needs. So it would not be possible to integrate it into Cycles alone; it would require changes in several areas of Blender and, as such, an immense investment.

In the near and mid-term future, I expect the Blender Foundation and especially Blender Animation Studio to invest in 2.8 and its logical follow-up features. As long as no studio or community is willing to invest in this, it is very unlikely to happen, in my opinion.

Does Alembic support particles already?

EDIT: To answer my own question: it does not support particle systems per se, but it does seem to support particles as animated points. This is from the documentation:

Particles are exported as animated points.

So we have all we need. Sometimes you don’t need a super complex simulation to take advantage of this; check this video:

This was done with Molecular and rendered in Cycles, using sphere instances I presume (I’m not sure), but it could benefit from this kind of optimization to make it usable in production.

Also check this one:

The foam could take advantage of this solution to increase its particle count and achieve better shading without a big render time penalty.

I understand that when you say the change and investment would be BIG, it’s because you are thinking of a full new simulation framework in Blender, which could be great but, as you say, could take A LOT of time and money. But the fact is that in production, simulations are often done outside the main app; that is what RealFlow exists for. So this could be great even without that new simulation framework: as useful as Alembic, which has proven to be an awesome tool even though it was not very valued before it came to Blender, and as useful as OpenVDB, which I hope gets fully integrated into Blender sooner rather than later.

But in any case, it’s great to know that this can be achieved. I’ll try the instancing solution with a sphere (if someone could point me in the right direction, it would be appreciated).

Cheers!

For rendering vertices, there are two ways right now which could theoretically work (with drawbacks of course).

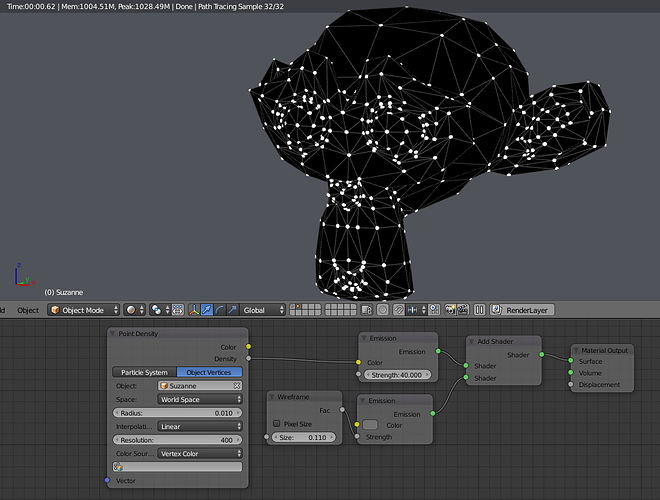

1) A Point Density texture with a very small radius around the points and a high resolution. It will really jack up your render times, though, since you need a very small step size (in the render settings).

2) A sphere mesh instanced on the vertices using DupliVerts (Object properties, Duplication panel). This renders much faster than the first option, but the viewport will chug if enough of them are visible at once. That drawback may be specific to 2.79, though, as there’s a chance it will get much better in 2.8.

True point rendering might allow things that the two ideas above can’t provide, but I don’t think there’s enough user demand for such a feature to make it a priority.

Adding analytical sphere intersection and shading to Cycles, along with the ability to treat vertices as instances of those spheres, would be a trivial, sub-one-hour project. It’s just not been a goal of the project since there are already workarounds.

A proper implementation would take at least a week, I expect. You need UI, Blender export, attributes, BVH building, BVH traversal and optimized point intersection, motion blur, multiple importance sampling for emission, fixes for GPU performance regressions, release notes, docs, tests, …. If this took less than an hour, I would have done it years ago, since I think this would be a great feature to have in Cycles.
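Of the items in that list, BVH building is the easy part for spheres: each point only needs an axis-aligned bounding box built from its center and radius, and for linear motion blur the box covering the whole shutter interval is just the union of the boxes at shutter open and close. A minimal Python sketch, assuming center and radius are interpolated linearly; `Sphere`, `sphere_aabb` and `motion_aabb` are hypothetical names, not Cycles code:

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Sphere:
    center: Vec3
    radius: float


def sphere_aabb(s: Sphere) -> Tuple[Vec3, Vec3]:
    """Axis-aligned bounds: the center minus/plus the radius on every axis."""
    lo = tuple(c - s.radius for c in s.center)
    hi = tuple(c + s.radius for c in s.center)
    return lo, hi


def motion_aabb(start: Sphere, end: Sphere) -> Tuple[Vec3, Vec3]:
    """Bounds over the shutter interval, assuming center and radius move linearly.

    Each face of the box is then linear in time, so its extreme values occur at
    shutter open or close, and the union of the two endpoint boxes is enough.
    """
    lo0, hi0 = sphere_aabb(start)
    lo1, hi1 = sphere_aabb(end)
    return tuple(map(min, lo0, lo1)), tuple(map(max, hi0, hi1))
```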

3) Emit a single particle from each vertex.

Why are you guys making this so complicated?

The way I see it, there are two current “use cases” for point clouds, which probably make this a low priority (although, to agree with Brecht, an interesting one if it could be done easily).

The first case is strictly for review in an engineering context, and is already well covered by both laser-scanner manufacturers (Leica, Faro, etc.) as well as major engineering developers (Bentley, Autodesk, etc.) without any conversion gymnastics. This doesn’t require any kind of high quality rendering, just good-enough visual comparison.

The second case is high-quality rendering; however, that’s pretty well covered by the various point cloud / image sequence to mesh converters available. Why bugger about with several million points (and that’s a small one) when what you’re really after is the objects they describe? I’ve done some rendering with raw point clouds and the results are rarely appealing.

Hey Brecht, what exactly are you saying would take a week to implement?

Implementing spheres as Cycles ray tracing primitives, so that you are not using mesh instances to represent particles.

Oooooh, I see. I thought particle instances were render-time instances as well? That’s why they render so fast. So you’re only using the data of one sphere when you render; it’s not like you have all those spheres hanging out there in space, right?

If not, and all the geo that makes up instances needs to be turned into real geo before rendering, then I think implementing render time instancing would be much more helpful.

Yes, they are instanced geometry. But you don’t need that geometry: you can describe a sphere primitive by its center position and radius. You don’t need triangles for it => much faster intersections and slightly lower memory consumption.
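To make that concrete: with only a center and a radius, a ray hit is the smallest valid root of a quadratic, one test per sphere, instead of many ray-triangle tests against a tessellated mesh. A minimal Python sketch of the textbook analytic ray-sphere intersection (the function name `ray_sphere` and the `t_min` offset are illustrative choices, not taken from Cycles):

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_sphere(origin: Vec3, direction: Vec3, center: Vec3, radius: float,
               t_min: float = 1e-6) -> Optional[float]:
    """Nearest hit distance t >= t_min along the ray, or None if there is none.

    Solves |o + t*d - c|^2 = r^2, written as a*t^2 + 2*half_b*t + c = 0.
    `direction` need not be normalized; t is then in units of |direction|.
    """
    oc = (origin[0] - center[0], origin[1] - center[1], origin[2] - center[2])
    a = dot(direction, direction)
    if a == 0.0:
        raise ValueError("direction must be non-zero")
    half_b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = half_b * half_b - a * c
    if disc < 0.0:
        return None  # the ray's line misses the sphere entirely
    sqrt_disc = math.sqrt(disc)
    # Nearer root first; the farther root covers rays starting inside the sphere.
    for t in ((-half_b - sqrt_disc) / a, (-half_b + sqrt_disc) / a):
        if t >= t_min:
            return t
    return None  # both roots lie behind the ray origin
```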

I see a few mentions of point density. Remember point density does not have to be used with volumetrics. It works fine for surfaces too:

The problem: point density is a memory hog at usable resolutions, as you can see. It needs a better data structure; all that empty space is wasted.
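The arithmetic behind “memory hog” is simple: a dense grid stores every voxel, occupied or not, so memory grows with the cube of the resolution. A rough Python sketch, assuming one 32-bit float per voxel (the actual Point Density storage may use more channels per voxel) and, for comparison, a hypothetical sparse layout that keeps only occupied voxels plus a three-integer key each:

```python
def dense_grid_bytes(resolution: int, bytes_per_voxel: int = 4) -> int:
    """Memory for a dense resolution^3 grid (assumption: one float32 per voxel)."""
    return resolution ** 3 * bytes_per_voxel


def sparse_grid_bytes(occupied_voxels: int, bytes_per_voxel: int = 4,
                      bytes_per_key: int = 12) -> int:
    """Hypothetical sparse layout: each occupied voxel plus an (i, j, k) int32 key."""
    return occupied_voxels * (bytes_per_voxel + bytes_per_key)


for res in (100, 200, 400, 800):
    mib = dense_grid_bytes(res) / 2**20
    print(f"{res}^3 dense grid: {mib:,.0f} MiB")
```

At resolution 400 the dense grid alone is 256,000,000 bytes (about 244 MiB) under this assumption, whereas a sparse layout holding, say, 640,000 occupied voxels (an illustrative 1% of that grid, not a measured figure) would need about 10 MB. That gap is the “empty space” problem, and it is why sparse structures such as OpenVDB exist.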

An analytical sphere never has to be turned into mesh geometry, and that’s its huge advantage. It has no geometry besides the point and radius. Computing intersections between rays and spheres is a lot faster than computing intersections between rays and triangles. Since a mesh sphere consists of lots of triangles, you can save lots of intersection checks by using an analytical sphere instead.
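To put rough numbers on that: Blender’s default UV sphere has 32 segments and 16 rings, which triangulates to 960 triangles, versus a single center and radius for the analytic version. A BVH means a ray does not test all of those triangles, but every extra triangle still adds BVH nodes, memory and traversal steps. A small Python sketch of the triangle count (the function name is hypothetical, and the particle count is only illustrative):

```python
def uv_sphere_triangles(segments: int, rings: int) -> int:
    """Triangles in a triangulated UV sphere.

    The two polar caps are fans of `segments` triangles each; the remaining
    (rings - 2) latitude bands are quads, which split into 2 triangles apiece.
    """
    if segments < 3 or rings < 2:
        raise ValueError("need at least 3 segments and 2 rings")
    return 2 * segments + 2 * segments * (rings - 2)


particles = 1_000_000  # illustrative particle count
per_sphere = uv_sphere_triangles(32, 16)  # Blender's default UV sphere: 960
print(f"{per_sphere} triangles per sphere; "
      f"{particles * per_sphere:,} if every particle were real mesh geometry")
```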

If you are dealing with instances, it is not unlikely that the camera gets very close at some point, so you might have to subdivide the instance further. You are never going to run into this kind of issue with an analytical sphere.
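The subdivision problem follows from simple geometry: a circle approximated by an n-sided polygon deviates from the true surface by at most the sagitta, r(1 - cos(π/n)). That error is fixed in object space, so it covers more pixels the closer the camera gets, while an analytic sphere has no faceting at all. A Python sketch (function names hypothetical) that computes the error and the segment count needed to stay under a tolerance:

```python
import math


def facet_error(radius: float, segments: int) -> float:
    """Max gap between a circle and its inscribed `segments`-gon (the sagitta)."""
    return radius * (1.0 - math.cos(math.pi / segments))


def segments_for_error(radius: float, max_error: float) -> int:
    """Smallest segment count whose facet error stays within max_error.

    facet_error <= e  <=>  pi / n <= acos(1 - e / r)  <=>  n >= pi / acos(1 - e / r)
    """
    if not 0.0 < max_error < radius:
        raise ValueError("max_error must be between 0 and radius")
    return math.ceil(math.pi / math.acos(1.0 - max_error / radius))
```

For Blender’s default 32 segments the deviation is roughly 0.5% of the radius: invisible on a distant particle, but it can show up in a close-up.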

Even though the implementation might only take one week for Cycles (which can usually be translated to “one week * Pi”), the data still has to be created or imported somehow. As mentioned, the Alembic importer in Blender doesn’t support it, and no physics simulation in Blender supports it either. So at least one of those would be necessary.

Besides that, a viewport visualization is needed. As there would be a huge number of particles, performance optimizations or maybe simplifications would be needed. Reading the data for playback would likely be the bottleneck, but if I remember correctly, some work has been done in that area for Alembic playback.

Mmmm… wait… Alembic in Blender DOES support it; that’s what I checked in the docs. Particles are exported as animated vertices; you just need something to replace the vertices with, in this case the analytical sphere. Why do you keep saying it’s not supported?

Cheers.

Sorry, I assumed it had to be part of a particle system, which of course is not necessary, as you correctly pointed out. Just vertex data would be sufficient, and that is supported by Blender’s Alembic importer.

Isn’t option 2 less complicated than setting up a particle system and giving it the proper settings and the correct number of particles?

As far as I know, they both work with instancing, so I’d prefer DupliVerts for this task.

Exactly. What I’m not sure about is whether those vertices store enough data for motion blur, so I don’t know if importing an Alembic simulation from Houdini into Blender and using DupliVerts, for example, would give us correct motion blur in the render.
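As general background on that question (not a statement about what Blender’s Alembic importer does): deformation motion blur needs each point’s position at a neighbouring time, which only works if point i is the same particle in both frames. A minimal Python sketch of deriving per-point velocities by finite differences; the function name is hypothetical, and it deliberately refuses to guess when the point count changes, for example when particles are born or die:

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def finite_difference_velocities(prev: Sequence[Vec3], curr: Sequence[Vec3],
                                 dt: float) -> Optional[List[Vec3]]:
    """Per-point velocity (curr - prev) / dt, or None if the point counts differ.

    Matching points by index is only valid when point i is the same particle in
    both frames; a changing count means that assumption is broken.
    """
    if dt <= 0.0:
        raise ValueError("dt must be positive")
    if len(prev) != len(curr):
        return None  # topology changed: no reliable per-point correspondence
    return [tuple((c - p) / dt for p, c in zip(pp, cc)) for pp, cc in zip(prev, curr)]
```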

In my opinion, that’s only relevant if you are actually trying to get funding for that functionality or if you have a coder who will implement it. In that case, it is something that should be discussed with the coder.

No, it’s not, because it is also relevant if we want to use that Alembic vertex cache with DupliVerts and polygonal spheres, as things are today.

But in any case, as I said in the first post, I’m not asking anyone to implement this. I just wanted to bring it to the table, since I find this feature is generally absent in path tracers, and it could be a great one. After all, Krakatoa has a whole market to itself; it would be great to create some competition from inside Blender.

If someday I can hire a full-time developer at my studio, I’ll dedicate part of their time to this sort of development. But as of today I cannot fund it myself (it would be great to know how much funding is needed for that week or two of development, and I say this from my absolute ignorance about these things related to Blender), and I cannot develop it myself. Still, it’s cool to know that there is a solution, and maybe one day someone will be able to implement it.

Cheers!

Why would you use a polygonal sphere?