(TL;DR: How do you set up normal maps without UVs?)

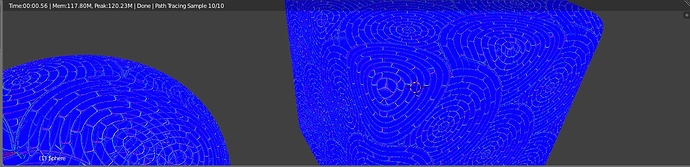

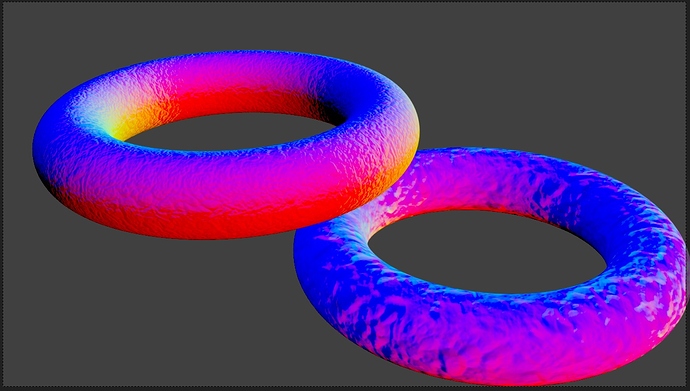

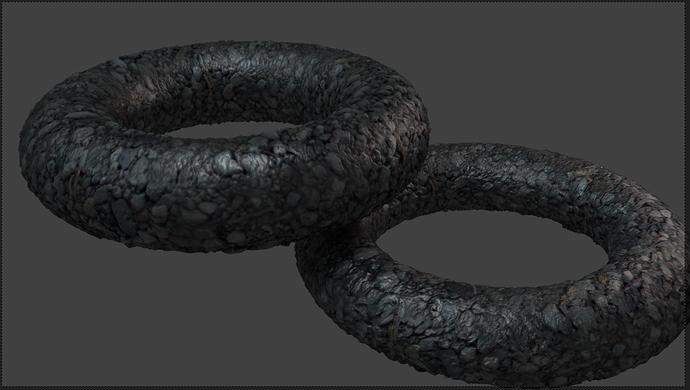

I have been working on a Cycles node setup using the Blended Box mapping technique, but I cannot work out how to properly set up a normal map this way.

For those who do not know how this works (I suspect most have not heard of it), Blended Box Mapping is a way of projecting textures onto an object from the X, Y and Z planes and blending the edges where those projections meet. This is great for more natural, organic textures where you do not want to UV unwrap objects (for speed, workflow purposes, procedural generation, or parametric techniques).

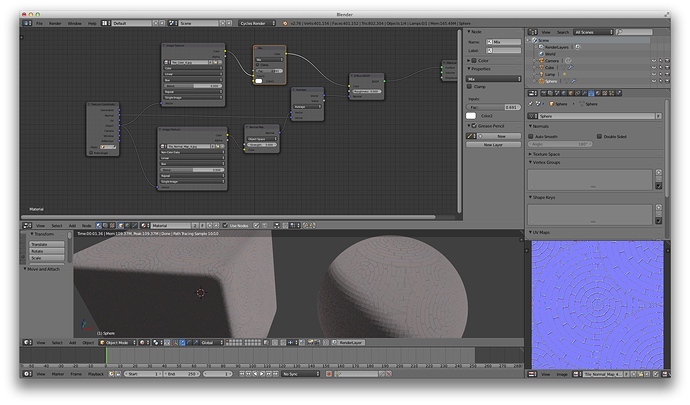
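To make the projection-and-blend idea concrete, here is a minimal Python sketch of the general triplanar (blended box) technique. It is not the Tears of Steel node setup itself, and it rests on a few assumptions. The texture is represented as a hypothetical function of two coordinates. A hypothetical `sharpness` exponent stands in for the blend control, and Blender's own Box projection blend may compute its weights differently. The `checker` texture is also purely illustrative.

```python
import math


def box_blend_weights(normal, sharpness=4.0):
    """Weights for the X, Y and Z projections, summing to 1.

    `sharpness` is a hypothetical exponent: higher values shrink the
    blended seam toward a hard (unblended) box projection.
    """
    # +X and -X faces share the X projection, so only magnitudes matter.
    ax, ay, az = (abs(c) for c in normal)
    wx, wy, wz = ax ** sharpness, ay ** sharpness, az ** sharpness
    total = wx + wy + wz
    if total == 0.0:
        raise ValueError("normal must be non-zero")
    return wx / total, wy / total, wz / total


def triplanar_sample(texture, position, normal, sharpness=4.0):
    """Blend three planar lookups of `texture` at a 3D `position`."""
    x, y, z = position
    wx, wy, wz = box_blend_weights(normal, sharpness)
    # Each projection drops the axis it looks along:
    # the X projection uses (y, z), Y uses (x, z), Z uses (x, y).
    return wx * texture(y, z) + wy * texture(x, z) + wz * texture(x, y)


def checker(u, v, scale=2.0):
    """Hypothetical procedural texture returning 0.0 or 1.0."""
    return float((math.floor(u * scale) + math.floor(v * scale)) % 2)


if __name__ == "__main__":
    # A point on a top-facing surface reads only the Z projection.
    print(triplanar_sample(checker, (0.3, 0.7, 1.0), (0.0, 0.0, 1.0)))
    # A corner-facing normal mixes all three projections equally.
    d = 1.0 / math.sqrt(3.0)
    print(box_blend_weights((d, d, d)))
```

The weights always sum to 1, so a uniform texture comes out unchanged where the projections overlap. This is why the seams blend rather than brighten or darken. The sketch blends scalar or colour values only.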

I am using the node setup from Tears of Steel as a starting point:

https://mango.blender.org/production/blended_box/

To show what happens when this is done, see this video:

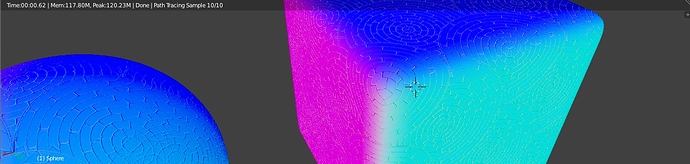

I have also seen this referred to as Triplanar mapping, although I am not sure whether there is any distinction between the two; the example I have seen does use a different node setup. Here is a node setup under that title:

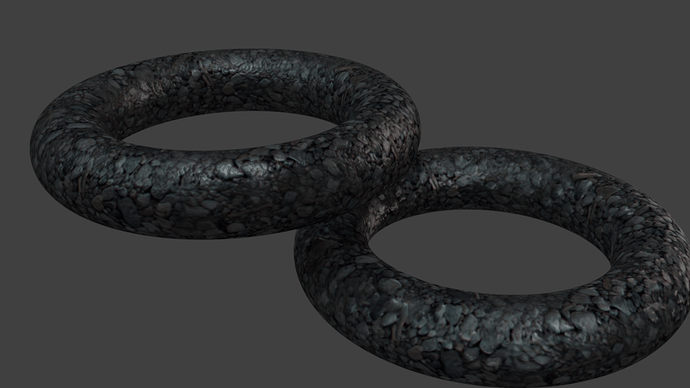

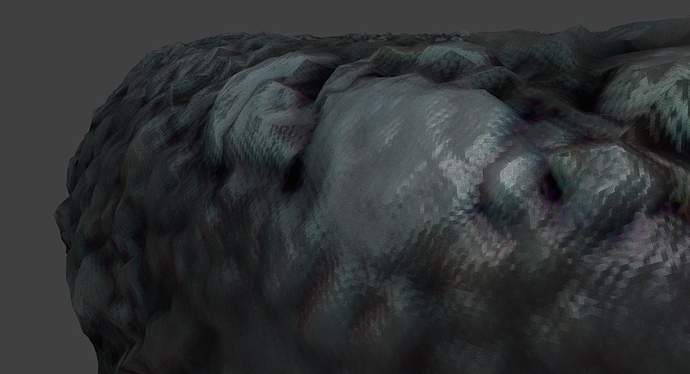

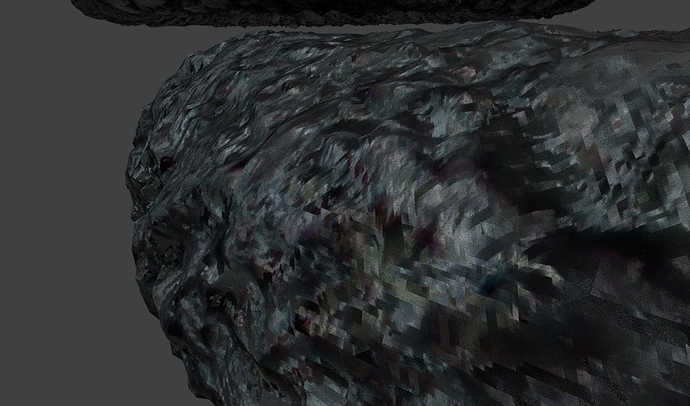

What I have created is a way to add leather and similar textures to clothing, accessories and other items. It lets me separate the texturing process from the assets: once the material shader is complete, I can use it to render newly created items quite quickly, without having to UV unwrap multiple complex shapes. This is about speed and efficiency.

To further improve render speed, I have also added a matcap node setup just for lighting reflections.
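For readers unfamiliar with matcaps, the usual lookup works like this. It takes the surface normal in camera (view) space and uses its X and Y components as coordinates into an image of a lit sphere. No UVs or scene lights are needed. The Python sketch below illustrates that general technique, not the exact node setup used here. It assumes a unit-length normal and a hypothetical world-to-view rotation passed as nested lists.

```python
def matcap_uv(world_normal, view_rotation):
    """Map a unit world-space normal to matcap (u, v) coordinates in [0, 1].

    `view_rotation` is a hypothetical 3x3 world-to-view rotation given as
    nested lists (rows). In view space the camera looks down -Z, so a
    surface facing the camera has a view normal close to (0, 0, 1).
    """
    # Rotate the normal into view space (matrix-vector product).
    nx, ny, _nz = (
        sum(view_rotation[row][col] * world_normal[col] for col in range(3))
        for row in range(3)
    )
    # The sphere in a matcap image fills the texture, so the view-space
    # X and Y components map directly from [-1, 1] to [0, 1].
    return nx * 0.5 + 0.5, ny * 0.5 + 0.5


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(matcap_uv((0.0, 0.0, 1.0), identity))  # facing the camera: image center
    print(matcap_uv((1.0, 0.0, 0.0), identity))  # facing right: right edge
```

Because the lookup depends only on the view-space normal, the reflections follow the camera rather than the scene lighting. This is what makes matcaps cheap to render.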

Everything works as it should, but I cannot work out how to connect and use the normal maps I have created in Bitmap2Material. I may have stumbled across the solution, only to lose it again, so here I am.

Does anybody know how to set up normal maps without UVs in this case?

Looking forward to your input.

Chris Lee