Pretty cool for gaming, not completely sure about 3D. They do say something about raytracing but it’s not very clear (at 24m50s).

About 99% of the presentation was about gaming (including the raytracing bits); the only part that wasn’t was where they talked about AV1 hardware acceleration. They avoided direct comparisons with “the competition” and decided to play the value card with things like “the FASTEST gpu under $1000”.

This will definitely make them more appealing to gamers and budget-oriented folks (no need for a new power supply/PC case, no adapters, no melting worries, “only” 355W, DisplayPort 2.1 “show me them 900fps”).

For Blender though? …

A lot of people are considering it good enough though, because the 7xxx series will reportedly be much better in price/perf, perf/watt, and dollars per gigabyte (as far as VRAM goes). I would not be surprised if sending the power consumption skyward for that 10 percent performance boost is something a few AIB vendors will do.

In fact, with Intel in the game now, Nvidia is the stingiest of the three companies in terms of the amount of VRAM you get (which currently feels constricting as far as rendering goes).

I am in the process of getting a new PC, purely for Blender. What do you think? Should I get the AMD RX 7900 XTX for 999 dollars (which means waiting) or the 1,599 dollar RTX 4090 (if you can find one)? Both cards have 24GB of memory. Most of my scenes are very heavy, and in the end I have to render on the CPU because the GPU always runs out of memory. Hopefully these 24GB video cards will help. Any recommendation on which pre-built Windows PC maker I should buy from? My budget is 4K. I was looking at HP and Digital Storm.
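For anyone weighing the "dollars per gigabyte" angle mentioned earlier, here is a minimal sketch of the arithmetic using only the two list prices and VRAM sizes quoted in this post. The `cards` dictionary and the `dollars_per_gb` helper are made-up names for illustration, and real street prices will likely differ.

```python
# Hypothetical helper for comparing VRAM cost; not part of any library.
# Prices and capacities are the list figures quoted in the post above.
cards = {
    "RX 7900 XTX": {"price_usd": 999, "vram_gb": 24},
    "RTX 4090": {"price_usd": 1599, "vram_gb": 24},
}


def dollars_per_gb(price_usd: float, vram_gb: float) -> float:
    """Return the list price divided by the VRAM capacity in GB."""
    return price_usd / vram_gb


for name, spec in cards.items():
    cost = dollars_per_gb(spec["price_usd"], spec["vram_gb"])
    print(f"{name}: ${cost:.2f} per GB of VRAM")
```

This only covers memory cost. It says nothing about render speed, which is a separate question in this thread.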

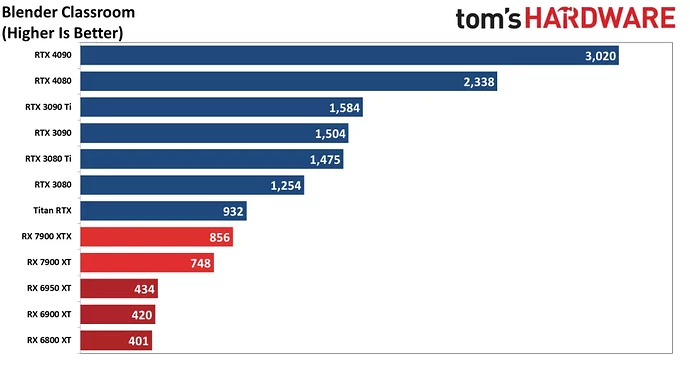

Nvidia is better for Blender by a long shot. AMD GPUs perform, for lack of a better word, terribly in Blender.

Don’t do this. Pre-built PCs are 30-50% more expensive per part, so you’re wasting a ton of money for no good reason. Build your own: it will save you hundreds if not thousands, and you know it will be done right.

Correct me if I’m wrong, but AMD did the trick of adding FP32 to the INT32 pipeline, thus doubling FP32 pipes across the whole arch. It’s the same thing NVIDIA did with Ampere. If true, this will increase rendering performance significantly.

Personally I’m still skeptical until I see third-party benchmarks and a GPU teardown.

Intel has a new GPU and their oneAPI implementation is already good, while years of AMD support through OpenCL in Blender were mediocre. I wouldn’t buy any AMD card until I’m sure their HIP implementation is as stable as CUDA/OptiX. Right now there’s not even raytracing support…

Thanks for the response. I would build it myself if it were for personal use. This is for the workplace, so I don’t want to go through all the hassle; it always comes back to bite you if something goes wrong.

If you can find a cheaper 3090 Ti, I’d get that. Same VRAM, smaller unit, no new power connector issues and with OptiX it will still beat any AMD card.

Unless AMD sort out ray-tracing support for Cycles rendering, they just aren’t going to get all that close to Nvidia.

Well, I watched the announcement of the RDNA3 cards and I was impressed with what AMD were offering. This whole thing about Nvidia being so much faster in Cycles is all due to software and drivers, not raw compute horsepower. With more users buying RDNA3 cards will come more demand and more reason to improve Cycles rendering support.

And another thing: if AMD can win over the gaming crowd, which is MUCH larger than the 3D rendering crowd, then it should by all rights force Nvidia to rethink their 4000 series lineup and introduce MUCH better cards to compete, with much lower price points and increased VRAM, in which case we can take advantage of that and buy the Nvidia card for Blender use…

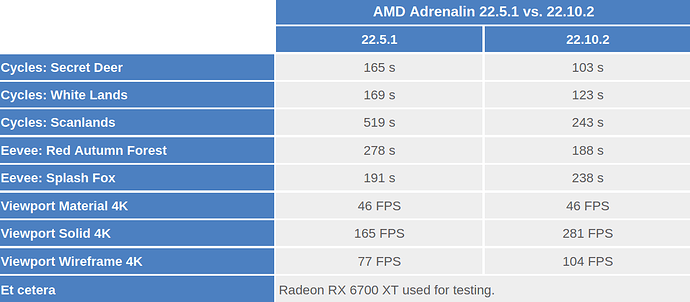

According to Techgage tests, AMD have made some significant improvements in the past 6 months:

But when put in the bigger picture, those improvements look too minimal…

full article:

Partly, but in reality it’s both software and hardware. The hardware part is, for want of a better word, the ray-tracing cores, which get a lot of positive and negative reviews and comments from the ‘gaming crowd’ about how much difference they really make, whether they’re worth the performance (FPS) hit, etc.

But for us and Cycles rendering, RT cores are nothing but a positive and have a MASSIVE impact on rendering times and this is where Nvidia just have a clear lead over AMD. The current expectation is that the new RDNA 3 cards will have the RT performance of around the old Nvidia Ampere cards, which alone will leave them about half the speed of the now new Nvidia Ada cards.

Then comes the second problem, which is software: Cycles just can’t use any of the RT cores on any of the AMD cards, and so, as that Techgage graph shows, AMD just gets pounded into dust (as does Intel for that matter, for the same reason: their RT cores aren’t currently supported either).

It’s frankly a big missed opportunity for both Intel and AMD. The 7900 XT with 20GB VRAM at $900 USD could be a great gaming card while giving near 3090 level Cycles rendering performance at a cheaper price and hopefully more availability, if only RT was supported in Blender.

Thanks, that is a good idea.

First reviews appearing

Appealing price, disappointing power consumption and temperatures. Competitive in general with Nvidia’s cards. For Blender users who need an Nvidia card because of OptiX’s superiority, this is good news, since the 4000 series should come down in price.

https://www.techpowerup.com/review/amd-radeon-rx-7900-xtx/40.html

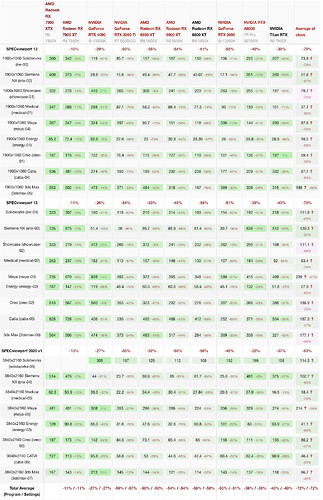

Try to understand the relative importance of that chart, and what that test really tests…

Try to understand that these results are important for users of serious tools and not cheap gaming laptops.

That is an impressive showing.

Nvidia makes different solutions for different markets. The RTX line is not designed for professional work; that’s what the “A” line of their GPUs is for (which replaced the “Quadro” brand). Of course, people who don’t have deep enough pockets to pay for the pro line can make use of the “Studio” drivers for the RTX line (I mean, I doubt anyone would mind losing 5-20 fps in their games when what you really need is precision and stability during work, right?). AMD doesn’t have this problem, since they killed the FirePro brand some years ago; that’s why they optimize for everything, but as usual, the older the card, the better, up to a certain point.

Blender doesn’t have much trouble adapting to it, since its usage of OpenGL 3.xx is mostly generic. Still, OptiX + CUDA > AMD offerings at this time. YMMV.

The RTX line is also designed for professional work; that is why there are Nvidia Studio drivers for GeForce cards. The point is that getting 400 fps vs 350 fps in a viewport is relevant only in marginal situations, and those apps are far, far from being all there is to “professional work”, and what they test is also far from all that is relevant for “professional work”.