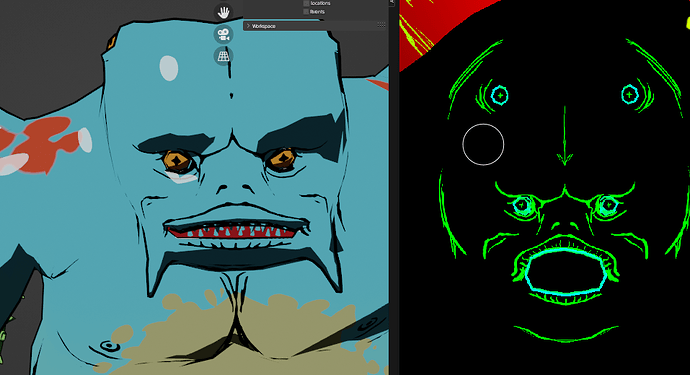

The thing that interests me most is how the textures stay so sharp even in close-ups. Is it simply insane resolution, or some technical trick, like composing them from alpha masks with a cutout? The creator, Deadsound, gave barely any technical details in his making-of; I only know he uses Blender. Maybe he published more info somewhere else?

It might be worth contacting the author and asking! My bet is that it's just insane resolution.

I don't know how they did it in the short, but you can give extra definition to the face, hands and feet, while other areas can use smaller textures. Using UDIMs might help with that.

I didn't see any extreme close-up shots either, like on a single eye or the tip of a finger, and those could be handled separately anyway.

It's flat shaded, so there's no need for maps other than color, which saves memory.

Since the sets are painted in 2D, they can use a standard resolution, so only the characters need to be super high-res.

Finally, since it's flat shaded, it's even possible to render with Cycles on the CPU: after about 16 samples it should probably be anti-aliased enough.

They look like vectorized images.

It could be a custom build reviving the old Vectex project.

But for the sets with occasional details, I think it may just be insane resolution through UDIMs and/or compositing.

And for the characters' scales and feathers, those may be procedural textures, rounded using the Math node and guided by painted ones.
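If that guess is right, the sharpness would come from the procedural value being evaluated at every shading sample instead of being looked up in a fixed grid of texels. A minimal Python sketch of the idea, assuming a made-up grid-of-cells "scale" field (`scale_pattern` and `banded` are hypothetical names, not Blender API); flooring the smooth value into a few bands mimics a Math node set to Floor or Round:

```python
import math

def scale_pattern(u: float, v: float, cells: float = 8.0) -> float:
    """Hypothetical smooth 'scale' field: distance to the nearest cell centre."""
    fu = (u * cells) % 1.0 - 0.5  # position inside the current cell, in [-0.5, 0.5)
    fv = (v * cells) % 1.0 - 0.5
    return math.hypot(fu, fv)     # 0 at the centre, about 0.707 at the corners

def banded(u: float, v: float, bands: int = 3, cells: float = 8.0) -> float:
    """Quantize the smooth field into flat bands, like a Math node set to Floor."""
    value = scale_pattern(u, v, cells) / math.sqrt(0.5)  # normalize to roughly [0, 1]
    level = min(math.floor(value * bands), bands - 1)    # integer band index
    return level / (bands - 1)                           # back to [0, 1]

# Sample very densely (a "close-up"): the output still only takes the band values.
samples = {banded(i / 1000, j / 1000) for i in range(200) for j in range(200)}
print(sorted(samples))
```

However finely it is sampled, the result only ever takes the few band values, so the edges between bands stay hard no matter how close the camera gets.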

The author seemed pretty "talkative"… so he might just tell you:

There seem to be pixel artifacts in his older works, so maybe you're right and there are no special tricks. I can only know for sure by contacting him, though.

This looks amazing! It's 15 years old though, which doesn't inspire much enthusiasm.

I tried using a material made of masks, with a cutout (Greater Than node) and Linear filtering. It does make the result smoother, but small details (like line art) fade at a distance. I guess it could be used for artistic effect. It's some extra work, though.
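A rough Python model of that setup may help explain both effects. `bilinear` stands in for Linear interpolation on the image texture, `cutout` for the Greater Than node at 0.5, and `downsample2x` is a crude box-filtered mip level standing in for the texture seen at a distance; all three are hypothetical helpers, not Blender code, and the mip-level explanation is only one plausible cause of the fading. Thresholding the interpolated value places the hard edge at a sub-texel position (smoother), but a 1-texel line averaged with its neighbours drops to 0.5 and no longer passes the threshold (fading):

```python
def bilinear(mask, x, y):
    """Linearly filtered lookup in a 2D list at continuous texel coords, clamped to the edges."""
    h, w = len(mask), len(mask[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    tx, ty = x - x0, y - y0
    top = mask[y0][x0] * (1 - tx) + mask[y0][x1] * tx
    bottom = mask[y1][x0] * (1 - tx) + mask[y1][x1] * tx
    return top * (1 - ty) + bottom * ty

def cutout(mask, x, y, threshold=0.5):
    """Greater Than after filtering: the result is always exactly 0.0 or 1.0."""
    return 1.0 if bilinear(mask, x, y) > threshold else 0.0

def downsample2x(mask):
    """Average 2x2 blocks: a crude stand-in for a lower mip level seen from far away."""
    return [[(mask[y][x] + mask[y][x + 1] + mask[y + 1][x] + mask[y + 1][x + 1]) / 4
             for x in range(0, len(mask[0]) - 1, 2)]
            for y in range(0, len(mask) - 1, 2)]

# A 1-texel-wide vertical line of "line art" in column 1.
line = [[0.0, 1.0, 0.0, 0.0] for _ in range(4)]

print(cutout(line, 1.4, 0), cutout(line, 1.6, 0))  # the edge falls between texels
far = downsample2x(line)
print(far)
print([cutout(far, x, y) for y in range(2) for x in range(2)])  # the line is gone
```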

Same stuff that's on his YouTube, unfortunately. Little to no technical info.

Oh, I didn't look into this too much… but I thought that on Patreon he might be even more informative…

Yeah, I think the main trick is planning and good organisation. If he knows in advance what kind of shots he wants and how close the characters will be to the camera, the textures can be optimized for that. You're more likely to run into issues if, for instance, you make a character without any particular purpose and only later make an animated sequence with it.

Given the artist, I'm pretty sure the 3D workflow isn't super advanced, just cleverly planned and well organised.

Say a texture is too low-res for a particular shot: I'm sure the solution would be either to change the camera or to make an up-res version of that texture for that shot, and maybe switch back manually to the lower-res image afterwards.
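How much resolution a shot needs can be estimated before painting anything. A minimal sketch, assuming a roughly front-facing surface and a uniform UV layout (the function name and parameters are hypothetical): aim for about one texel per rendered pixel, then round up to a power of two.

```python
import math

def required_texture_size(frame_width_px: int, coverage: float,
                          uv_fraction: float = 1.0) -> int:
    """Rough texture size (texels per side) for about 1 texel per rendered pixel.

    frame_width_px: render width in pixels
    coverage:       fraction of the frame width the textured part spans on screen
    uv_fraction:    fraction of the texture width that part occupies in UV space
    """
    texels_needed = frame_width_px * coverage / uv_fraction
    # Round up to the next power of two, a common texture size convention.
    return 2 ** math.ceil(math.log2(texels_needed))

# Medium shot: character spans 30% of a 3840 px wide frame, body uses the whole UV width.
print(required_texture_size(3840, 0.3))
# Close-up: face fills a 1920 px frame but only takes a quarter of the UV width.
print(required_texture_size(1920, 1.0, uv_fraction=0.25))
```

The second case shows why a close-up on a small UV island is what pushes a character toward 8K.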

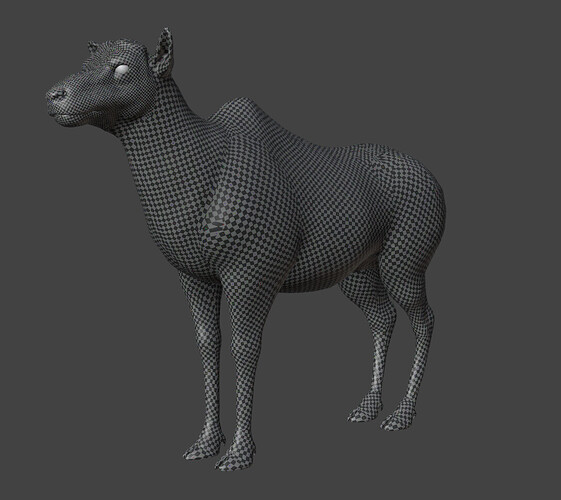

Here is a little test I did with an 8K image:

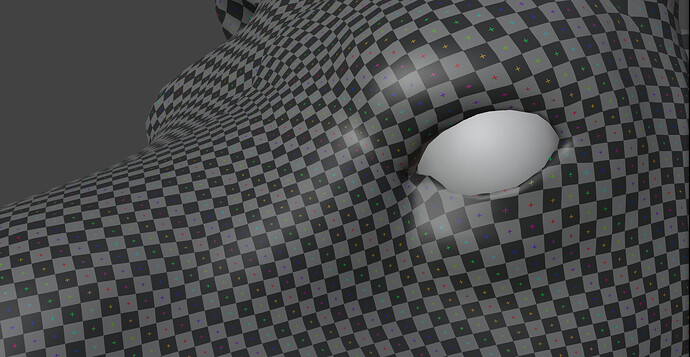

Close-up shot:

The limit of the texel density, since the cross is 1 px wide:

That's one 8K texture, so 256 MB for one character.
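The 256 MB figure follows from assuming the texture is held uncompressed as 8-bit RGBA (4 bytes per pixel) with no mipmaps; a quick check in Python, with the same texture as 32-bit float for comparison:

```python
MIB = 1024 * 1024

def texture_bytes(width: int, height: int, channels: int = 4,
                  bits_per_channel: int = 8) -> int:
    """Size of an uncompressed texture in memory (no mipmaps, no padding)."""
    return width * height * channels * bits_per_channel // 8

print(texture_bytes(8192, 8192) / MIB)                       # 256.0 (8-bit RGBA)
print(texture_bytes(8192, 8192, bits_per_channel=32) / MIB)  # 1024.0 (32-bit float RGBA)
```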

Two 8K textures are probably fine for a hero character, and one is probably enough for secondary characters.

If a shot has 4 hero characters: 4 × 512 = 2048 MB

10 unique secondary characters: 10 × 256 = 2560 MB

That totals 4608 MB and still leaves a lot of room for props and sets, which are probably lower res. And this is a corner-case shot that could be rendered on the CPU if needed.

Shots with only a few characters can probably fit on an average GPU.
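The same budget can be written as a small helper to check other shot layouts. The per-character figures come from the posts above; the 6 GB card and the 2048 MB allowance for props, sets and everything else are hypothetical numbers chosen for illustration:

```python
# Figures from the posts above; each 8K texture assumed to be 8-bit RGBA (256 MB).
TEXTURE_8K_MIB = 256
HERO_MIB = 2 * TEXTURE_8K_MIB       # two 8K textures per hero character
SECONDARY_MIB = 1 * TEXTURE_8K_MIB  # one 8K texture per secondary character

def character_textures_mib(heroes: int, secondaries: int) -> int:
    return heroes * HERO_MIB + secondaries * SECONDARY_MIB

def fits_in_vram(heroes: int, secondaries: int, vram_mib: int,
                 other_mib: int = 0) -> bool:
    """other_mib: hypothetical allowance for props, sets and everything else."""
    return character_textures_mib(heroes, secondaries) + other_mib <= vram_mib

print(character_textures_mib(4, 10))                           # the corner-case shot
print(fits_in_vram(4, 10, vram_mib=6 * 1024, other_mib=2048))  # too much for a 6 GB card
print(fits_in_vram(2, 3, vram_mib=6 * 1024, other_mib=2048))   # a smaller shot fits
```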

If the line art is done with the Line Art modifier on a Grease Pencil object whose Stroke Thickness is set to Screen Space, it does not fade at a distance and it adapts to the camera's point of view.

This looks promising. On that topic, what happens technically if I exceed the VRAM in Eevee? Will it prevent rendering altogether? If so, a stripped-down Cycles setup (only a few samples) might be the better option.

I can only try to answer this from a primitive technical viewpoint. It seems pretty clear: the textures are huge, thus sharp, but they also use very few colours, so they're lightweight. It's like saving the same image as a minimally compressed JPG vs., say, an 8-colour PNG. A HUGE image can thus be only a few KB while being razor sharp. If you compress the JPG down to that size, it becomes a mess.
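The file-size side of this is easy to check. PNG's compression step is DEFLATE, which Python exposes as `zlib`; this sketch skips PNG's per-row filtering and headers, so it is not a real PNG encoder. It compares a flat-coloured buffer with a noisy one of the same dimensions. Note that the decompressed buffers keep their full size, which is what a program has to work with after loading:

```python
import os
import zlib

WIDTH, HEIGHT = 512, 512

# Flat-coloured RGB image: 3 colours laid out in 64-pixel-tall horizontal bands.
palette = [bytes((230, 80, 40)), bytes((30, 30, 30)), bytes((250, 240, 220))]
flat = b"".join(palette[(y // 64) % 3] * WIDTH for y in range(HEIGHT))

# Same dimensions, but every byte random: the worst case for compression.
noisy = os.urandom(WIDTH * HEIGHT * 3)

for name, raw in (("flat", flat), ("noisy", noisy)):
    packed = zlib.compress(raw, 9)
    assert zlib.decompress(packed) == raw  # DEFLATE is lossless
    print(f"{name}: {len(raw)} bytes raw, {len(packed)} bytes compressed")
```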

They were possibly painted first and then, as someone else suggested, either traced in Illustrator with a simplified colour palette or filtered in PS/GIMP (with a Median filter, for example).
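For reference, a Median filter replaces each pixel with the median of its neighbourhood, which removes small specks while keeping hard colour edges, a good fit for the flat look described here. A minimal grayscale sketch in plain Python (`median_filter` is an illustrative helper, not the PS/GIMP implementation):

```python
def median_filter(img, radius=1):
    """Median filter on a 2D list of grayscale values.

    Each output pixel is the median of its (2*radius+1)^2 neighbourhood;
    at the borders only the in-bounds neighbours are used (upper median).
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = sorted(img[yy][xx]
                            for yy in range(max(0, y - radius), min(h, y + radius + 1))
                            for xx in range(max(0, x - radius), min(w, x + radius + 1)))
            out[y][x] = window[len(window) // 2]
    return out

# A hard edge plus a stray bright speck inside the dark area.
img = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
img[2][1] = 200
print(median_filter(img))
```

The speck disappears while the edge between 0 and 255 stays exactly where it was, instead of being blurred as a box or Gaussian blur would do.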

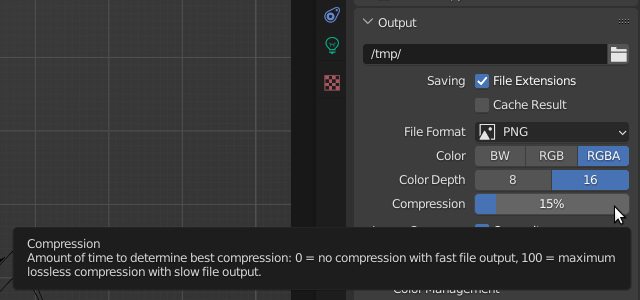

A few questions. First, does image compression affect rendering at all? I'm quite sure textures get decompressed when they are loaded into VRAM, so at the same resolution and bits per pixel, a PNG will occupy the same memory as an uncompressed BMP. Perhaps it matters at other stages of the render pipeline. Second, I've heard that Blender still treats lower-bit-depth textures as 8 bits per channel. I hope that's wrong, since lower bit depths would be a good way to save video memory.

That I can't answer. I can only say that 8-bit PNGs are not compressed, they just don't have many colors to begin with! But you could do an easy test: create a huge, simple PNG texture, save it in various formats and compare their performance.

As I said, this is a very primitive approach, but from the purely technical view of the image data itself, it would make a lot of sense.

What?? Where did this come from???

Just render the default scene and sample a color with the right mouse button held down… the colors are float values!!

You can even save in float (EXR)… only the output format determines the channel depth. For PNG, for example, there are 8 or 16 bits per channel, and RGB or RGBA… Of course, the result also depends on the color management (whether you follow the scene settings or choose them specifically).
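A sketch of what the saved bit depth does to a float render value, assuming a plain linear mapping of [0, 1] to integer codes and ignoring any color-management transform applied on save (`quantize` and `dequantize` are illustrative helpers, not Blender functions). EXR, by contrast, stores the float itself, so nothing is rounded or clipped:

```python
def quantize(value: float, bits: int) -> int:
    """Map a float to an integer code of the given bit depth, clipping to [0, 1]."""
    max_code = (1 << bits) - 1           # 255 for 8-bit, 65535 for 16-bit
    clipped = min(max(value, 0.0), 1.0)  # integer PNG codes cannot go outside [0, 1]
    return round(clipped * max_code)

def dequantize(code: int, bits: int) -> float:
    return code / ((1 << bits) - 1)

v = 0.123456  # an arbitrary float channel value, as seen with the color picker
for bits in (8, 16):
    stored = quantize(v, bits)
    print(bits, stored, abs(dequantize(stored, bits) - v))

print(quantize(1.7, 8))  # an over-bright value clips to the maximum code
```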

Hmm… I think I just switched it to 16-bit, and the 15% compression is the default…

I have no idea if 16 bit is per-pixel or per image channel in this context.

What I meant in my post is that if you give Blender an 8-bit-per-pixel texture (like a paletted one), it will still allocate the same memory as for a 24-bit texture (8 bits PER CHANNEL). So I'm questioning whether lowering the texture bit depth even does anything.

I'm talking in Photoshop terms. PS has no compression for PNGs or GIFs; this is done purely by reducing the number of colors, without altering the pixel layout. JPG, on the other hand, reduces color and introduces compression and artifacts of various kinds.

For PNG and GIF, you can only select the calculation model, color appearance, number of colors, dithering, etc., but no compression. No idea what Blender does here, but you can try the test I proposed earlier. Maybe Blender also converts PNGs internally; I don't know.

…but you wrote what I was quoting…

Technically, a “true color” (non-paletted) image uses: width × height × number of channels (3 for RGB, 4 for RGBA) × (bits per channel / 8) bytes.

A paletted image uses: width × height × (bits per pixel index / 8) bytes + the size of the palette.
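Plugging an 8K image into both formulas shows the difference, assuming 8-bit RGB for true color and, for the paletted version, 8-bit indices into a 256-entry palette of 3-byte RGB colors. This only applies if the image actually stays paletted in memory, which is exactly the open question for Blender:

```python
def truecolor_bytes(width: int, height: int, channels: int = 3,
                    bits_per_channel: int = 8) -> int:
    """width x height x channels x (bits per channel / 8)."""
    return width * height * channels * bits_per_channel // 8

def paletted_bytes(width: int, height: int, bits_per_index: int = 8,
                   palette_entries: int = 256, bytes_per_entry: int = 3) -> int:
    """width x height x (bits per index / 8) + palette size (entries x RGB bytes)."""
    return width * height * bits_per_index // 8 + palette_entries * bytes_per_entry

print(truecolor_bytes(8192, 8192))  # 201326592
print(paletted_bytes(8192, 8192))   # 67109632
```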

So for a low-poly, low-color game engine this would make sense either way… As for your question about using a paletted image format to lower memory usage:

I think for the physically based approaches in Cycles and Eevee it doesn't really make sense, and they probably just use true color…

@Corniger:

Oh… I think you're talking about lossless and lossy compression… AFAIK even JPG can be lossless if all the quantisation tricks (DCT) and thresholds are turned off, and PNG can also be made a bit lossy… but I'm not 100% sure… Both can also have different compression ratios for, say, a pure black image…

I don't think that's true. By its very design and standard, JPG is a lossy format, while PNG is lossless. Both use data compression to make the actual file size smaller than a purely uncompressed format.

That's not to say you can't have a pretty good-looking JPG image by turning its compression level down very low (limited compression, larger file size, more of the original data preserved), but there will still be data loss.

The only way a PNG image would lose data is if the source image has a larger bit depth than PNG supports, so the number/range of colours has to be reduced to actually save the file. Think of raw digital camera data saved as a PNG: even then, the resulting PNG file doesn't have JPG-style artifacts or compression loss.

Having said all that, I'm pretty sure that from Blender's point of view, for rendering and memory usage, it makes no difference. Blender/Cycles etc. will take the image, be it JPG, PNG, TIF or whatever, and decompress/convert it to the same bit depth for rendering. The file size on the hard drive, or whether it's a continuous-tone or flat-colour image, won't make a difference: it's going to use the same system/GPU memory either way.
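To illustrate that last point under a stated assumption: suppose the loader expands every 8-bit image, paletted or true colour, into 4 bytes per pixel (RGBA8). That is a simplification for illustration, not a description of Blender's actual image loader, and the helper names are hypothetical. Once expanded, the original storage format no longer matters:

```python
def rgb_to_rgba8(rgb: bytes) -> bytes:
    """Add an opaque alpha byte to every RGB pixel."""
    out = bytearray()
    for i in range(0, len(rgb), 3):
        out += rgb[i:i + 3] + b"\xff"
    return bytes(out)

def paletted_to_rgba8(indices: bytes, palette: list) -> bytes:
    """Look up every index in the palette and emit an opaque RGBA pixel."""
    out = bytearray()
    for i in indices:
        r, g, b = palette[i]
        out += bytes((r, g, b, 255))
    return bytes(out)

W, H = 4, 4
palette = [(0, 0, 0), (255, 200, 0)]                               # 2-colour palette
indices = bytes((x + y) % 2 for y in range(H) for x in range(W))   # 1 byte per pixel
rgb = b"".join(bytes(palette[i]) for i in indices)                 # same picture, 3 bytes per pixel

print(len(indices), len(rgb))
print(len(paletted_to_rgba8(indices, palette)), len(rgb_to_rgba8(rgb)))
```

Stored as 16 vs 48 bytes, both end up as the same 64-byte RGBA buffer.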