AutoFace is a collection of scripts that creates a rigged and textured character from a single photograph. The scripts are usable, but the results are far from perfect.

Documentation is found at http://diffeomorphic.blogspot.com/p/autoface.html.

A zip file can be downloaded from https://bitbucket.org/Diffeomorphic/autoface/downloads/.

AutoFace uses quite a large number of external libraries, e.g. OpenCV, dlib and TensorFlow. Since I haven’t managed to import these into Blender, the parts that use these libraries must be run as stand-alone Python scripts. TensorFlow I didn’t manage to install on my computer at all. Therefore, the deep neural network must be run on Google Colaboratory, where all dependencies are already installed.

At the core of AutoFace are a deep convolutional neural network model and Python code for robust estimation of the 3DMM face identity shape parameters, directly from an unconstrained face image and without the use of face landmark detectors. The method is described in

A. Tran, T. Hassner, I. Masi, G. Medioni, “Regressing Robust and Discriminative 3D Morphable Models with a very Deep Neural Network”, in CVPR, 2017. The preprint version is available as arXiv:1612.04904 [cs.CV].
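A 3D morphable model (3DMM) such as the BFM expresses a face shape as a mean shape plus a weighted sum of basis shapes, S = S̄ + Σ α_i W_i, and the network's job is to regress the coefficients α_i from the photo. The sketch below illustrates only that reconstruction step in plain Python. The function name `reconstruct_shape` and the two-vertex toy model are made up for illustration; they are not BFM data and not code from AutoFace or Expression-Net.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def reconstruct_shape(
    mean: Sequence[Vertex],
    basis: Sequence[Sequence[Vertex]],
    coeffs: Sequence[float],
) -> List[Vertex]:
    """Return mean + sum_i coeffs[i] * basis[i], computed vertex by vertex."""
    if len(basis) != len(coeffs):
        raise ValueError("need exactly one coefficient per basis shape")
    result = [list(v) for v in mean]
    for component, alpha in zip(basis, coeffs):
        if len(component) != len(mean):
            raise ValueError("basis shape has the wrong vertex count")
        # Add the scaled per-vertex offsets of this basis shape.
        for out, delta in zip(result, component):
            for axis in range(3):
                out[axis] += alpha * delta[axis]
    return [tuple(v) for v in result]


# Toy model: two vertices, two basis shapes (made-up numbers).
mean = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
basis = [
    [(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)],  # lifts both vertices along y
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],  # moves the second vertex along x
]
print(reconstruct_shape(mean, basis, [0.5, 2.0]))
# prints [(0.0, 0.5, 0.0), (3.0, 0.5, 0.0)]
```

Subtracting the mean from the result gives per-vertex offsets, which is one way to think of a morph of the base mesh.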

Code from this project has been generously shared on GitHub: https://github.com/fengju514/Expression-Net. That site also contains code for estimating expressions and face poses, but AutoFace does not yet make use of this data.

Whereas the Expression-Net script does the job of recreating the 3D face from a photograph, the output is not really useful for the practicing artist. The script outputs morphs for a modified Basel Face Model (BFM), which seems to be a favorite among AI researchers. The BFM is a high-density face mesh, but in practice we want a medium-density, full-body mesh that is rigged and textured.

The goal of AutoFace is to transfer the output from the BFM to useful meshes, in particular the Genesis 8 Male and Female characters used in DAZ Studio. After the morphs have been transferred, we can load the characters into DAZ Studio and add hair, clothes and body morphs. The dressed characters can then be used in DAZ Studio or exported to other applications. I personally use the DAZ Importer to import the characters into Blender, where they can be posed and rendered.
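To make the idea of transferring a morph from one mesh to another concrete, here is a generic sketch, not necessarily the method AutoFace uses: assuming both meshes have already been aligned in the same coordinate space, each target vertex simply receives the offset of its nearest source vertex. The function name `transfer_morph` is hypothetical, and the brute-force search is only suitable for toy data; a mesh with tens of thousands of vertices would call for a spatial index such as a k-d tree.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def _sq_dist(a: Vertex, b: Vertex) -> float:
    """Squared Euclidean distance between two points."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def transfer_morph(
    source_base: Sequence[Vertex],
    source_offsets: Sequence[Vertex],
    target_base: Sequence[Vertex],
) -> List[Vertex]:
    """Give each target vertex the morph offset of its nearest source vertex.

    Assumes the two base meshes are already aligned in the same space.
    Brute force, O(N * M): for illustration only.
    """
    if len(source_base) != len(source_offsets):
        raise ValueError("need exactly one offset per source vertex")
    target_offsets = []
    for t in target_base:
        nearest = min(range(len(source_base)),
                      key=lambda i: _sq_dist(source_base[i], t))
        target_offsets.append(tuple(source_offsets[nearest]))
    return target_offsets


# Toy data: a two-vertex "source face" and a three-vertex "target face".
source_base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
source_offsets = [(0.0, 0.0, 0.1), (0.0, 0.0, 0.5)]
target_base = [(0.1, 0.0, 0.0), (0.9, 0.0, 0.0), (0.6, 0.0, 0.0)]
print(transfer_morph(source_base, source_offsets, target_base))
```

Adding the returned offsets to `target_base` yields the morphed target mesh. Nearest-vertex copying is the simplest possible choice; it ignores differences in mesh density and topology, which is one reason a practical transfer needs more care than this.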

More information about how it works under the hood: http://diffeomorphic.blogspot.com/2019/03/autoface-how-it-works.html

The pictures below show some results for images of two beloved candidates of the 2016 US presidential election.

Input images, with landmarks detected by dlib.

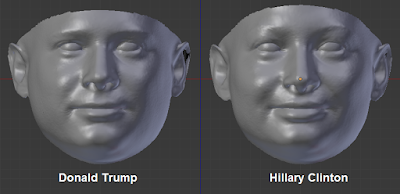

Basel Face Model

Genesis 8 Male and Female

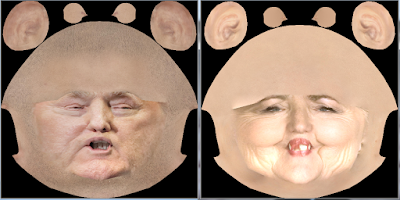

Textures

Clothes, hair and body morphs added in DAZ Studio.

Imported into Blender, posed and rendered.

As you can immediately tell from these images, the results are not perfect. The most obvious flaw is that the characters look far too young. A possible workaround is to add some aging morphs in DAZ Studio, although that is not a satisfactory solution.

For best results, we should start with a frontal photograph of a face with a neutral expression. The picture of Secretary Clinton shows why: the deep network removes the smile from the 3D mesh, but it still lingers in the texture, in particular in the artifacts around the cheeks.